
Some knowledge points about front-end performance optimization that you deserve to know (Part 1)

- 青灯夜游 (reposted)

- 2021-06-28 10:26:02

The purpose of front-end performance optimization is to make pages load faster and give users a better experience. So how do you optimize front-end performance, and what exactly should you do? This article introduces some knowledge points about front-end performance optimization.

Read the following three questions a few times:

- Has anyone asked you what front-end performance optimization is?
- Has an interviewer asked you what you have done for front-end performance optimization?
- Have you ever asked yourself how you would answer if someone asked you how to optimize front-end performance?

Do you have your own set of answers about performance optimization, one that lets you discuss it with technical experts and also makes novices nod in agreement?

First, let's discuss how a front-end project goes from conception to launch.

Take the entire Tmall homepage as an example. There are many possible answers; see whether one of them matches yours.

- create-react-app: initialize a project, write some homepage components such as tofu blocks (small grid tiles), Header, Bar and a long-scrolling ScrollView, then compile, bundle and deploy the project.
- webpack: set up the project by hand, and develop a set of webpack best practices whose goal is a bundled output as small as possible.
- Route-based on-demand loading: build a high-performance ScrollList with loadmore for seamless browsing. Because this is the homepage, first-screen loading is obviously involved, so add a skeleton screen.
- SSR: add server-side rendering. Because the DOM structure of the homepage is so complex, relying on the virtual DOM alone would be too slow by the time the GPU renders the UI.

This article will not go into detail on these points; they are mentioned here only to leave an impression.

Still, I always feel like something is missing. Look at the following layers:

System layer design

- A new system should devote 20% to satisfying the existing business and reserve the remaining 80% for system evolution. Think about it: the Tmall homepage keeps changing, yet its performance still meets the standard.

Business layer design

- Is the existing business coupled with other businesses? Is there front-end business, and if so, where do its permissions lie? Can the existing services stay compatible with new services as the system evolves?

Application layer design

- For example, micro front-ends, component libraries, and npm install.

Code layer design

- webpack optimization
- Skeleton screens
- Dynamic on-demand loading of routes
- How do you write high-performance React code? How do you make good use of Vue 3 time slicing?
- That's enough; these are basics, and you simply accumulate them over time.

By now you should have discovered what was missing: front-end performance optimization is not only about the application layer and the code layer; more importantly, it is about the system layer and the business layer. With these mental preparations in place, let's get to the main topic.

Performance optimization process

Try to complete the following process:

- Set performance indicators (FPS, page instant-open rate, error rate, number of requests, etc.).
- Confirm which indicators are required. How do you get your boss to understand your optimization plan, assuming the boss doesn't understand technology?
  - Tell the boss that the page white-screen time has been reduced by 0.4 s.
  - Tell the boss that the homepage opens instantly even on a weak network.
  - Tell the boss that the large number of HTTP requests used to put too much pressure on the server, and that now each page makes fewer than 5 resource requests to the server.
  - Tell the boss...
  - In short: programming for the boss's satisfaction. (Just kidding.)
- List the data for each indicator.
- Optimization methods
  - Hybrid app performance optimization
    - App startup stage optimization
    - Page white-screen stage optimization
    - First-screen rendering stage optimization
      - Lazy loading
      - Caching
      - Offline
        - Offline package design, to ensure the first load opens within seconds
      - Parallelization
      - Skeleton screens
        - Component skeleton screen
        - Image skeleton screen
      - NSR
  - Layer and code architecture level optimization
    - WebView performance optimization
      - Parallel initialization
      - Resource preloading
    - Data interface request optimization
    - Front-end architecture performance tuning
      - Long list performance optimization
      - Packaging optimization
- Performance project establishment: once you're sure, let's get started.
- Performance practice: be prepared to open pages instantly in all kinds of harsh environments!
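Indicators such as the instant-open rate need a concrete definition before they can be tracked. As a minimal sketch, assuming "instant-open rate" means the share of page loads whose first-screen time is at or below a threshold (1000 ms here, which is an assumption rather than a standard), it could be computed from collected timing samples like this. The function name and the sample format are hypothetical.

```javascript
// Hypothetical helper: computes the "instant-open rate" from a list of
// first-screen times in milliseconds. The 1000 ms default threshold is an assumption.
function instantOpenRate(firstScreenTimesMs, thresholdMs = 1000) {
  if (firstScreenTimesMs.length === 0) return 0; // no samples, no rate
  const fast = firstScreenTimesMs.filter((t) => t <= thresholdMs).length;
  return fast / firstScreenTimesMs.length;
}

// Example: 3 of 4 page loads reached the first screen within 1 s.
console.log(instantOpenRate([420, 880, 1000, 1600])); // 0.75
```

Reporting the indicator as a rate over many samples, rather than a single average, is what makes a statement like "the homepage opens instantly on a weak network" checkable.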

Summary

Now, do you have a complete picture of front-end performance optimization? Often, a discussion of performance optimization should start with how to "diagnose" performance. In most cases, though, you won't be asked how performance is monitored. (My voice is getting lower and lower...)

Now let's look at the optimization methods in detail.

Optimization methods for opening the first screen instantly

1. Lazy loading

This is one of the most common optimization methods.

Lazy loading means that while a long page is loading, key content is loaded first and non-key content is deferred. For example, when a page's content exceeds the browser's visible area, we can load the content in the visible area first, and load the rest on demand once it enters the visible area.
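The core decision in lazy loading is "which items are in, or about to enter, the visible area". A minimal sketch, assuming a vertical list of fixed-height items (a simplification; real feeds have variable heights, and browsers usually drive this with IntersectionObserver), is a pure function from scroll position to the index range that should be loaded. The name `visibleRange` and the `buffer` parameter are hypothetical.

```javascript
// Returns the inclusive [start, end] index range of list items that should be
// loaded for the current scroll position, or null for an empty list.
// Assumes every item has the same height. `buffer` extra items below the
// viewport are included so they start loading slightly before they appear.
function visibleRange(scrollTop, viewportHeight, itemHeight, itemCount, buffer = 1) {
  if (itemCount === 0) return null;
  const start = Math.min(itemCount - 1, Math.max(0, Math.floor(scrollTop / itemHeight)));
  const lastVisible = Math.floor((scrollTop + viewportHeight - 1) / itemHeight);
  const end = Math.min(itemCount - 1, lastVisible + buffer);
  return [start, end];
}

// Viewport 800px tall, items 200px tall, scrolled 450px down:
// items 2 to 6 are (at least partly) visible, plus 1 buffered item below.
console.log(visibleRange(450, 800, 200, 100)); // [ 2, 7 ]
```

Everything outside the returned range is non-key content and can wait until scrolling brings it closer.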

For example, take the Selection feed on the Tmall homepage, pictured above.

It happens to be the Tmall 618 shopping festival, and this is the Selection feed on the iOS version of the Tmall homepage. If you visit it often, you will find that it has almost achieved seamless browsing. Lazy loading is used very well here, although lazy loading is not the only thing behind this effect.

Looking only at lazy loading, what does the Tmall homepage Selection feed do? Here is my guess.

Lazy loading

- Lazy loading of images
  - Images are cached by Native.
  - An image is loaded only when it appears in the visible area.
- Card preloading (the timing of lazy loading changes)
  - A card is not loaded only once it enters the visible area. Instead, the next card is preloaded when the previous card enters the visible area. Because card UI loads much faster than images, this is one of the guarantees of seamless browsing.
- Animation lazy loading
  - If you scroll quickly, the List's scroll height changes greatly, and the data requests eventually cannot keep up with your high-speed swiping. In other words, you can't see the next card until new data arrives, so you have to wait. At that point a loading animation is displayed, and when the new data arrives, the new card appears and automatically slides fully into the visible area.
  - Some will say that the scroll damping on iOS is what makes the animation and scrolling smooth. My point is that the same effect can be achieved on Android.
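The card-preloading rule described above (start loading the next card as soon as the previous one becomes visible) can be sketched as a small function. This is an illustration under assumptions, not Tmall's actual implementation: cards are identified by index, and `createCardPreloader`, `onCardVisible` and `loadCard` are hypothetical names.

```javascript
// Sketch of "preload the next card when the previous card becomes visible".
// `loadCard` is a hypothetical loader callback; each card is requested at most once.
function createCardPreloader(cardCount, loadCard) {
  const requested = new Set();

  function request(index) {
    if (index < 0 || index >= cardCount || requested.has(index)) return;
    requested.add(index);
    loadCard(index);
  }

  // Call this from a visibility callback (for example an IntersectionObserver handler).
  function onCardVisible(index) {
    request(index);     // make sure the visible card itself is loaded
    request(index + 1); // preload the next card before it scrolls into view
  }

  return { onCardVisible, requested };
}

const loaded = [];
const preloader = createCardPreloader(5, (i) => loaded.push(i));
preloader.onCardVisible(0);
preloader.onCardVisible(1);
preloader.onCardVisible(1); // repeated notifications do not trigger new requests
console.log(loaded); // [ 0, 1, 2 ]
```

Shifting the trigger one card earlier is the whole trick: the network work for card n + 1 overlaps with the user looking at card n.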

2. Caching

If the essence of lazy loading is the ability to request non-critical content after the first screen, then caching is the ability to revisit content without repeated requests. In first-screen optimization, interface caching and static resource caching are the mainstays.

Back to the question raised under lazy loading: why do you only run into the "no new data yet" situation after swiping quickly for a while? Because the cached data has been used up, and from then on only the server can supply new data.

Interface cache

Inside the app, all requests go through Native to implement the interface cache. Why do it this way?

A page in the App can be presented in two ways: developed with Native, or developed with H5. If all requests go through Native, an interface that has already been requested does not need to be requested again. If H5 requests the data, it must wait until the WebView is initialized before sending the request (a serial request). With Native requests, data can start loading before the WebView is initialized (a parallel request), which effectively saves time.
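The difference between the serial H5 flow and the parallel Native flow can be shown with two async functions. In this sketch `initWebView` and `fetchData` are hypothetical stand-ins that simply wait 100 ms each; the only point is that starting the data request before WebView initialization finishes lets the two waits overlap.

```javascript
// Hypothetical stand-ins: each one resolves after a fixed delay.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const initWebView = () => sleep(100);
const fetchData = () => sleep(100).then(() => ({ items: [] }));

// H5 flow: the page's own script can only send the request after the WebView is ready.
async function serialLoad() {
  await initWebView();
  return fetchData();
}

// Native flow: the request starts immediately, in parallel with WebView initialization.
async function parallelLoad() {
  const [, data] = await Promise.all([initWebView(), fetchData()]);
  return data;
}

// Measures how long an async function takes, in milliseconds.
async function timed(fn) {
  const start = Date.now();
  await fn();
  return Date.now() - start;
}

(async () => {
  console.log('serial   ~', await timed(serialLoad), 'ms');   // roughly 200 ms
  console.log('parallel ~', await timed(parallelLoad), 'ms'); // roughly 100 ms
})();
```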

So how do you cache interfaces through Native? One way is SDK encapsulation: replace the original data request method with an Axios-like request method, encapsulating the post, get and request functions into the SDK.

Then, when the client initiates a request, the program calls the SDK.axios method. The WebView intercepts the request and checks whether the data is cached locally in the App. If it is, the interface cache is used. If not, the data interface is requested from the server first, and the returned data is stored in the App cache.
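The cache-first flow just described (check the App's local cache; otherwise request the server and store the result) can be sketched in JavaScript. Everything here is hypothetical: `createCachedRequest`, the in-memory `Map` standing in for the App cache, and the injected `fetchFromServer` function standing in for the Native network layer. A real SDK would also need expiry and invalidation, which are omitted.

```javascript
// Hypothetical sketch of an SDK request method backed by an interface cache.
// `cache` stands in for the App's local cache; `fetchFromServer` stands in for
// the Native network request. This sketch caches every method; whether POST
// responses may be cached is a policy decision a real SDK has to make.
function createCachedRequest(fetchFromServer, cache = new Map()) {
  return async function request(method, url, params = {}) {
    const key = `${method.toUpperCase()} ${url} ${JSON.stringify(params)}`;
    if (cache.has(key)) {
      return cache.get(key); // cache hit: no network request
    }
    const data = await fetchFromServer(method, url, params);
    cache.set(key, data); // cache miss: store the response for next time
    return data;
  };
}

// Usage with a fake server that counts how often it is called.
let serverCalls = 0;
const request = createCachedRequest(async (method, url) => {
  serverCalls += 1;
  return { url, list: [1, 2, 3] };
});

(async () => {
  await request('get', '/api/home');
  await request('GET', '/api/home'); // same key, served from the cache
  console.log(serverCalls); // 1
})();
```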

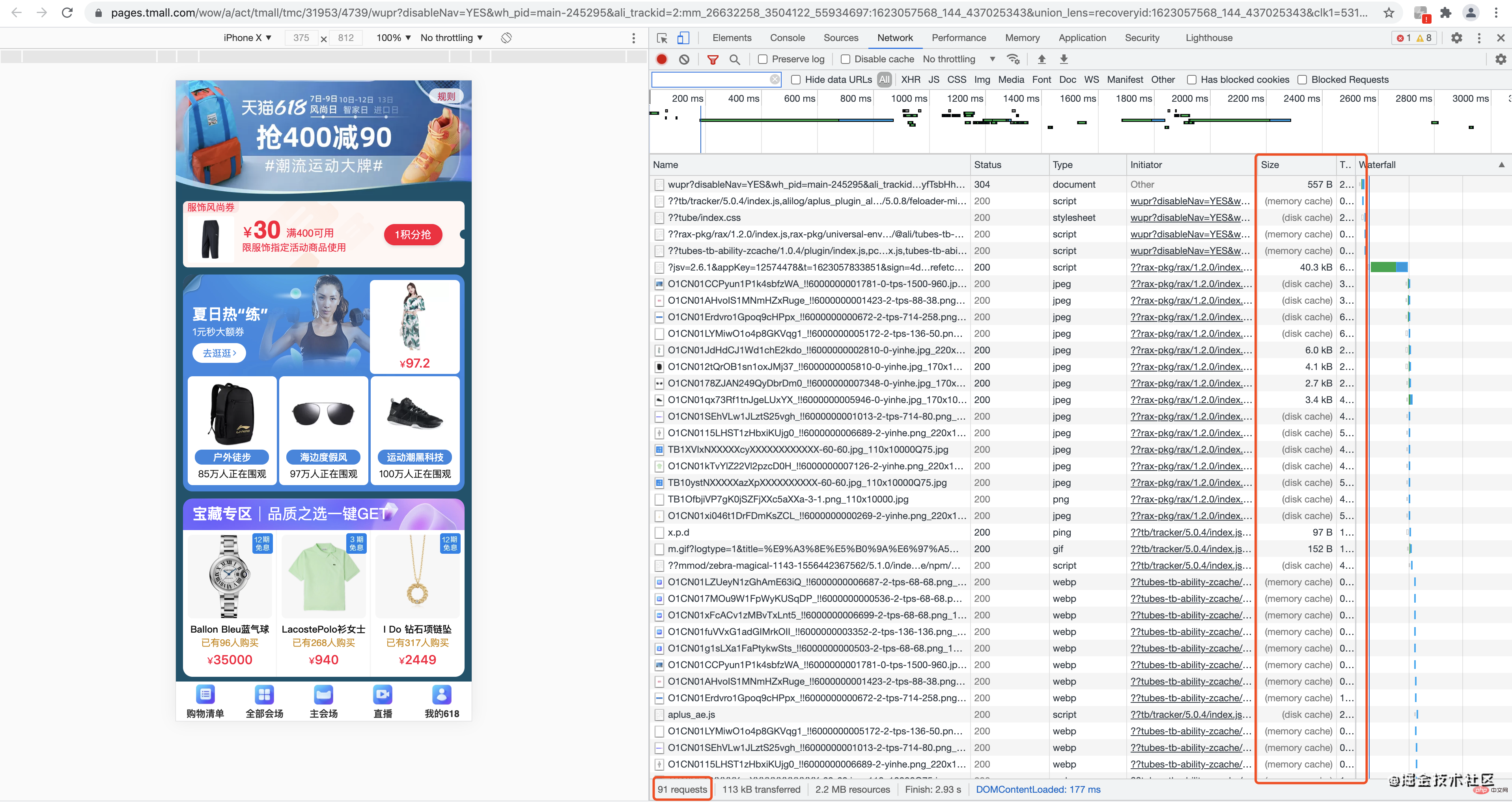

Static resource cache

Look at the screenshot first.

The DevTools Network panel summary reads: 91 requests, 113 kB transferred, 2.2 MB resources, Finish: 2.93 s, DOMContentLoaded: 177 ms. Despite 2.2 MB of resources, the page opens instantly. Look at the Size column and you should notice something.

That's right: the HTTP cache. Generally there are only a few data interface requests but a great many static resource requests (JS, CSS, images, fonts, etc.). On the Tmall homepage, for example, apart from a few scripts, all of the 91 requests are static resource requests.

So how do you design a static caching scheme? There are two cases: static resources that stay unchanged for a long time, and static resources that change frequently. Try refreshing the page a few times to see.

If a resource stays unchanged for a long time, say it barely changes within a year, we can use a strong cache through Cache-Control. Specifically, setting Cache-Control: max-age=31536000 (365 days expressed in seconds) lets the browser use the local cached file directly for a year instead of sending requests to the server.
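As a minimal sketch of the strong-cache setup, assuming a plain Node.js `http` server rather than whatever CDN or Nginx configuration a real site uses, the header can be set per path like this. The rule that everything under `/static/` rarely changes is a hypothetical example; other paths get `no-cache`, which makes the browser revalidate with the server before reusing its copy.

```javascript
const http = require('http');

const ONE_YEAR_SECONDS = 365 * 24 * 60 * 60; // 31536000

// Hypothetical rule: files under /static/ rarely change, so they get a strong cache.
function cacheHeadersFor(pathname) {
  if (pathname.startsWith('/static/')) {
    return { 'Cache-Control': `public, max-age=${ONE_YEAR_SECONDS}` };
  }
  // Everything else must be revalidated with the server before reuse.
  return { 'Cache-Control': 'no-cache' };
}

const server = http.createServer((req, res) => {
  const { pathname } = new URL(req.url, 'http://localhost');
  res.writeHead(200, { 'Content-Type': 'text/plain', ...cacheHeadersFor(pathname) });
  res.end(`content of ${pathname}\n`);
});

// server.listen(8080); // uncomment to try it locally
```

Within the max-age window the browser does not contact the server at all, which is why a one-year strong cache suits only resources that really stay unchanged.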

In the second case, where the resource may change at any time, negotiation caching can be implemented with ETag. On the first request for a resource, the server sets the ETag (for example, the MD5 hash of the resource) and returns status code 200. Subsequent requests carry the If-None-Match header to ask the server whether the cached version is still current. If the resource has not changed, the server returns status code 304, telling the client that it does not need to download the data again and can use its cached copy. WebView-specific issues are also involved here, which I won't go into for now...
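The ETag negotiation flow can be sketched with Node's built-in `crypto` module, using the MD5 of the content as the ETag as the text suggests. The `respond` function below is a hypothetical pure handler (request headers and body in, status and headers out) so that the logic is easy to follow; it ignores weak validators and comma-separated `If-None-Match` lists.

```javascript
const crypto = require('crypto');

// ETag = quoted MD5 hex digest of the resource content.
function etagOf(body) {
  return `"${crypto.createHash('md5').update(body).digest('hex')}"`;
}

// Decides the response for a request. `requestHeaders` uses lowercase names,
// as Node's http module provides them.
function respond(requestHeaders, body) {
  const etag = etagOf(body);
  if (requestHeaders['if-none-match'] === etag) {
    // Unchanged: tell the client to reuse its cached copy; no body is sent.
    return { status: 304, headers: { ETag: etag }, body: '' };
  }
  // First request, or the resource changed: send it with its current ETag.
  return { status: 200, headers: { ETag: etag }, body };
}

const first = respond({}, 'console.log("v1")');
const second = respond({ 'if-none-match': first.headers.ETag }, 'console.log("v1")');
const changed = respond({ 'if-none-match': first.headers.ETag }, 'console.log("v2")');
console.log(first.status, second.status, changed.status); // 200 304 200
```

Unlike the strong cache, every reuse still costs a round trip; the saving is that a 304 carries no body.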

3. Offline processing

Offline processing means turning resource data that changes online in real time into static local copies, and using those local files when the page is accessed.

The offline package is one such solution: it stores static resources locally in the App. I won't go into detail on it here.

Another, more complex offline solution is to make the page content itself static and local. This kind of offline rendering generally suits pages that don't require login, such as the homepage or list pages, and it can also support SEO.

So how do you implement it? The page is pre-rendered during the build, so when a front-end request lands on index.html, it already receives rendered content. Webpack's prerender-spa-plugin can be used for this pre-rendering.

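A webpack pre-rendering configuration looks like this:

```javascript
// webpack.conf.js
const path = require('path')
const PrerenderSpaPlugin = require('prerender-spa-plugin')

module.exports = {
  // ...
  plugins: [
    // prerender-spa-plugin 2.x signature: (outputDir, routes).
    // Version 3.x takes a single options object instead:
    // new PrerenderSpaPlugin({ staticDir: ..., routes: [...] })
    new PrerenderSpaPlugin(
      // Path where the compiled HTML is written
      path.join(__dirname, '../dist'),
      // Routes that should be pre-rendered
      [ '/', '/about', '/contact' ]
    )
  ]
}
```

In an interview, taking offline rendering this far often means walking into a minefield, but risk is proportional to reward, so it is worth the gamble. The follow-up question will be: do you have your own pre-rendering solution?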

4. Parallelization

Lazy loading, caching and offline processing all work on the request itself, doing everything possible to reduce or delay requests. Parallelization instead optimizes the request channel: it solves request blocking and thereby reduces first-screen time.

For example, the news reported that vaccination queues in Guangzhou were congested. Besides asking people to stagger their vaccination times, another fix is to add more doctors who can give vaccinations. Likewise, when dealing with request blocking, we can add more request channels, which is what HTTP 2.0 multiplexing does.

In the HTTP 1.1 era there were two performance bottlenecks: serial file transfer, and the limit on the number of connections per domain name (browsers typically allow 6). In HTTP 2.0, thanks to multiplexing, data is no longer transmitted as text (text must be transmitted in order, otherwise the receiver cannot know the order of the characters); instead it is transmitted as binary data frames and streams.

Here, the frame is the smallest unit of data transmission, and the stream is a virtual channel within the connection that can carry messages in both directions. Each stream has a unique integer ID, and every frame carries the ID of the stream it belongs to, so the receiver can reassemble each stream's data in order without mixing streams up. Therefore, with streams, only one connection per origin is needed no matter how many resources are requested.
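The role of the stream ID can be illustrated with a toy model. This is not real HTTP/2 framing (real frames have a 9-byte binary header containing length, type, flags and a 31-bit stream identifier); it only shows how interleaved frames from several streams on one connection can be demultiplexed by stream ID, with each stream's frames kept in arrival order. The function name `demultiplex` is hypothetical.

```javascript
// Toy model: each frame carries the ID of the stream it belongs to.
// Frames from different streams may be interleaved on the single connection,
// but frames of the same stream arrive in order (the TCP connection preserves order).
function demultiplex(frames) {
  const streams = new Map(); // streamId -> array of data chunks
  for (const { streamId, data } of frames) {
    if (!streams.has(streamId)) streams.set(streamId, []);
    streams.get(streamId).push(data);
  }
  // Join each stream's chunks back into a complete message.
  const messages = new Map();
  for (const [id, chunks] of streams) messages.set(id, chunks.join(''));
  return messages;
}

// Three responses (client-initiated streams use odd IDs) interleaved on one connection.
const frames = [
  { streamId: 1, data: '<html>' },
  { streamId: 3, data: 'body {' },
  { streamId: 5, data: 'console.' },
  { streamId: 1, data: '</html>' },
  { streamId: 3, data: ' margin: 0 }' },
  { streamId: 5, data: 'log(1)' },
];

for (const [id, text] of demultiplex(frames)) {
  console.log(`stream ${id}: ${text}`);
}
// stream 1: <html></html>
// stream 3: body { margin: 0 }
// stream 5: console.log(1)
```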

With the file transfer problem solved, what about the limit on connections per domain name? Take an Nginx server as an example: previously, because each domain name was limited to 6 connections, the maximum concurrency was 100 requests; after switching to HTTP 2.0, it can reach 600, a sixfold improvement.

You will surely ask: isn't this the operations team's job? What does front-end development need to do? We need to change two development practices: merging static files (JS, CSS, images), and sharding static resources across multiple domain names.

Specifically, with HTTP 2.0 multiplexing, a single file can go live independently, so there is no need to merge JS files. Here is a question to leave you with: have you used Alibaba's Antd component library? Each release does not update every component; one release might update only the Button component, another only the Card component. So how are individual components released separately?

To relieve static domain blocking (a performance bottleneck), the static domain is split into pic0 to pic5, which increases request parallelism.
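Domain sharding only helps if the same resource always maps to the same host; otherwise the browser treats it as a different URL and downloads it again. A minimal sketch, assuming six hypothetical hosts `pic0.example.com` to `pic5.example.com`, hashes the resource path to pick a shard deterministically. The djb2-style string hash is an illustrative choice; any stable hash would work.

```javascript
const SHARD_COUNT = 6; // pic0 ... pic5

// Simple deterministic 32-bit string hash (djb2 variant); any stable hash would do.
function hashPath(path) {
  let hash = 5381;
  for (let i = 0; i < path.length; i++) {
    hash = ((hash * 33) ^ path.charCodeAt(i)) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash;
}

// Maps a resource path to one of the sharded static domains.
// The domain names are hypothetical placeholders.
function shardUrl(path) {
  const shard = hashPath(path) % SHARD_COUNT;
  return `https://pic${shard}.example.com${path}`;
}

console.log(shardUrl('/img/banner.jpg'));
console.log(shardUrl('/img/banner.jpg') === shardUrl('/img/banner.jpg')); // true
```

Each extra hostname still costs a DNS lookup and its own connections, which is the overhead that HTTP 2.0 multiplexing makes unnecessary.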

Although domain sharding solves the problem, it makes DNS resolution take much longer and requires additional servers. HTTP 2.0 multiplexing solves this problem.


The above is the detailed content of Some knowledge points about front-end performance optimization that you deserve to know (Part 1). For more information, please follow other related articles on the PHP Chinese website!
