The difference between KNN and k-means: 1. The k-means algorithm is a typical distance-based clustering algorithm. It uses distance as the measure of similarity: the closer two objects are, the more similar they are. 2. The KNN algorithm has no explicit training phase: when the program starts running, the data set is loaded into memory and classification can begin right away.

The difference between KNN and k-means:

1. k-means clustering algorithm: process and principle

The k-means algorithm (k-means clustering algorithm) is a basic partitioning algorithm that requires the number of clusters to be known in advance. It is a typical distance-based clustering algorithm that uses distance as the similarity measure: the closer two objects are, the more similar they are considered to be. Distance is measured with the Euclidean distance (simply put, the straight-line distance between two points; the Euclidean distance formalizes this notion and extends it to N dimensions). The algorithm can handle large data sets efficiently. Its result divides the data set into k clusters. Depending on how the clustering result is expressed, it can be divided into the hard k-means (HCM) algorithm, the fuzzy k-means (FCM) algorithm, and the probabilistic k-means (PCM) algorithm.
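For two points x = (x_1, ..., x_N) and y = (y_1, ..., y_N) in N-dimensional space, the standard definition of the Euclidean distance mentioned above is:

$$
d(x, y) = \sqrt{\sum_{i=1}^{N} (x_i - y_i)^2}
$$

For example, in two dimensions the distance between (0, 0) and (3, 4) is sqrt(3^2 + 4^2) = sqrt(25) = 5.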

1.1 Basic idea

The algorithm starts from a given clustering objective function and updates the clusters iteratively. Each iteration moves in the direction that decreases the objective function, and the final clustering result makes the objective function reach a (possibly local) minimum, which yields a good partition of the data.
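The text does not write out the objective function. Assuming the usual k-means criterion (the sum of squared Euclidean distances from each point to its cluster center), with clusters C_1, ..., C_k and centers mu_1, ..., mu_k, the clustering criterion function f used in 1.2 can be written as:

$$
f = \sum_{j=1}^{k} \sum_{x \in C_j} \lVert x - \mu_j \rVert^2,
\qquad
\mu_j = \frac{1}{|C_j|} \sum_{x \in C_j} x
$$

Under this criterion, reassigning each point to its nearest center and then moving each center to the mean of its points can each only keep f the same or lower it, which is why every iteration moves in the direction of decreasing f.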

1.2 Principle

The original k-means algorithm first randomly selects k points as the initial cluster centers. It then calculates the distance between each data object and each cluster center and assigns each object to the cluster whose center is closest to it. Next, it recomputes the center of each adjusted cluster. If the cluster centers do not change between two consecutive iterations, the reassignment of data objects is complete and the clustering criterion function f has converged. In other words, each iteration checks whether every sample is assigned correctly and reassigns it if not. After all data objects have been reassigned, the cluster centers are updated and the next iteration begins. If, in some iteration, every data object is already correctly assigned, no reassignment occurs and the cluster centers do not change, which indicates that f has converged and the algorithm terminates.
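The procedure above can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: it assumes points are tuples of numbers, Euclidean distance, and random initial centers drawn from the data. The function names are hypothetical, and keeping the old center when a cluster becomes empty is an assumption the text does not address.

```python
import math
import random

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def mean(points):
    """Component-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))

def kmeans(data, k, max_iter=100, seed=None):
    """Return (centers, labels) for a basic k-means run on a list of tuples."""
    rng = random.Random(seed)
    # Randomly pick k distinct data points as the initial cluster centers.
    centers = [tuple(p) for p in rng.sample(data, k)]
    labels = []
    for _ in range(max_iter):
        # Assign each point to the cluster whose center is closest.
        labels = [min(range(k), key=lambda j: euclidean(p, centers[j])) for p in data]
        # Recompute each center as the mean of its assigned points;
        # an empty cluster keeps its previous center (an assumption).
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(data, labels) if lab == j]
            new_centers.append(mean(members) if members else centers[j])
        # Centers unchanged between two consecutive iterations: f has converged.
        if new_centers == centers:
            break
        centers = new_centers
    return centers, labels

data = [(1.0, 1.0), (1.5, 2.0), (1.2, 0.8), (8.0, 8.0), (8.5, 9.0), (9.0, 8.2)]
centers, labels = kmeans(data, k=2, seed=0)
print(centers, labels)
```

Fixing `seed` only makes the random initialization reproducible; other seeds can still lead to different, locally optimal results, which is the weakness discussed in 1.6.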

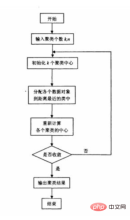

1.3 Algorithm flow chart

1.4 How to choose the initial cluster centers?

1) Select K points that are as far apart from each other as possible

First, randomly select a point as the first initial cluster center. Then select the point farthest from it as the second initial cluster center. Next, select the point whose distance to the nearer of the first two centers is largest as the third initial cluster center, and so on, until K initial cluster centers have been selected.

2) Run hierarchical clustering or the Canopy algorithm to obtain an initial clustering, then use the centers of the resulting clusters as the initial cluster centers of K-Means.
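Method 1) can be sketched as follows. This is a hypothetical helper, assuming Euclidean distance and points stored as tuples; method 2) is not shown. At each step it picks the point whose distance to its nearest already-chosen center is largest, exactly as described above.

```python
import math
import random

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def farthest_point_init(data, k, seed=None):
    """Choose k spread-out initial centers (hypothetical helper)."""
    rng = random.Random(seed)
    # First center: a random data point.
    centers = [rng.choice(data)]
    while len(centers) < k:
        # Next center: the point with the largest distance to its
        # nearest already-chosen center.
        next_center = max(data, key=lambda p: min(euclidean(p, c) for c in centers))
        centers.append(next_center)
    return centers

points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0)]
print(farthest_point_init(points, 2, seed=1))
```

Only the first center is random; every later choice is deterministic given the earlier ones. If k exceeds the number of distinct points, this sketch would start repeating points.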

1.5 How to choose k?

As long as the assumed number of clusters is equal to or greater than the true number of clusters, the cluster index (for example, the average diameter or radius of the clusters, defined below) rises only slowly as the number of clusters decreases; once we try to use fewer clusters than the true number, the index rises sharply. The cluster index therefore serves as an important reference for choosing k.

The diameter of a cluster refers to the maximum distance between any two points in the cluster.

The radius of a cluster refers to the maximum distance from all points in the cluster to the center of the cluster.
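The two definitions above translate directly into code. The sketch below assumes the cluster center is the mean of its points (as in k-means) and uses the average radius over all clusters as one possible cluster index; the function names are hypothetical. In practice one would compute this index for each candidate k and look for the point where it starts to rise sharply.

```python
import math
from itertools import combinations

def euclidean(a, b):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def centroid(cluster):
    """Mean point of a non-empty cluster (assumed to be the cluster center)."""
    n = len(cluster)
    return tuple(sum(coords) / n for coords in zip(*cluster))

def diameter(cluster):
    """Maximum distance between any two points in the cluster (0.0 for one point)."""
    return max((euclidean(a, b) for a, b in combinations(cluster, 2)), default=0.0)

def radius(cluster):
    """Maximum distance from any point in the cluster to the cluster center."""
    center = centroid(cluster)
    return max(euclidean(p, center) for p in cluster)

def average_radius(clusters):
    """Cluster index for one clustering: mean radius over all clusters."""
    return sum(radius(c) for c in clusters) / len(clusters)

group_a = [(0.0, 0.0), (0.0, 1.0)]
group_b = [(10.0, 0.0), (10.0, 1.0)]
print(average_radius([group_a, group_b]))  # 0.5 with the true number of clusters (2)
print(average_radius([group_a + group_b]))  # about 5.02 with too few clusters (1)
```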

1.6 Advantages, disadvantages and how to improve?

Advantage: it is simple to use. Disadvantages: because it relies on a random element (the randomly chosen initial centers), it cannot guarantee finding the best clustering; in addition, the number of clusters must be initialized sensibly, that is, K has to be specified in advance.

2. K-nearest neighbor classification algorithm (KNN)

2.1 Introducing the problem and the idea

KNN: In the picture above, the data set is already labeled: one class is the blue squares, the other is the red triangles, and the green circle is the data point to be classified. If K=3, the 3 points closest to the green point are 2 red triangles and 1 blue square; these 3 points vote, so the green point is classified as a red triangle. If K=5, the 5 closest points are 2 red triangles and 3 blue squares; these 5 points vote, so the green point is classified as a blue square. In other words, if most of a sample's k nearest neighbors in the feature space belong to a certain category, the sample is assigned to that category as well. KNN is thus essentially a statistical method; in fact, many machine learning algorithms are based on statistics of the data.
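To make the vote concrete, here is a small sketch with hypothetical coordinates chosen so that the distances reproduce the situation described (2 red triangles and 1 blue square among the 3 nearest points, 2 red triangles and 3 blue squares among the 5 nearest). The coordinates and the `knn_vote` helper are illustrative assumptions, not taken from the picture.

```python
import math
from collections import Counter

def knn_vote(train, query, k):
    """Return the majority label among the k training points nearest to query."""
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

green = (0.0, 0.0)  # the point to be classified
train = [
    ((1.0, 0.0), "red triangle"),   # distance 1.0
    ((0.0, 1.2), "red triangle"),   # distance 1.2
    ((-1.5, 0.0), "blue square"),   # distance 1.5
    ((0.0, -2.0), "blue square"),   # distance 2.0
    ((2.2, 0.0), "blue square"),    # distance 2.2
]
print(knn_vote(train, green, 3))  # red triangle
print(knn_vote(train, green, 5))  # blue square
```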

2.2 KNN algorithm

Introduction

KNN stands for K-Nearest Neighbor. It is a form of memory-based learning, also called instance-based learning, and belongs to lazy learning: it has no explicit training phase. When the program starts running, the data set is loaded into memory and classification can begin without any training. KNN is also a supervised learning algorithm. It computes the distance between the feature values of a new sample and those of the training data, then selects the K (K>=1) nearest neighbors for classification (by majority vote) or regression. If K=1, the new sample is simply assigned to the class of its nearest neighbor.
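The text says the K neighbors can also be used for regression without saying how; a common choice, assumed here, is to predict the mean target value of the K nearest neighbors. A minimal sketch (the `knn_regress` name and the list-of-pairs data layout are hypothetical):

```python
import math

def knn_regress(train, query, k):
    """Predict a numeric value as the mean target of the k nearest training points.

    train: list of (feature_tuple, target_value) pairs.
    """
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    # No fitting step: the stored training list is the whole "model".
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return sum(target for _, target in nearest) / k

train = [((0.0,), 1.0), ((1.0,), 2.0), ((2.0,), 3.0), ((10.0,), 100.0)]
print(knn_regress(train, (1.0,), 3))  # 2.0, the mean of 2.0, 1.0 and 3.0
```

The absence of a fit step is what lazy learning means in practice: all the work happens at query time.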

Steps

1) Calculate the distance between the test sample and each training sample, for example with the Euclidean distance;

2) Sort the training samples by distance in ascending order;

3) Select the K points with the smallest distances (K is chosen by the user);

4) Count how often each category occurs among these K points;

5) Return the category that occurs most frequently among these K points as the predicted class of the test sample.
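The five steps map one-to-one onto the sketch below. It assumes Euclidean distance and training data stored as (feature_tuple, label) pairs; `knn_classify` is a hypothetical name, and the tie-breaking rule is a choice of this sketch, not something the text specifies.

```python
import math
from collections import Counter

def knn_classify(train, query, k):
    """Classify query by majority vote among its k nearest training samples."""
    # 1) Distance from the test sample to every training sample.
    distances = [(math.dist(features, query), label) for features, label in train]
    # 2) Sort by increasing distance (key on distance only; labels are never compared).
    distances.sort(key=lambda pair: pair[0])
    # 3) Keep the k closest samples.
    top_k = distances[:k]
    # 4) Count how often each label occurs among them.
    counts = Counter(label for _, label in top_k)
    # 5) Return the most frequent label; on a tie, Counter.most_common
    #    favors the label encountered first, i.e. the one with the closer neighbor.
    return counts.most_common(1)[0][0]

train = [
    ((1.0, 1.0), "A"), ((1.2, 0.9), "A"),
    ((5.0, 5.0), "B"), ((5.1, 4.9), "B"), ((4.8, 5.2), "B"),
]
print(knn_classify(train, (1.1, 1.0), 3))  # A
print(knn_classify(train, (5.0, 5.0), 3))  # B
```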

Features

Non-parametric statistical method: no model parameters need to be introduced.

Choice of K: when K = 1, the sample to be classified is assigned to the class of the sample closest to it. When K equals the total number of training samples, the prediction depends only on the class frequencies in the training set, so every sample is assigned to the most common class. K therefore needs to be chosen sensibly: if it is too small, the result is easily disturbed by noise; if it is too large, the computational cost increases.

Complexity of the algorithm: the curse of dimensionality. As the number of dimensions increases, the number of training samples required increases sharply, so dimensionality reduction is generally applied.

2.3 Advantages and Disadvantages of the Algorithm

Advantages: Simple and effective

Disadvantages: heavy computation; the output is not very interpretable; all training samples must be stored.

3. The difference between KNN and k-means

The above is the detailed content of What is the difference between knn and k-means. For more information, please follow other related articles on the PHP Chinese website!
