
Why is nginx so fast?

- 王林 (reposted)

- 2020-10-20 17:25:17

First of all, Nginx uses a multi-process model in which each process is single-threaded, combined with I/O multiplexing. Thanks to I/O multiplexing, Nginx works as a concurrent, event-driven server.


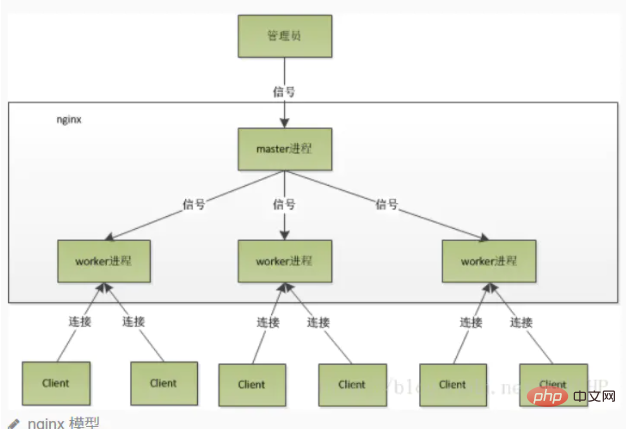

## Multi-process working mode

After Nginx starts, there is one master process and several independent worker processes. The master receives signals from the outside world and sends signals to each worker process, and any worker may end up handling a given connection. The master also monitors the running state of the workers: when a worker exits abnormally, the master automatically starts a new one. The number of worker processes is usually set to the number of CPU cores on the machine, because additional workers would only compete with each other for the CPU and cause unnecessary context switches. The multi-process mode not only improves concurrency but also keeps the processes independent of each other: if one worker process crashes, the other workers are unaffected.

## Thundering herd phenomenon

The master process first creates the listening socket with socket() (followed by bind() and listen()) and then fork()s the child processes (the workers). Each child inherits the parent's listening socket descriptor (sockfd), calls accept() on it to obtain a connected descriptor, and then communicates with the client through that connected descriptor. Because every child inherited the same sockfd, when a new connection arrives all of them may be notified and "race" to accept it. This is called the "thundering herd" phenomenon. Many processes are woken up only to go back to sleep, since only one of them can accept() the connection, and this wastes system resources.

## Nginx's handling of the thundering herd phenomenon

Nginx provides accept_mutex, a shared lock placed around accept(). Each worker process must acquire the lock before calling accept(); if it cannot acquire it, it skips accept() for the time being. With this lock, only one process calls accept() at any given moment, so the thundering herd problem does not occur. accept_mutex is configurable and can be turned off explicitly. It was on by default before nginx 1.11.3; since that version the default is off.

## Detailed explanation of Nginx processes

After Nginx starts, there is one master process and multiple worker processes.

### master process

The master process is mainly used to manage the worker processes: it receives signals from the outside world, sends signals to each worker process, and monitors the workers' running state, automatically starting a new worker whenever one exits abnormally. The master acts as the interface between the whole process group and the user while supervising the processes. It does not handle network events and does not execute business logic; it only manages the worker processes in order to implement features such as service restart, smooth upgrade, log file rotation, and applying configuration changes on the fly.

To control nginx, we only need to send a signal to the master process with kill. For example, kill -HUP pid tells nginx to restart gracefully; we generally use this signal to restart nginx or reload its configuration, and because the restart is graceful, service is not interrupted. What does the master process do after receiving HUP? First, it reloads the configuration file; then it starts new worker processes and signals all the old workers that they can retire. As soon as the new workers are up, they start accepting new requests. After receiving the master's signal, the old workers stop accepting new requests, finish every request they are still processing, and then exit.

Sending signals directly to the master process is, of course, the older way of operating nginx. Since version 0.8, nginx has offered a set of command-line parameters that make management easier. For example, ./nginx -s reload reloads the configuration and ./nginx -s stop stops nginx. How does this work? Let's take reload as an example.
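The signal-driven reload just described can be modeled in a few lines of Python. This is a toy sketch under stated assumptions, not nginx code: `Master`, `send_control_signal`, and the three hooks `load_config`, `start_workers`, and `retire_workers` are hypothetical names. Only the action-to-signal table follows nginx's documented `-s` actions (stop, quit, reopen, reload), and the sender reads the master's PID from a pid file, whose location nginx takes from its `pid` directive. The handler itself only records the request and the master's loop does the work, which is the usual pattern for signal handlers.

```python
import os
import signal

# Signals behind `nginx -s <action>`, as listed in nginx's documentation.
ACTIONS = {
    "stop": signal.SIGTERM,    # fast shutdown
    "quit": signal.SIGQUIT,    # graceful shutdown
    "reopen": signal.SIGUSR1,  # reopen log files
    "reload": signal.SIGHUP,   # reload configuration, replace workers gracefully
}


def send_control_signal(action, pid_file):
    """Sender side: read the master's PID from the pid file and signal it."""
    with open(pid_file) as f:
        master_pid = int(f.read().strip())
    os.kill(master_pid, ACTIONS[action])


class Master:
    """Receiver side: a toy master that reacts to SIGHUP (hypothetical hooks)."""

    def __init__(self, load_config, start_workers, retire_workers):
        self.load_config = load_config
        self.start_workers = start_workers
        self.retire_workers = retire_workers
        self.config = load_config()
        self.workers = start_workers(self.config)
        self.reload_requested = False
        signal.signal(signal.SIGHUP, self._on_hup)

    def _on_hup(self, signum, frame):
        # Keep the handler tiny: just record the request for the main loop.
        self.reload_requested = True

    def run_once(self):
        """One pass of the master's loop; performs a pending graceful reload."""
        if not self.reload_requested:
            return False
        self.reload_requested = False
        new_config = self.load_config()               # 1. re-read the configuration
        new_workers = self.start_workers(new_config)  # 2. new workers take new requests
        self.retire_workers(self.workers)             # 3. old workers finish, then exit
        self.config, self.workers = new_config, new_workers
        return True
```

Calling `send_control_signal("reload", pid_file)` and then `master.run_once()` swaps in workers built from the freshly loaded configuration and hands the old ones to `retire_workers`.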
When we run this command, a new nginx process is started. After parsing the reload parameter, it knows that our goal is to make nginx reload its configuration file, so it sends a signal to the master process. From there on, everything proceeds exactly as if we had sent the signal to the master process directly.

### worker process

Basic network events are handled in the worker processes. The workers are peers: they compete equally for client requests and are independent of each other. A request is processed in exactly one worker process, and a worker cannot handle a request that belongs to another process. The number of worker processes is configurable, and we generally set it to the number of CPU cores on the machine; the reason is closely tied to nginx's process model and event-handling model.
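This competition between peer workers, and the herd effect described earlier, can be observed with a small fork-based experiment in Python (POSIX only, because it uses `os.fork()`). It is an illustration under assumptions, not nginx code: `race_for_one_connection` is a hypothetical name, each worker blocks in `accept()` with a timeout on the listening socket it inherited from the parent, and a single client connects once. It cannot show the wasted wake-ups themselves, only that several waiting workers compete and exactly one of them gets the connection.

```python
import os
import socket


def race_for_one_connection(num_workers=3, wait=1.0):
    """Fork workers that all wait in accept() on one inherited listening socket.

    Returns how many workers obtained the single client connection.
    """
    # Master: create the listening socket *before* forking.
    listener = socket.create_server(("127.0.0.1", 0))  # port 0 = any free port

    pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Worker: uses the listening socket inherited from the master.
            listener.settimeout(wait)
            code = 1  # assume this worker loses the race and accept() times out
            try:
                conn, _addr = listener.accept()
                conn.close()
                code = 0
            finally:
                os._exit(code)  # never fall back into the master's code
        pids.append(pid)

    # A single client connects while every worker is waiting for it.
    socket.create_connection(listener.getsockname()).close()

    winners = 0
    for pid in pids:
        _pid, status = os.waitpid(pid, 0)
        if os.WIFEXITED(status) and os.WEXITSTATUS(status) == 0:
            winners += 1
    listener.close()
    return winners
```

Whatever the number of workers, the result should be 1: the losers simply wait until their timeout expires.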

Worker processes are equal, and each has the same chance of handling a request. When we serve HTTP on port 80 and a connection request arrives, any of the workers may handle it. How does this work? Every worker process is forked from the master process. The master first creates the socket to listen on (listenfd) and then forks the workers, so all workers share the same listenfd, and it becomes readable in all of them when a new connection arrives. To ensure that only one process handles the connection, every worker tries to grab accept_mutex before registering the read event on listenfd. Only the process that wins the mutex registers that read event, and it calls accept() in the event handler to accept the connection. From then on, that single worker processes the request from start to finish.
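One pass of this "grab the mutex, then watch listenfd" logic can be sketched in Python. Assumptions: `AcceptMutex`, `make_listener`, `worker_iteration`, and the lock-file path are hypothetical names for this sketch; the mutex is an `fcntl.flock()` file lock standing in for accept_mutex (according to nginx's `lock_file` documentation, most systems use atomic operations instead and only the remaining ones use a lock file); and the listening socket is registered and unregistered on every pass, which simplifies what nginx actually does.

```python
import fcntl
import os
import selectors
import socket
import tempfile

# Hypothetical lock-file location for this sketch (not an nginx path).
LOCK_PATH = os.path.join(tempfile.gettempdir(), "accept_mutex_sketch.lock")


class AcceptMutex:
    """Non-blocking try-lock shared by workers, built on flock()."""

    def __init__(self, path=LOCK_PATH):
        # Each worker opens the file itself, so every worker gets its own open
        # file description and their flock() calls contend with each other.
        self.fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)

    def try_lock(self):
        try:
            fcntl.flock(self.fd, fcntl.LOCK_EX | fcntl.LOCK_NB)
            return True
        except BlockingIOError:
            return False  # another worker holds the mutex

    def unlock(self):
        fcntl.flock(self.fd, fcntl.LOCK_UN)

    def close(self):
        os.close(self.fd)  # closing the descriptor also drops a lock it holds


def make_listener(host="127.0.0.1", port=0):
    """Master side: open the listening socket once, before forking workers."""
    listener = socket.create_server((host, port))
    listener.setblocking(False)  # a worker must never block inside accept()
    return listener


def worker_iteration(sel, listener, mutex, timeout=0.5):
    """One pass of a worker's event loop; returns newly accepted connections."""
    holds_mutex = mutex.try_lock()
    if holds_mutex:
        # Only the mutex holder registers the read event on the listening socket.
        sel.register(listener, selectors.EVENT_READ)
    accepted = []
    try:
        for key, _mask in sel.select(timeout):
            if key.fileobj is listener:
                # The read event on listenfd: a connection is waiting.
                conn, _addr = listener.accept()
                conn.setblocking(False)
                accepted.append(conn)
            # Events on other registered sockets would be dispatched here.
    finally:
        if holds_mutex:
            sel.unregister(listener)
            mutex.unlock()
    return accepted
```

A worker that loses the try-lock still runs `select()` for its own connections; it just does not watch listenfd during that pass.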

### worker process workflow

Once a worker process has accept()ed a connection, it reads the request, parses it, processes it, and generates the response data, then returns the response to the client and finally closes the connection. That is one complete request. A request is handled entirely by the worker process that accepted it, and never by more than one worker.
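The read, parse, process, respond, close sequence can be sketched as a single Python function. It is a deliberately tiny, hypothetical handler (`handle_connection` is not an nginx name) that understands only the HTTP request line and echoes it back, and it uses blocking calls for readability, whereas a real worker performs each step when the corresponding event fires.

```python
import socket


def handle_connection(conn: socket.socket):
    """Serve exactly one request on an accepted connection, then close it.

    Returns the requested path, or None if the client disconnected early.
    """
    with conn:  # leaving this block closes the connection (the last step)
        # 1. Read the request until the blank line that ends the headers.
        data = b""
        while b"\r\n\r\n" not in data:
            chunk = conn.recv(4096)
            if not chunk:
                return None
            data += chunk
        # 2. Parse the request line, e.g. "GET /index.html HTTP/1.1".
        request_line = data.split(b"\r\n", 1)[0].decode("latin-1")
        method, path, _version = request_line.split(" ", 2)
        # 3. Process the request and generate the response body
        #    (here: simply echo the method and path back).
        body = f"{method} {path}\n".encode()
        # 4. Return the data to the client.
        header = (
            "HTTP/1.1 200 OK\r\n"
            "Content-Type: text/plain\r\n"
            f"Content-Length: {len(body)}\r\n"
            "Connection: close\r\n\r\n"
        )
        conn.sendall(header.encode("latin-1") + body)
        return path
```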

Benefits of doing so:

- No lock overhead. Each worker is an independent process that shares no resources with the others (apart from accept_mutex), so handling requests requires no locking. This also makes programming and troubleshooting much easier.
- Lower risk through isolation. Independent processes cannot affect one another: if one process exits, the others keep working and service is not interrupted, while the master quickly starts a new worker process. Worker processes can, of course, still exit unexpectedly.
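The master's supervision role can be sketched with `os.fork()` and `os.wait()` (POSIX only). This is an illustration, not nginx's implementation: `run_master` and the `worker_main(slot, attempt)` callable are hypothetical, and a worker "crashes" simply by returning a non-zero exit status. In nginx itself the worker count comes from the `worker_processes` directive, where `auto` means one worker per CPU core.

```python
import os


def run_master(worker_main, num_workers=None, max_restarts=3):
    """Fork workers and replace any that exit abnormally.

    worker_main(slot, attempt) runs inside the child and returns an integer
    exit status (0 means a normal exit). Returns how many replacement
    workers were started.
    """
    # One worker per CPU core is the usual choice.
    if num_workers is None:
        num_workers = os.cpu_count() or 1

    def spawn(slot, attempt):
        pid = os.fork()
        if pid == 0:  # child: run the worker, then exit without returning
            try:
                code = int(worker_main(slot, attempt))
            except BaseException:
                code = 1  # any exception counts as an abnormal exit
            os._exit(code)
        return pid

    attempts = [0] * num_workers
    children = {spawn(slot, 0): slot for slot in range(num_workers)}
    restarts = 0
    while children:
        pid, status = os.wait()  # sleep until any child exits
        slot = children.pop(pid, None)
        if slot is None:
            continue  # not one of our workers
        crashed = not (os.WIFEXITED(status) and os.WEXITSTATUS(status) == 0)
        if crashed and restarts < max_restarts:
            # One worker died while the others keep running: start a replacement.
            restarts += 1
            attempts[slot] += 1
            children[spawn(slot, attempts[slot])] = slot
    return restarts
```

For example, a worker function that fails only on its first attempt in slot 0 leads to exactly one restart, and the other workers are never touched.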

In a plain multi-process model without multiplexing, each process (or thread) can handle only one I/O stream at a time. So how does each Nginx worker handle many I/O streams at once?

Without I/O multiplexing, a process can handle only one request at a time. For example, when it calls accept() and no connection is pending, the process blocks there and cannot continue until a connection arrives.
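The blocking problem can be seen by switching the listening socket to non-blocking mode: `accept()` then reports "nothing yet" immediately instead of putting the process to sleep. A minimal sketch, assuming a hypothetical helper `try_accept`; note that non-blocking mode alone only turns waiting into polling, which is why a multiplexing mechanism is still needed.

```python
import socket


def try_accept(listener: socket.socket):
    """Accept a pending connection without blocking; return None if there is none.

    With a blocking socket, accept() would instead put the process to sleep
    until a client connects, and nothing else could run in the meantime.
    """
    listener.setblocking(False)
    try:
        conn, _addr = listener.accept()
    except BlockingIOError:
        return None  # EAGAIN/EWOULDBLOCK: no connection is waiting yet
    return conn
```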

Multiplexing, by contrast, lets a single process wait on many descriptors at once: the kernel returns control to the program only when an event occurs on one of them, and the rest of the time it keeps the process suspended.
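A single process watching several channels can be sketched with Python's `selectors` module, whose `DefaultSelector` is backed by epoll on Linux. The function name `demo_multiplexing` is hypothetical, and socket pairs stand in for client connections. While nothing happens, `select()` keeps the process asleep until the timeout; when data arrives on one channel, it wakes up and reports only that channel.

```python
import selectors
import socket


def demo_multiplexing(num_channels=3, busy_channel=1):
    """Watch several connections from one process; return (idle_count, ready_ids)."""
    sel = selectors.DefaultSelector()  # EpollSelector on Linux
    pairs = [socket.socketpair() for _ in range(num_channels)]
    for channel_id, (ours, _peer) in enumerate(pairs):
        sel.register(ours, selectors.EVENT_READ, data=channel_id)

    # No events yet: select() keeps the process asleep until the timeout expires.
    idle = sel.select(timeout=0.1)

    # Data arrives on one channel only; select() wakes up and reports just that one.
    pairs[busy_channel][1].sendall(b"ping")
    ready = [key.data for key, _mask in sel.select(timeout=1.0)]

    sel.close()
    for ours, peer in pairs:
        ours.close()
        peer.close()
    return len(idle), ready
```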

## Core: epoll, the I/O multiplexing model used by Nginx (on Linux)

For example, Nginx registers an event meaning "notify me when a connection request from a new client arrives"; only when such a request actually arrives does the server call accept() to take it. Likewise, when a worker forwards a request to an upstream server (such as PHP-FPM) and waits for the response, it does not block. After sending the request, it registers an event meaning "tell me when data arrives in the buffer, and I will read it", so the worker is free to handle other events until that one occurs.
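Both registrations from this example can be sketched with a minimal callback-based event loop in Python. Everything here is hypothetical (`EventLoop`, `watch_listener`, `forward_to_upstream`), not nginx's event module; `selectors.DefaultSelector` uses epoll on Linux, a socket pair stands in for the upstream connection, and for brevity the request is written with a plain `sendall()`.

```python
import selectors
import socket


class EventLoop:
    """Minimal callback-based event loop (a sketch, not nginx's event module)."""

    def __init__(self):
        self.sel = selectors.DefaultSelector()  # an EpollSelector on Linux

    def on_readable(self, sock: socket.socket, callback):
        # Register the event "when this socket becomes readable, notify me".
        self.sel.register(sock, selectors.EVENT_READ, callback)

    def forget(self, sock: socket.socket):
        self.sel.unregister(sock)

    def run_once(self, timeout=None):
        # The process sleeps in the kernel until a registered event fires,
        # then runs the callback that was registered for it.
        for key, _mask in self.sel.select(timeout):
            key.data(key.fileobj)


def watch_listener(loop: EventLoop, listener: socket.socket, accepted: list):
    """'If a new client connection arrives, notify me'; only then call accept()."""

    def on_new_connection(sock):
        conn, _addr = sock.accept()
        conn.setblocking(False)
        accepted.append(conn)

    loop.on_readable(listener, on_new_connection)


def forward_to_upstream(loop: EventLoop, upstream: socket.socket,
                        request: bytes, responses: list):
    """Send a request to a backend and return immediately instead of blocking."""
    upstream.sendall(request)

    def on_response(sock):
        # Called only once the backend's reply is sitting in the receive buffer.
        responses.append(sock.recv(4096))
        loop.forget(sock)

    loop.on_readable(upstream, on_response)
```

Between `forward_to_upstream()` and the backend's reply, `run_once()` is free to serve any other registered socket; that is the idle time the worker would otherwise spend blocked.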

The above is the detailed content of "Why is nginx so fast?". For more information, please see other related articles on the PHP Chinese website.