Home >Backend Development >Golang >About Golang coroutine scheduling

About Golang coroutine scheduling

- 藏色散人forward

- 2020-09-01 14:50:022813browse

The following column will introduce Golang coroutine scheduling to you in the golang tutorial column. I hope it will be helpful to friends in need!

1. Thread model

- N:1 model, N user space threads run on 1 kernel space thread. The advantage is that context switching is very fast but it cannot take advantage of multi-core systems.

- 1:1 model, 1 kernel space thread runs 1 user space thread. This takes full advantage of the advantages of multi-core systems, but context switching is very slow, because every schedule switches between user mode and kernel mode. (POSIX thread model (pthread), Java)

- M:N model, each user thread corresponds to multiple kernel space threads, and one kernel space thread can also correspond to multiple user space threads. Go intends to adopt this model and use any number of kernel models to manage any number of goroutines. This combines the advantages of the above two models, but the disadvantage is the complexity of scheduling.

Let’s take a look at golang’s coroutine scheduling

- M: A user space thread corresponds to a kernel thread, similar to posix pthread

- P: Represents the running context, which is the scheduler we implemented in the previous section. A scheduler will also correspond to a ready queue

- G: goroutine, that is, coroutine

2. Introduction to the scheduling model

Groutine can have a powerful concurrency implementation through the GPM scheduling model. The following explains the goroutine scheduling model.

Go's scheduler has three important structures inside: M, P, G

M: M is an encapsulation of kernel-level threads, and the number corresponds to the real number of CPUs , an M is a thread, and goroutine runs on M; M is a large structure that maintains a lot of information such as small object memory cache (mcache), currently executing goroutine, random number generator, etc.

G: Represents a goroutine, which has its own stack, instruction pointer and other information (waiting channels, etc.) for scheduling.

P: The full name of P is Processor. Its main purpose is to execute goroutine. Each Processor object has an LRQ (Local Run Queue). Unallocated Goroutine objects are stored in the GRQ (Global Run Queue), waiting to be allocated to a certain P in the LRQ. Each LRQ contains several user-created Goroutines. object.

Golang uses a multi-threading model. In more detail, it is a two-level threading model, but it encapsulates system threads (kernel-level threads) and exposes a lightweight coroutine goroutine. (User-level threads) are for users to use, and the scheduling of user-level threads to kernel-level threads is handled by golang's runtime, and the scheduling logic is transparent to the outside world. The advantage of goroutine is that context switching is performed in the complete user state. There is no need to switch between user state and kernel state as frequently as threads, which saves resource consumption.

Scheduling implementation

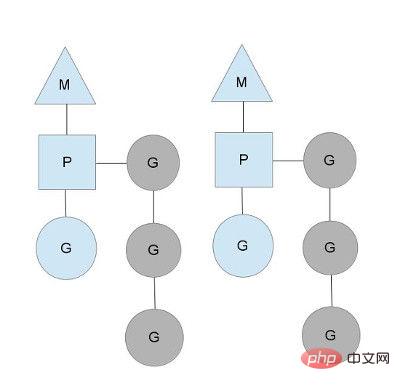

As seen from the above figure, there are 2 physical threads M, each M has a processor P, and each There is a goroutine running.

The number of P can be set through GOMAXPROCS(), which actually represents the real concurrency, that is, how many goroutines can run at the same time.

The gray goroutines in the picture are not running, but are in the ready state, waiting to be scheduled. P maintains this queue (called runqueue). In

Go language, it is easy to start a goroutine: just go function, so every time a go statement is executed, a

goroutine is added to the end of the runqueue queue. , at the next scheduling point, a goroutine is taken out from the runqueue (how to decide which goroutine to take?) and executed.

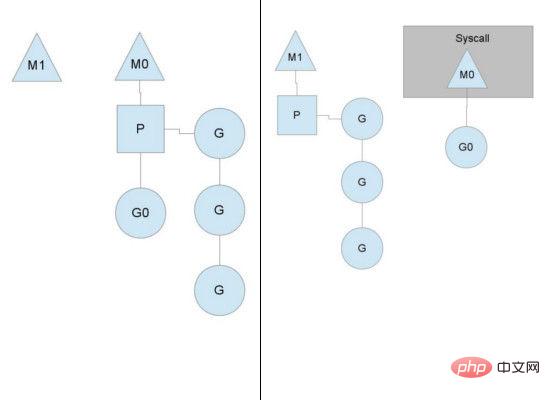

When an OS thread M0 is blocked (as shown below), P is running M1 instead. M1 in the picture may be being created or taken out from the thread cache.

When MO returns, it must try to get a P to run the goroutine. Normally, it will get one from other OS threads. P come over,

If it doesn’t get it, it will put the goroutine in a global

runqueue, and then sleep by itself (put in the thread cache). All P will also check global periodically

runqueue and run the goroutine in it, otherwise the goroutine on the global runqueue will never be executed.

Another situation is that the task G assigned by P is quickly completed (uneven distribution), which causes the processor P to be very busy, but other Ps are still busy. There is a task. At this time, if global There is no task G in runqueue, so P has to get some G from other P to execute. Generally speaking, if P wants to get a task from other P, it usually takes run half of the queue, which ensures that each OS thread can be fully used, as shown below:

3. Issues related to GPM creation

How to determine the number of M and P? Or when will M and P be created?

1. The number of P:

- is determined by the environment variable $GOMAXPROCS at startup or by the runtime method GOMAXPROCS() (the default is 1). This means that at any time during program execution, only $GOMAXPROCS goroutines are running at the same time.

2. Number of M:

- Limitations of the go language itself: When the go program starts, the maximum number of M will be set, with a default of 10000. However, it is difficult for the kernel to support it. With so many threads, this limit can be ignored.

- SetMaxThreads function in runtime/debug, sets the maximum number of M

- If an M is blocked, a new M will be created.

M has no absolute relationship with the number of P. If one M blocks, P will create or switch to another M. Therefore, even if the default number of P is 1, many M may be created. come out.

3. When P is created: After determining the maximum number n of P, the runtime system will create n Ps based on this number.

4. When M is created: There is not enough M to associate with P and run the runnable G in it. For example, if all M are blocked at this time, and there are still many ready tasks in P, it will look for an idle M. If there is no idle M, a new M will be created.

Which P association should M choose?

- M will select the P association that caused this M to be created.

When will the relationship between P and M be switched?

When M is blocked due to a system call (G running on M enters a system call), M and P will be separated. If there are tasks in P's ready queue at this time,

P It will associate with an idle M, or create an M for association. (That is to say, go does not handle IO blocking like libtask? Not sure.)

How does the ready G choose which P's ready queue to enter?

- By default: Because the default number of P is 1 (M is not necessarily 1), if we do not change GOMAXPROCS, no matter how many goroutines we create with the go statement in the program, they will It will only be stuffed into the ready queue of the same P.

- When there are multiple Ps: If GOMAXPROCS is modified or runtime.GOMAXPROCS is called, the runtime system will evenly distribute all Gs in the ready queue of each P.

How to ensure that each P's ready queue will have G

If all tasks in a P's ready queue have been executed, then P will try to take it out from other P's ready queues Part of it is put into its own ready queue to ensure that each P's ready queue has tasks to execute.

The above is the detailed content of About Golang coroutine scheduling. For more information, please follow other related articles on the PHP Chinese website!