This article introduces the Node.js Stream API, starting with the basics and working toward more detailed usage.

Basic introduction

In Node.js there are two ways to read a file: fs.readFile and fs.createReadStream.

fs.readFile is the one most Node.js users know best. It is simple to understand and easy to use, but it reads the entire file into memory before handing it to you. With a large file, memory use grows with the file size and nothing can be processed until the whole read has completed.

fs.createReadStream reads the data through a Stream instead. It splits the file (data) into small chunks and emits events as the chunks arrive; we listen for those events and write handlers for them. This takes more code than fs.readFile, but it keeps memory use low and lets processing begin as soon as the first chunk is available.
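
The difference between the two approaches can be sketched as follows. This is a minimal illustration, not a benchmark: the helper names countBytesStreaming and countBytesBuffered and the temporary demo file are assumptions made for this example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Count the bytes in a file by streaming it chunk by chunk.
// Only the current chunk needs to be held in memory.
function countBytesStreaming(filePath) {
  return new Promise((resolve, reject) => {
    let total = 0;
    fs.createReadStream(filePath)
      .on('data', (chunk) => { total += chunk.length; })
      .on('end', () => resolve(total))
      .on('error', reject);
  });
}

// Count the bytes by loading the whole file into memory first.
function countBytesBuffered(filePath) {
  return new Promise((resolve, reject) => {
    fs.readFile(filePath, (err, buffer) => {
      if (err) return reject(err);
      resolve(buffer.length);
    });
  });
}

// Demo on a hypothetical temporary file created just for this example.
const demoFile = path.join(os.tmpdir(), 'stream-demo-' + process.pid + '.txt');
fs.writeFileSync(demoFile, 'hello stream');

Promise.all([countBytesStreaming(demoFile), countBytesBuffered(demoFile)])
  .then(([streamed, buffered]) => {
    console.log(streamed, buffered); // the two counts match
    fs.unlinkSync(demoFile);
  });
```

Both functions return the same number; the streaming version simply never needs the whole file in memory at once.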

Streams are not limited to file handling in Node.js. They also appear in many other places, such as process.stdin/stdout, http, TCP sockets, zlib, and crypto.

This article is a summary of my learning about the Stream API in Node.js. I hope it will be useful to everyone.

Features

Event-based communication

You can connect streams through pipe

Types

Readable Stream: a stream that data can be read from

Writable Stream: a stream that data can be written to

Duplex Stream: a bidirectional stream that is both readable and writable

Transform Stream: a duplex stream that can modify (process) the data as it passes from the writable side to the readable side
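
As an illustration of the Transform type, here is a minimal sketch that upper-cases whatever passes through it. The names createUpperCaseStream and collect are made up for this example; Readable.from is only used to get an in-memory source.

```javascript
const { Readable, Transform } = require('stream');

// A Transform stream that upper-cases every chunk passing through it.
function createUpperCaseStream() {
  return new Transform({
    transform(chunk, encoding, callback) {
      // First argument is an error (none here), second is the transformed output.
      callback(null, chunk.toString().toUpperCase());
    }
  });
}

// Collect everything a readable stream emits into a single string.
function collect(readable) {
  return new Promise((resolve, reject) => {
    let result = '';
    readable.on('data', (chunk) => { result += chunk.toString(); });
    readable.on('end', () => resolve(result));
    readable.on('error', reject);
  });
}

collect(Readable.from(['node', ' ', 'streams']).pipe(createUpperCaseStream()))
  .then((text) => console.log(text));
```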

Events

Events of readable streams

readable: emitted when data is available to be read from the stream

data: emitted for each chunk of data. Adding a data listener to a stream that has not been explicitly paused switches it to flowing mode, and data is then handed to the listener as soon as it is available

end: emitted when there is no more data to read. Do not confuse it with writableStream.end(): a writable stream has no end event, only the .end() method

close: emitted when the underlying data source has been closed

error: emitted when an error occurs while reading data
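
The readable and end events listed above can be used together to consume a stream without switching it to flowing mode. A minimal sketch, assuming an in-memory source built with Readable.from; the helper name readAll is hypothetical:

```javascript
const { Readable } = require('stream');

// Consume a stream in paused mode: wait for 'readable', then pull chunks with read().
function readAll(readable) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    readable.on('readable', () => {
      let chunk;
      // read() returns null once the internal buffer is empty.
      while ((chunk = readable.read()) !== null) {
        chunks.push(chunk.toString());
      }
    });
    readable.on('end', () => resolve(chunks));
    readable.on('error', reject);
  });
}

readAll(Readable.from(['a', 'b', 'c'])).then((chunks) => console.log(chunks));
```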

Events of writable streams

drain: emitted after writable.write(chunk) has returned false, once the buffered data has been flushed and it is safe to write again

finish: emitted after the .end() method has been called and all buffered data has been flushed. It is the counterpart of the end event on a readable stream and marks the end of writing

pipe: emitted when the stream is set as the destination of a readable stream's pipe() call

unpipe: emitted when the stream is removed as a destination by a readable stream's unpipe() call

error: emitted when an error occurs while writing data
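
The interplay of write(), drain, and finish can be sketched as below. The in-memory destination createMemoryWritable and the helper writeAll are assumptions for illustration; the highWaterMark of 1 is deliberately tiny so that write() returns false and drain actually fires.

```javascript
const { Writable } = require('stream');

// Write every item to `writable`, waiting for 'drain' whenever write() returns false.
function writeAll(writable, items) {
  return new Promise((resolve, reject) => {
    let index = 0;
    writable.on('error', reject);
    writable.on('finish', resolve);

    function writeNext() {
      while (index < items.length) {
        const ok = writable.write(items[index++]);
        if (!ok) {
          // The internal buffer is full: continue once it has been flushed.
          writable.once('drain', writeNext);
          return;
        }
      }
      writable.end(); // 'finish' fires after the remaining buffered data is flushed
    }

    writeNext();
  });
}

// Hypothetical in-memory destination that stores each chunk in `sink`.
function createMemoryWritable(sink) {
  return new Writable({
    highWaterMark: 1,
    write(chunk, encoding, callback) {
      sink.push(chunk.toString());
      setImmediate(callback); // simulate an asynchronous write
    }
  });
}

const received = [];
writeAll(createMemoryWritable(received), ['one', 'two', 'three'])
  .then(() => console.log(received));
```

rs.pipe(ws) performs this kind of waiting for you, which is one reason to prefer pipe over calling write() by hand.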

States

A readable stream is in one of two states: flowing or paused. The state can be changed as follows:

Paused -> Flowing

Add a listener for the data event

Call the resume method

Call the pipe method

Note: If the stream switches to flowing while it has neither a data listener nor a pipe destination, the data will be lost.

Flowing -> Paused

If there are no pipe destinations, call the pause method

If there are pipe destinations, remove all data listeners and call the unpipe method to remove every pipe destination

Note: Removing the data listeners alone does not automatically put the stream into the paused state. Likewise, calling pause while pipe destinations exist does not guarantee that the stream stays paused: once those destinations ask for more data, the stream may start providing it again.
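
These transitions can be observed through the readableFlowing property, which is null before any consumer is attached, true while flowing, and false once paused. A small sketch; the function name observeFlowingStates is made up for this example:

```javascript
const { Readable } = require('stream');

// Return the readableFlowing value observed after each state-changing call.
function observeFlowingStates() {
  const readable = Readable.from(['x', 'y']);
  const states = [];

  states.push(readable.readableFlowing); // null: no consumer attached yet

  readable.on('data', () => {});         // adding a data listener starts the flow
  states.push(readable.readableFlowing); // true

  readable.pause();
  states.push(readable.readableFlowing); // false

  readable.resume();
  states.push(readable.readableFlowing); // true

  return states;
}

console.log(observeFlowingStates()); // [ null, true, false, true ]
```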

Usage

Read and write files

var fs = require('fs');

// Create a readable stream
var rs = fs.createReadStream('./test1.txt');

// Create a writable stream
var ws = fs.createWriteStream('./test2.txt');

// Listen for the end event of the readable stream
rs.on('end', function() {
  console.log('read test1.txt successfully!');
});

// Listen for the finish event of the writable stream
ws.on('finish', function() {
  console.log('write test2.txt successfully!');
});

// Switch the readable stream to flowing mode and pipe it into the writable stream
rs.pipe(ws);

Read a CSV file and upload the data (I have used this in production)

var fs = require('fs');
var es = require('event-stream');
var csv = require('csv');

var parser = csv.parse();

// Join the fields of each parsed record back into one comma-separated line
var transformer = csv.transform(function(record) {
  return record.join(',');
});

var data = fs.createReadStream('./demo.csv');

// upload(data, callback) is the application's own upload function; it is not defined here
data
  .pipe(parser)
  .pipe(transformer)
  // Process the data passed along by the previous stream
  .pipe(es.map(function(data, callback) {
    upload(data, function(err) {
      callback(err);
    });
  }))
  // Equivalent to listening for the end event of the previous stream
  .pipe(es.wait(function(err, body) {
    process.stdout.write('done!');
  }));

More Usage

See https://github.com/jeresig/node-stream-playground. After opening the demo site, click add stream to see the results directly.

Common Pitfalls

Writing a file with rs.pipe(ws) does not append the content of rs to the end of ws; it overwrites whatever the target file already contained. This is because fs.createWriteStream opens the file with the 'w' flag by default, which truncates it. Pass { flags: 'a' } to append instead

A stream that has ended or been closed cannot be reused; a new stream must be created

The pipe method returns the destination stream: a.pipe(b) returns b. When attaching event listeners in a chain, check that you are listening on the stream you intend

To listen for events on several streams that are chained together with pipe, write:

data
  .on('end', function() {
    console.log('data end');
  })
  .pipe(a)
  .on('end', function() {
    console.log('a end');
  })
  .pipe(b)
  .on('end', function() {
    console.log('b end');
  });
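
The chaining behaviour described above can be checked with a small self-contained sketch. It assumes two PassThrough streams standing in for a and b, and an in-memory source; traceChain is a hypothetical helper name.

```javascript
const { PassThrough, Readable } = require('stream');

// Build source -> a -> b and record which stream each 'end' listener fired for.
function traceChain() {
  return new Promise((resolve) => {
    const ended = [];
    const source = Readable.from(['chunk']);
    const a = new PassThrough();
    const b = new PassThrough();

    // .on() returns the stream it was called on; .pipe() returns its destination.
    const last = source
      .on('end', () => ended.push('source'))
      .pipe(a)
      .on('end', () => ended.push('a'))
      .pipe(b)
      .on('end', () => {
        ended.push('b');
        resolve({ ended: ended, lastIsB: last === b });
      });

    // Nothing is piped out of b, so switch it to flowing mode to let it finish.
    b.resume();
  });
}

traceChain().then((result) => console.log(result));
```

Each listener ends up attached to the stream that precedes it in the chain, and the value of the whole expression is b, the last destination.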

Commonly used libraries

event-stream: using it feels like functional programming, which I personally like

awesome-nodejs#streams: I have not used any other stream libraries myself, so if you need one, look here

That concludes this introduction to using the Stream API in Node.js. I hope you find it helpful.

Replace String Characters in JavaScriptMar 11, 2025 am 12:07 AM

Replace String Characters in JavaScriptMar 11, 2025 am 12:07 AMDetailed explanation of JavaScript string replacement method and FAQ This article will explore two ways to replace string characters in JavaScript: internal JavaScript code and internal HTML for web pages. Replace string inside JavaScript code The most direct way is to use the replace() method: str = str.replace("find","replace"); This method replaces only the first match. To replace all matches, use a regular expression and add the global flag g: str = str.replace(/fi

Custom Google Search API Setup TutorialMar 04, 2025 am 01:06 AM

Custom Google Search API Setup TutorialMar 04, 2025 am 01:06 AMThis tutorial shows you how to integrate a custom Google Search API into your blog or website, offering a more refined search experience than standard WordPress theme search functions. It's surprisingly easy! You'll be able to restrict searches to y

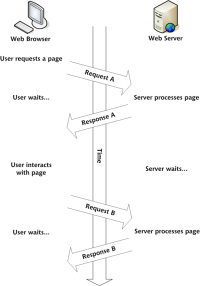

Build Your Own AJAX Web ApplicationsMar 09, 2025 am 12:11 AM

Build Your Own AJAX Web ApplicationsMar 09, 2025 am 12:11 AMSo here you are, ready to learn all about this thing called AJAX. But, what exactly is it? The term AJAX refers to a loose grouping of technologies that are used to create dynamic, interactive web content. The term AJAX, originally coined by Jesse J

Example Colors JSON FileMar 03, 2025 am 12:35 AM

Example Colors JSON FileMar 03, 2025 am 12:35 AMThis article series was rewritten in mid 2017 with up-to-date information and fresh examples. In this JSON example, we will look at how we can store simple values in a file using JSON format. Using the key-value pair notation, we can store any kind

8 Stunning jQuery Page Layout PluginsMar 06, 2025 am 12:48 AM

8 Stunning jQuery Page Layout PluginsMar 06, 2025 am 12:48 AMLeverage jQuery for Effortless Web Page Layouts: 8 Essential Plugins jQuery simplifies web page layout significantly. This article highlights eight powerful jQuery plugins that streamline the process, particularly useful for manual website creation

What is 'this' in JavaScript?Mar 04, 2025 am 01:15 AM

What is 'this' in JavaScript?Mar 04, 2025 am 01:15 AMCore points This in JavaScript usually refers to an object that "owns" the method, but it depends on how the function is called. When there is no current object, this refers to the global object. In a web browser, it is represented by window. When calling a function, this maintains the global object; but when calling an object constructor or any of its methods, this refers to an instance of the object. You can change the context of this using methods such as call(), apply(), and bind(). These methods call the function using the given this value and parameters. JavaScript is an excellent programming language. A few years ago, this sentence was

Improve Your jQuery Knowledge with the Source ViewerMar 05, 2025 am 12:54 AM

Improve Your jQuery Knowledge with the Source ViewerMar 05, 2025 am 12:54 AMjQuery is a great JavaScript framework. However, as with any library, sometimes it’s necessary to get under the hood to discover what’s going on. Perhaps it’s because you’re tracing a bug or are just curious about how jQuery achieves a particular UI

10 Mobile Cheat Sheets for Mobile DevelopmentMar 05, 2025 am 12:43 AM

10 Mobile Cheat Sheets for Mobile DevelopmentMar 05, 2025 am 12:43 AMThis post compiles helpful cheat sheets, reference guides, quick recipes, and code snippets for Android, Blackberry, and iPhone app development. No developer should be without them! Touch Gesture Reference Guide (PDF) A valuable resource for desig

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SublimeText3 Linux new version

SublimeText3 Linux latest version

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Atom editor mac version download

The most popular open source editor

SublimeText3 Mac version

God-level code editing software (SublimeText3)