Home >Backend Development >Golang >Why is Go so 'fast”?

Why is Go so 'fast”?

- 藏色散人forward

- 2020-03-04 09:34:313181browse

This article mainly introduces the Go program's internal scheduler implementation architecture (G-P-M model) in order to achieve extremely high concurrency performance, and how the Go scheduler handles thread blocking scenarios in order to maximize the use of computing resources.

How to make our system faster

With the rapid development of information technology, the processing power of a single server is getting stronger and stronger, forcing the programming model to change from the previous series Row mode is upgraded to concurrent model.

Concurrency models include IO multiplexing, multi-process and multi-threading. Each of these models has its own advantages and disadvantages. Most of the modern complex high-concurrency architectures use several models together, and different models are used in different scenarios. , use strengths and avoid weaknesses to maximize the performance of the server.

Multi-threading, because of its lightweight and ease of use, has become the most frequently used concurrency model in concurrent programming, including later-derived coroutines and other sub-products, which are also based on it.

Concurrency ≠ Parallel

Concurrency and parallelism are different.

On a single CPU core, threads realize task switching through time slices or giving up control rights to achieve the purpose of running multiple tasks "at the same time". This is the so-called concurrency. But in fact, only one task is executed at any time, and other tasks are queued through some algorithm.

Multi-core CPU can allow "multiple threads" in the same process to run at the same time in a true sense. This is parallelism.

Process, thread, coroutine

Process: Process is the basic unit of resource allocation in the system and has an independent memory space.

Thread: Thread is the basic unit of CPU scheduling and dispatch. Threads are attached to the process, and each thread will share the resources of the parent process.

Coroutine: Coroutine is a lightweight thread in user mode. The scheduling of coroutine is completely controlled by the user. Switching between coroutines only requires saving the context of the task, without kernel overhead.

Thread context switching

Due to interrupt processing, multitasking, user mode switching and other reasons, the CPU switches from one thread to another, and the switching process needs to be saved. The state of the current process and restore the state of another process.

Context switching is expensive because it takes a lot of time to swap threads on the core. The context switch latency depends on different factors and can range from 50 to 100 nanoseconds. Considering that the hardware executes an average of 12 instructions per nanosecond per core, a context switch may cost 600 to 1200 instructions in latency. In fact, context switching takes up a lot of the program's time to execute instructions.

If there is a cross-Core Context Switch, it may cause CPU cache failure (the cost of the CPU to access data from the cache is about 3 to 40 clock cycles, and the cost of accessing data from the main memory is about 100 to 300 clock cycles), the switching cost in this scenario will be more expensive.

Golang is born for concurrency

Since its official release in 2009, Golang has quickly occupied market share relying on its extremely high running speed and efficient development efficiency. Golang supports concurrency from the language level and uses lightweight coroutine Goroutine to realize concurrent running of programs.

Goroutine is very lightweight, mainly reflected in the following two aspects:

The cost of context switching is small: Goroutine context switching only involves the value modification of three registers (PC / SP / DX); In contrast, context switching of threads requires mode switching (switching from user mode to kernel mode) and refreshing of 16 registers, PC, SP... and other registers;

Low memory usage: the thread stack space is usually 2M, the minimum Goroutine stack space is 2K;

Golang program can easily support 10w level Goroutine operation, and when the number of threads reaches 1k, the memory usage has reached 2G.

Go scheduler implementation mechanism:

The Go program uses the scheduler to schedule Goroutine to execute on the kernel thread, but Goroutine is not directly bound to the OS thread M-Machine Instead of running, the P-Processor (logical processor) in the Goroutine Scheduler serves as the "intermediary" to obtain kernel thread resources.

The Go scheduler model is usually called the G-P-M model. It includes four important structures, namely G, P, M, and Sched:

G: Goroutine. Each Goroutine corresponds to a G structure. Body, G stores Goroutine's running stack, status and task functions, which can be reused.

G is not an execution body. Each G needs to be bound to P to be scheduled for execution.

P: Processor, represents a logical processor. For G, P is equivalent to a CPU core. G can only be scheduled if it is bound to P. For M, P provides relevant execution environment (Context), such as memory allocation status (mcache), task queue (G), etc.

The number of P determines the maximum number of G that can be parallelized in the system (premise: the number of physical CPU cores >= the number of P).

The number of P is determined by the GoMAXPROCS set by the user, but no matter how large the GoMAXPROCS setting is, the maximum number of P is 256.

M: Machine, OS kernel thread abstraction, represents the resource that actually performs calculations. After binding a valid P, it enters the schedule loop; and the mechanism of the schedule loop is roughly from the Global queue, P's Local queue and wait queue. obtained from. The number of

M is variable and is adjusted by Go Runtime. In order to prevent the system from scheduling too many OS threads due to the creation of too many, the current default maximum limit is 10,000.

M does not retain the state of G, which is the basis for G to be scheduled across M.

Sched: Go scheduler, which maintains queues that store M and G and some status information of the scheduler.

The scheduler cycle mechanism is roughly to obtain G from various queues and P's local queue, switch to G's execution stack and execute G's function, call Goexit to do the cleanup work and return to M, so repeatedly.

To understand the relationship between M, P, and G, you can illustrate the relationship through the classic model of a gopher cart moving bricks:

Gopher’s job is: there are a number of bricks on the construction site, and the Gopher uses a trolley to transport the bricks to the tinder for firing. M can be regarded as the gopher in the picture, P is the car, and G is the bricks installed in the car.

After clarifying the relationship between the three of them, let’s start to focus on how the gophers carry bricks.

Processor (P):

Create a batch of cars (P) based on the GoMAXPROCS value set by the user.

Goroutine(G):

The Go keyword is used to create a Goroutine, which is equivalent to making a brick (G), and then placing this brick (G) into the current This car is in (P).

Machine (M):

The mole (M) cannot be created externally. There are too many bricks (G) and too few moles (M). I am really busy. However, if there happens to be a free car (P) that is not used, then borrow some more gophers (M) from elsewhere until all the cars (P) are used up.

There is a process where the mole (M) is not enough and the mole (M) is borrowed from elsewhere. This process is to create a kernel thread (M).

It should be noted that: Gophers (M) cannot transport bricks without carts (P). The number of carts (P) determines the number of gophers (M) that can work. In Go The corresponding number in the program is the number of active threads;

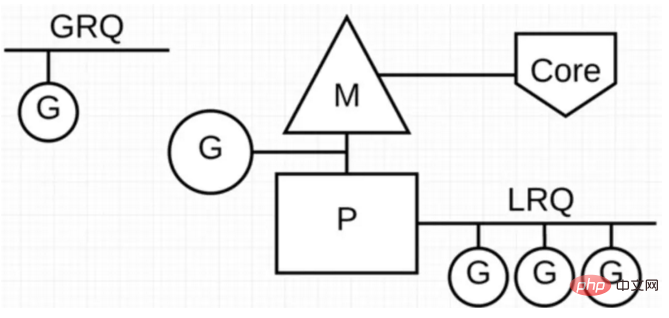

In the Go program we use the following illustration to show the G-P-M model:

P stands for "parallel" "The logical processor running, each P is assigned to a system thread M, G represents the Go coroutine.

There are two different run queues in the Go scheduler: global run queue (GRQ) and local run queue (LRQ).

Each P has an LRQ, which is used to manage the Goroutines assigned to execute in the context of P. These Goroutines are in turn context switched by the M bound to P. GRQ applies to Goroutines not yet assigned to P .

As can be seen from the above figure, the number of G can be much greater than the number of M. In other words, the Go program can use a small number of kernel-level threads to support the concurrency of a large number of Goroutines. Multiple Goroutines share the computing resources of the kernel thread M through user-level context switching, but there is no performance loss caused by thread context switching for the operating system.

In order to make full use of thread computing resources, the Go scheduler adopts the following scheduling strategies:

Task stealing (work-stealing)

We know that the reality is Some Goroutines run fast, and some run slowly, which will definitely bring about the problem of being busy to death and idle to death. Go definitely does not allow the existence of fishing P, and it is bound to make full use of computing resources.

In order to improve Go's parallel processing capabilities and increase overall processing efficiency, when the G tasks between each P are unbalanced, the scheduler allows G execution to be obtained from GRQ or LRQ of other Ps.

Reduce blocking

What if the executing Goroutine blocks thread M? Will Goroutine in LRQ on P not be able to obtain scheduling?

Blocking in Go is mainly divided into the following 4 scenarios:

Scenario 1: Goroutine is blocked due to atomic, mutex or channel operation calls, and the scheduler will switch the currently blocked Goroutine Go out and reschedule other Goroutines on LRQ;

Scenario 2: Goroutine is blocked due to network requests and IO operations. In this case of blocking, what will our G and M do?

The Go program provides a network poller (NetPoller) to handle network requests and IO operations. Its background uses kqueue (MacOS), epoll (Linux) or iocp (Windows) to implement IO multiplexing. use.

By using NetPoller to make network system calls, the scheduler can prevent Goroutine from blocking M when making these system calls. This allows M to execute other Goroutines in P's LRQ without creating a new M. Helps reduce scheduling load on the operating system.

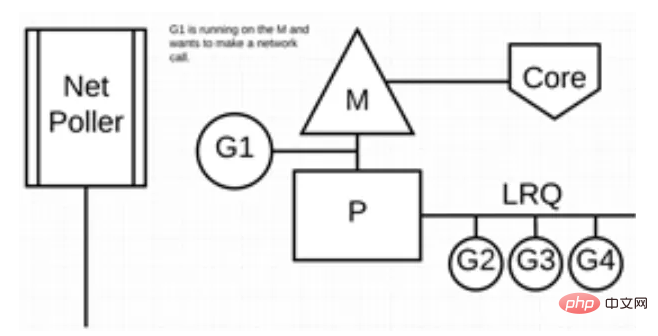

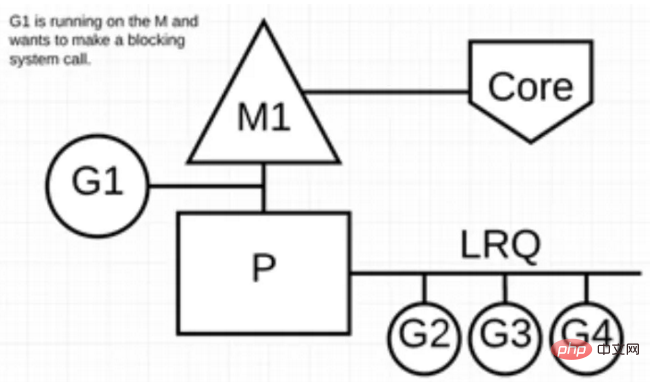

The following figure shows how it works: G1 is executing on M, and there are 3 Goroutines waiting to be executed on LRQ. The network poller is idle, doing nothing.

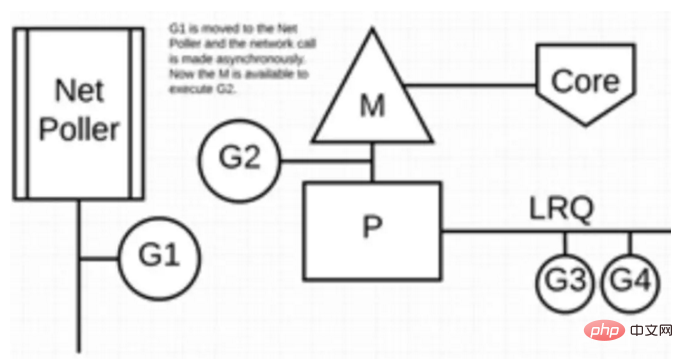

Next, G1 wants to make a network system call, so it is moved to the network poller and handles the asynchronous network system call. M can then execute additional Goroutines from the LRQ. At this time, G2 is context switched to M.

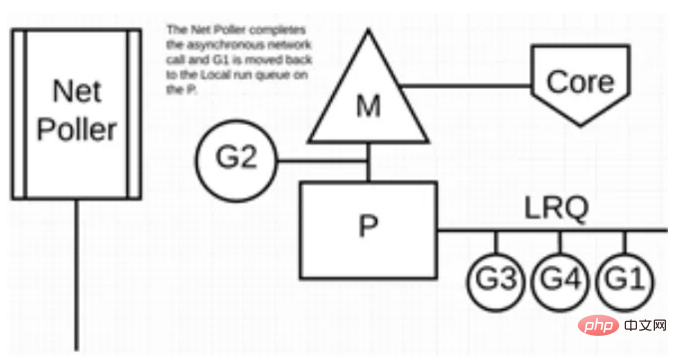

Finally, the asynchronous network system call is completed by the network poller, and G1 is moved back to P's LRQ. Once G1 can context switch on M, the Go-related code it is responsible for can be executed again. The big advantage here is that no extra M is required to perform network system calls. The network poller uses a system thread, which processes an active event loop at all times.

This calling method seems very complicated. Fortunately, the Go language hides this "complexity" in Runtime: Go developers do not need to pay attention to whether the socket is It is non-block, and there is no need to register the callback of the file descriptor in person. You only need to treat the socket in the "block I/O" method in the Goroutine corresponding to each connection, realizing a simple goroutine-per-connection network. Programming mode (but a large number of Goroutines will also bring additional problems, such as increased stack memory and increased burden on the scheduler).

The "block socket" in Goroutine seen by the user layer is actually "simulated" through the Non-block socket I/O multiplexing mechanism through the netpoller in the Go runtime. The net library in Go is implemented exactly this way.

Scenario 3: When calling some system methods, if the system method is blocked, in this case, the network poller (NetPoller) cannot be used, and the Goroutine that makes the system call will block the current M.

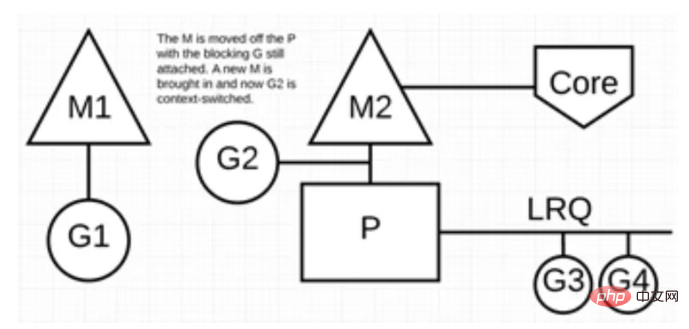

Let's look at the situation where a synchronous system call (such as file I/O) will cause M to block: G1 will make a synchronous system call to block M1.

After the scheduler intervenes: it recognizes that G1 has caused M1 to block. At this time, the scheduler separates M1 from P and also takes G1 away. The scheduler then introduces a new M2 to serve P. At this point, G2 can be selected from the LRQ and a context switch can be performed on M2.

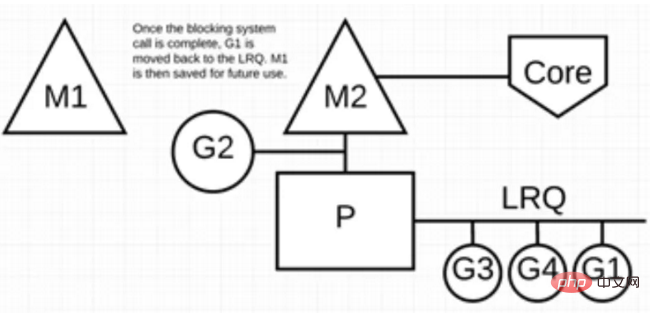

After the blocked system call completes: G1 can be moved back to LRQ and executed by P again. If this happens again, the M1 will be set aside for future reuse.

Scenario 4: If a sleep operation is performed in Goroutine, M will be blocked.

There is a monitoring thread sysmon in the background of the Go program. It monitors long-running G tasks and then sets identifiers that can be usurped, so that other Goroutines can preemptively execute them.

As long as this Goroutine makes a function call next time, it will be occupied, the scene will also be protected, and then put back into P's local queue to wait for the next execution.

Summary

This article mainly introduces the G-P-M model from the perspective of the Go scheduler architecture. Through this model, how to achieve a small number of kernel threads to support the concurrent operation of a large number of Goroutines. And through NetPoller, sysmon, etc., it helps Go programs reduce thread blocking and make full use of existing computing resources, thereby maximizing the operating efficiency of Go programs.

For more go language knowledge, please pay attention to the go language tutorial column on the php Chinese website.

The above is the detailed content of Why is Go so 'fast”?. For more information, please follow other related articles on the PHP Chinese website!