Home >Database >Mysql Tutorial >MySQL interview questions with answers-2019

MySQL interview questions with answers-2019

- angryTomforward

- 2019-08-05 16:35:285086browse

With the advancement and development of technology, interviewers have higher and higher requirements for interviewees. Now, as long as it is a back-end development position, the interview will definitely ask about the database. Relevant knowledge, and mysql is currently the most popular free relational database management technology. It is not surprising that questions related to it are asked during interviews. So now, we have collected some interview questions and answers for you. Let’s take a look.

Recommended tutorial: MySQL introductory video

# #1. Primary key super key candidate key foreign key

Primary key:

Store the pair in the database table A combination of data columns or attributes that uniquely and completely identifies a data object. A data column can only have one primary key, and the value of the primary key cannot be missing, that is, it cannot be null.

Super key:

The set of attributes that can uniquely identify a tuple in a relationship is called the super key of the relationship pattern. key. An attribute can be used as a super key, and multiple attributes combined can also be used as a super key. Super key contains candidate key and primary key.

Candidate key:

is the minimum superkey, that is, a superkey without redundant elements.

Foreign key:

The primary key of another table that exists in one table is called the foreign key of this table .

2. Four characteristics and meanings of database transactions

Four basic elements for the correct execution of database transaction transaction . ACID, Atomicity, Correspondence, Isolation, Durability.

Atomicity:All operations in the entire transaction are either completed or not completed, and it is impossible to stagnate in some intermediate link. If an error occurs during the execution of the transaction, it will be rolled back to the state before the transaction started, as if the transaction had never been executed.

Consistency: Before the transaction starts and after the transaction ends, the integrity constraints of the database are not violated.

Isolation: Isolate state execution of transactions so that they appear to be the only operations performed by the system at a given time. If there are two transactions, running at the same time, performing the same function, transaction isolation will ensure that each transaction in the system thinks that only that transaction is using the system. This property is sometimes called serialization. To prevent confusion between transaction operations, requests must be serialized, or serialized, so that there is only one request for the same data at the same time.

Persistence:After the transaction is completed, the changes made to the database by the transaction are permanently saved in the database and Will not be rolled back.

3. The role of the view. Can the view be changed?

A view is a virtual table. Unlike a table containing data, a view only contains queries that dynamically retrieve data when used; it does not contain any columns or data. Using views can simplify complex SQL operations, hide specific details, and protect data; once views are created, they can be utilized in the same way as tables.

Views cannot be indexed, nor can they have associated triggers or default values. If there is order by in the view itself, order by again on the view will be overwritten.

Create view: create view It can be updated, and updates to the view will update the base table; however, the view is mainly used to simplify retrieval and protect data, not for updating, and most views cannot be updated.

4. The difference between drop, delete and truncate

drop deletes the table directly and truncate deletes the data in the table. When inserting again, the id will increase automatically and delete will start from 1 to delete the data in the table. You can add where clause.

(1) The DELETE statement deletes one row from the table at a time, and at the same time saves the row's deletion operation as a transaction record in the log for rollback operation. TRUNCATE TABLE deletes all data from the table at once and does not record individual deletion operation records in the log. Deleted rows cannot be recovered. And the delete trigger related to the table will not be activated during the delete process. Execution speed is fast.

(2) The space occupied by tables and indexes. When a table is TRUNCATE, the space occupied by the table and indexes will be restored to the original size, and the DELETE operation will not reduce the space occupied by the table or indexes. The drop statement releases all the space occupied by the table.

(3) Generally speaking, drop > truncate > delete

(4) Scope of application. TRUNCATE can only be used for TABLE; DELETE can be table and view

(5) TRUNCATE and DELETE only delete data, while DROP deletes the entire table (structure and data).

(6) truncate and delete without where: only delete the data, but not the structure (definition) of the table. The drop statement will delete the constraints (constrain) that the structure of the table depends on. , Trigger index (index); the stored procedures/functions that depend on the table will be retained, but their status will become: invalid.

(7) The delete statement is DML (data maintain language). This operation will be placed in the rollback segment and will take effect only after the transaction is submitted. If there is a corresponding tigger, it will be triggered during execution.

(8) Truncate and drop are DLLs (data define language), and the operations take effect immediately. The original data is not placed in the rollback segment and cannot be rolled back

(9) If there is no backup, use drop and truncate with caution. To delete some data rows, use delete and combine it with where to limit the scope of influence. The rollback segment must be large enough. To delete a table, use drop; if you want to retain the table but delete the data in the table, if it has nothing to do with the transaction, use truncate. If it is related to a transaction, or the teacher wants to trigger a trigger, still use delete.

(10) Truncate table table name is fast and efficient because:

truncate table is functionally the same as the DELETE statement without a WHERE clause: both Delete all rows in the table. However, TRUNCATE TABLE is faster than DELETE and uses fewer system and transaction log resources. The DELETE statement deletes one row at a time and records an entry in the transaction log for each row deleted. TRUNCATE TABLE deletes data by freeing the data pages used to store table data, and only records the page freeing in the transaction log.

(11) TRUNCATE TABLE deletes all rows in the table, but the table structure and its columns, constraints, indexes, etc. remain unchanged. The count used to identify new rows is reset to the seed of that column. If you want to preserve the identity count value, use DELETE instead. If you want to delete a table definition and its data, use the DROP TABLE statement.

(12) For tables referenced by FOREIGN KEY constraints, TRUNCATE TABLE cannot be used, but a DELETE statement without a WHERE clause should be used. Because TRUNCATE TABLE is not logged, it cannot activate the trigger.

5. The working principle and types of indexes

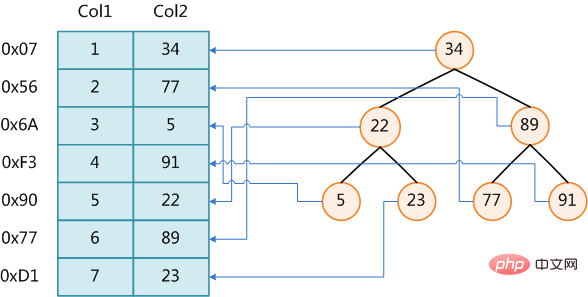

## Database index is a database A sorted data structure in the management system to assist in quickly querying and updating data in database tables. Index implementation usually uses B-tree and its variant B-tree.

In addition to data, the database system also maintains data structures that satisfy specific search algorithms. These data structures reference (point to) the data in some way, so that these data structures can Implement advanced search algorithms. This data structure is an index.

There are costs to be paid for setting indexes on tables: First, it increases the storage space of the database, and second, it takes more time to insert and modify data (because the index also needs to be updated at any time). changes).

The figure shows a possible indexing method. On the left is a data table with a total of two columns and seven records. The leftmost one is the physical address of the data record (note that logically adjacent records are not necessarily physically adjacent on the disk). In order to speed up the search of Col2, you can maintain a binary search tree as shown on the right. Each node contains the index key value and a pointer to the physical address of the corresponding data record. In this way, you can use binary search in O(log2n) The corresponding data is obtained within the complexity.

Creating indexes can greatly improve system performance.

First, by creating a unique index, the uniqueness of each row of data in the database table can be guaranteed.

Second, it can greatly speed up data retrieval, which is also the main reason for creating an index.

Third, it can speed up the connection between tables, which is particularly meaningful in achieving referential integrity of data.

Fourth, when using grouping and sorting clauses for data retrieval, the time for grouping and sorting in the query can also be significantly reduced.

Fifth, by using indexes, you can use optimization hiders during the query process to improve system performance.

Someone may ask: There are so many advantages to adding an index, why not create an index for every column in the table? Because, adding indexes also has many disadvantages.

First, creating and maintaining indexes takes time, and this time increases as the amount of data increases.

Second, the index needs to occupy physical space. In addition to the data space occupied by the data table, each index also occupies a certain amount of physical space. If you want to build a clustered index, then you need The space will be larger.

Third, when adding, deleting, and modifying data in the table, the index must be dynamically maintained, which reduces the data maintenance speed.

Indexes are built on certain columns in the database table. When creating an index, you should consider which columns can be indexed on and which columns cannot be indexed on. Generally speaking, indexes should be created on these columns: on columns that are frequently searched, it can speed up searches; on columns that serve as primary keys, it enforces the uniqueness of the column and organizes the arrangement structure of the data in the table; on columns that are often searched, Used on connected columns, these columns are mainly foreign keys, which can speed up the connection; create indexes on columns that often need to be searched based on range, because the index has been sorted, and its specified range is continuous; in often needed Create an index on the sorted column, because the index has been sorted, so that the query can use the sorting of the index to speed up the sorting query time; create an index on the column that is often used in the WHERE clause to speed up the judgment of conditions.

Similarly, indexes should not be created for some columns. Generally speaking, these columns that should not be indexed have the following characteristics:

First, indexes should not be created for columns that are rarely used or referenced in queries. This is because, since these columns are rarely used, indexing or not indexing does not improve query speed. On the contrary, due to the addition of indexes, the maintenance speed of the system is reduced and the space requirements are increased.

Second, indexes should not be added to columns with few data values. This is because, since these columns have very few values, such as the gender column of the personnel table, in the query results, the data rows in the result set account for a large proportion of the data rows in the table, that is, the data that needs to be searched in the table The proportion of rows is huge. Increasing the index does not significantly speed up retrieval.

Third, indexes should not be added to columns defined as text, image and bit data types. This is because the data volume of these columns is either quite large or has very few values.

Fourth, when the modification performance is far greater than the retrieval performance, the index should not be created. This is because modification performance and retrieval performance are contradictory to each other. When adding indexes, retrieval performance will be improved, but modification performance will be reduced. When reducing indexes, modification performance will increase and retrieval performance will decrease. Therefore, when modification performance is much greater than retrieval performance, indexes should not be created.

Based on the functions of the database, three types of indexes can be created in the database designer: unique index, primary key index and clustered index.

Unique Index

A unique index is an index that does not allow any two rows to have the same index value.

Most databases do not allow newly created unique indexes to be saved with the table when there are duplicate key values in the existing data. The database may also prevent adding new data that would create duplicate key values in the table. For example, if a unique index is created on the employee's last name (lname) in the employee table, no two employees can have the same last name. Primary Key Index Database tables often have a column or combination of columns whose values uniquely identify each row in the table. This column is called the primary key of the table. Defining a primary key for a table in a database diagram automatically creates a primary key index, which is a specific type of unique index. The index requires each value in the primary key to be unique. It also allows fast access to data when using a primary key index in a query. Clustered Index In a clustered index, the physical order of the rows in the table is the same as the logical (index) order of the key values. A table can contain only one clustered index.

If an index is not a clustered index, the physical order of the rows in the table does not match the logical order of the key values. Clustered indexes generally provide faster data access than nonclustered indexes.

Locality principle and disk read-ahead

Due to the characteristics of the storage medium, the disk itself access is slower than the main disk. Storage is much slower. Coupled with the cost of mechanical movement, the access speed of disk is often one hundredth of that of main memory. Therefore, in order to improve efficiency, disk I/O must be minimized. In order to achieve this goal, the disk often does not read strictly on demand, but reads in advance every time. Even if only one byte is needed, the disk will start from this position and sequentially read a certain length of data backwards into the memory. The theoretical basis for this is the well-known locality principle in computer science: when a piece of data is used, nearby data is usually used immediately. The data required during program execution is usually concentrated.

Because sequential disk reads are very efficient (no seek time, very little spin time), read-ahead can be used for programs with locality. Improve I/O efficiency.

The read-ahead length is generally an integral multiple of the page. Pages are logical blocks of computer-managed memory. Hardware and operating systems often divide main memory and disk storage areas into consecutive equal-sized blocks. Each storage block is called a page (in many operating systems, the page size is usually 4k), main memory and disk exchange data in units of pages. When the data to be read by the program is not in the main memory, a page fault exception will be triggered. At this time, the system will send a read signal to the disk, and the disk will find the starting position of the data and read one or more pages backwards. Load into memory, then return abnormally, and the program continues to run.

Performance analysis of B-/Tree index

At this point we can finally analyze the performance of B-/Tree index .

As mentioned above, the number of disk I/Os is generally used to evaluate the quality of the index structure. Let’s start with the B-Tree analysis. According to the definition of B-Tree, it can be seen that a maximum of h nodes need to be visited for one retrieval. The designers of the database system cleverly took advantage of the disk read-ahead principle and set the size of a node to be equal to one page, so that each node can be fully loaded with only one I/O. In order to achieve this goal, the following techniques need to be used in the actual implementation of B-Tree:

Every time a new node is created, a page of space is directly applied for, thus ensuring that a node is physically It is stored in a page, and the computer storage allocation is page-aligned, so a node only needs one I/O.

A retrieval in B-Tree requires at most h-1 I/O (root node resident memory), and the asymptotic complexity is O(h)=O(logdN). In general practical applications, the out-degree d is a very large number, usually more than 100, so h is very small (usually no more than 3).

As for the red-black tree structure, h is obviously much deeper. Since nodes (parents and children) that are logically close may be physically far away and locality cannot be exploited, the I/O asymptotic complexity of the red-black tree is also O(h), and the efficiency is obviously much worse than that of the B-Tree.

In summary, using B-Tree as an index structure is very efficient.

6. Type of connection

Execute in query analyzer:

--Create tables table1, table2:

create table table1(id int,name varchar(10)) create table table2(id int,score int) insert into table1 select 1,'lee' insert into table1 select 2,'zhang' insert into table1 select 4,'wang' insert into table2 select 1,90 insert into table2 select 2,100 insert into table2 select 3,70

For example, table

------------------------------------------------- table1 | table2 | ------------------------------------------------- id name |id score | 1 lee |1 90| 2 zhang| 2 100| 4 wang| 3 70| -------------------------------------------------

The following are all in query analysis Executed in the processor

1. Outer join

1. Concept: including left outer join, right outer join or complete outer join

2.左连接:left join 或 left outer join

(1)左向外联接的结果集包括 LEFT OUTER 子句中指定的左表的所有行,而不仅仅是联接列所匹配的行。如果左表的某行在右表中没有匹配行,则在相关联的结果集行中右表的所有选择列表列均为空值(null)。

(2)sql 语句

select * from table1 left join table2 on table1.id=table2.id

-------------结果------------- idnameidscore ------------------------------ 1lee190 2zhang2100 4wangNULLNULL ------------------------------

注释:包含table1的所有子句,根据指定条件返回table2相应的字段,不符合的以null显示

3.右连接:right join 或 right outer join

(1)右向外联接是左向外联接的反向联接。将返回右表的所有行。如果右表的某行在左表中没有匹配行,则将为左表返回空值。

(2)sql 语句

select * from table1 right join table2 on table1.id=table2.id

-------------结果------------- idnameidscore ------------------------------ 1lee190 2zhang2100 NULLNULL370 ------------------------------

注释:包含table2的所有子句,根据指定条件返回table1相应的字段,不符合的以null显示

4.完整外部联接:full join 或 full outer join

(1)完整外部联接返回左表和右表中的所有行。当某行在另一个表中没有匹配行时,则另一个表的选择列表列包含空值。如果表之间有匹配行,则整个结果集行包含基表的数据值。

(2)sql 语句

select * from table1 full join table2 on table1.id=table2.id

-------------结果------------- idnameidscore ------------------------------ 1lee190 2zhang2100 4wangNULLNULL NULLNULL370 ------------------------------

注释:返回左右连接的和(见上左、右连接)

二、内连接

1.概念:内联接是用比较运算符比较要联接列的值的联接

2.内连接:join 或 inner join

3.sql 语句

select * from table1 join table2 on table1.id=table2.id

-------------结果------------- idnameidscore ------------------------------ 1lee190 2zhang2100 ------------------------------

注释:只返回符合条件的table1和table2的列

4.等价(与下列执行效果相同)

A:select a.*,b.* from table1 a,table2 b where a.id=b.id

B:select * from table1 cross join table2 where table1.id=table2.id (注:cross join后加条件只能用where,不能用on)

三、交叉连接(完全)

1.概念:没有 WHERE 子句的交叉联接将产生联接所涉及的表的笛卡尔积。第一个表的行数乘以第二个表的行数等于笛卡尔积结果集的大小。(table1和table2交叉连接产生3*3=9条记录)

2.交叉连接:cross join (不带条件where...)

3.sql语句

select * from table1 cross join table2

-------------结果------------- idnameidscore ------------------------------ 1lee190 2zhang190 4wang190 1lee2100 2zhang2100 4wang2100 1lee370 2zhang370 4wang370 ------------------------------

注释:返回3*3=9条记录,即笛卡尔积

4.等价(与下列执行效果相同)

A:select * from table1,table2

7、数据库范式

1) 第一范式(1NF)

在任何一个关系数据库中,第一范式(1NF)是对关系模式的基本要求,不满足第一范式(1NF)的数据库就不是关系数据库。

所谓第一范式(1NF)是指数据库表的每一列都是不可分割的基本数据项,同一列中不能有多个值,即实体中的某个属性不能有多个值或者不能有重复的属性。如果出现重复的属性,就可能需要定义一个新的实体,新的实体由重复的属性构成,新实体与原实体之间为一对多关系。在第一范式(1NF)中表的每一行只包含一个实例的信息。简而言之,第一范式就是无重复的列。

2 )第二范式(2NF)

第二范式(2NF)是在第一范式(1NF)的基础上建立起来的,即满足第二范式(2NF)必须先满足第一范式(1NF)。第二范式(2NF)要求数据库表中的每个实例或行必须可以被惟一地区分。为实现区分通常需要为表加上一个列,以存储各个实例的惟一标识。这个惟一属性列被称为主关键字或主键、主码。

第二范式(2NF)要求实体的属性完全依赖于主关键字。所谓完全依赖是指不能存在仅依赖主关键字一部分的属性,如果存在,那么这个属性和主关键字的这一部分应该分离出来形成一个新的实体,新实体与原实体之间是一对多的关系。为实现区分通常需要为表加上一个列,以存储各个实例的惟一标识。简而言之,第二范式就是非主属性非部分依赖于主关键字。

3 )第三范式(3NF)

满足第三范式(3NF)必须先满足第二范式(2NF)。简而言之,第三范式(3NF)要求一个数据库表中不包含已在其它表中已包含的非主关键字信息。例如,存在一个部门信息表,其中每个部门有部门编号(dept_id)、部门名称、部门简介等信息。那么在员工信息表中列出部门编号后就不能再将部门名称、部门简介等与部门有关的信息再加入员工信息表中。如果不存在部门信息表,则根据第三范式(3NF)也应该构建它,否则就会有大量的数据冗余。简而言之,第三范式就是属性不依赖于其它非主属性。(我的理解是消除冗余)

8、数据库优化的思路

这个我借鉴了慕课上关于数据库优化的课程。

一、SQL语句优化

1)应尽量避免在 where 子句中使用!=或操作符,否则将引擎放弃使用索引而进行全表扫描。

2)应尽量避免在 where 子句中对字段进行 null 值判断,否则将导致引擎放弃使用索引而进行全表扫描,如:

select id from t where num is null

可以在num上设置默认值0,确保表中num列没有null值,然后这样查询:

select id from t where num=0

3)很多时候用 exists 代替 in 是一个好的选择

4)用Where子句替换HAVING 子句 因为HAVING 只会在检索出所有记录之后才对结果集进行过滤

二、索引优化

看上文索引

三、数据库结构优化

1)范式优化: 比如消除冗余(节省空间。。)

2)反范式优化:比如适当加冗余等(减少join)

3)拆分表: 分区将数据在物理上分隔开,不同分区的数据可以制定保存在处于不同磁盘上的数据文件里。这样,当对这个表进行查询时,只需要在表分区中进行扫描,而不必进行全表扫描,明显缩短了查询时间,另外处于不同磁盘的分区也将对这个表的数据传输分散在不同的磁盘I/O,一个精心设置的分区可以将数据传输对磁盘I/O竞争均匀地分散开。对数据量大的时时表可采取此方法。可按月自动建表分区。

4)拆分其实又分垂直拆分和水平拆分: 案例: 简单购物系统暂设涉及如下表: 1.产品表(数据量10w,稳定) 2.订单表(数据量200w,且有增长趋势) 3.用户表 (数据量100w,且有增长趋势) 以mysql为例讲述下水平拆分和垂直拆分,mysql能容忍的数量级在百万静态数据可以到千万 垂直拆分:解决问题:表与表之间的io竞争 不解决问题:单表中数据量增长出现的压力 方案: 把产品表和用户表放到一个server上 订单表单独放到一个server上 水平拆分: 解决问题:单表中数据量增长出现的压力 不解决问题:表与表之间的io争夺

方案: 用户表通过性别拆分为男用户表和女用户表 订单表通过已完成和完成中拆分为已完成订单和未完成订单 产品表 未完成订单放一个server上 已完成订单表盒男用户表放一个server上 女用户表放一个server上(女的爱购物 哈哈)

四、服务器硬件优化

这个么多花钱咯!

9、存储过程与触发器的区别

Триггеры очень похожи на хранимые процедуры. Триггеры также представляют собой наборы операторов SQL. Единственная разница между ними заключается в том, что триггеры не могут быть вызваны с помощью оператора EXECUTE, но автоматически запускаются (активируются), когда пользователь выполняет оператор Transact-SQL. Триггер — это хранимая процедура, которая выполняется при изменении данных в указанной таблице. Триггеры часто создаются для обеспечения ссылочной целостности и согласованности логически связанных данных в разных таблицах. Поскольку пользователи не могут обойти триггеры, их можно использовать для обеспечения соблюдения сложных бизнес-правил для обеспечения целостности данных. Триггеры отличаются от хранимых процедур. Триггеры в основном выполняются через триггеры выполнения событий, а хранимые процедуры можно вызывать непосредственно через имя хранимой процедуры. Когда такие операции, как UPDATE, INSERT и DELETE, выполняются над определенной таблицей, SQLSERVER автоматически выполняет операторы SQL, определенные триггером, тем самым гарантируя, что обработка данных должна соответствовать правилам, определенным этими операторами SQL.

Исходный адрес: https://www.cnblogs.com/frankielf0921/p/5930743.html

The above is the detailed content of MySQL interview questions with answers-2019. For more information, please follow other related articles on the PHP Chinese website!