Spark is an open source cluster computing system based on memory computing, which aims to make data analysis faster. Spark is very small and exquisite, and was developed by a small team led by Matei from the AMP Laboratory at the University of California, Berkeley. The language used is Scala, and the code for the core part of the project only has 63 Scala files, which is very short and concise.

Spark is an open source cluster computing environment similar to Hadoop, but there are some differences between the two. These useful differences make Spark superior in certain workloads. In other words, Spark enables in-memory distributed datasets that can optimize iterative workloads in addition to being able to provide interactive queries.

Spark is implemented in the Scala language and uses Scala as its application framework. Unlike Hadoop, Spark and Scala are tightly integrated, with Scala making it possible to manipulate distributed data sets as easily as local collection objects.

Although Spark was created to support iterative jobs on distributed data sets, it is actually complementary to Hadoop and can run in parallel on the Hadoop file system. This behavior is supported through a third-party cluster framework called Mesos. Developed by the UC Berkeley AMP Lab (Algorithms, Machines, and People Lab), Spark can be used to build large-scale, low-latency data analysis applications.

Spark Cluster Computing Architecture

Although Spark has similarities with Hadoop, it provides a new cluster computing framework with useful differences. First, Spark is designed for a specific type of workload in cluster computing, namely those that reuse working data sets (such as machine learning algorithms) between parallel operations. To optimize these types of workloads, Spark introduces the concept of in-memory cluster computing, where data sets are cached in memory to reduce access latency.

Spark also introduces an abstraction called Resilient Distributed Dataset (RDD). An RDD is a collection of read-only objects distributed across a set of nodes. These collections are resilient and can be reconstructed if part of the data set is lost. The process of reconstructing a partial dataset relies on a fault-tolerant mechanism that maintains "lineage" (i.e., information that allows partial reconstruction of the dataset based on data derivation processes). An RDD is represented as a Scala object, which can be created from a file; a parallelized slice (spread across nodes); another transformed form of the RDD; and ultimately a complete change to the persistence of the existing RDD, such as requests Cached in memory.

Applications in Spark are called drivers, and these drivers implement operations that are performed on a single node or in parallel on a set of nodes. Like Hadoop, Spark supports single-node clusters or multi-node clusters. For multi-node operation, Spark relies on the Mesos cluster manager. Mesos provides an efficient platform for resource sharing and isolation for distributed applications. This setup allows Spark and Hadoop to coexist in a shared pool of nodes.

For more technical articles related to Apache, please visit the Apache Tutorial column to learn!

The above is the detailed content of what is apache spark. For more information, please follow other related articles on the PHP Chinese website!

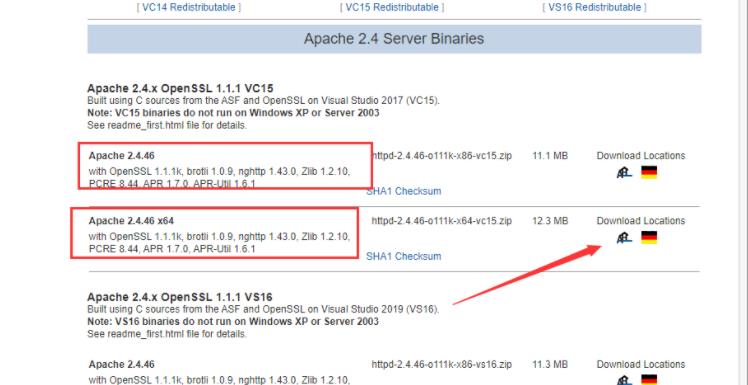

图文详解apache2.4+php8.0的安装配置方法Dec 06, 2022 pm 04:53 PM

图文详解apache2.4+php8.0的安装配置方法Dec 06, 2022 pm 04:53 PM本文给大家介绍如何安装apache2.4,以及如何配置php8.0,文中附有图文详细步骤,下面就带大家一起看看怎么安装配置apache2.4+php8.0吧~

Linux apache怎么限制并发连接和下载速度May 12, 2023 am 10:49 AM

Linux apache怎么限制并发连接和下载速度May 12, 2023 am 10:49 AMmod_limitipconn,这个是apache的一个非官方模块,根据同一个来源ip进行并发连接控制,bw_mod,它可以根据来源ip进行带宽限制,它们都是apache的第三方模块。1.下载:wgetwget2.安装#tar-zxvfmod_limitipconn-0.22.tar.gz#cdmod_limitipconn-0.22#vimakefile修改:apxs=“/usr/local/apache2/bin/apxs”#这里是自己apache的apxs路径,加载模块或者#/usr/lo

apache版本怎么查看?Jun 14, 2019 pm 02:40 PM

apache版本怎么查看?Jun 14, 2019 pm 02:40 PM查看apache版本的步骤:1、进入cmd命令窗口;2、使用cd命令切换到Apache的bin目录下,语法“cd bin目录路径”;3、执行“httpd -v”命令来查询版本信息,在输出结果中即可查看apache版本号。

超细!Ubuntu20.04安装Apache+PHP8环境Mar 21, 2023 pm 03:26 PM

超细!Ubuntu20.04安装Apache+PHP8环境Mar 21, 2023 pm 03:26 PM本篇文章给大家带来了关于PHP的相关知识,其中主要跟大家分享在Ubuntu20.04 LTS环境下安装Apache的全过程,并且针对其中可能出现的一些坑也会提供解决方案,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

nginx,tomcat,apache的区别是什么May 15, 2023 pm 01:40 PM

nginx,tomcat,apache的区别是什么May 15, 2023 pm 01:40 PM1.Nginx和tomcat的区别nginx常用做静态内容服务和代理服务器,直接外来请求转发给后面的应用服务器(tomcat,Django等),tomcat更多用来做一个应用容器,让javawebapp泡在里面的东西。严格意义上来讲,Apache和nginx应该叫做HTTPServer,而tomcat是一个ApplicationServer是一个Servlet/JSO应用的容器。客户端通过HTTPServer访问服务器上存储的资源(HTML文件,图片文件等),HTTPServer是中只是把服务器

php站用iis乱码而apache没事怎么解决Mar 23, 2023 pm 02:48 PM

php站用iis乱码而apache没事怎么解决Mar 23, 2023 pm 02:48 PM在使用 PHP 进行网站开发时,你可能会遇到字符编码问题。特别是在使用不同的 Web 服务器时,会发现 IIS 和 Apache 处理字符编码的方法不同。当你使用 IIS 时,可能会发现在使用 UTF-8 编码时出现了乱码现象;而在使用 Apache 时,一切正常,没有出现任何问题。这种情况应该怎么解决呢?

如何在 RHEL 9/8 上设置高可用性 Apache(HTTP)集群Jun 09, 2023 pm 06:20 PM

如何在 RHEL 9/8 上设置高可用性 Apache(HTTP)集群Jun 09, 2023 pm 06:20 PMPacemaker是适用于类Linux操作系统的高可用性集群软件。Pacemaker被称为“集群资源管理器”,它通过在集群节点之间进行资源故障转移来提供集群资源的最大可用性。Pacemaker使用Corosync进行集群组件之间的心跳和内部通信,Corosync还负责集群中的投票选举(Quorum)。先决条件在我们开始之前,请确保你拥有以下内容:两台RHEL9/8服务器RedHat订阅或本地配置的仓库通过SSH访问两台服务器root或sudo权限互联网连接实验室详情:服务器1:node1.exa

Linux下如何查看nginx、apache、mysql和php的编译参数May 14, 2023 pm 10:22 PM

Linux下如何查看nginx、apache、mysql和php的编译参数May 14, 2023 pm 10:22 PM快速查看服务器软件的编译参数:1、nginx编译参数:your_nginx_dir/sbin/nginx-v2、apache编译参数:catyour_apache_dir/build/config.nice3、php编译参数:your_php_dir/bin/php-i|grepconfigure4、mysql编译参数:catyour_mysql_dir/bin/mysqlbug|grepconfigure以下是完整的实操例子:查看获取nginx的编译参数:[root@www~]#/usr/lo

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Zend Studio 13.0.1

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.