In short, nginx is asynchronous and non-blocking: it relies on epoll and on heavily optimized low-level code.

In more detail, the answer lies in the design of nginx's process model and event model.

Process model

nginx adopts a master process and multiple worker processes.

The master process does not handle requests itself. It reads the configuration, opens the listening sockets, and starts the worker processes; the workers then accept and handle incoming connections directly.

The master process also monitors the workers and restarts any that exit unexpectedly, which keeps the service reliable.

The number of worker processes is usually set to match the number of CPU cores. An nginx worker differs from an Apache process. In Apache's process-per-request model (the prefork MPM), each process handles only one request at a time, so Apache may start hundreds or even thousands of processes. An nginx worker handles many requests at the same time; the number is bounded mainly by memory and, in practice, by configured limits such as worker_connections and the open file descriptor limit.
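The master/worker split can be sketched in a few lines of Python. This is only an illustration of the pattern, not nginx's actual code: the names `start_master`, `wait_for_workers`, and `conns_per_worker` are hypothetical, the sketch assumes a Unix-like system where `os.fork` is available, and each worker exits after a fixed number of connections so that the example terminates.

```python
import os
import socket


def start_master(num_workers, conns_per_worker=1):
    """Open the listening socket once, then fork workers that share it.

    Returns (listener, worker_pids) to the master. Each worker accepts
    `conns_per_worker` connections, replies with its own PID, and exits;
    a real worker would loop indefinitely.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(128)

    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            # Child (worker): accept directly on the inherited socket.
            exit_code = 1
            try:
                for _ in range(conns_per_worker):
                    conn, _addr = listener.accept()
                    conn.sendall(str(os.getpid()).encode())
                    conn.close()
                exit_code = 0
            finally:
                os._exit(exit_code)  # never fall back into the master's code
        worker_pids.append(pid)  # parent (master) only tracks the worker
    return listener, worker_pids


def wait_for_workers(worker_pids):
    """Master-side monitoring: reap each worker and return {pid: exit code}."""
    exit_codes = {}
    for pid in worker_pids:
        _pid, status = os.waitpid(pid, 0)
        exit_codes[pid] = os.WEXITSTATUS(status)
    return exit_codes
```

Note that the master never calls `accept` here: it only creates the socket and tracks the workers, while every connection is answered by whichever worker picks it up. A caller would connect to `listener.getsockname()` and then call `wait_for_workers` to collect the exit codes.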

Event model

nginx is asynchronous and non-blocking.

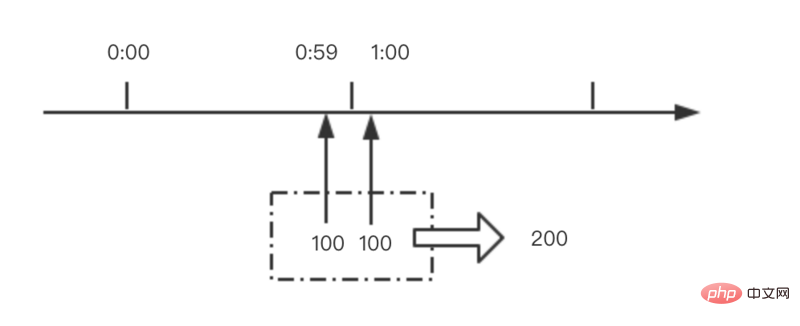

Each incoming request is picked up by a worker process, but the worker does not stay with it from start to finish. It processes the request only up to a point where blocking could occur, such as forwarding the request to an upstream (backend) server and waiting for the response. Rather than waiting idly, the worker sends the request and registers an event: "When the upstream responds, notify me and I will continue." It is then free to do other work. If another request arrives in the meantime, the worker handles it in the same way. Once the upstream server responds, the event fires, the worker picks the request back up, and processing continues.

By the nature of a web server's work, each request spends most of its lifetime in network transmission; relatively little time is spent computing on the server itself. This is the secret to handling high concurrency with only a few processes.
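The "register an event and move on" behaviour described above can be sketched with Python's standard `selectors` module. This is a simplified illustration under stated assumptions, not nginx's implementation: `run_worker` and `fake_upstream` are hypothetical names, the backend is simulated by a thread on the far end of a `socketpair`, and requests and replies are assumed small enough to fit in a single `recv`.

```python
import selectors
import socket
import threading


def fake_upstream(sock):
    """Hypothetical backend: answer one request, then close."""
    data = sock.recv(4096)
    sock.sendall(b"upstream:" + data)
    sock.close()


def run_worker(clients):
    """Serve all `clients` from one thread; return how many were completed."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
    done = 0

    def on_upstream_reply(upstream, client):
        # The event fired: resume the request that was parked earlier.
        nonlocal done
        client.sendall(upstream.recv(4096))
        sel.unregister(upstream)
        upstream.close()
        client.close()
        done += 1

    def on_client_request(client, _unused):
        request = client.recv(4096)
        sel.unregister(client)
        upstream, backend_end = socket.socketpair()
        threading.Thread(target=fake_upstream, args=(backend_end,),
                         daemon=True).start()
        upstream.sendall(request)
        upstream.setblocking(False)
        # "When the upstream responds, notify me and I will continue."
        sel.register(upstream, selectors.EVENT_READ, (on_upstream_reply, client))

    for client in clients:
        client.setblocking(False)
        sel.register(client, selectors.EVENT_READ, (on_client_request, None))

    while done < len(clients):
        for key, _events in sel.select():  # sleeps until some socket is ready
            callback, arg = key.data
            callback(key.fileobj, arg)
    sel.close()
    return done
```

The worker never blocks on a single upstream. After forwarding a request it returns to `sel.select()`, so other clients are served while earlier requests are still waiting for their backend.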

IO multiplexing model epoll

epoll: the kernel maintains a list of ready file descriptors, and epoll_wait only has to check whether that list is empty to know whether any descriptor is ready. The kernel implements this with callbacks: for each registered sockfd it installs a callback with the device driver, and when an event occurs on that sockfd the callback adds it to the ready list. Idle descriptors are never added to the list.

select(): the kernel polls every descriptor in the set to check whether it is ready. The descriptors are stored in fd_set, a bitmap indexed by fd in which each bit is 0 or 1.

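poll(): like select(), the kernel still scans every descriptor on each call, but the descriptors are passed as an array of pollfd structures rather than a fixed-size bitmap, so the size of the set is not capped by fd_set.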

[Summary]: The biggest advantage of epoll over select is that its efficiency does not degrade as the number of sockfds grows, because the cost of a wait depends on how many descriptors are ready rather than on how many are being watched.
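The difference in interface can be seen from Python, whose `select` module wraps both system calls. The following is a minimal sketch, assuming Linux (where `select.epoll` exists); the helper names `ready_with_select`, `make_epoll`, and `ready_with_epoll` are hypothetical. It shows how the two calls are used, not how fast they are.

```python
import select
import socket


def ready_with_select(socks, timeout=0.1):
    """select(): hand the whole interest set to the kernel on every call."""
    readable, _writable, _errors = select.select(socks, [], [], timeout)
    return {s.fileno() for s in readable}


def make_epoll(socks):
    """epoll: register each descriptor once, up front (Linux only)."""
    ep = select.epoll()
    for s in socks:
        ep.register(s.fileno(), select.EPOLLIN)
    return ep


def ready_with_epoll(ep, timeout=0.1):
    """Each wait returns only the descriptors that are actually ready."""
    return {fd for fd, _events in ep.poll(timeout)}
```

With `select`, the full list of sockets travels to the kernel on each call and is scanned there. With epoll, the interest set lives in the kernel after `make_epoll`, and every later `ready_with_epoll` call receives just the ready descriptors, however many idle ones are registered.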

For more Nginx related technical articles, please visit the Nginx usage tutorial column to learn!

The above is the detailed content of How nginx achieves high concurrency. For more information, please follow other related articles on the PHP Chinese website!

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM本篇文章给大家带来了关于nginx的相关知识,其中主要介绍了nginx拦截爬虫相关的,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

nginx限流模块源码分析May 11, 2023 pm 06:16 PM

nginx限流模块源码分析May 11, 2023 pm 06:16 PM高并发系统有三把利器:缓存、降级和限流;限流的目的是通过对并发访问/请求进行限速来保护系统,一旦达到限制速率则可以拒绝服务(定向到错误页)、排队等待(秒杀)、降级(返回兜底数据或默认数据);高并发系统常见的限流有:限制总并发数(数据库连接池)、限制瞬时并发数(如nginx的limit_conn模块,用来限制瞬时并发连接数)、限制时间窗口内的平均速率(nginx的limit_req模块,用来限制每秒的平均速率);另外还可以根据网络连接数、网络流量、cpu或内存负载等来限流。1.限流算法最简单粗暴的

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM实验环境前端nginx:ip192.168.6.242,对后端的wordpress网站做反向代理实现复杂均衡后端nginx:ip192.168.6.36,192.168.6.205都部署wordpress,并使用相同的数据库1、在后端的两个wordpress上配置rsync+inotify,两服务器都开启rsync服务,并且通过inotify分别向对方同步数据下面配置192.168.6.205这台服务器vim/etc/rsyncd.confuid=nginxgid=nginxport=873ho

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AM

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AMnginx php403错误的解决办法:1、修改文件权限或开启selinux;2、修改php-fpm.conf,加入需要的文件扩展名;3、修改php.ini内容为“cgi.fix_pathinfo = 0”;4、重启php-fpm即可。

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM跨域是开发中经常会遇到的一个场景,也是面试中经常会讨论的一个问题。掌握常见的跨域解决方案及其背后的原理,不仅可以提高我们的开发效率,还能在面试中表现的更加

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PM

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PMnginx部署react刷新404的解决办法:1、修改Nginx配置为“server {listen 80;server_name https://www.xxx.com;location / {root xxx;index index.html index.htm;...}”;2、刷新路由,按当前路径去nginx加载页面即可。

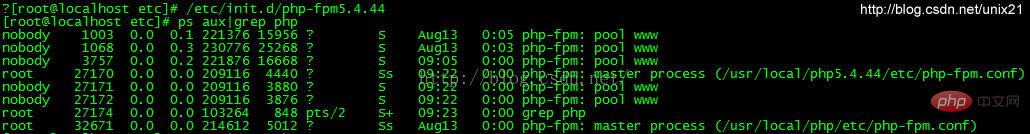

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PM

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PMlinux版本:64位centos6.4nginx版本:nginx1.8.0php版本:php5.5.28&php5.4.44注意假如php5.5是主版本已经安装在/usr/local/php目录下,那么再安装其他版本的php再指定不同安装目录即可。安装php#wgethttp://cn2.php.net/get/php-5.4.44.tar.gz/from/this/mirror#tarzxvfphp-5.4.44.tar.gz#cdphp-5.4.44#./configure--pr

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AM

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AMnginx禁止访问php的方法:1、配置nginx,禁止解析指定目录下的指定程序;2、将“location ~^/images/.*\.(php|php5|sh|pl|py)${deny all...}”语句放置在server标签内即可。

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

WebStorm Mac version

Useful JavaScript development tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.