To give rendered objects a material appearance close to that of real objects, textures are generally used. This article uses two main types of texture maps: diffuse reflection maps and specular highlight maps. A diffuse reflection map supplies the surface color for both the diffuse light and the ambient light terms.

See the demo for the actual effect: Texture mapping

2D texture

Texture maps are implemented with WebGL textures. Commonly used texture types are 2D textures, cube map textures, and 3D textures (3D textures are only available in WebGL2). The most basic 2D texture is enough for the effects in this section. Let's look at the APIs needed to use textures.

Texture coordinates have their origin at the lower-left corner, the opposite of the upper-left origin we usually work with for images. For convenience when setting coordinates, flip the image along the Y axis as it is uploaded:

gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, 1);
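What the flag does can be pictured with a CPU-side sketch: reverse the order of the pixel rows before upload, so that the image's bottom row becomes the first row WebGL receives, which is the row at t = 0. `flipRowsY` is a hypothetical helper for illustration only; with the flag set, WebGL does this for you.

```javascript
// Hypothetical helper: reverse the row order of a tightly packed RGBA8 image,
// which is what gl.UNPACK_FLIP_Y_WEBGL asks WebGL to do while unpacking pixels.
function flipRowsY(pixels, width, height) {
  const rowBytes = width * 4; // 4 bytes per RGBA texel
  const out = new Uint8Array(pixels.length);
  for (let y = 0; y < height; y++) {
    const src = y * rowBytes;
    const dst = (height - 1 - y) * rowBytes; // row y moves to the mirrored position
    out.set(pixels.subarray(src, src + rowBytes), dst);
  }
  return out;
}

// A 1x2 image stored top row first: a red row above a blue row.
const image = new Uint8Array([255, 0, 0, 255, 0, 0, 255, 255]);
const flipped = flipRowsY(image, 1, 2);
console.log(Array.from(flipped)); // [0, 0, 255, 255, 255, 0, 0, 255]: blue row now comes first
```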

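Next, activate a texture unit and bind the texture to it. gl.TEXTURE0 is texture unit 0; the units count upward from there (gl.TEXTURE1, gl.TEXTURE2, and so on) up to an implementation-defined maximum. gl.TEXTURE_2D is the target for 2D textures.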

gl.activeTexture(gl.TEXTURE0); // activate texture unit 0
gl.bindTexture(gl.TEXTURE_2D, texture); // bind the texture to it
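Because the unit constants are consecutive (gl.TEXTURE1 equals gl.TEXTURE0 + 1, and so on), a unit can be selected by index. The sketch below wraps the two calls in a hypothetical helper, `bindTextureToUnit`, which also checks the index against gl.MAX_COMBINED_TEXTURE_IMAGE_UNITS and returns the plain index. That index is what a sampler2D uniform expects (0, 1, ...), not the gl.TEXTURE0 + n enum.

```javascript
// Hypothetical helper: bind `texture` to texture unit `unit` and return the
// integer that a sampler2D uniform must be set to for that unit.
function bindTextureToUnit(gl, texture, unit) {
  const maxUnits = gl.getParameter(gl.MAX_COMBINED_TEXTURE_IMAGE_UNITS);
  if (!Number.isInteger(unit) || unit < 0 || unit >= maxUnits) {
    throw new RangeError(`texture unit ${unit} is out of range [0, ${maxUnits})`);
  }
  // The unit enums are consecutive, so gl.TEXTURE0 + 1 === gl.TEXTURE1, etc.
  gl.activeTexture(gl.TEXTURE0 + unit);
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // Samplers take the unit index (0, 1, ...), not the gl.TEXTUREn enum.
  return unit;
}
```

In a browser it could be used as `gl.uniform1i(gl.getUniformLocation(program, "u_samplerD"), bindTextureToUnit(gl, texture, 0))`, assuming `program` and `texture` already exist.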

Next, set the texture parameters. This API is very important, and it is also the most complex part of working with textures.

gl.texParameteri(target, pname, param) assigns the value param to the parameter pname of the texture object bound to target. Parameters:

target: gl.TEXTURE_2D or gl.TEXTURE_CUBE_MAP

pname: one of the following four texture parameters:

- Magnification filter (gl.TEXTURE_MAG_FILTER): how to compute the texel color when the texture is drawn larger than its own size. For example, when a 16×16 texture image is mapped onto a 32×32 pixel area, the texture is magnified to twice its original size. The default value is gl.LINEAR.

- Minification filter (gl.TEXTURE_MIN_FILTER): how to compute the texel color when the texture is drawn smaller than its own size. For example, when a 32×32 texture image is mapped onto a 16×16 pixel area, the texture is shrunk to half its original size. The default value is gl.NEAREST_MIPMAP_LINEAR.

- Horizontal wrapping (gl.TEXTURE_WRAP_S): how texture coordinates outside [0, 1] in the horizontal (s) direction are handled, i.e. what fills the area to the left and right of the texture image. The default value is gl.REPEAT.

- Vertical wrapping (gl.TEXTURE_WRAP_T): the same for the vertical (t) direction, i.e. the area above and below the texture image. The default value is gl.REPEAT.

param: the value assigned to the texture parameter.

Values assignable to gl.TEXTURE_MAG_FILTER and gl.TEXTURE_MIN_FILTER:

- gl.NEAREST: use the color of the texel closest to the center of the pixel being drawn.

- gl.LINEAR: use the weighted average of the colors of the four texels closest to the center of the pixel being drawn. This gives better image quality than gl.NEAREST, at a higher cost.

gl.TEXTURE_MIN_FILTER additionally accepts the mipmap variants (gl.NEAREST_MIPMAP_NEAREST, gl.LINEAR_MIPMAP_NEAREST, gl.NEAREST_MIPMAP_LINEAR, gl.LINEAR_MIPMAP_LINEAR), which require the texture to have mipmaps.

Values assignable to gl.TEXTURE_WRAP_S and gl.TEXTURE_WRAP_T:

- gl.REPEAT: tile the texture.

- gl.MIRRORED_REPEAT: tile the texture, mirroring every other repetition.

- gl.CLAMP_TO_EDGE: clamp coordinates so that the texels at the image's edge are used.
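The filtering and wrapping rules above can be made concrete with a small CPU-side model. The sketch below samples a single-channel texture with nearest or bilinear filtering (no mipmaps) under each wrap mode. `wrapIndex`, `texel`, and `sample` are hypothetical names, and real GPUs use limited-precision arithmetic, so treat this as an approximation for intuition rather than a bit-exact reference.

```javascript
// Simplified CPU model of WebGL texture sampling (no mipmaps), for intuition only.
// The texture is a single-channel array of width * height values; row 0 is at t = 0.

// Map an integer texel index into [0, size) according to a wrap mode.
function wrapIndex(i, size, mode) {
  switch (mode) {
    case "REPEAT":
      return ((i % size) + size) % size;
    case "MIRRORED_REPEAT": {
      const period = 2 * size; // one normal copy followed by one mirrored copy
      const m = ((i % period) + period) % period;
      return m < size ? m : period - 1 - m;
    }
    case "CLAMP_TO_EDGE":
      return Math.min(Math.max(i, 0), size - 1);
    default:
      throw new Error(`unknown wrap mode ${mode}`);
  }
}

function texel(tex, i, j, wrapS, wrapT) {
  const x = wrapIndex(i, tex.width, wrapS);
  const y = wrapIndex(j, tex.height, wrapT);
  return tex.data[y * tex.width + x];
}

// Sample at texture coordinate (s, t) with filter "NEAREST" or "LINEAR".
function sample(tex, s, t, filter, wrapS = "REPEAT", wrapT = "REPEAT") {
  const u = s * tex.width;
  const v = t * tex.height;
  if (filter === "NEAREST") {
    // The single texel whose area contains the sample point.
    return texel(tex, Math.floor(u), Math.floor(v), wrapS, wrapT);
  }
  // LINEAR: weighted average of the 2x2 texels around the sample point,
  // measured from texel centers (hence the -0.5).
  const i0 = Math.floor(u - 0.5);
  const j0 = Math.floor(v - 0.5);
  const a = u - 0.5 - i0; // weight of the right-hand column
  const b = v - 0.5 - j0; // weight of the upper row
  return (
    (1 - a) * (1 - b) * texel(tex, i0, j0, wrapS, wrapT) +
    a * (1 - b) * texel(tex, i0 + 1, j0, wrapS, wrapT) +
    (1 - a) * b * texel(tex, i0, j0 + 1, wrapS, wrapT) +
    a * b * texel(tex, i0 + 1, j0 + 1, wrapS, wrapT)
  );
}

// A 2x1 texture: a black texel next to a white one.
const tex = { width: 2, height: 1, data: [0, 255] };
console.log(sample(tex, 0.5, 0.5, "NEAREST")); // 255
console.log(sample(tex, 0.5, 0.5, "LINEAR", "CLAMP_TO_EDGE")); // 127.5
console.log(sample(tex, 1.25, 0.5, "NEAREST", "REPEAT")); // 0 (wrapped back to the first texel)
```

Sampling exactly on the boundary between the two texels shows the difference: gl.NEAREST picks one texel, while gl.LINEAR blends both equally.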

For example:

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
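These settings are not arbitrary. In WebGL1, a texture whose width or height is not a power of two is only usable when both wrap modes are gl.CLAMP_TO_EDGE and the minification filter is gl.NEAREST or gl.LINEAR; otherwise it renders as black. Power-of-two textures may use gl.REPEAT and mipmaps instead (WebGL2 removes this restriction). The sketch below picks parameters by image size. `isPowerOf2`, `chooseTextureParams`, and `applyTextureParams` are hypothetical helpers, and gl.LINEAR_MIPMAP_LINEAR for power-of-two images is just one reasonable choice.

```javascript
// WebGL1 rule: non-power-of-two (NPOT) textures must use CLAMP_TO_EDGE wrapping
// and a non-mipmap minification filter, or they are incomplete.
function isPowerOf2(n) {
  return n > 0 && (n & (n - 1)) === 0;
}

// Hypothetical helper: choose texParameteri values, expressed as gl property names.
function chooseTextureParams(width, height) {
  if (isPowerOf2(width) && isPowerOf2(height)) {
    // POT textures may repeat and use mipmaps.
    return {
      generateMipmap: true,
      TEXTURE_WRAP_S: "REPEAT",
      TEXTURE_WRAP_T: "REPEAT",
      TEXTURE_MIN_FILTER: "LINEAR_MIPMAP_LINEAR",
    };
  }
  return {
    generateMipmap: false,
    TEXTURE_WRAP_S: "CLAMP_TO_EDGE",
    TEXTURE_WRAP_T: "CLAMP_TO_EDGE",
    TEXTURE_MIN_FILTER: "LINEAR",
  };
}

// Apply the choice to the texture bound to gl.TEXTURE_2D.
// Call this after gl.texImage2D, since generateMipmap needs level 0 to be uploaded.
function applyTextureParams(gl, params) {
  if (params.generateMipmap) gl.generateMipmap(gl.TEXTURE_2D);
  for (const pname of ["TEXTURE_WRAP_S", "TEXTURE_WRAP_T", "TEXTURE_MIN_FILTER"]) {
    gl.texParameteri(gl.TEXTURE_2D, gl[pname], gl[params[pname]]);
  }
}
```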

gl.texImage2D assigns pixel data to the bound texture object. Across WebGL1 and WebGL2 this API has more than a dozen overloads, and the supported formats and types are very diverse. The pixels argument can be an image, a canvas, or a video, among others. Here we only look at the WebGL1 calling forms:

// WebGL1:
void gl.texImage2D(target, level, internalformat, width, height, border, format, type, ArrayBufferView? pixels);
void gl.texImage2D(target, level, internalformat, format, type, ImageData? pixels);
void gl.texImage2D(target, level, internalformat, format, type, HTMLImageElement? pixels);
void gl.texImage2D(target, level, internalformat, format, type, HTMLCanvasElement? pixels);
void gl.texImage2D(target, level, internalformat, format, type, HTMLVideoElement? pixels);
void gl.texImage2D(target, level, internalformat, format, type, ImageBitmap? pixels);
// WebGL2:
//...
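The typed-array overload listed first is handy for generating texture data in code, for example a placeholder or a test pattern. Below is a hedged sketch: `makeCheckerboard` and `uploadPixels` are hypothetical helpers, and `uploadPixels` assumes a WebGL1 context with the target texture already bound. In WebGL the border argument must be 0.

```javascript
// Hypothetical helper: build an RGBA8 checkerboard as a Uint8Array, suitable for
// the ArrayBufferView overload of gl.texImage2D.
function makeCheckerboard(size, cellSize) {
  const pixels = new Uint8Array(size * size * 4);
  for (let y = 0; y < size; y++) {
    for (let x = 0; x < size; x++) {
      const on = (Math.floor(x / cellSize) + Math.floor(y / cellSize)) % 2 === 0;
      const value = on ? 255 : 0;
      const offset = (y * size + x) * 4;
      pixels[offset] = value;     // R
      pixels[offset + 1] = value; // G
      pixels[offset + 2] = value; // B
      pixels[offset + 3] = 255;   // A (fully opaque)
    }
  }
  return pixels;
}

// Upload with the 9-argument WebGL1 overload (level 0, border 0).
function uploadPixels(gl, pixels, size) {
  gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, size, size, 0, gl.RGBA, gl.UNSIGNED_BYTE, pixels);
}
```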

I have wrapped these steps into a texture-loading function. Check the documentation for the full calling form of each API; here the priority is to get the effect working first.

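function loadTexture(url, unit = 0) {
  const texture = gl.createTexture();
  gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, 1);
  // Select the texture unit this texture will live on (unit 0 by default)
  gl.activeTexture(gl.TEXTURE0 + unit);
  gl.bindTexture(gl.TEXTURE_2D, texture);
  // CLAMP_TO_EDGE plus a non-mipmap filter also works for non-power-of-two images in WebGL1
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
  gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);
  const textureInfo = {
    width: 1, // placeholder size until the image has loaded
    height: 1,
    texture: texture,
  };
  const img = new Image();
  return new Promise((resolve, reject) => {
    img.onload = function () {
      textureInfo.width = img.width;
      textureInfo.height = img.height;
      // Another load may have changed the active unit meanwhile, so select ours again
      gl.activeTexture(gl.TEXTURE0 + unit);
      gl.bindTexture(gl.TEXTURE_2D, textureInfo.texture);
      gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, img);
      resolve(textureInfo);
    };
    img.onerror = () => reject(new Error(`Failed to load texture: ${url}`));
    img.src = url;
  });
}

Diffuse reflection map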

Start with the diffuse reflection map. Download a floor texture set from the Internet; such a set contains several types of maps.

The vertex buffers need a texture coordinate for each vertex, so that the corresponding texture pixels (called texels) can be looked up through those coordinates.
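For a flat quad like the floor in this section, the texture coordinates need not be typed by hand: on the y = 0 plane spanning x, z in [-1, 1], the floor's values follow u = (x + 1) / 2 and v = (1 - z) / 2. The sketch below derives them from the positions. `planarTexcoords` is a hypothetical helper, and the rule only applies to this planar layout.

```javascript
// Map each vertex of a quad on the y = 0 plane (x, z in [-1, 1]) to a texture
// coordinate: u grows with x, and v grows toward -z.
function planarTexcoords(positions) {
  const texcoords = [];
  for (let i = 0; i < positions.length; i += 3) {
    const x = positions[i];
    const z = positions[i + 2];
    texcoords.push((x + 1) / 2, (1 - z) / 2);
  }
  return texcoords;
}

const floorPositions = [-1, 0, -1, -1, 0, 1, 1, 0, -1, 1, 0, 1];
console.log(planarTexcoords(floorPositions)); // [0, 1, 0, 0, 1, 1, 1, 0]
```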

const arrays = {
  position: [
    -1, 0, -1,
    -1, 0, 1,
    1, 0, -1,
    1, 0, 1
  ],
  texcoord: [
    0.0, 1.0,
    0.0, 0.0,
    1.0, 1.0,
    1.0, 0.0
  ],
  // The quad lies in the y = 0 plane, so its normal points along +Y
  normal: [ 0, 1, 0, 0, 1, 0, 0, 1, 0, 0, 1, 0 ],
};

The only difference in the vertex shader is the added texture coordinate, which is passed to the fragment shader through a varying so that it gets interpolated across each triangle.
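To see what interpolation means for a varying such as v_texcoord, here is a simplified sketch: for each fragment, the rasterizer blends the three vertex values of its triangle with barycentric weights that sum to 1. `interpolateVarying` is a hypothetical name, and the perspective correction that real GPUs apply is deliberately left out.

```javascript
// Blend the three vertex values of a varying with barycentric weights (w0 + w1 + w2 = 1).
// Perspective-correct interpolation, which GPUs perform by default, is omitted here.
function interpolateVarying(values, weights) {
  const [w0, w1, w2] = weights;
  return values[0].map((_, k) => w0 * values[0][k] + w1 * values[1][k] + w2 * values[2][k]);
}

// Texture coordinates of the first triangle of the floor quad.
const triTexcoords = [[0, 1], [0, 0], [1, 1]];
// A fragment halfway along the edge between the second and third vertices.
console.log(interpolateVarying(triTexcoords, [0, 0.5, 0.5])); // [0.5, 0.5]
```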

//...
attribute vec2 a_texcoord;
varying vec2 v_texcoord;
void main() {
  //...
  v_texcoord = a_texcoord;
}

The fragment shader needs more changes. It uses texture2D to fetch the texel at the interpolated texture coordinate and uses that texel in place of the previous color. The relevant fragment shader code:

//...
vec3 normal = normalize(v_normal);
vec4 diffMap = texture2D(u_samplerD, v_texcoord);
// light direction
vec3 lightDirection = normalize(u_lightPosition - v_position);
// cosine of the angle between the light direction and the normal
float nDotL = max(dot(lightDirection, normal), 0.0);
// diffuse light intensity
vec3 diffuse = u_diffuseColor * nDotL * diffMap.rgb;
// ambient light intensity
vec3 ambient = u_ambientColor * diffMap.rgb;
//...
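To check the diffuse and ambient terms by hand, here is the same computation written in JavaScript. It mirrors the shader above line for line; the helper names and the sample inputs (including the texel color) are assumptions for illustration.

```javascript
// CPU mirror of the diffuse and ambient terms in the fragment shader above.
// Vectors are [x, y, z] arrays; colors and texels are RGB arrays in [0, 1].
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const mul = (a, b) => [a[0] * b[0], a[1] * b[1], a[2] * b[2]]; // component-wise, like vec3 * vec3
const scale = (a, s) => [a[0] * s, a[1] * s, a[2] * s];
const normalize = (a) => {
  const len = Math.hypot(a[0], a[1], a[2]);
  return [a[0] / len, a[1] / len, a[2] / len];
};

function diffuseAndAmbient({ normal, position, lightPosition, diffuseColor, ambientColor, diffMap }) {
  const n = normalize(normal);
  const lightDirection = normalize(sub(lightPosition, position));
  // Cosine of the angle between light direction and normal, clamped at 0
  const nDotL = Math.max(dot(lightDirection, n), 0);
  // Light color x cosine x texel color
  const diffuse = scale(mul(diffuseColor, diffMap), nDotL);
  // Ambient light is also tinted by the texel color
  const ambient = mul(ambientColor, diffMap);
  return { nDotL, diffuse, ambient };
}

const result = diffuseAndAmbient({
  normal: [0, 1, 0],
  position: [0, 0, 0],
  lightPosition: [0, 2, 0], // light directly above the fragment
  diffuseColor: [1, 1, 1],
  ambientColor: [0.2, 0.2, 0.2],
  diffMap: [0.5, 0.4, 0.3], // hypothetical texel from the diffuse map
});
console.log(result.diffuse); // [0.5, 0.4, 0.3]
```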

On the JavaScript side, load the texture image, pass the texture unit index to the sampler uniform, and then render:

//...
(async function () {
  const ret = await loadTexture('/model/floor_tiles_06_diff_1k.jpg');
  setUniforms(program, {
    u_samplerD: 0 // texture unit 0
  });
  //...
  draw();
})();

In the result, the specular highlight looks far too glaring: a tiled floor is not smooth like a mirror, so it should not reflect light that strongly.

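Specular highlight map

To get a more realistic highlight, continue with the specular highlight map. The principle is the same as for the diffuse reflection map: replace the corresponding highlight color with the texel from the specular highlight map.

Below are the fragment shader changes for the highlight part: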

//...
vec3 normal = normalize(v_normal);
vec4 diffMap = texture2D(u_samplerD, v_texcoord);
vec4 specMap = texture2D(u_samplerS, v_texcoord);
// light direction
vec3 lightDirection = normalize(u_lightPosition - v_position);
// cosine of the angle between the light direction and the normal
float nDotL = max(dot(lightDirection, normal), 0.0);
// diffuse light intensity
vec3 diffuse = u_diffuseColor * nDotL * diffMap.rgb;
// ambient light intensity
vec3 ambient = u_ambientColor * diffMap.rgb;
// specular highlight
vec3 eyeDirection = normalize(u_viewPosition - v_position); // direction toward the viewer
// half vector between the light and view directions (Blinn-Phong)
vec3 halfwayDir = normalize(lightDirection + eyeDirection);
float specularIntensity = pow(max(dot(normal, halfwayDir), 0.0), u_shininess);
vec3 specular = (vec3(0.2, 0.2, 0.2) + specMap.rgb) * specularIntensity;
//...
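The specular term can be checked the same way. This sketch reproduces the Blinn-Phong half-vector computation from the shader above, including its constant 0.2 added to the specular-map texel, and compares a bright texel with a dark one. Helper names and inputs are hypothetical.

```javascript
// CPU mirror of the specular part of the fragment shader above (Blinn-Phong).
// Vectors are [x, y, z] arrays; specMap is an RGB texel in [0, 1].
const add = (a, b) => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (a) => {
  const len = Math.hypot(a[0], a[1], a[2]);
  return [a[0] / len, a[1] / len, a[2] / len];
};

function specularTerm({ normal, position, lightPosition, viewPosition, shininess, specMap }) {
  const n = normalize(normal);
  const lightDirection = normalize(sub(lightPosition, position));
  const eyeDirection = normalize(sub(viewPosition, position)); // toward the viewer
  const halfwayDir = normalize(add(lightDirection, eyeDirection)); // half vector
  const specularIntensity = Math.pow(Math.max(dot(n, halfwayDir), 0), shininess);
  // Same scaling as the shader: a constant 0.2 plus the specular-map texel.
  return specMap.map((c) => (0.2 + c) * specularIntensity);
}

// Light and viewer placed symmetrically, so the half vector equals the normal.
const scene = {
  normal: [0, 1, 0],
  position: [0, 0, 0],
  lightPosition: [1, 1, 0],
  viewPosition: [-1, 1, 0],
  shininess: 32,
};
console.log(specularTerm({ ...scene, specMap: [0.8, 0.8, 0.8] })); // glossy texel: about [1, 1, 1]
console.log(specularTerm({ ...scene, specMap: [0, 0, 0] }));       // dark texel: about [0.2, 0.2, 0.2]
```

Where the specular map is dark, the highlight drops to the 0.2 base, which is what tones down the glare seen with the diffuse map alone.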

On the JavaScript side, load the diffuse reflection map and the specular highlight map together:

//...
(async function () {
  const ret = await Promise.all([
    loadTexture('/model/floor_tiles_06_diff_1k.jpg'),
    loadTexture('/model/floor_tiles_06_spec_1k.jpg', 1)
  ]);
  setUniforms(program, {
    u_samplerD: 0, // texture unit 0
    u_samplerS: 1  // texture unit 1
  });
  //...
  draw();
})();

The final result is noticeably closer to a real floor.

The above is the detailed content of Web Learning: How to Use Texture Maps, from the PHP Chinese website.

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

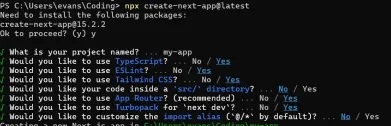

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

SublimeText3 English version

Recommended: Win version, supports code prompts!

WebStorm Mac version

Useful JavaScript development tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

Zend Studio 13.0.1

Powerful PHP integrated development environment