
Detailed explanation of the Linux virtual network device veth-pair

- 若昕 (reposted)

- 2019-04-01 13:03:50, 6268 views

This article introduces veth-pair and its connectivity, as well as the connectivity between two namespaces.

01 What is veth-pair

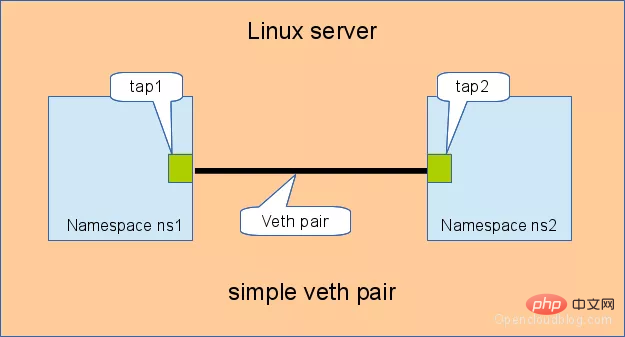

As the name suggests, a veth-pair is a pair of virtual network interfaces. Unlike tap/tun devices, veth devices always come in pairs: one end of each device is attached to the protocol stack, and the other ends are connected to each other, as shown in the figure.

Because of this property, a veth-pair often acts as a bridge between virtual network devices. Typical examples are connections between two namespaces, between a Bridge and OVS, and between Docker containers. These connections can be combined into very complex virtual network structures, such as OpenStack Neutron.
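A minimal sketch of how to confirm that two ends really are paired, assuming iproute2 is installed. On a typical system, ip -o link show prints a veth end as name@peer. The helper peer_from_link_line is a hypothetical name introduced here, and the example line in the usage note illustrates the expected shape rather than captured output.

```shell
#!/bin/sh
# Hypothetical helper: given one line of `ip -o link show` output,
# print the part after "@" in the interface name (the peer end).
# Returns non-zero if the interface name has no "@peer" suffix.
peer_from_link_line() {
    name=$(printf '%s\n' "$1" | awk -F': ' '{print $2}')
    case "$name" in
        *@*) printf '%s\n' "${name#*@}" ;;
        *)   return 1 ;;
    esac
}

# Intended use, as root (these commands change the host's network config):
#   ip link add veth0 type veth peer name veth1
#   peer_from_link_line "$(ip -o link show dev veth0)"
```

For a line shaped like "5: veth0@veth1: <BROADCAST,MULTICAST> mtu 1500 ...", the helper prints veth1. Once the peer has been moved into another namespace, the suffix is shown as an interface index such as if6 instead of a name.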

02 veth-pair connectivity

We assign the IPs 10.1.1.2 and 10.1.1.3 to veth0 and veth1 in the figure above, and then ping veth1 from veth0. In theory the ping should succeed because both addresses are on the same network segment, but in practice it fails.
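The article does not show how veth0 and veth1 were created and addressed for this test. One plausible setup is sketched below, assuming iproute2 and a /24 prefix (the prefix length is an assumption; the text gives only the addresses). The function veth_setup_cmds is a hypothetical helper that only prints the commands, so nothing changes until its output is run as root.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) the commands that create a veth
# pair in the current namespace and give each end an address.
#   $1, $2  interface names      $3, $4  addresses in CIDR form
veth_setup_cmds() {
    cat <<EOF
ip link add $1 type veth peer name $2
ip addr add $3 dev $1
ip addr add $4 dev $2
ip link set $1 up
ip link set $2 up
EOF
}

# Addresses from the text; the /24 prefix length is an assumption.
veth_setup_cmds veth0 veth1 10.1.1.2/24 10.1.1.3/24
# To apply as root:  veth_setup_cmds veth0 veth1 10.1.1.2/24 10.1.1.3/24 | sh -e
```

Printing instead of executing keeps the sketch safe to try on a machine whose network configuration should not be touched.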

Capture packets on veth0 with tcpdump to see what is happening:

    root@ubuntu:~# tcpdump -nnt -i veth0
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on veth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28
    ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28

Because veth0 and veth1 are on the same network segment and this is the first time they communicate, an ARP request is sent first. However, veth1 never answers the ARP request.

After some investigation, this turned out to be caused by restrictive default kernel settings on the Ubuntu system I use. The following configuration items need to be changed:

    echo 1 > /proc/sys/net/ipv4/conf/veth1/accept_local
    echo 1 > /proc/sys/net/ipv4/conf/veth0/accept_local
    echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter
    echo 0 > /proc/sys/net/ipv4/conf/veth0/rp_filter
    echo 0 > /proc/sys/net/ipv4/conf/veth1/rp_filter
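As a hedged aside on why these settings matter: according to the kernel's ip-sysctl documentation, accept_local lets an interface accept packets whose source address is local to the host, and rp_filter controls reverse-path source validation, with the larger of the all and per-interface values taking effect (which is presumably why conf/all/rp_filter is cleared as well). The sketch below only reads the current values so they can be compared before and after the change. The names show_veth_knobs and SYSCTL_ROOT are hypothetical and introduced here.

```shell
#!/bin/sh
# Hypothetical helper: print accept_local and rp_filter for each interface
# named on the command line. SYSCTL_ROOT is an assumption added so the
# function can be pointed at a copy of the tree; it defaults to /proc/sys.
show_veth_knobs() {
    root=${SYSCTL_ROOT:-/proc/sys}/net/ipv4/conf
    for dev in "$@"; do
        for knob in accept_local rp_filter; do
            file=$root/$dev/$knob
            if [ -r "$file" ]; then
                printf '%s %s=%s\n' "$dev" "$knob" "$(cat "$file")"
            else
                printf '%s %s=unreadable\n' "$dev" "$knob"
            fi
        done
    done
}

# Example: show_veth_knobs all veth0 veth1
```

Values written through /proc/sys do not persist across a reboot, so the check is also useful after restarting the machine.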

After making these changes, ping again:

    root@ubuntu:~# ping -I veth0 10.1.1.3 -c 2
    PING 10.1.1.3 (10.1.1.3) from 10.1.1.2 veth0: 56(84) bytes of data.
    64 bytes from 10.1.1.3: icmp_seq=1 ttl=64 time=0.047 ms
    64 bytes from 10.1.1.3: icmp_seq=2 ttl=64 time=0.064 ms
    --- 10.1.1.3 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 3008ms
    rtt min/avg/max/mdev = 0.047/0.072/0.113/0.025 ms

To see how this communication actually works, capture the packets on both ends.

For veth0 port:

    root@ubuntu:~# tcpdump -nnt -i veth0
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on veth0, link-type EN10MB (Ethernet), capture size 262144 bytes
    ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28
    ARP, Reply 10.1.1.3 is-at 5a:07:76:8e:fb:cd, length 28
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 1, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 2, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 3, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2244, seq 1, length 64

For veth1 port:

    root@ubuntu:~# tcpdump -nnt -i veth1
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on veth1, link-type EN10MB (Ethernet), capture size 262144 bytes
    ARP, Request who-has 10.1.1.3 tell 10.1.1.2, length 28
    ARP, Reply 10.1.1.3 is-at 5a:07:76:8e:fb:cd, length 28
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 1, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 2, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2189, seq 3, length 64
    IP 10.1.1.2 > 10.1.1.3: ICMP echo request, id 2244, seq 1, length 64

Strangely, no ICMP echo reply packets appear in either capture. So how does the ping succeed?

In fact, the echo reply travels over the loopback interface (lo). To confirm this, capture packets on lo:

    root@ubuntu:~# tcpdump -nnt -i lo
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on lo, link-type EN10MB (Ethernet), capture size 262144 bytes
    IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 2244, seq 1, length 64
    IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 2244, seq 2, length 64
    IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 2244, seq 3, length 64
    IP 10.1.1.3 > 10.1.1.2: ICMP echo reply, id 2244, seq 4, length 64

Why?

We will understand if we look at the entire communication process.

- First, the ping program constructs an ICMP echo request and sends it to the protocol stack through a socket.

- Because ping specifies the veth0 interface, the protocol stack first sends an ARP request if this is the first exchange; otherwise it hands the packet directly to veth0.

- Since veth0 is connected to veth1, the ICMP request is delivered directly to veth1.

- After receiving the request, veth1 hands it to the protocol stack at its end.

- The protocol stack sees that 10.1.1.3 is a local IP address, so it constructs an ICMP reply. It then checks the routing table and finds that the reply, whose destination 10.1.1.2 is also a local address, should go through the loopback interface, so it hands the reply to lo. (The local routing table is consulted first; run ip route show table 0 to see it.)

- After receiving the reply from the protocol stack, lo does nothing except hand it straight back to the protocol stack.

- When the protocol stack receives the reply, it finds a socket waiting for it and delivers the packet to that socket.

- The ping program waiting in user space sees the socket return and thus receives the ICMP reply.
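The routing decision described in the walkthrough above can be checked directly, assuming iproute2: ip route get ADDRESS prints the route the kernel would choose for that destination. The helper route_dev below is a hypothetical name; it simply extracts the word that follows "dev" in that output. The sample line in the comment illustrates the expected shape and was not captured on the author's machine.

```shell
#!/bin/sh
# Hypothetical helper: read `ip route get` output on stdin and print the
# output device, i.e. the word that follows "dev" on the first line
# that contains one. Prints nothing if no "dev" field is present.
route_dev() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Intended use on the host from section 02:
#   ip route get 10.1.1.2 | route_dev
# A line shaped like "local 10.1.1.2 dev lo src 10.1.1.2 ..." yields "lo".
```

If the helper reports lo for 10.1.1.2, that matches the capture above, where the echo replies appear on lo rather than on veth0 or veth1.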

The entire process is shown in the figure below:

03 Connectivity between two namespaces

Namespaces are a feature supported by Linux kernel 2.6.x and later, used mainly for resource isolation. With network namespaces, a single Linux system can be divided into multiple network subsystems. Each subsystem has its own network devices, protocol stack, and so on, and they do not affect one another.

What should we do if we need to communicate between namespaces? The answer is to use veth-pair as a bridge.

According to the connection method and scale, it can be divided into "direct connection", "connection through Bridge" and "connection through OVS".

3.1 Direct connection

Direct connection is the simplest method. As shown in the figure below, a single veth-pair connects the two namespaces directly.

Configure IP for veth-pair and test connectivity:

    # Create the namespaces
    ip netns add ns1
    ip netns add ns2
    # Create a veth-pair: veth0 and veth1
    ip link add veth0 type veth peer name veth1
    # Move veth0 and veth1 into the two namespaces
    ip link set veth0 netns ns1
    ip link set veth1 netns ns2
    # Assign an IP to veth0 and veth1 and bring them up
    ip netns exec ns1 ip addr add 10.1.1.2/24 dev veth0
    ip netns exec ns1 ip link set veth0 up
    ip netns exec ns2 ip addr add 10.1.1.3/24 dev veth1
    ip netns exec ns2 ip link set veth1 up
    # Ping veth1 from veth0
    [root@localhost ~]# ip netns exec ns1 ping 10.1.1.3
    PING 10.1.1.3 (10.1.1.3) 56(84) bytes of data.
    64 bytes from 10.1.1.3: icmp_seq=1 ttl=64 time=0.073 ms
    64 bytes from 10.1.1.3: icmp_seq=2 ttl=64 time=0.068 ms
    --- 10.1.1.3 ping statistics ---
    15 packets transmitted, 15 received, 0% packet loss, time 14000ms
    rtt min/avg/max/mdev = 0.068/0.084/0.201/0.032 ms

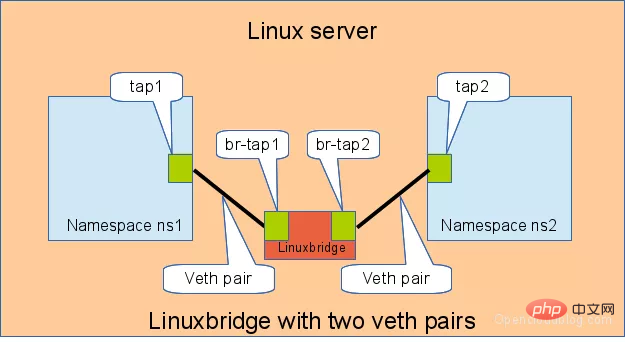
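Sections 3.1 to 3.3 reuse the names ns1, ns2, veth0 and veth1, so leftovers from one experiment can collide with the next. A possible cleanup is sketched below, assuming iproute2 and, for the last command, Open vSwitch. Deleting a namespace that no process is using also destroys the veth devices inside it, and deleting one end of a veth-pair removes its peer. The function teardown_cmds is a hypothetical helper that only prints commands; br0 and ovs-br are the bridge names used in 3.2 and 3.3.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) commands that undo the experiments.
# Each line ends in "|| true" so a missing object does not stop the rest.
teardown_cmds() {
    for ns in ns1 ns2; do
        echo "ip netns del $ns || true"
    done
    echo "ip link del br0 || true"                      # Linux bridge from 3.2
    echo "ovs-vsctl --if-exists del-br ovs-br || true"  # OVS bridge from 3.3
}

teardown_cmds
# To apply as root:  teardown_cmds | sh
```

After the cleanup, the namespaces have to be created again with ip netns add before the next experiment is run.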

3.2 Connect through Bridge

A Linux Bridge is equivalent to a switch and can forward traffic between two namespaces. Let's see what role the veth-pair plays here.

As shown below, two veth-pairs connect the two namespaces to the Bridge.

Similarly configure IP for veth-pair and test its connectivity:

    # Assumes ns1 and ns2 exist and do not already contain veth0/veth1
    # First create the bridge br0
    ip link add br0 type bridge
    ip link set br0 up
    # Then create two veth-pairs
    ip link add veth0 type veth peer name br-veth0
    ip link add veth1 type veth peer name br-veth1
    # Attach one end of each veth-pair to a namespace and the other to br0
    ip link set veth0 netns ns1
    ip link set br-veth0 master br0
    ip link set br-veth0 up
    ip link set veth1 netns ns2
    ip link set br-veth1 master br0
    ip link set br-veth1 up
    # Assign IPs to the veth ends inside the namespaces and bring them up
    ip netns exec ns1 ip addr add 10.1.1.2/24 dev veth0
    ip netns exec ns1 ip link set veth0 up
    ip netns exec ns2 ip addr add 10.1.1.3/24 dev veth1
    ip netns exec ns2 ip link set veth1 up
    # Ping veth1 from veth0
    [root@localhost ~]# ip netns exec ns1 ping 10.1.1.3
    PING 10.1.1.3 (10.1.1.3) 56(84) bytes of data.
    64 bytes from 10.1.1.3: icmp_seq=1 ttl=64 time=0.060 ms
    64 bytes from 10.1.1.3: icmp_seq=2 ttl=64 time=0.105 ms
    --- 10.1.1.3 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.060/0.082/0.105/0.024 ms

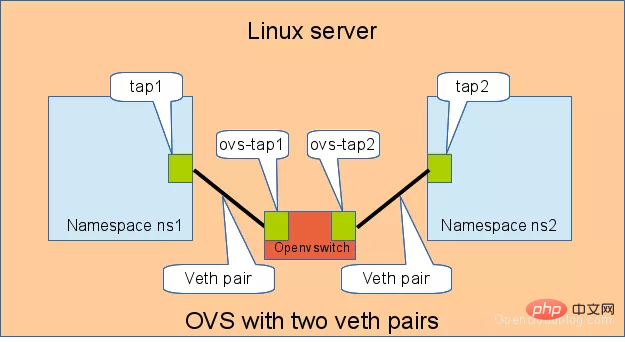

3.3 Connect through OVS

OVS (Open vSwitch) is a third-party open source bridge with more powerful features than Linux Bridge. We repeat the same experiment with OVS to see how it behaves.

As shown in the figure below:

    # Assumes ns1 and ns2 exist and do not already contain veth0/veth1
    # Create an OVS bridge with the command provided by OVS
    ovs-vsctl add-br ovs-br
    # Create two veth-pairs
    ip link add veth0 type veth peer name ovs-veth0
    ip link add veth1 type veth peer name ovs-veth1
    # Attach one end of each veth-pair to a namespace and the other to the OVS bridge
    ip link set veth0 netns ns1
    ovs-vsctl add-port ovs-br ovs-veth0
    ip link set ovs-veth0 up
    ip link set veth1 netns ns2
    ovs-vsctl add-port ovs-br ovs-veth1
    ip link set ovs-veth1 up
    # Assign IPs to the veth ends inside the namespaces and bring them up
    ip netns exec ns1 ip addr add 10.1.1.2/24 dev veth0
    ip netns exec ns1 ip link set veth0 up
    ip netns exec ns2 ip addr add 10.1.1.3/24 dev veth1
    ip netns exec ns2 ip link set veth1 up
    # Ping veth1 from veth0
    [root@localhost ~]# ip netns exec ns1 ping 10.1.1.3
    PING 10.1.1.3 (10.1.1.3) 56(84) bytes of data.
    64 bytes from 10.1.1.3: icmp_seq=1 ttl=64 time=0.311 ms
    64 bytes from 10.1.1.3: icmp_seq=2 ttl=64 time=0.087 ms
    ^C
    --- 10.1.1.3 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 999ms
    rtt min/avg/max/mdev = 0.087/0.199/0.311/0.112 ms


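Summary

A veth-pair acts as a bridge in virtual networks, connecting many kinds of network devices to form complex networks.

The three classic veth-pair experiments are direct connection, connection through a Bridge, and connection through OVS.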

