
Introduction to Java High Concurrency Architecture Design (Pictures and Text)

- 不言

- 2018-10-20 17:29:00

This article introduces high-concurrency architecture design in Java, with diagrams. I hope it is a useful reference for anyone who needs it.

Preface

High concurrency typically arises in business scenarios with many active users concentrated in a short window, such as flash sales or scheduled red-envelope giveaways.

To keep the business running smoothly and give users a good interactive experience, we need to design a high-concurrency solution that fits our own business scenario, based on factors such as its estimated concurrency.


Over years of developing Internet business products, I have had the good fortune to run into all kinds of concurrency pitfalls, with plenty of blood and tears along the way. This summary serves as my own archive, and I am sharing it with everyone.

1. Server Architecture

As a business grows from its early stage to maturity, the server architecture evolves too: from a single server, to a cluster, and then to distributed services.

A service that supports high concurrency cannot do without a good server architecture: it needs load balancing, a master-slave database cluster, a master-slave NoSQL cache cluster, and static files uploaded to a CDN. All of these provide strong backing that lets the business application run smoothly.

The server side mostly has to be built together with operations staff, so I won't go into detail here.

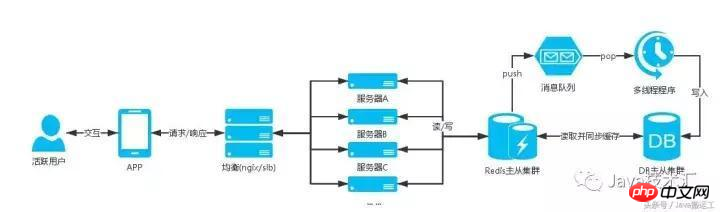

The server architecture needed is roughly as follows:

- Server
  - Load balancing (such as nginx, Alibaba Cloud SLB)
  - Resource monitoring
  - Distributed
- Database
  - Master-slave separation, cluster
  - DBA table optimization, index optimization, etc.
  - Distributed
- NoSQL
  - redis: master-slave separation, cluster
  - mongodb: master-slave separation, cluster
  - memcache: master-slave separation, cluster
- CDN
  - html
  - css
  - js
  - image

Concurrency testing

Businesses that face high concurrency need concurrency testing; analyzing a large amount of test data lets you evaluate how much concurrency the whole architecture can support.

To test high concurrency, you can use a third-party service or your own test server, send concurrent requests with a testing tool, and analyze the results to estimate the level of concurrency the system can support. That estimate can serve as an early-warning reference. As the saying goes, know yourself and know your enemy, and you will never be in danger in a hundred battles.

Third-party services:

- Alibaba Cloud Performance Test

Concurrency testing tools:

- Apache JMeter
- Visual Studio Performance Load Test
- Microsoft Web Application Stress Tool
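To make the idea of "send concurrent requests and analyze the results" concrete, here is a minimal sketch of a load generator in plain Java. It is not a substitute for the tools listed above; the class `MiniLoadTest` and its `run` method are hypothetical names, and the `Runnable` stands in for whatever request you would really send.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical mini load generator; the request is any Runnable you supply.
class MiniLoadTest {

    /** Outcome of one run: calls that succeeded, calls that failed, and total time. */
    static final class Result {
        final int succeeded;
        final int failed;
        final long elapsedMillis;

        Result(int succeeded, int failed, long elapsedMillis) {
            this.succeeded = succeeded;
            this.failed = failed;
            this.elapsedMillis = elapsedMillis;
        }
    }

    /** Runs request totalRequests times on a pool of `concurrency` threads. */
    static Result run(int concurrency, int totalRequests, Runnable request) {
        ExecutorService pool = Executors.newFixedThreadPool(concurrency);
        CountDownLatch done = new CountDownLatch(totalRequests);
        AtomicInteger ok = new AtomicInteger();
        AtomicInteger failed = new AtomicInteger();
        long start = System.nanoTime();
        try {
            for (int i = 0; i < totalRequests; i++) {
                pool.execute(() -> {
                    try {
                        request.run();
                        ok.incrementAndGet();
                    } catch (RuntimeException e) {
                        failed.incrementAndGet(); // count the failure, keep going
                    } finally {
                        done.countDown();
                    }
                });
            }
            done.await(); // wait until every request has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("load test interrupted", e);
        } finally {
            pool.shutdown();
        }
        long elapsedMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        return new Result(ok.get(), failed.get(), elapsedMillis);
    }
}
```

Calling `MiniLoadTest.run(50, 10_000, task)` and dividing the success count by the elapsed time gives a rough throughput figure; raising the concurrency until failures appear gives a first estimate of the limit.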

Practical plan

General plan

Daily user traffic is large but relatively spread out, with occasional spikes when many users arrive at once.

Scenarios: user check-in, user center, user orders, etc.

Server architecture diagram:

Note:

The businesses in these scenarios are basically what users operate after opening the app. Except on event days (618, Double 11, etc.), the number of concurrent users for them is not high. However, the tables behind them are all large, and the workload is mostly queries, so we need to reduce the number of queries that hit the DB directly: query the cache first, and if the data is not cached, query the DB and cache the result. User-related caches need to be distributed, for example by hashing the user ID into groups so that users are spread across different caches. That way no single cache set grows too large, and query efficiency is not affected. Example schemes:

User Check-in for Points

- Calculate the key for the user's shard and look up the user's check-in record for today in the redis hash.
- If the check-in record is found, return it.
- If it is not found, query the DB to see whether the user has already checked in today. If so, sync the check-in record to the redis cache.
- If the DB has no check-in record for today either, run the check-in logic: add today's check-in record and the check-in points in the DB (the whole DB operation is one transaction).
- Cache the check-in record in redis and return it.
- Note: under concurrency there can be logic problems here, such as a user checking in several times in one day and being awarded points several times. My blog post [High Concurrency in the Eyes of Big Talk Programmers] has relevant solutions.

User Orders

- Only the first page of a user's orders is cached, with 40 records per page, because users generally look only at the first page.
- When the user opens the order list, read the cache for the first page and read the DB for any other page.
- Calculate the key for the user's shard and look up the user's order information in the redis hash.
- If the order information is found, return it.
- If it is not found, query the DB for the first page of orders, cache the result in redis, and return it.
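Going back to the check-in flow described above, a minimal sketch might look like the following. Everything here is an assumption made for illustration: the in-memory maps stand in for redis and the DB, the shard count of 16 and the 10-point reward are invented, and `putIfAbsent` models an insert guarded by a unique key on (user, day). That guard is one possible way to avoid awarding points twice under concurrency, not necessarily the solution from the blog post mentioned in the note.

```java
import java.time.LocalDate;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// In-memory stand-ins for redis and the DB; all names here are hypothetical.
class CheckInService {
    static final int SHARDS = 16;            // assumed number of hash groups
    static final int POINTS_PER_CHECK_IN = 10; // assumed reward

    // Stand-in for redis hashes: outer key = hash name, inner key = user ID field.
    private final Map<String, Map<String, String>> redis = new ConcurrentHashMap<>();
    // Stand-in for a check-in table with a unique key on (userId, day).
    private final Map<String, String> checkInTable = new ConcurrentHashMap<>();
    // Stand-in for the user's points balance in the DB.
    private final Map<Long, Integer> points = new ConcurrentHashMap<>();

    /** Name of the redis hash that holds this user's check-in record for the day. */
    static String shardKey(long userId, LocalDate day) {
        return "checkin:" + day + ":" + Math.floorMod(userId, SHARDS);
    }

    /** Returns the day's check-in record, creating it and awarding points at most once. */
    String checkIn(long userId, LocalDate day) {
        Map<String, String> bucket =
                redis.computeIfAbsent(shardKey(userId, day), k -> new ConcurrentHashMap<>());
        String field = Long.toString(userId);

        // 1. Cache hit: return the cached record without touching the DB.
        String cached = bucket.get(field);
        if (cached != null) {
            return cached;
        }

        // 2. Cache miss: putIfAbsent either finds the existing row or inserts it,
        //    atomically, so only one concurrent caller sees null here.
        String row = userId + "@" + day;
        if (checkInTable.putIfAbsent(row, row) == null) {
            // In a real DB, this update and the insert would share one transaction.
            points.merge(userId, POINTS_PER_CHECK_IN, Integer::sum);
        }

        // 3. Sync the record to the cache and return it.
        bucket.put(field, row);
        return row;
    }

    int pointsOf(long userId) {
        return points.getOrDefault(userId, 0);
    }
}
```

The design choice worth noting is that the "has the user checked in?" query and the insert are collapsed into one atomic step, so concurrent requests for the same user cannot both pass the check.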

User Center

- Calculate the key for the user's shard and look up the user's information in the redis hash.
- If the user information is found, return it.
- If it is not found, query the user DB, cache the result in redis, and return it.
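The order and user-center lookups above share the same shape: compute the shard key, try the cache, and fall back to the DB on a miss. The sketch below shows that shape once, under stated assumptions: `ShardedCache` is a hypothetical helper, the nested maps stand in for redis hashes, and `dbLoader` represents whatever DB query the business needs.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical cache-first reader; the nested maps stand in for redis hashes.
class ShardedCache<V> {
    private final String prefix;
    private final int shards;
    private final Map<String, Map<Long, V>> hashes = new ConcurrentHashMap<>();

    ShardedCache(String prefix, int shards) {
        this.prefix = prefix;
        this.shards = shards;
    }

    /** Groups users by hashing the user ID, so no single hash grows too large. */
    String shardKey(long userId) {
        return prefix + ":" + Math.floorMod(userId, shards);
    }

    /** Returns the cached value; on a miss, loads it from the DB and caches it. */
    V get(long userId, Function<Long, V> dbLoader) {
        Map<Long, V> bucket =
                hashes.computeIfAbsent(shardKey(userId), k -> new ConcurrentHashMap<>());
        V cached = bucket.get(userId);
        if (cached != null) {
            return cached; // cache hit: the DB is not queried
        }
        V loaded = dbLoader.apply(userId);
        if (loaded != null) {
            bucket.put(userId, loaded); // cache the DB result for later requests
        }
        return loaded;
    }
}
```

For the order list, such a helper would be called only for page 1, with a loader that returns the first 40 orders; other pages would go straight to the DB.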

Other Business

The example above is a relatively simple high-concurrency architecture that holds up well when concurrency is not very high. As the business grows and user concurrency increases, the architecture keeps being optimized and evolved, for example by splitting the business into services such as a user service and an order service, each with its own concurrency architecture, load balancers, distributed database, and NoSQL master-slave cluster.

Message Queue

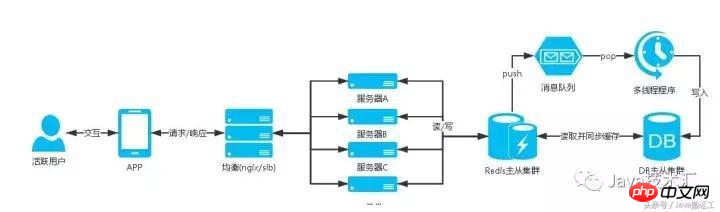

In flash-sale, snap-up, and similar campaigns, users flood in at the same instant and generate highly concurrent requests.

Scenario: scheduled red-envelope giveaways, etc.

Server architecture diagram:

Note:

The scheduled giveaway in this scenario is a high-concurrency business. As with a flash sale, users flood in at the appointed time, and the DB takes a critical hit in an instant. If it cannot withstand the load, it goes down and the whole business is affected.

This kind of business does more than query: it also inserts or updates data under high concurrency. The general plan above cannot support it, because the concurrent writes hit the DB directly.

A message queue is used when designing this kind of business. Add the participating users' information to the message queue, then write a multi-threaded program that consumes the queue and issues red envelopes to the users in it.

The solution is as follows:

Scheduled Red-Envelope Giveaway

- A redis list is the customary choice for the queue.
- When a user participates in the activity, push the user's participation information onto the queue.
- Then write a multi-threaded program that pops entries from the queue and issues the red envelopes.
- This lets highly concurrent users take part in the activity normally and avoids the risk of the database server going down.
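The push-then-consume steps above can be sketched as follows. This is an illustration under assumptions, not a production design: a `LinkedBlockingQueue` stands in for the redis list, a map stands in for the table of issued red envelopes, the amount of 5 is invented, and the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// LinkedBlockingQueue stands in for a redis list; names and amounts are hypothetical.
class RedEnvelopeQueue {
    private final BlockingQueue<Long> queue = new LinkedBlockingQueue<>();
    // Stand-in for the DB table of issued red envelopes, keyed by user ID.
    private final Map<Long, Integer> issued = new ConcurrentHashMap<>();

    /** Request path: only enqueue the participant; the DB is not touched here. */
    void participate(long userId) {
        queue.add(userId);
    }

    /** Starts `workers` consumer threads, drains the queue, and waits for them to finish. */
    void drain(int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int i = 0; i < workers; i++) {
            pool.execute(() -> {
                Long userId;
                // poll() returns null once the queue is empty; a long-running
                // consumer would block waiting for new entries instead.
                while ((userId = queue.poll()) != null) {
                    issued.putIfAbsent(userId, 5); // at most one envelope per user
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                throw new IllegalStateException("consumers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while draining", e);
        }
    }

    int issuedCount() {
        return issued.size();
    }

    int pending() {
        return queue.size();
    }
}
```

The point of the design is that the number of consumer threads, not the number of users, determines how hard the DB is hit: the queue absorbs the spike and the workers write at a rate the DB can sustain.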

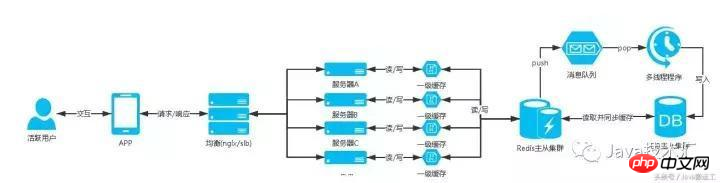

Level 1 Cache

When highly concurrent requests connect to the cache server, they can exceed the number of connections the server can accept, and some users hit connection timeouts and cannot read the data.

So we need a solution that reduces the number of hits on the cache server when concurrency is high.

This is where the first-level cache comes in. The first-level cache stores data in the site server's own cache. Note that it should hold only the portion of data with a large request volume, and the amount of cached data must be controlled: it must not use so much of the site server's memory that the site application cannot run normally. First-level cache entries need an expiration time measured in seconds, set according to the business scenario. The goal is that under highly concurrent requests the data can be served from the first-level cache without connecting to the NoSQL cache server, which reduces the pressure on that server.

For example, the product data interface for the first screen of the app returns public data that is not customized per user and is not updated frequently. If this interface has a relatively large request volume, it can be added to the first-level cache.
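A first-level cache of this kind can be sketched as a small in-process map with a short time-to-live and a cap on the number of entries. The class below is a hypothetical illustration: `LocalCache`, its constructor parameters, and the injectable clock are assumptions, and the `loader` stands in for the call to the NoSQL cache server.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;
import java.util.function.Supplier;

// Hypothetical in-process (first-level) cache with a short TTL and an entry limit.
class LocalCache<V> {
    private static final class Entry<V> {
        final V value;
        final long expiresAtMillis;

        Entry(V value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry<V>> entries = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final int maxEntries;
    private final LongSupplier clock; // injectable so expiry can be tested

    LocalCache(long ttlMillis, int maxEntries, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.maxEntries = maxEntries;
        this.clock = clock;
    }

    /** Returns a fresh local value, or calls loader (the NoSQL lookup) on a miss. */
    V get(String key, Supplier<V> loader) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry != null && entry.expiresAtMillis > now) {
            return entry.value; // served locally, no connection to the cache server
        }
        V value = loader.get();
        // Drop expired entries, then store the new one only if there is room,
        // so the cache cannot eat into the site application's memory.
        entries.entrySet().removeIf(e -> e.getValue().expiresAtMillis <= now);
        if (entries.size() < maxEntries) {
            entries.put(key, new Entry<>(value, now + ttlMillis));
        }
        return value;
    }

    int size() {
        return entries.size();
    }
}
```

In real use the clock would be `System::currentTimeMillis` and the TTL a few seconds. Under heavy concurrency the entry limit is approximate, and several threads may load the same key at once right after it expires.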

Server architecture diagram:

Standardize how the NoSQL cache database is used, and split the cache database cluster by business; this alone supports the business well in most cases. The first-level cache, after all, uses the site server's own cache, so it still has to be used wisely.

Static Data

If data requested at high concurrency does not change, and it can be obtained without requesting your own servers, the resource pressure on those servers is reduced.

If the data is not updated often and a short delay is acceptable, you can render it into static files (JSON, XML, HTML, etc.) and upload them to a CDN. When fetching the data, try the CDN first; if the data is not there, fall back to the cache and then the database. When an administrator edits the data in the back office, regenerate the static file and upload it to the CDN again, so that during high concurrency the data is served from the CDN servers. CDN node synchronization has some delay, so it is also important to find a reliable CDN provider.
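The "CDN first, then cache, then database" read order can be expressed as a chain of sources tried in turn. The sketch below is only an illustration: `StaticDataReader` is a hypothetical name, and each `Supplier` stands in for a real CDN fetch, cache lookup, or DB query.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Supplier;

// Hypothetical read path: sources are tried in order until one returns data.
class StaticDataReader {
    private final List<Supplier<Optional<String>>> sources;

    /** Pass the sources in priority order, e.g. CDN, then cache, then DB. */
    @SafeVarargs
    StaticDataReader(Supplier<Optional<String>>... sources) {
        this.sources = Arrays.asList(sources);
    }

    /** Returns the first available payload, or empty if every source misses. */
    Optional<String> read() {
        for (Supplier<Optional<String>> source : sources) {
            try {
                Optional<String> data = source.get();
                if (data.isPresent()) {
                    return data;
                }
            } catch (RuntimeException e) {
                // A failing source (for example a CDN timeout) counts as a miss,
                // so the next source in the chain is tried.
            }
        }
        return Optional.empty();
    }
}
```

Treating a failed CDN fetch as a miss keeps the interface available while a CDN node is still synchronizing, at the cost of briefly sending that traffic to the cache or database.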
