A Brief Introduction to Creating Neural Network Models in Python

This article gives a brief introduction to creating a neural network model in Python. It has some reference value, and we hope readers who need it find it helpful.

Summary: Curious about how neural networks work? Give it a try. The best way to understand how neural networks work is to create a simple neural network yourself.

Neural networks (NN), also known as artificial neural networks (ANN), are a subset of learning algorithms in the field of machine learning, loosely borrowing the concept of biological neural networks. At present, neural networks are widely used in fields such as computer vision and natural language processing. Andrey Bulezyuk, a senior German machine learning expert, said, "Neural networks are revolutionizing machine learning because they can effectively simulate complex abstractions in various disciplines and industries without much human involvement."

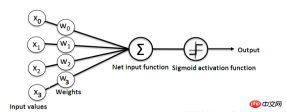

In general, an artificial neural network includes the following components:

An input layer that receives and passes on the data;

A hidden layer;

An output layer;

Weights between the layers;

An activation function used in each hidden layer.

In this tutorial, a simple Sigmoid activation function is used. Note, however, that in deep neural network models the Sigmoid activation function is generally not the first choice, because it is prone to vanishing gradients.
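To make the vanishing-gradient remark concrete, here is a minimal sketch. It relies on the fact that the slope of the Sigmoid function never exceeds 0.25, so a gradient passed back through many Sigmoid layers is multiplied by a small factor at each layer. The helper names and the chosen depths are illustrative assumptions, and the sketch ignores the weights, which also scale the gradient in a real network.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_slope(z):
    # Slope of the Sigmoid curve at the point z
    s = sigmoid(z)
    return s * (1.0 - s)


# The slope is largest at z = 0, where it equals 0.25
zs = np.linspace(-10.0, 10.0, 2001)
max_slope = sigmoid_slope(zs).max()

# Upper bound on the product of Sigmoid slopes across `depth` layers
# (illustrative depths; weights are deliberately ignored here)
bounds = {depth: max_slope ** depth for depth in (1, 5, 10, 20)}
for depth, bound in bounds.items():
    print(depth, bound)
```

Even in the best case, the factor contributed by ten stacked Sigmoid layers is below one millionth, which is why other activation functions are usually preferred in deep models.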

In addition, there are several different types of artificial neural networks, such as feedforward neural networks, convolutional neural networks, and recurrent neural networks. This article uses a simple feedforward neural network, or perceptron, as its example. This type of network passes data straight from front to back, a process known as forward propagation.

Training a feedforward network usually requires the backpropagation algorithm, which needs a set of inputs and the corresponding expected outputs. When input data reaches a neuron, it is processed, and the resulting output is passed on to the next layer.
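The layer-by-layer flow described above can be sketched as follows. This is only an illustration: the layer sizes (3 inputs, 4 hidden units, 1 output), the random weights, and the `forward` helper are assumptions made for the example, not part of the network built later in this article, which has a single neuron.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(inputs, layer_weights):
    """Pass the inputs through each layer in turn and return the final output."""
    activation = inputs
    for weights in layer_weights:
        # Each layer computes a weighted sum and applies the activation,
        # then hands its output to the next layer
        activation = sigmoid(np.dot(activation, weights))
    return activation


rng = np.random.default_rng(0)
# Hypothetical weights: 3 inputs -> 4 hidden units -> 1 output
layer_weights = [
    rng.uniform(-1, 1, size=(3, 4)),
    rng.uniform(-1, 1, size=(4, 1)),
]

sample = np.array([[1.0, 0.0, 1.0]])
result = forward(sample, layer_weights)
print(result.shape)  # (1, 1): one output value for one input row
```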

The following figure simply shows a neural network structure:

In addition, the best way to understand how a neural network works is to learn how to build one from scratch, without using any toolbox. In this article, we will demonstrate how to create a simple neural network using Python.

Problem

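The table below shows the problem we will solve:

| | Input 1 | Input 2 | Input 3 | Output |
|---|---|---|---|---|
| Training example 1 | 0 | 0 | 1 | 0 |
| Training example 2 | 1 | 1 | 1 | 1 |
| Training example 3 | 1 | 0 | 1 | 1 |
| Training example 4 | 0 | 1 | 1 | 0 |
| New situation | 1 | 0 | 0 | ? |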

We will train the neural network so that it can predict the correct output value when given a new set of data.

As the table shows, the output value is always equal to the first value in the input section. Therefore, we can expect the output (?) for the new situation to be 1.
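The pattern described above can be checked directly. The sketch below writes the training data as NumPy arrays, using the same values as the complete program later in this article, and confirms that every output equals the first input value. The variable names `first_column`, `pattern_holds`, and `expected_output` are introduced here only for illustration.

```python
import numpy as np

# Training data, as used in the complete program later in the article
training_inputs = np.array([[0, 0, 1],
                            [1, 1, 1],
                            [1, 0, 1],
                            [0, 1, 1]])
training_outputs = np.array([[0, 1, 1, 0]]).T

# Keep the first input column as a 4x1 array so it lines up with the outputs
first_column = training_inputs[:, [0]]
pattern_holds = bool(np.array_equal(first_column, training_outputs))
print(pattern_holds)  # True

# For the new situation [1, 0, 0], the same rule gives an expected output of 1
new_situation = np.array([1, 0, 0])
expected_output = int(new_situation[0])
print(expected_output)  # 1
```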

Let's see if we can get the same result using some Python code.

Creating the NeuralNetwork Class

We will create a NeuralNetwork class in Python to train the neuron to give accurate predictions. The class also contains other helper functions. We will not use a neural network library for this simple example, but we will import the basic NumPy library to help with the calculations.

NumPy is a fundamental library for working with numerical data. We will use the following four of its features:

exp - used to compute the natural exponential;

array - used to create matrices;

dot - used for matrix multiplication;

random - used to generate random numbers;
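As a quick illustration of these four features, here is a small sketch. The example values and variable names are arbitrary choices made for this demonstration.

```python
import numpy as np

# exp: natural exponential, e raised to the given power
e_to_zero = np.exp(0.0)
print(e_to_zero)  # 1.0

# array: build a matrix from nested lists
matrix = np.array([[1, 2],
                   [3, 4]])
column = np.array([[1],
                   [1]])

# dot: matrix multiplication, here (2x2) times (2x1) gives a 2x1 column
product = np.dot(matrix, column)
print(product)  # a column containing 3 and 7

# random: random numbers in [0, 1), rescaled here to the range [-1, 1)
np.random.seed(1)
weights = 2 * np.random.random((3, 1)) - 1
print(weights.shape)  # (3, 1)
```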

Applying the Sigmoid function

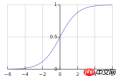

We will use the Sigmoid function, which draws an "S"-shaped curve, as the activation function of the neural network created in this article.

This function maps any value to a value between 0 and 1, which helps us normalize the weighted sum of the inputs.

After this, we will create the derivative of the Sigmoid function to help calculate the adjustments to the weights.

The derivative can be computed from the output of the Sigmoid function itself. For example, if the output is "x", then the derivative is x * (1 - x).
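The relationship between the Sigmoid output and its derivative can be verified numerically. The sketch below compares x * (1 - x), computed from the Sigmoid output, with a finite-difference estimate of the slope. The test points and the step size `h` are arbitrary choices for this check.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_derivative(x):
    # x is expected to be a Sigmoid output, not the raw input
    return x * (1.0 - x)


z = np.array([-2.0, 0.0, 3.0])
output = sigmoid(z)

# Derivative computed from the output, as described in the text
analytic = sigmoid_derivative(output)

# Central finite-difference estimate of the slope at the same points
h = 1e-6
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)

matches = bool(np.allclose(analytic, numeric))
print(matches)  # True
```

Note that the function takes the Sigmoid output as its argument. Passing the raw input instead would give a wrong result.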

Training the model

Training the model is the stage in which we teach the neural network to make accurate predictions. Each input has a weight, which may be positive or negative. Inputs with large positive or large negative weights have a greater influence on the resulting output.

Note that before training begins, each weight is initialized with a random number.

The training process for the example problem in this article is as follows:

1. Take the inputs from the training data set, adjust them according to their weights, and pass them through the method that computes the output of the neural network;

2. Calculate the back-propagated error. In this case, it is the difference between the neuron's predicted output and the expected output from the training data set;

3. Based on the size of the error, make small adjustments to the weights using the error weighted derivative formula;

4. Repeat this process 15,000 times. During each iteration, the entire training set is processed simultaneously.
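The four steps above can be sketched as a plain loop. This is a minimal function-style version that assumes the same data, seed, and update rule as the complete program later in the article. The mean-absolute-error bookkeeping (`initial_error`, `final_error`) is added here only to show that the error shrinks, and is not part of the original program.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


inputs = np.array([[0, 0, 1],
                   [1, 1, 1],
                   [1, 0, 1],
                   [0, 1, 1]], dtype=float)
expected = np.array([[0, 1, 1, 0]], dtype=float).T

# Random starting weights in the range [-1, 1)
np.random.seed(1)
weights = 2 * np.random.random((3, 1)) - 1

initial_error = np.mean(np.abs(expected - sigmoid(np.dot(inputs, weights))))

for _ in range(15000):                        # step 4: repeat 15,000 times
    output = sigmoid(np.dot(inputs, weights))  # step 1: weighted inputs -> output
    error = expected - output                  # step 2: expected minus predicted
    # step 3: error weighted by the Sigmoid derivative, summed over all examples
    adjustment = np.dot(inputs.T, error * output * (1 - output))
    weights += adjustment

final_error = np.mean(np.abs(expected - sigmoid(np.dot(inputs, weights))))
print(initial_error, final_error)
```

Because the error is multiplied by the Sigmoid derivative, outputs that are already close to 0 or 1 produce only small adjustments, while uncertain outputs near 0.5 are corrected more strongly.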

Here, we use ".T" to transpose the matrix. The expected outputs, written as the single row [[0, 1, 1, 0]], are therefore stored as a column with one value per training example.

Eventually, the weights of the neuron are optimized for the training data provided. If the output of the neural network then matches the expected output, training is complete and the network can make accurate predictions. This is how backpropagation works.
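A short sketch of what the transpose does to the array shapes. The variable names are illustrative, and the last part assumes the 4x3 input matrix used in the complete program.

```python
import numpy as np

# A single row of four expected outputs ...
row = np.array([[0, 1, 1, 0]])
print(row.shape)  # (1, 4)

# ... becomes a column with one value per training example
column = row.T
print(column.shape)  # (4, 1)

# The same attribute is used on the inputs during the weight update:
# a 4x3 input matrix becomes 3x4, so multiplying it by a 4x1 column
# yields one adjustment per weight (3x1)
inputs = np.array([[0, 0, 1],
                   [1, 1, 1],
                   [1, 0, 1],
                   [0, 1, 1]])
adjustment_shape = np.dot(inputs.T, column).shape
print(adjustment_shape)  # (3, 1)
```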

Putting it all together

Finally, we initialize the NeuralNetwork class and run the program. The following is the complete code for creating a neural network in a Python project:

import numpy as np


class NeuralNetwork:

    def __init__(self):
        # Seed the random number generator so that every run is reproducible
        np.random.seed(1)

        # Initialize the weights as a 3x1 matrix with values from -1 to 1 and a mean of 0
        self.synaptic_weights = 2 * np.random.random((3, 1)) - 1

    def sigmoid(self, x):
        # Apply the Sigmoid activation function
        return 1 / (1 + np.exp(-x))

    def sigmoid_derivative(self, x):
        # Compute the derivative of the Sigmoid function (x is a Sigmoid output)
        return x * (1 - x)

    def train(self, training_inputs, training_outputs, training_iterations):
        # Train the model
        for iteration in range(training_iterations):
            # Get the output
            output = self.think(training_inputs)

            # Compute the error
            error = training_outputs - output

            # Fine-tune the weights
            adjustments = np.dot(training_inputs.T, error * self.sigmoid_derivative(output))
            self.synaptic_weights += adjustments

    def think(self, inputs):
        # Pass the inputs through the network to get the output
        # Convert the inputs to floats
        inputs = inputs.astype(float)
        output = self.sigmoid(np.dot(inputs, self.synaptic_weights))
        return output


if __name__ == "__main__":
    # Initialize the neural network class
    neural_network = NeuralNetwork()

    print("Beginning Randomly Generated Weights: ")
    print(neural_network.synaptic_weights)

    # Training data
    training_inputs = np.array([[0, 0, 1],
                                [1, 1, 1],
                                [1, 0, 1],
                                [0, 1, 1]])
    training_outputs = np.array([[0, 1, 1, 0]]).T

    # Start training
    neural_network.train(training_inputs, training_outputs, 15000)

    print("Ending Weights After Training: ")
    print(neural_network.synaptic_weights)

    user_input_one = str(input("User Input One: "))
    user_input_two = str(input("User Input Two: "))
    user_input_three = str(input("User Input Three: "))

    print("Considering New Situation: ", user_input_one, user_input_two, user_input_three)
    print("New Output data: ")
    print(neural_network.think(np.array([user_input_one, user_input_two, user_input_three])))
    print("Wow, we did it!")

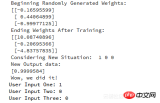

The following is after running the code Resulting output:

# The above is a simple neural network we managed to create. First the neural network starts assigning itself some random weights, after that it trains itself using training examples.

So if a new sample input [1,0,0] appears, the output value is 0.9999584. The expected correct answer is 1, so the two are very close. Because the Sigmoid function only approaches 1 without ever reaching it, an error of this size is acceptable.

In addition, this article uses only a single-layer neural network to perform a simple task. What would happen if we put thousands of these artificial neural networks together? Could we imitate human thinking 100%? In principle the answer may be yes, but this is currently difficult to achieve, and the result can at best be called very similar. Readers who are interested can read materials on deep learning.

The above is the detailed content of Let you briefly understand the content of creating neural network models in python. For more information, please follow other related articles on the PHP Chinese website!

GNN的基础、前沿和应用Apr 11, 2023 pm 11:40 PM

GNN的基础、前沿和应用Apr 11, 2023 pm 11:40 PM近年来,图神经网络(GNN)取得了快速、令人难以置信的进展。图神经网络又称为图深度学习、图表征学习(图表示学习)或几何深度学习,是机器学习特别是深度学习领域增长最快的研究课题。本次分享的题目为《GNN的基础、前沿和应用》,主要介绍由吴凌飞、崔鹏、裴健、赵亮几位学者牵头编撰的综合性书籍《图神经网络基础、前沿与应用》中的大致内容。一、图神经网络的介绍1、为什么要研究图?图是一种描述和建模复杂系统的通用语言。图本身并不复杂,它主要由边和结点构成。我们可以用结点表示任何我们想要建模的物体,可以用边表示两

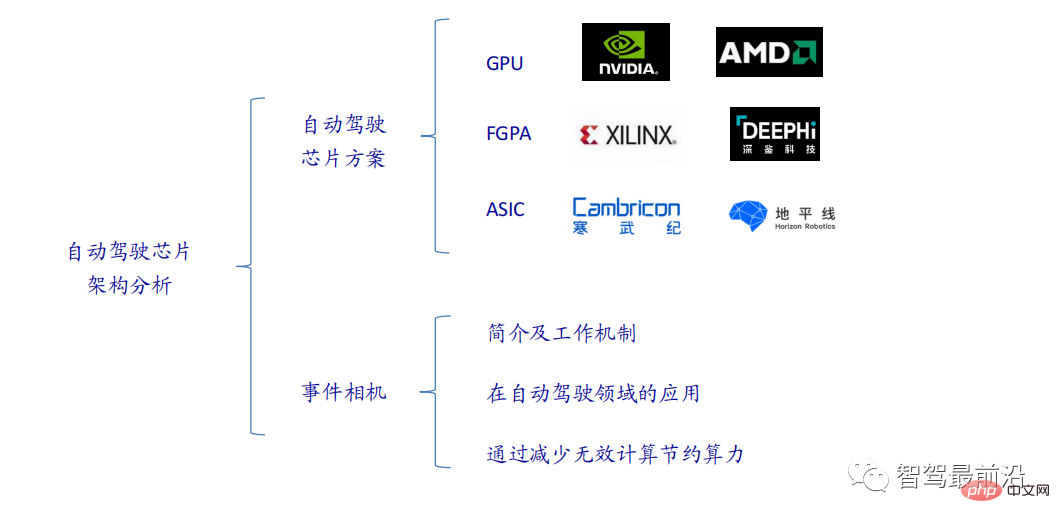

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM

一文通览自动驾驶三大主流芯片架构Apr 12, 2023 pm 12:07 PM当前主流的AI芯片主要分为三类,GPU、FPGA、ASIC。GPU、FPGA均是前期较为成熟的芯片架构,属于通用型芯片。ASIC属于为AI特定场景定制的芯片。行业内已经确认CPU不适用于AI计算,但是在AI应用领域也是必不可少。 GPU方案GPU与CPU的架构对比CPU遵循的是冯·诺依曼架构,其核心是存储程序/数据、串行顺序执行。因此CPU的架构中需要大量的空间去放置存储单元(Cache)和控制单元(Control),相比之下计算单元(ALU)只占据了很小的一部分,所以CPU在进行大规模并行计算

扛住强风的无人机?加州理工用12分钟飞行数据教会无人机御风飞行Apr 09, 2023 pm 11:51 PM

扛住强风的无人机?加州理工用12分钟飞行数据教会无人机御风飞行Apr 09, 2023 pm 11:51 PM当风大到可以把伞吹坏的程度,无人机却稳稳当当,就像这样:御风飞行是空中飞行的一部分,从大的层面来讲,当飞行员驾驶飞机着陆时,风速可能会给他们带来挑战;从小的层面来讲,阵风也会影响无人机的飞行。目前来看,无人机要么在受控条件下飞行,无风;要么由人类使用遥控器操作。无人机被研究者控制在开阔的天空中编队飞行,但这些飞行通常是在理想的条件和环境下进行的。然而,要想让无人机自主执行必要但日常的任务,例如运送包裹,无人机必须能够实时适应风况。为了让无人机在风中飞行时具有更好的机动性,来自加州理工学院的一组工

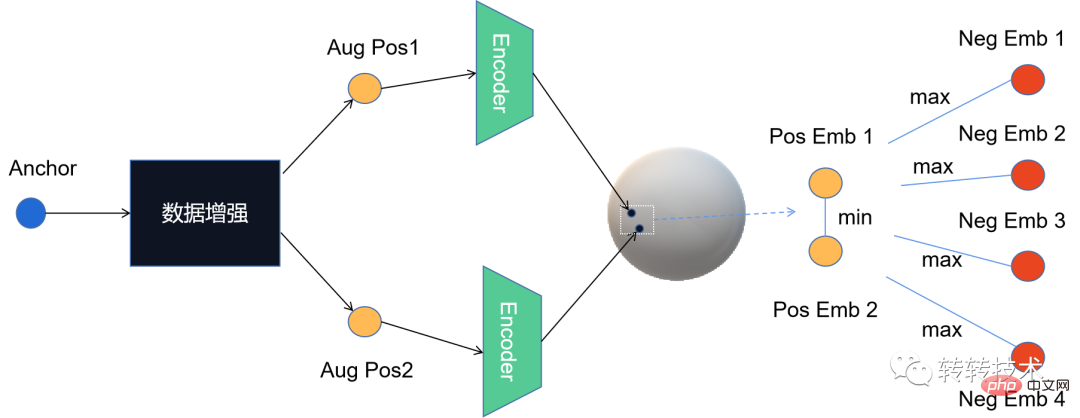

对比学习算法在转转的实践Apr 11, 2023 pm 09:25 PM

对比学习算法在转转的实践Apr 11, 2023 pm 09:25 PM1 什么是对比学习1.1 对比学习的定义1.2 对比学习的原理1.3 经典对比学习算法系列2 对比学习的应用3 对比学习在转转的实践3.1 CL在推荐召回的实践3.2 CL在转转的未来规划1 什么是对比学习1.1 对比学习的定义对比学习(Contrastive Learning, CL)是近年来 AI 领域的热门研究方向,吸引了众多研究学者的关注,其所属的自监督学习方式,更是在 ICLR 2020 被 Bengio 和 LeCun 等大佬点名称为 AI 的未来,后陆续登陆 NIPS, ACL,

python random库如何使用demoMay 05, 2023 pm 08:13 PM

python random库如何使用demoMay 05, 2023 pm 08:13 PMpythonrandom库简单使用demo当我们需要生成随机数或者从一个序列中随机选择元素时,可以使用Python内置的random库。下面是一个带有注释的例子,演示了如何使用random库:#导入random库importrandom#生成一个0到1之间的随机小数random_float=random.random()print(random_float)#生成一个指定范围内的随机整数(包括端点)random_int=random.randint(1,10)print(random_int)#

Java使用Random类的nextDouble()函数生成随机的双精度浮点数Jul 25, 2023 am 09:06 AM

Java使用Random类的nextDouble()函数生成随机的双精度浮点数Jul 25, 2023 am 09:06 AMJava使用Random类的nextDouble()函数生成随机的双精度浮点数Java中的Random类是一个伪随机数生成器,可以用来生成不同类型的随机数。其中,nextDouble()函数用于生成一个随机的双精度浮点数。在使用Random类之前,我们需要先导入java.util包。接下来我们可以创建一个Random对象,然后使用nextDouble()函数

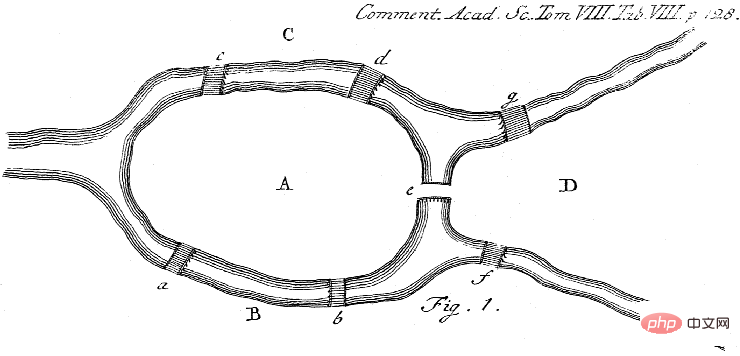

Michael Bronstein从代数拓扑学取经,提出了一种新的图神经网络计算结构!Apr 09, 2023 pm 10:11 PM

Michael Bronstein从代数拓扑学取经,提出了一种新的图神经网络计算结构!Apr 09, 2023 pm 10:11 PM本文由Cristian Bodnar 和Fabrizio Frasca 合著,以 C. Bodnar 、F. Frasca 等人发表于2021 ICML《Weisfeiler and Lehman Go Topological: 信息传递简单网络》和2021 NeurIPS 《Weisfeiler and Lehman Go Cellular: CW 网络》论文为参考。本文仅是通过微分几何学和代数拓扑学的视角讨论图神经网络系列的部分内容。从计算机网络到大型强子对撞机中的粒子相互作用,图可以用来模

用AI寻找大屠杀后失散的亲人!谷歌工程师研发人脸识别程序,可识别超70万张二战时期老照片Apr 08, 2023 pm 04:21 PM

用AI寻找大屠杀后失散的亲人!谷歌工程师研发人脸识别程序,可识别超70万张二战时期老照片Apr 08, 2023 pm 04:21 PMAI面部识别领域又开辟新业务了?这次,是鉴别二战时期老照片里的人脸图像。近日,来自谷歌的一名软件工程师Daniel Patt 研发了一项名为N2N(Numbers to Names)的 AI人脸识别技术,它可识别二战前欧洲和大屠杀时期的照片,并将他们与现代的人们联系起来。用AI寻找失散多年的亲人2016年,帕特在参观华沙波兰裔犹太人纪念馆时,萌生了一个想法。这一张张陌生的脸庞,会不会与自己存在血缘的联系?他的祖父母/外祖父母中有三位是来自波兰的大屠杀幸存者,他想帮助祖母找到被纳粹杀害的家人的照

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

Atom editor mac version download

The most popular open source editor

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 English version

Recommended: Win version, supports code prompts!