How can Node crawl headline videos in batches and save them (code implementation)

This article shows how to use Node to crawl Toutiao (headline) videos in batches and save them locally, with a code implementation.

Introduction

The usual routine for crawling videos or pictures in batches is to use a crawler to collect the file links and then save the files one by one with a method such as writeFile. However, the video links on Toutiao cannot be captured from the HTML file (the server-side rendered output) that the crawler requests. Instead, when the page is rendered on the client, an algorithm or decryption routine in some of the site's JS files computes the link from the video's known key or hash value and adds it to the video tag. This also serves as an anti-crawling measure for the website.

When we browse these pages, the computed file address is visible through the browser's Inspect Element tool. For a batch download, however, collecting the video links by hand one at a time is clearly impractical. Fortunately, puppeteer can drive Chrome to visit the page, which lets us crawl the final page as rendered by the browser.

Project Start

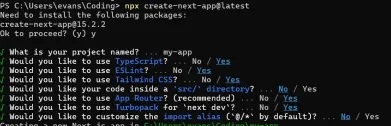

Command:

    npm i
    npm start

Note: Installing puppeteer is a little slow, so please wait patiently.

Configuration file

    // Configuration
    module.exports = {
      originPath: 'https://www.ixigua.com', // URL of the page to request
      savePath: 'D:/videoZZ' // directory where the videos are saved
    }
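
The directory named by savePath may not exist on a fresh machine, and writing a file into a missing directory fails. A minimal sketch, assuming a hypothetical helper called `ensureSaveDir` (it is not part of the original project) and using a temporary directory in place of the configured path:

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: create the save directory (and any parents) if missing.
// With `recursive: true` the call does nothing when the directory exists.
function ensureSaveDir(savePath) {
  fs.mkdirSync(savePath, { recursive: true });
  return path.resolve(savePath);
}

// A temporary directory stands in for config.savePath in this demo.
const demoDir = path.join(os.tmpdir(), 'videoZZ-demo', 'nested');
const resolvedDir = ensureSaveDir(demoDir);
console.log('save directory ready:', resolvedDir);
```

In the project this would presumably be called once with `config.savePath` before the first download.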

Technical points

puppeteer (official API)

puppeteer provides a high-level API to control Chrome or Chromium.

Main functions of puppeteer:

- Generate PDFs and screenshots of web pages
- Crawl SPA applications and generate pre-rendered content (i.e. "SSR", server-side rendering)
- Capture content from websites
- Automate form submission, UI testing, keyboard input, etc.

API used:

- puppeteer.launch(): launches a browser instance
- browser.newPage(): creates a new page
- page.goto(): navigates to the specified web page
- page.screenshot(): takes a screenshot
- page.waitFor(): makes the page wait for a period of time, an element, or a function (deprecated in newer puppeteer releases in favour of more specific methods such as page.waitForSelector() and page.waitForFunction())
- page.$eval(): runs document.querySelector in the page and passes the matched element to a callback
- page.$$eval(): runs document.querySelectorAll in the page and passes the matched elements to a callback
- page.$('#id .className'): gets one element in the document, similar to a jQuery selector lookup
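
The calls listed above can be combined to read the video address from the page after the browser has rendered it. The following is only a sketch: the `video` selector, the 30-second timeout, and the helper name `getRenderedVideoSrc` are assumptions, not taken from the original project. The function receives an already launched browser, so the demo can use a stand-in object and run without puppeteer installed.

```javascript
// Sketch: read the <video> address from the page as rendered by the browser.
// Assumptions (not from the original project): the 'video' selector, the
// 30-second timeout, and the helper name getRenderedVideoSrc.
async function getRenderedVideoSrc(browser, pageUrl) {
  const page = await browser.newPage();
  try {
    // Wait until network activity settles so client-side scripts can run.
    await page.goto(pageUrl, { waitUntil: 'networkidle2' });
    // Block until the player element exists in the DOM.
    await page.waitForSelector('video', { timeout: 30000 });
    // Evaluated in the page context on document.querySelector('video').
    return await page.$eval('video', el => el.src);
  } finally {
    await page.close();
  }
}

// Stand-in browser so the sketch runs without installing puppeteer.
// With the real library, pass the result of `await puppeteer.launch()`.
const fakeBrowser = {
  async newPage() {
    return {
      async goto() {},
      async waitForSelector() {},
      async $eval(selector, fn) { return fn({ src: 'http://example.com/v.mp4' }); },
      async close() {}
    };
  }
};

const srcPromise = getRenderedVideoSrc(fakeBrowser, 'https://www.ixigua.com');
srcPromise.then(src => console.log('video src:', src));
```

On a real site the player may set `src` only after further scripts run, or may use a `blob:` URL, so the selector and the waiting strategy would need to be checked against the actual page.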

    const puppeteer = require('puppeteer');

    (async () => {
      const browser = await puppeteer.launch();
      const page = await browser.newPage();
      await page.goto('https://example.com');
      await page.screenshot({path: 'example.png'});
      await browser.close();
    })();

Video file download method

- Download video main method

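    const fs = require('fs')
    const config = require('./config') // assumed location of the configuration file shown earlier

    const downloadVideo = async video => {
      const filePath = `${config.savePath}/${video.title}.mp4`
      // Skip videos that have already been downloaded
      if (fs.existsSync(filePath)) {
        console.log(`Video file already exists: ${video.title}`)
        return
      }
      console.log('Downloading video:', video.title)
      const fileData = await getVideoData(video.src, 'binary')
      // Await the save so the caller knows when the file is on disk
      const res = await savefileToPath(video.title, fileData)
      console.log(`${res}: ${video.title}`)
    }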
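
The existence check above builds the file name directly from `video.title`. Titles can contain characters such as `?`, `:` or `/`, which Windows does not allow in file names. A minimal sketch, assuming a hypothetical helper named `toSafeFileName` that is not part of the original project:

```javascript
// Hypothetical helper: make a video title safe to use as a file name.
// Replaces characters that Windows rejects in file names (\ / : * ? " < > |)
// and ASCII control characters, then strips trailing dots and spaces.
function toSafeFileName(title, fallback = 'untitled') {
  const cleaned = String(title)
    .replace(/[\\\/:*?"<>|\x00-\x1f]/g, '_')
    .replace(/[. ]+$/, '')
    .trim();
  // Fall back to a fixed name when nothing usable is left.
  return cleaned || fallback;
}

console.log(toSafeFileName('What is "GDP"? Part 1/2'));
```

The same cleaned name would have to be used both for the existence check and when writing the file, otherwise the check never matches.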

- Get video data

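    const http = require('http')

    // Fetch the file at `url` and resolve with its contents as a string.
    // Note: http.get only handles http: URLs; https: URLs need the https module.
    const getVideoData = (url, encoding) => {
      return new Promise((resolve, reject) => {
        const req = http.get(url, res => {
          let result = ''
          if (encoding) res.setEncoding(encoding)
          res.on('data', chunk => {
            result += chunk
          })
          res.on('end', () => resolve(result))
          res.on('error', reject)
        })
        // Connection-level failures are emitted on the request, not the response
        req.on('error', reject)
      })
    }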
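
getVideoData collects the whole response in memory before anything is written, which can be costly for large videos. As an alternative (this is a different technique, not the original author's approach), the response can be streamed straight to disk with `stream.pipeline`. The helper name `downloadToFile` is hypothetical, and the sketch does not follow redirects:

```javascript
const fs = require('fs');
const http = require('http');
const https = require('https');
const { pipeline } = require('stream');

// Hypothetical helper: stream a remote file to disk without buffering it all.
function downloadToFile(url, destPath) {
  // Choose the client module that matches the URL scheme.
  const client = url.startsWith('https:') ? https : http;
  return new Promise((resolve, reject) => {
    const req = client.get(url, res => {
      if (res.statusCode !== 200) {
        res.resume(); // discard the body so the socket is released
        reject(new Error(`Unexpected status ${res.statusCode} for ${url}`));
        return;
      }
      // pipeline forwards errors from either stream and cleans both up.
      pipeline(res, fs.createWriteStream(destPath), err => {
        if (err) reject(err);
        else resolve(destPath);
      });
    });
    // Connection-level failures are emitted on the request object.
    req.on('error', reject);
  });
}
```

A call such as `await downloadToFile(video.src, filePath)` would then take the place of the separate fetch and save steps.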

- Save video data locally

    const savefileToPath = (fileName, fileData) => {
      const fileFullName = `${config.savePath}/${fileName}.mp4`
      return new Promise((resolve, reject) => {
        fs.writeFile(fileFullName, fileData, 'binary', err => {
          if (err) {
            console.log('savefileToPath error:', err)
            // Reject so a failed write is not reported as a successful download
            reject(err)
            return
          }
          resolve('Downloaded')
        })
      })
    }

Target website: Xigua Video (西瓜视频)

Project function: Download the latest 20 videos under the headline account [Weichen Finance]

Project address: GitHub address
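
Downloading the latest 20 videos means running the single-video download once per entry. A rough sketch, assuming a hypothetical helper named `runWithLimit` and a stand-in task in place of downloadVideo, processes the list a few items at a time and records failures instead of stopping at the first one:

```javascript
// Hypothetical helper: run an async task over a list with limited concurrency.
// Each result records whether its item succeeded, so one failure does not
// abort the remaining downloads.
async function runWithLimit(items, task, limit = 3) {
  const results = new Array(items.length);
  let next = 0;
  async function worker() {
    while (next < items.length) {
      const index = next++; // claim the next unprocessed item
      try {
        results[index] = { ok: true, value: await task(items[index]) };
      } catch (error) {
        results[index] = { ok: false, error };
      }
    }
  }
  const workerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Stand-in task; in the project this would be downloadVideo(video).
const demoVideos = [{ title: 'a' }, { title: 'b' }, { title: 'c' }];
const demoRun = runWithLimit(demoVideos, async video => {
  if (video.title === 'b') throw new Error('simulated failure');
  return `saved ${video.title}`;
}, 2);
demoRun.then(results => console.log(results.map(r => r.ok)));
```

A small limit keeps the number of simultaneous connections to the site modest, and the per-item results make it easy to retry only the videos that failed.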
