
Java learning: What is a thread? The most detailed explanation

- php是最好的语言 (original)

- 2018-08-06 16:44:40

What is a thread? A thread, sometimes called a lightweight process, is the smallest unit of program execution flow.

A standard thread consists of a thread ID, the current instruction pointer (PC), a register set, and a stack.

A process consists of one or more threads. All threads of a process share the program's memory space (including the code segment, the data segment, and the heap) and some process-level resources (such as open files and signals).

Multiple threads can execute concurrently without interfering with one another, while sharing the process's global variables and heap data.

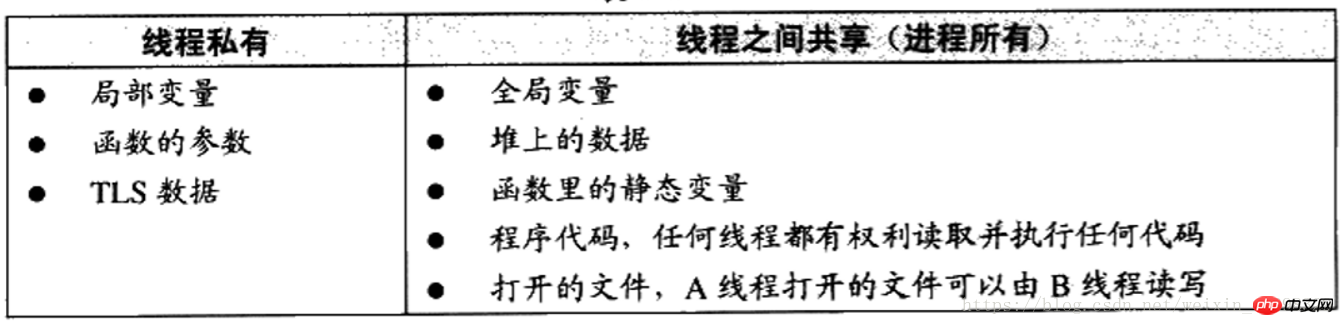
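A minimal sketch of this sharing in C with POSIX threads, assuming a POSIX system and linking with -pthread. The names shared_memory_demo, worker, and worker_arg are hypothetical. Two threads write into one global array and into one heap buffer allocated by the creating thread, while each thread's local variable lives on its own private stack.

```c
#include <pthread.h>
#include <stdlib.h>

/* Global variable: a single copy exists per process, visible to every thread. */
static int shared_global[2];

struct worker_arg {
    int index;      /* which slot this thread writes */
    int *heap_buf;  /* heap buffer allocated by the creating thread */
};

static void *worker(void *p) {
    struct worker_arg *a = p;
    int local = a->index * 10;             /* on this thread's own stack */
    shared_global[a->index] = local + 1;   /* shared data segment */
    a->heap_buf[a->index] = local + 2;     /* shared heap */
    return NULL;
}

/* Runs two threads, then copies what they wrote into out[0..3].
   Returns 0 on success, -1 on failure. */
int shared_memory_demo(int out[4]) {
    int *heap = malloc(2 * sizeof *heap);
    if (heap == NULL)
        return -1;
    pthread_t tid[2];
    struct worker_arg args[2];
    int created = 0;
    for (int i = 0; i < 2; i++) {
        args[i].index = i;
        args[i].heap_buf = heap;
        if (pthread_create(&tid[i], NULL, worker, &args[i]) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);   /* wait, so the writes are complete */
    if (created < 2) {
        free(heap);
        return -1;
    }
    out[0] = shared_global[0];
    out[1] = shared_global[1];
    out[2] = heap[0];
    out[3] = heap[1];
    free(heap);
    return 0;
}
```

Each thread writes a different slot, so the threads do not race with each other; pthread_join guarantees the main thread sees the finished writes.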

Access permissions of threads

Threads have very free access rights: a thread can access any data in its process's memory, even another thread's stack if it knows that stack's address;
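A minimal sketch of that freedom, assuming POSIX threads: the creating thread passes the address of one of its own local (stack) variables, and the new thread writes through that pointer. The names bump and stack_access_demo are hypothetical. This is safe here only because the creator joins the thread before its stack frame goes away.

```c
#include <pthread.h>

/* The new thread receives a pointer into the creating thread's stack frame. */
static void *bump(void *p) {
    int *counter = p;
    *counter += 41;   /* writes directly into another thread's stack */
    return NULL;
}

/* Returns the stack variable's value after the other thread changed it,
   or -1 if the thread could not be created. */
int stack_access_demo(void) {
    int on_my_stack = 1;   /* local variable of the calling thread */
    pthread_t tid;
    if (pthread_create(&tid, NULL, bump, &on_my_stack) != 0)
        return -1;
    pthread_join(tid, NULL);   /* the frame must outlive the other thread's access */
    return on_my_stack;        /* 1 + 41 = 42 */
}
```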

Thread scheduling and priority

Single processor, multiple threads: when a processor has more threads than it can run at once, the operating system lets the threads execute in turn, each for only a short period (usually tens of milliseconds), so that every thread "appears" to execute simultaneously. Continuously switching between threads on a processor in this way is called "thread scheduling".

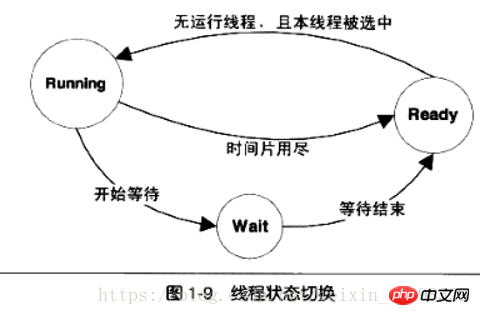
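Taking turns in fixed-length slices, as described above, is essentially round-robin time slicing. The toy simulation below is a sketch of that idea only; real schedulers are far more elaborate. The function name round_robin, the MAX_THREADS limit, and the abstract "time units" are assumptions made for illustration.

```c
#define MAX_THREADS 16

/* Toy model of time slicing on one CPU: threads take turns in a fixed cycle,
   each running for at most `quantum` time units per turn.
   burst[i] is the CPU time thread i still needs (hypothetical units).
   order[] receives the id of the thread that runs in each slice.
   Returns the number of slices, or -1 if the buffers are too small. */
int round_robin(const int burst[], int n, int quantum,
                int order[], int max_slices) {
    if (n > MAX_THREADS || quantum <= 0)
        return -1;
    int remaining[MAX_THREADS];
    int left = 0;                        /* threads that still need CPU time */
    for (int i = 0; i < n; i++) {
        remaining[i] = burst[i];
        if (remaining[i] > 0)
            left++;
    }
    int slices = 0;
    while (left > 0) {
        for (int i = 0; i < n; i++) {
            if (remaining[i] <= 0)
                continue;                /* already finished */
            if (slices == max_slices)
                return -1;
            order[slices++] = i;         /* thread i gets the CPU... */
            remaining[i] -= quantum;     /* ...for one time slice */
            if (remaining[i] <= 0)
                left--;
        }
    }
    return slices;
}
```

With bursts {3, 1, 2} and a quantum of 1, the CPU runs threads 0, 1, 2, 0, 2, 0: every thread makes progress in each round, which is what makes them look simultaneous.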

In thread scheduling, a thread is in one of three states:

(1) Running: the thread is currently executing.

(2) Ready: the thread could run immediately, but the CPU is occupied.

(3) Waiting: the thread is waiting for some event (usually I/O or synchronization) to occur and cannot execute.

Whenever a thread leaves the running state, the scheduler selects a ready thread to run. A running thread that uses up its time slice returns to the ready state; one that starts waiting for an event before its time slice ends enters the waiting state. A thread in the waiting state enters the ready state once the event occurs.
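The three states and their transitions can be written as a small state machine. This is a toy model that assumes exactly four events (the enum names are hypothetical); it does not reflect how any particular kernel encodes thread state.

```c
enum thread_state { RUNNING, READY, WAITING };
enum sched_event  { SCHEDULED, SLICE_EXPIRED, START_WAIT, EVENT_OCCURRED };

/* Returns the next state; an event that does not apply leaves the state unchanged. */
enum thread_state next_state(enum thread_state s, enum sched_event e) {
    switch (s) {
    case READY:                       /* picked by the scheduler */
        return e == SCHEDULED ? RUNNING : READY;
    case RUNNING:
        if (e == SLICE_EXPIRED)       /* time slice used up */
            return READY;
        if (e == START_WAIT)          /* blocks on I/O or synchronization */
            return WAITING;
        return RUNNING;
    case WAITING:                     /* never jumps straight to RUNNING */
        return e == EVENT_OCCURRED ? READY : WAITING;
    }
    return s;
}
```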

Under priority scheduling, a thread's priority is generally changed in three ways:

User-specified priority;

Raising or lowering the priority according to how often the thread enters the waiting state;

Raising the priority of a thread that has not been executed for a long time, so that it does not starve;
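The three rules can be sketched as a toy model. Everything numeric here is hypothetical: the range 0 to 31, the one-step adjustments, and the starvation limit of 100 ticks. The interpretation of the second rule is also an assumption: a thread that blocks before its time slice ends (typically I/O-bound) is boosted, and one that always uses its full slice (CPU-bound) is lowered.

```c
#include <stdbool.h>

/* Hypothetical toy values: higher number means higher priority. */
enum { PRIO_MIN = 0, PRIO_MAX = 31, STARVATION_LIMIT = 100 };

struct toy_thread {
    int priority;        /* current, dynamically adjusted priority */
    int ticks_not_run;   /* scheduler ticks since the thread last ran */
};

static int clamp(int p) {
    return p < PRIO_MIN ? PRIO_MIN : p > PRIO_MAX ? PRIO_MAX : p;
}

/* Rule 1: the user sets the priority explicitly. */
void set_user_priority(struct toy_thread *t, int p) {
    t->priority = clamp(p);
    t->ticks_not_run = 0;
}

/* Rule 2: adjust according to whether the thread entered the waiting state early. */
void on_slice_end(struct toy_thread *t, bool blocked_early) {
    t->priority = clamp(t->priority + (blocked_early ? 1 : -1));
    t->ticks_not_run = 0;   /* it just ran */
}

/* Rule 3: a thread passed over for too long gets a boost. */
void on_tick_waiting(struct toy_thread *t) {
    if (++t->ticks_not_run >= STARVATION_LIMIT) {
        t->priority = clamp(t->priority + 1);
        t->ticks_not_run = 0;
    }
}
```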

Multithreading in Linux

Under Linux, a new task can be created in the following three ways:

(1) fork: copy the current process;

(2) exec: overwrite the current executable image with a new executable image;

(3) clone: create a child process and start its execution at a specified location;
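clone is only named in the list above, so here is a minimal Linux-only sketch, assuming glibc's clone wrapper (which requires _GNU_SOURCE). With CLONE_VM the child runs child_fn inside the parent's address space, so its write is visible to the parent; putting SIGCHLD in the flags lets the parent wait for it with waitpid. The 64 KiB stack size and the function names are hypothetical.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/wait.h>

static int shared_value;

/* Entry point of the cloned task; its return value becomes its exit status. */
static int child_fn(void *arg) {
    shared_value = *(int *)arg;   /* with CLONE_VM this writes the parent's memory */
    return 0;
}

/* Returns the value the child wrote, or -1 on failure. */
int clone_demo(void) {
    const size_t stack_size = 64 * 1024;
    char *stack = malloc(stack_size);
    if (stack == NULL)
        return -1;
    int arg = 99;
    shared_value = 0;
    /* The stack grows downward on common architectures, so pass its top. */
    pid_t pid = clone(child_fn, stack + stack_size, CLONE_VM | SIGCHLD, &arg);
    if (pid == -1) {
        free(stack);
        return -1;
    }
    waitpid(pid, NULL, 0);   /* the child's stack must stay valid until it exits */
    free(stack);
    return shared_value;     /* 99: written by the child into our address space */
}
```

Dropping CLONE_VM would give the child its own copy of memory, like fork, and the parent would still see 0.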

fork:

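pid_t pid = fork();

if (pid > 0) { … }        /* original task: pid is the new task's ID */

else if (pid == 0) { … }  /* new task: fork returned 0 */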

After fork is called, a new task starts and returns from fork together with the original task. The difference is that in the original task fork returns the pid of the new task, while in the new task it returns 0;
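A runnable sketch of the two return values, assuming a POSIX system: the child takes the pid == 0 branch and exits with a marker code, and the parent, which received the child's pid, waits for it and reads that code. The function name fork_demo and the code 7 are hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Returns the child's exit status as seen by the parent, or -1 on error. */
int fork_demo(void) {
    pid_t pid = fork();
    if (pid == -1)
        return -1;          /* fork failed: no new task exists */
    if (pid == 0)
        _exit(7);           /* new task: fork returned 0 */
    int status;             /* original task: pid is the new task's ID */
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);   /* 7 */
}
```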

fork creates new tasks very quickly because it does not copy the original task's memory space; instead, the new task shares that memory with the original task in copy-on-write mode;

Copy-on-write means that both tasks can freely read the memory at the same time, but as soon as either task attempts to modify it, a private copy of that memory is made for the modifying task, so the other task is not affected;
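Copy-on-write itself is invisible to the program; what a program can observe is its guarantee that one task's write does not affect the other. A minimal sketch, assuming a POSIX system (names hypothetical): the child changes a variable and reports what it sees through its exit status, and the parent checks its own copy afterwards.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* volatile forces real stores to memory rather than register-only updates. */
static volatile int cow_value;

/* Fills in what the parent and the child each see after the child writes.
   Returns 0 on success, -1 on failure. */
int cow_demo(int *parent_sees, int *child_sees) {
    cow_value = 1;                 /* both tasks start from this value */
    pid_t pid = fork();
    if (pid == -1)
        return -1;
    if (pid == 0) {
        cow_value = 42;            /* the child's write lands in its own private copy */
        _exit(cow_value);          /* report the child's view via the exit status */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    *child_sees = WEXITSTATUS(status);   /* 42 */
    *parent_sees = cow_value;            /* still 1: the parent's copy is untouched */
    return 0;
}
```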

fork can only produce a copy of the current task's image, so exec is needed to start a different program. exec replaces the current executable image with a new one, so after fork creates a new task, the new task can call exec to run a new executable file;
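The fork-then-exec pattern can be sketched as follows, assuming a POSIX system where /bin/sh exists; run_with_exec is a hypothetical name. The child replaces its image with the shell, and the parent waits for the command's exit status.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Runs `command` in a new task via fork + exec.
   Returns the command's exit status, or -1 on failure. */
int run_with_exec(const char *command) {
    pid_t pid = fork();
    if (pid == -1)
        return -1;
    if (pid == 0) {
        /* New task: overwrite this image with /bin/sh running the command. */
        execl("/bin/sh", "sh", "-c", command, (char *)NULL);
        _exit(127);   /* reached only if exec failed */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

For example, run_with_exec("exit 3") returns 3, because exec never returns on success and the shell's exit code becomes the child's.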

The POSIX thread functions are declared in the header pthread.h.

Create a thread: #include <pthread.h>

int pthread_create(pthread_t *thread, const pthread_attr_t *attr, void *(*start_routine)(void *), void *arg)

The thread parameter receives the identifier of the new thread; subsequent pthread_* functions refer to the new thread through it;

The attr parameter sets the attributes of the new thread. Passing NULL means using the default thread attributes;

The start_routine and arg parameters specify, respectively, the function the new thread will run and the argument passed to it;

pthread_create returns 0 when successful and an error code when failed;
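A minimal sketch of calling pthread_create with default attributes and an argument, including the error check; square and square_in_thread are hypothetical names. Note that pthread functions return the error number directly rather than setting errno, which is why strerror is applied to the return value.

```c
#include <pthread.h>
#include <stdio.h>
#include <string.h>

/* start_routine: runs in the new thread and receives `arg`. */
static void *square(void *arg) {
    int *n = arg;
    *n = *n * *n;   /* result written back through the argument */
    return NULL;
}

/* Stores n*n in *result and returns 0, or returns the pthread_create error code. */
int square_in_thread(int n, int *result) {
    pthread_t tid;   /* filled in by pthread_create */
    int value = n;
    int err = pthread_create(&tid, NULL /* default attributes */, square, &value);
    if (err != 0) {
        fprintf(stderr, "pthread_create: %s\n", strerror(err));
        return err;
    }
    pthread_join(tid, NULL);   /* wait before reading value */
    *result = value;
    return 0;
}
```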

End a thread: void pthread_exit(void *retval)

Through the retval parameter, the function passes the thread's exit information to whichever thread reclaims it (for example, a thread that calls pthread_join on it);
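A sketch of how retval reaches the reclaiming thread: the worker ends itself with pthread_exit, and pthread_join's second argument receives the value. Packing a small integer into the pointer through intptr_t is a shortcut chosen for this example (names hypothetical); real code often returns a pointer to heap memory instead.

```c
#include <pthread.h>
#include <stdint.h>

static void *worker(void *arg) {
    intptr_t n = (intptr_t)arg;
    pthread_exit((void *)(n + 1));   /* same effect as returning this value */
}

/* Returns the value the thread passed to pthread_exit, or -1 on failure. */
long exit_value_demo(long n) {
    pthread_t tid;
    void *retval;
    if (pthread_create(&tid, NULL, worker, (void *)(intptr_t)n) != 0)
        return -1;
    if (pthread_join(tid, &retval) != 0)   /* the exit information arrives here */
        return -1;
    return (long)(intptr_t)retval;
}
```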

Thread safety:

A multithreaded program runs in a constantly changing environment: the global variables and heap data it can access may be changed by other threads at any time;

The remedy: synchronization and locks

Atomic: an operation performed by a single instruction is called atomic, because the execution of a single instruction cannot be interrupted. Even a simple increment such as ++i is usually not atomic: the value is read, incremented, and written back, and another thread can intervene between those steps;

To avoid the unpredictable consequences of several threads reading and writing the same data at the same time, we need to synchronize each thread's access to that data (synchronization means that while one thread is accessing a piece of data, no other thread may access the same data). In this way, each access to the data becomes atomic;
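One way to make an increment atomic in C is C11's stdatomic.h, sketched below under the assumption of a C11 compiler with POSIX threads; the thread and iteration counts are arbitrary. atomic_fetch_add performs the whole read-modify-write as one indivisible operation, so no update is lost.

```c
#include <pthread.h>
#include <stdatomic.h>

#define THREADS 4
#define ITERS 100000

/* A plain int incremented with ++ from several threads would be a data race. */
static atomic_int counter;

static void *increment(void *unused) {
    (void)unused;
    for (int i = 0; i < ITERS; i++)
        atomic_fetch_add(&counter, 1);   /* indivisible read-modify-write */
    return NULL;
}

/* Returns THREADS * ITERS when every thread started, or -1 on failure. */
int atomic_count(void) {
    pthread_t tid[THREADS];
    int created = 0;
    atomic_store(&counter, 0);
    for (int i = 0; i < THREADS; i++) {
        if (pthread_create(&tid[i], NULL, increment, NULL) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return created == THREADS ? atomic_load(&counter) : -1;
}
```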

Common synchronization mechanisms: semaphores, mutexes, critical sections, read-write locks, and condition variables.

Lock: each thread first tries to acquire the lock before accessing data or a resource, and releases the lock when the access is complete. If the lock is already held, the thread waits until it becomes available again;
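The acquire-access-release pattern with a POSIX mutex, as a minimal sketch; locked_count and the counts are hypothetical. Every access to counter happens between pthread_mutex_lock and pthread_mutex_unlock, so the non-atomic counter++ is safe.

```c
#include <pthread.h>

#define THREADS 4
#define ITERS 100000

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static long counter;   /* shared data, protected by `lock` */

static void *add(void *unused) {
    (void)unused;
    for (int i = 0; i < ITERS; i++) {
        pthread_mutex_lock(&lock);     /* acquire; waits if another thread holds it */
        counter++;                     /* exclusive access to the data */
        pthread_mutex_unlock(&lock);   /* release when done */
    }
    return NULL;
}

/* Returns THREADS * ITERS when every thread started, or -1 on failure. */
long locked_count(void) {
    pthread_t tid[THREADS];
    int created = 0;
    counter = 0;
    for (int i = 0; i < THREADS; i++) {
        if (pthread_create(&tid[i], NULL, add, NULL) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return created == THREADS ? counter : -1;
}
```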

Semaphore: before accessing a resource, a thread first acquires the semaphore. A semaphore with an initial value of N allows at most N threads to access the resource at the same time; a binary semaphore (initial value 1) allows only one.

Acquiring the semaphore works as follows:

(1) Decrease the value of the semaphore by 1;

(2) If the value of the semaphore is less than 0, enter the waiting state; otherwise, continue execution;

After accessing the resource, the thread releases the semaphore:

(3) Increase the value of the semaphore by 1;

(4) If the value of the semaphore is less than 1, wake up a waiting thread;
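POSIX unnamed semaphores implement this acquire/release pattern with sem_wait and sem_post. One difference from the steps listed: a POSIX semaphore's value never drops below 0, because sem_wait blocks instead of decrementing to a negative number, though the observable behavior is the same. A sketch assuming Linux (unnamed semaphores via sem_init are not supported on macOS); the 2 slots, 6 workers, and function names are arbitrary choices for illustration.

```c
#include <pthread.h>
#include <semaphore.h>

#define WORKERS 6
#define SLOTS 2   /* at most 2 threads may use the resource at once */

static sem_t slots;
static pthread_mutex_t stats_lock = PTHREAD_MUTEX_INITIALIZER;
static int inside, max_inside, finished;

static void *use_resource(void *unused) {
    (void)unused;
    sem_wait(&slots);                  /* acquire: blocks while no slot is free */
    pthread_mutex_lock(&stats_lock);
    if (++inside > max_inside)
        max_inside = inside;
    pthread_mutex_unlock(&stats_lock);

    /* ... use the shared resource here ... */

    pthread_mutex_lock(&stats_lock);
    inside--;
    finished++;
    pthread_mutex_unlock(&stats_lock);
    sem_post(&slots);                  /* release: increments, wakes a waiter if any */
    return NULL;
}

/* Returns the most threads seen inside at once and stores the number
   that completed in *completed; returns -1 if sem_init fails. */
int semaphore_demo(int *completed) {
    if (sem_init(&slots, 0, SLOTS) != 0)   /* 0: shared among threads, not processes */
        return -1;
    inside = max_inside = finished = 0;
    pthread_t tid[WORKERS];
    int created = 0;
    for (int i = 0; i < WORKERS; i++) {
        if (pthread_create(&tid[i], NULL, use_resource, NULL) != 0)
            break;
        created++;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    sem_destroy(&slots);
    *completed = finished;
    return max_inside;
}
```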

Mutex vs. semaphore

Similarity: like a binary semaphore, a mutex allows only one thread at a time to access the resource;

Difference: a semaphore can be acquired and released by any thread in the system. That is, the same semaphore can be acquired by one thread and released by another;

A mutex, by contrast, must be released by the same thread that acquired it; it is invalid for another thread to release it on the owner's behalf;
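In POSIX terms the ownership rule can be observed with an error-checking mutex: pthread_mutex_unlock from a thread that does not own the mutex fails with EPERM, while sem_post is valid from any thread. With the default mutex type the same misuse is undefined behavior, which is why this sketch (function names hypothetical) requests PTHREAD_MUTEX_ERRORCHECK.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <semaphore.h>

static pthread_mutex_t owned;
static sem_t handoff;
static int foreign_unlock_result;

/* Runs in a second thread that never acquired either primitive. */
static void *other_thread(void *unused) {
    (void)unused;
    foreign_unlock_result = pthread_mutex_unlock(&owned);  /* not the owner */
    sem_post(&handoff);                                    /* allowed from any thread */
    return NULL;
}

/* Returns the error code of the non-owner unlock (EPERM expected), or -1. */
int ownership_demo(void) {
    pthread_mutexattr_t attr;
    pthread_mutexattr_init(&attr);
    /* Error-checking type: misuse is reported instead of being undefined. */
    pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_ERRORCHECK);
    pthread_mutex_init(&owned, &attr);
    pthread_mutexattr_destroy(&attr);
    if (sem_init(&handoff, 0, 0) != 0) {
        pthread_mutex_destroy(&owned);
        return -1;
    }

    pthread_mutex_lock(&owned);    /* this thread now owns the mutex */
    pthread_t tid;
    int created = pthread_create(&tid, NULL, other_thread, NULL) == 0;
    if (created) {
        sem_wait(&handoff);        /* blocks until the other thread posts */
        pthread_join(tid, NULL);
    }
    pthread_mutex_unlock(&owned);  /* the owner's unlock succeeds */
    pthread_mutex_destroy(&owned);
    sem_destroy(&handoff);
    return created ? foreign_unlock_result : -1;
}
```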

Critical section: a synchronization mechanism that is even stricter than a mutex.

Acquiring a critical section's lock is called entering the critical section;

and releasing the lock is called leaving the critical section.

Difference from semaphores and mutexes:

Mutexes and semaphores can be visible to every process in the system;

That is, once one process has created a mutex or semaphore, it is legal for another process to try to acquire that lock;

The scope of a critical section, however, is limited to the process that created it; other processes cannot acquire its lock.

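Read-write lock:

A read-write lock can be acquired in two ways: shared and exclusive.

When the lock is free, an attempt to acquire it in either way succeeds and puts the lock into the corresponding state. While it is held in shared mode, another shared acquisition also succeeds, but an exclusive acquisition must wait; while it is held in exclusive mode, every other acquisition must wait;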

Condition variable: as a means of synchronization, a condition variable acts like a fence;

For a condition variable, a thread can perform two operations:

(1) A thread can wait on the condition variable, and one condition variable can be waited on by multiple threads;

(2) A thread can signal (wake up) the condition variable. At this point, one or all of the threads waiting on it are woken up and continue executing. In other words, a condition variable lets many threads wait together for an event to occur; when the event occurs (the condition variable is signaled), all of the threads can resume execution together.
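A sketch with POSIX condition variables, where the "event" is a boolean flag guarded by a mutex (POSIX condition variables are meant to be used with a mutex and a predicate like this). Three waiters is an arbitrary choice, and the function names are hypothetical. pthread_cond_broadcast wakes every waiter; pthread_cond_signal would wake only one.

```c
#include <pthread.h>
#include <stdbool.h>

#define WAITERS 3

static pthread_mutex_t m = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t event_cv = PTHREAD_COND_INITIALIZER;
static bool event_happened;   /* the condition the threads wait for */
static int woken;

static void *waiter(void *unused) {
    (void)unused;
    pthread_mutex_lock(&m);
    while (!event_happened)                 /* loop guards against spurious wakeups */
        pthread_cond_wait(&event_cv, &m);   /* atomically unlocks m and sleeps */
    woken++;                                /* m is held again here */
    pthread_mutex_unlock(&m);
    return NULL;
}

/* Starts the waiters, fires the event once, returns how many resumed. */
int broadcast_demo(void) {
    pthread_t tid[WAITERS];
    int created = 0;
    event_happened = false;
    woken = 0;
    for (int i = 0; i < WAITERS; i++) {
        if (pthread_create(&tid[i], NULL, waiter, NULL) != 0)
            break;
        created++;
    }
    pthread_mutex_lock(&m);
    event_happened = true;               /* the event occurs... */
    pthread_cond_broadcast(&event_cv);   /* ...and all waiting threads resume */
    pthread_mutex_unlock(&m);
    for (int i = 0; i < created; i++)
        pthread_join(tid[i], NULL);
    return woken;
}
```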
