Java garbage collection time can also be easily reduced. An example explains Ali-HBase's GC.

- php是最好的语言 (Original)

- 2018-07-28 16:55:51

How can Java garbage collection time be reduced by 90%? Most Java developers are familiar with GC: the JVM's GC mechanism shields them from the details of memory management and improves development efficiency. This article explains in detail how GC was optimized on Ali-HBase.

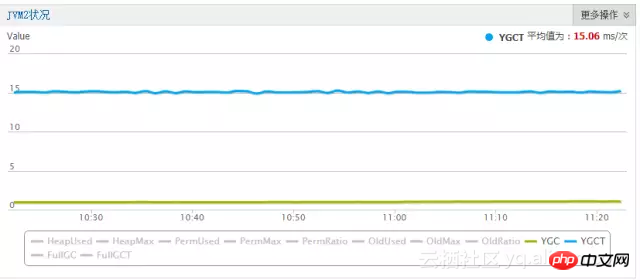

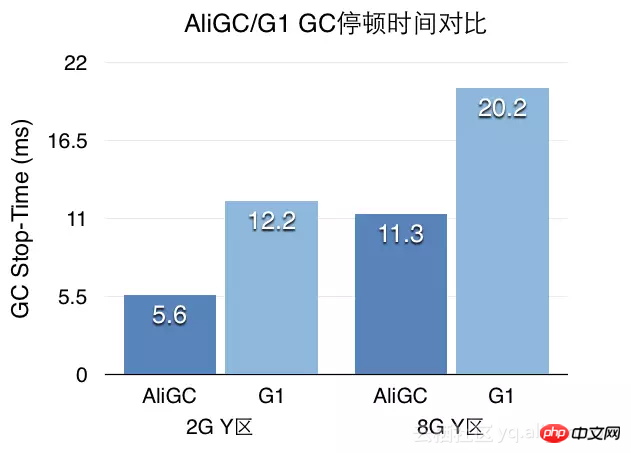

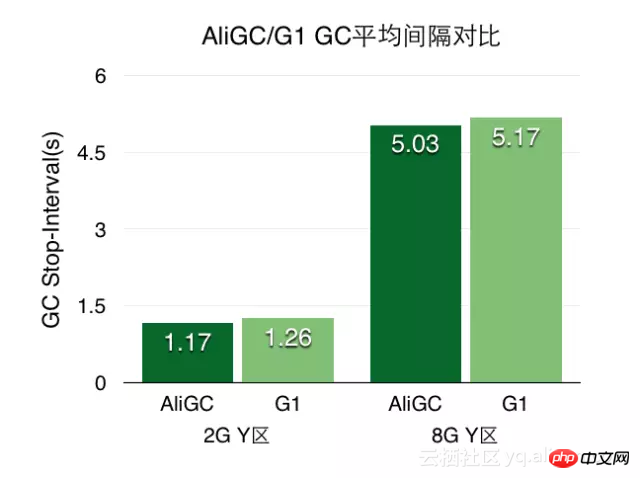

Not long ago, we set out to tackle GC, a widely recognized pain point, on Ali-HBase. We carried out in-depth analysis and a broad range of new work, and achieved good results. Taking the Ant risk control scenario as an example, the online young GC time of HBase was reduced from 120ms to 15ms. Combined with ZenGC, a tool provided by the Alibaba JDK team, it reached 5ms in a laboratory stress test environment. This article introduces our work and technical ideas in this area.

Background

The JVM's GC mechanism shields developers from the details of memory management and improves development efficiency. When GC is mentioned, many people first think of long JVM pauses, or of a full GC (FGC) that leaves the process stuck and unable to serve requests. For big data storage services like HBase, the GC challenges brought by the JVM are especially complex and difficult, for three reasons:

1. The memory size is huge. Most online HBase processes run with large 96G heaps. New machine models launched this year have some heap configurations of more than 160G.

2. The object state is complex. The HBase server maintains large read and write caches internally, reaching tens of GB. HBase serves ordered data in the form of tables, and the data is organized in specific structures. These data structures generate over 100 million objects and references.

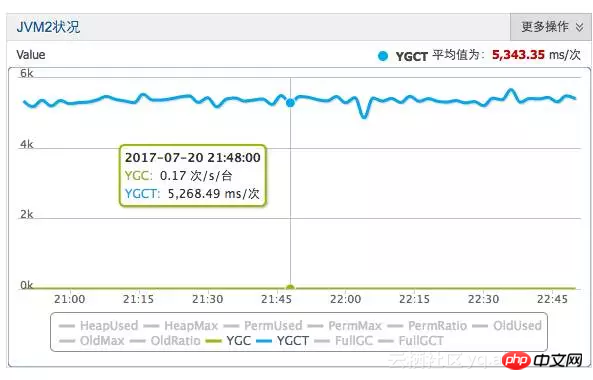

3. Young GC frequency is high. The greater the access pressure, the faster memory in the young area is consumed. Some busy clusters reach 1 to 2 young GCs per second. A larger young area reduces GC frequency, but it causes longer young GC pauses and hurts the business's real-time requirements.

Thoughts

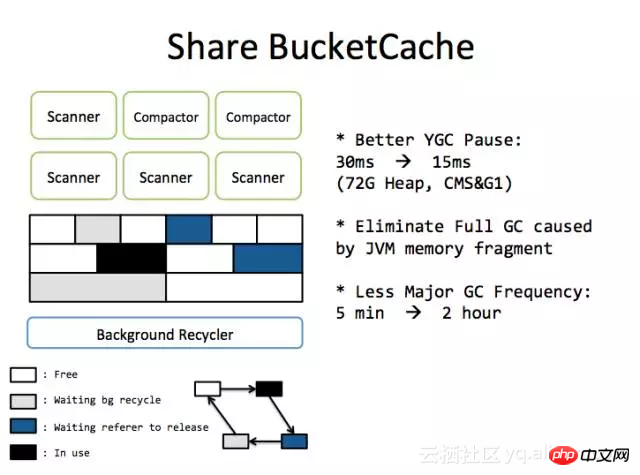

As a storage system, HBase uses a large amount of memory as write buffer and read cache. Take a 96G heap (4G young, 92G old) as an example: the write buffer and read cache occupy more than 70% of the memory (about 70G). Heap occupancy itself is kept at about 85%, so the remaining in-use memory is within 10G. Therefore, if we manage this 70G ourselves at the application level, then for the JVM the GC pressure of a 100G heap becomes equivalent to that of a 10G heap, and it will not get worse as heaps grow larger in the future. With this approach, our online young GC time was reduced from 120ms to 15ms.

In a high-throughput, data-intensive service system, a large number of temporary objects are frequently created and recycled. To manage the allocation and recycling of these temporary objects in a targeted way, the AliJDK team developed ZenGC, a new tenant-based GC algorithm. Alibaba Group's HBase was adapted to this algorithm, and the young GC time we measured in the laboratory dropped from 15ms to 5ms, an unexpectedly strong result.

The following sections introduce, one by one, the key technologies used in the GC optimization of Ali-HBase.

A faster and more economical CCSMap

The storage model currently used by HBase is the LSM tree. Written data is buffered in memory until it reaches a certain size and is then flushed to disk as a file.

We refer to this in-memory buffer as the write cache below. The write cache must be queryable, which requires the data to be ordered in memory. SkipList is a widely used data structure for this purpose: it supports efficient concurrent reads and writes, keeps data ordered, and supports seek and scan.
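To make "ordered, with seek and scan" concrete, here is a minimal sketch built on the JDK's `ConcurrentSkipListMap`. The class name `WriteCacheSketch`, its methods, and the use of `String` keys and values are assumptions for illustration only; HBase's real write cache stores KeyValue cells with its own comparator.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentSkipListMap;

/** Hypothetical write cache backed by the JDK skip list (illustration only). */
final class WriteCacheSketch {
    // Keys are kept sorted; concurrent readers and writers need no external lock.
    private final ConcurrentSkipListMap<String, String> cells = new ConcurrentSkipListMap<>();

    void write(String rowKey, String value) {
        cells.put(rowKey, value);
    }

    /** seek: the first entry whose key is greater than or equal to rowKey, or null. */
    Map.Entry<String, String> seek(String rowKey) {
        return cells.ceilingEntry(rowKey);
    }

    /** scan: all keys in sorted order, starting at startRow (inclusive). */
    Iterable<String> scan(String startRow) {
        return cells.tailMap(startRow, true).keySet();
    }
}
```

Every `put` here allocates several small JVM objects (entry node, index nodes, key, value), which is the cost the rest of this section sets out to remove.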

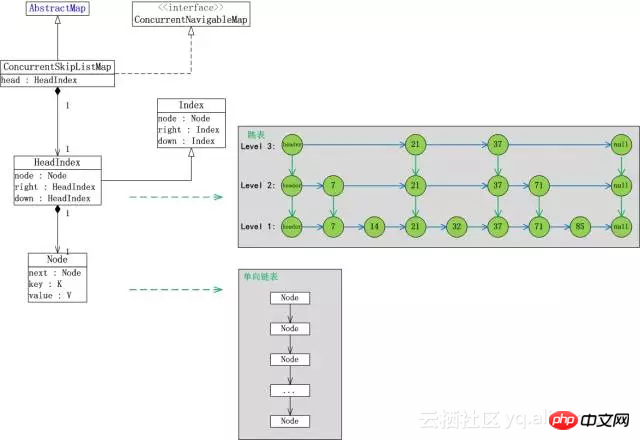

We take the ConcurrentSkipListMap that comes with the JDK as an example for analysis. It has the following three problems:

1. There are many internal objects. Each stored element requires 4 objects on average (Index, Node, Key, and Value, with an average level height of 1).

2. Newly inserted objects are in the young area, while older objects are in the old area. As elements are continuously inserted, the internal reference relationships change frequently. Whether through the CardTable marking of the ParNew algorithm or the RSet marking of the G1 algorithm, this can trigger scans of the old area.

3. The KeyValue elements written by the business are not of regular length. When they are promoted to the old area, a large number of memory fragments may be generated.

Problem 1 makes the object scanning cost of young GC very high and causes more objects to be promoted during young GC. Problem 2 enlarges the part of the old area that must be scanned during young GC. Problem 3 increases the probability of FGC caused by memory fragmentation. All three become more severe when the elements being written are smaller. We collected statistics on an online RegionServer process and found as many as 120 million live objects!

After identifying the biggest enemy of young GC, a bold idea came up. Since the allocation, access, destruction, and recycling of the write cache are all managed by us, if the JVM "cannot see" the write cache and we manage its life cycle ourselves, the GC problem is naturally solved.

When it comes to making memory "invisible" to the JVM, many people think of an off-heap solution. For the write cache this is not that simple: even if the KeyValue is placed off heap, problems 1 and 2 remain, and those two are the biggest problems for young GC.

The question now becomes: how do we build an ordered Map that supports concurrent access without using JVM objects?

We also cannot accept a performance loss, because the write speed of the Map is closely tied to the write throughput of HBase.

So the requirement is tightened again: how do we build an ordered Map that supports concurrent access without using objects and without any performance loss?

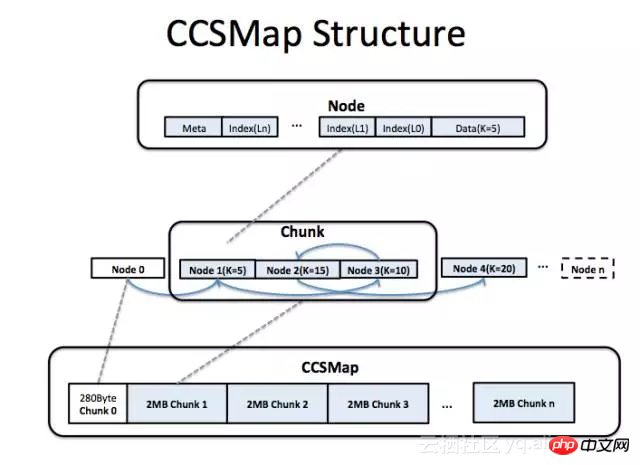

To achieve this goal, we designed a data structure with the following properties:

- It uses contiguous memory (on-heap or off-heap), and we control the internal structure through code rather than relying on the JVM's object mechanism.

- Logically it is still a SkipList, supporting lock-free concurrent writes and queries.

- Control pointers and data are stored together in contiguous memory.

The figure above shows the memory structure of CCSMap (CompactedConcurrentSkipListMap). We request write cache memory in large segments (Chunks). Each Chunk contains multiple Nodes, and each Node corresponds to one element. Newly inserted elements are always placed at the end of the used memory. Each Node has an internal structure that stores maintenance information and data such as Index/Next/Key/Value, so a newly inserted element must be copied into the Node structure. When HBase flushes the write cache, all Chunks of the entire CCSMap are recycled at once. When an element is deleted, we only logically "kick" it out of the linked list and do not actually reclaim its memory (actual reclamation is possible, but HBase has no need for it).
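The chunk layout described here can be sketched as follows. This is a deliberately simplified illustration, not the CCSMap implementation: it is single-threaded, uses one sorted linked level instead of a multi-level skip list, and the class name `ChunkSortedList` and the node layout `[next][keyLen][valLen][key][value]` are assumptions made for the example.

```java
import java.nio.ByteBuffer;

/** Simplified, single-threaded sketch: sorted nodes stored inside one contiguous chunk. */
final class ChunkSortedList {
    private static final int NIL = -1;
    private static final int HEADER = 12; // next offset + key length + value length (3 ints)
    private final ByteBuffer chunk;       // one contiguous memory segment, on-heap or off-heap
    private int head = NIL;               // offset of the first node in key order
    private int used = 0;                 // new nodes are always appended at this offset

    ChunkSortedList(int capacity, boolean offHeap) {
        chunk = offHeap ? ByteBuffer.allocateDirect(capacity) : ByteBuffer.allocate(capacity);
    }

    /** Copies key and value into the chunk, then links the node in key order. */
    boolean put(byte[] key, byte[] value) {
        int size = HEADER + key.length + value.length;
        if (used + size > chunk.capacity()) return false; // chunk is full
        int node = used;
        used += size;
        chunk.putInt(node + 4, key.length);
        chunk.putInt(node + 8, value.length);
        for (int i = 0; i < key.length; i++) chunk.put(node + HEADER + i, key[i]);
        for (int i = 0; i < value.length; i++) chunk.put(node + HEADER + key.length + i, value[i]);
        // Walk to the first node whose key is >= the new key; an equal key ends up behind the new node.
        int prev = NIL;
        int cur = head;
        while (cur != NIL && compare(cur, key) < 0) {
            prev = cur;
            cur = chunk.getInt(cur);
        }
        chunk.putInt(node, cur); // "next" is an offset in the chunk, not a JVM reference
        if (prev == NIL) head = node; else chunk.putInt(prev, node);
        return true;
    }

    /** Returns a copy of the value for key, or null if the key is absent. */
    byte[] get(byte[] key) {
        for (int cur = head; cur != NIL; cur = chunk.getInt(cur)) {
            int c = compare(cur, key);
            if (c > 0) return null; // passed the position where the key would be
            if (c == 0) {
                int keyLen = chunk.getInt(cur + 4);
                byte[] out = new byte[chunk.getInt(cur + 8)];
                for (int i = 0; i < out.length; i++) out[i] = chunk.get(cur + HEADER + keyLen + i);
                return out;
            }
        }
        return null;
    }

    /** Returns a copy of the smallest key, or null if the list is empty. */
    byte[] firstKey() {
        if (head == NIL) return null;
        byte[] out = new byte[chunk.getInt(head + 4)];
        for (int i = 0; i < out.length; i++) out[i] = chunk.get(head + HEADER + i);
        return out;
    }

    /** Unsigned lexicographic comparison of the node's key with the given key. */
    private int compare(int node, byte[] key) {
        int keyLen = chunk.getInt(node + 4);
        int n = Math.min(keyLen, key.length);
        for (int i = 0; i < n; i++) {
            int d = (chunk.get(node + HEADER + i) & 0xff) - (key[i] & 0xff);
            if (d != 0) return d;
        }
        return keyLen - key.length;
    }
}
```

However many elements are inserted, the garbage collector sees only the `ByteBuffer` and the list object itself. Dropping the whole structure at flush time frees everything at once, which matches the recycle-all-Chunks behavior described above.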

Although inserting KeyValue data requires an extra copy, in most cases the copy still makes things faster. In CCSMap, the control node and the KeyValue of an element are adjacent in memory, so the CPU cache is used more efficiently and seeks are faster. For a SkipList, write speed is in fact bounded by seek speed, and the overhead of the copy is far smaller than the seek overhead. In our tests with 50-byte KVs, read and write throughput increased by 20~30% compared with the ConcurrentSkipListMap that comes with the JDK.

Because there are no JVM objects, the at least 16 bytes of header overhead that each JVM object carries (8 bytes for the mark word and 8 bytes for the type pointer) are saved. Taking a 50-byte KeyValue as an example, the memory usage of CCSMap is 40% lower than that of the JDK's ConcurrentSkipListMap.

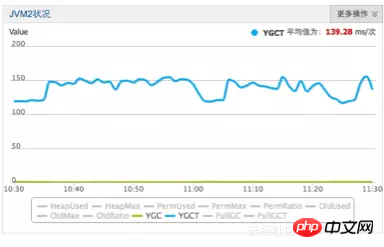

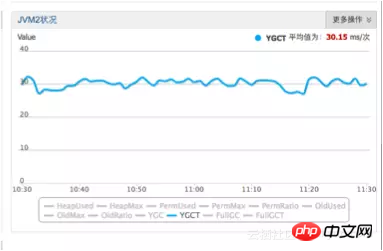

After CCSMap went into production, the measured effect was that young GC time dropped from 120ms to 30ms.

Before optimization

After optimization

With CCSMap, the original 120 million live objects were reduced to fewer than 10 million, greatly reducing GC pressure. Thanks to the compact memory layout, write throughput also improved by 30%.

Cache: BucketCache

HBase organizes data on disk in Blocks. A typical HBase Block is between 16K and 64K. HBase maintains a BlockCache internally to reduce disk I/O. Like the write cache, BlockCache does not fit the generational hypothesis of GC theory and is inherently unfriendly to GC algorithms: its objects are neither short-lived nor permanent.

After a Block is loaded from disk into JVM memory, its life cycle ranges from minutes to months. Most Blocks enter the old area and are only reclaimed by the JVM during Major GC. The trouble they cause is mainly the following:

- HBase Blocks are relatively large and not of fixed size, so memory is easily fragmented.

- In the ParNew algorithm, promotion is troublesome. The trouble lies not in the cost of copying but in the high cost of finding suitable space for such large HBase Blocks.

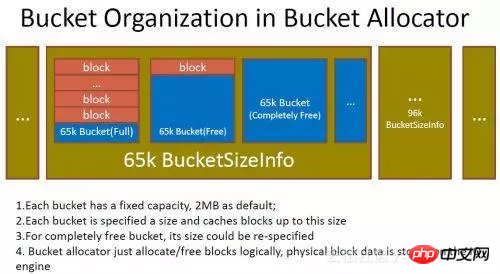

The idea for optimizing the read cache is to request from the JVM a piece of memory that is never returned and use it as the BlockCache. We divide this memory into fixed-size segments ourselves. When a Block is loaded into memory, we copy it into a segment and mark the segment as used. When the Block is no longer needed, we mark the segment as available so that a new Block can be stored there. This is the BucketCache. The design and development of memory allocation and recycling in BucketCache were completed many years ago.
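The copy-into-a-segment and mark-free cycle can be sketched as below. This is a minimal, single-threaded illustration under stated assumptions: the class name `FixedSlotCache` and its methods are hypothetical, and a single slot size is used for brevity. It is not the BucketCache code.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;

/** Hypothetical fixed-size slot cache over one long-lived off-heap region. */
final class FixedSlotCache {
    private final ByteBuffer memory;  // allocated once and never returned to the JVM
    private final int slotSize;
    private final ArrayDeque<Integer> freeSlots = new ArrayDeque<>();

    FixedSlotCache(int slotSize, int slotCount) {
        this.slotSize = slotSize;
        this.memory = ByteBuffer.allocateDirect(slotSize * slotCount);
        for (int i = 0; i < slotCount; i++) freeSlots.add(i);
    }

    /** Copies the block into a free slot; returns the slot index, or -1 if it cannot be stored. */
    int store(byte[] block) {
        if (block.length > slotSize || freeSlots.isEmpty()) return -1;
        int slot = freeSlots.poll();
        ByteBuffer view = memory.duplicate(); // independent position, same underlying memory
        view.position(slot * slotSize);
        view.put(block);
        return slot;
    }

    /** Copies length bytes back out of the given slot. */
    byte[] read(int slot, int length) {
        byte[] out = new byte[length];
        ByteBuffer view = memory.duplicate();
        view.position(slot * slotSize);
        view.get(out);
        return out;
    }

    /** Marks the slot as available again; no memory is released. */
    void free(int slot) {
        freeSlots.add(slot);
    }

    int freeCount() {
        return freeSlots.size();
    }
}
```

Because cached blocks never become individual JVM objects, nothing is promoted to the old area and nothing fragments it. The price is that the application must now decide when a slot is safe to free, which is the problem the reference-counting discussion below addresses.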

BucketCache

Many RPC frameworks based on off-heap memory also manage the allocation and recycling of that memory themselves, usually through explicit release. For HBase this is harder. We treat Block objects as memory segments that must be self-managed, and a Block may be referenced by multiple tasks. The simplest way to solve Block recycling is to copy the Block onto the heap for each task (the copy is generally not promoted to the old area) and hand the copy over to the JVM to manage.

In fact, this is the method we used before. It is simple to implement, backed by the JVM, safe, and reliable. But it is a costly way to manage memory: to solve the GC problem, it introduces a copy for every request. Copying onto the heap costs extra CPU time and extra young area allocation, and that price looks high today, when CPU and bus bandwidth are increasingly precious.

So we turned to reference counting to manage this memory. The main difficulties in HBase are:

- Multiple tasks inside HBase may reference the same Block.

- Multiple variables in the same task may reference the same Block. A reference may be a temporary variable on the stack or an object field on the heap.

- The processing logic around Blocks is relatively complex. A Block is passed between multiple functions and objects as a parameter, a return value, or a field assignment.

- A Block may or may not be managed by us (some Blocks must be released manually and some must not).

- A Block may be converted to a subtype of Block.

Taken together, these points make it a challenge to write correct code. In C++, it is natural to use smart pointers to manage object life cycles. Why is it difficult in Java?

At the user-code level, a variable assignment in Java only copies a reference, whereas a variable assignment in C++ can run the object's constructor and destructor, which can do a great deal of work. Smart pointers are built on exactly this. (Improper use of C++ constructors and destructors also causes many problems; each approach has its own advantages and disadvantages, which are not discussed here.)

So, with C++ smart pointers as a reference, we designed ShrableHolder, a reference management and recycling framework for Blocks that removes the need for scattered if/else handling in the code. It follows this paradigm:

- ShrableHolder can hold both reference-counted and non-reference-counted objects.

- When a ShrableHolder is reassigned, the previously held object is released. If it is a managed object, its reference count is decremented by 1; if not, nothing changes.

- reset must be called on a ShrableHolder when the task or code segment ends.

- A ShrableHolder cannot be assigned directly. The method provided by ShrableHolder must be called to transfer its content.

- Because a ShrableHolder cannot be assigned directly, it cannot be used as a function parameter when a Block with life-cycle semantics needs to be passed into a function.
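The paradigm above can be sketched as follows. The article does not show ShrableHolder's actual API, so everything here is hypothetical: the class names `BlockSketch` and `HolderSketch` and the methods `set`, `moveTo`, and `reset` are invented for illustration and only mirror the listed rules.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical block: only managed blocks take part in reference counting. */
class BlockSketch {
    private final boolean managed;
    private final AtomicInteger refs = new AtomicInteger(0);

    BlockSketch(boolean managed) { this.managed = managed; }

    void retain()  { if (managed) refs.incrementAndGet(); }
    void release() { if (managed) refs.decrementAndGet(); } // a real cache would free the slot at 0
    int refCount() { return refs.get(); }
}

/** Hypothetical holder that follows the paradigm listed above. */
final class HolderSketch {
    private BlockSketch block;

    /** Reassignment: retain the new block, then release the previously held one. */
    void set(BlockSketch next) {
        if (next != null) next.retain();
        if (block != null) block.release();
        block = next;
    }

    /** Transfers the content to another holder; the reference count is unchanged. */
    void moveTo(HolderSketch target) {
        if (target == this) return;
        target.reset();
        target.block = block;
        block = null;
    }

    /** Must be called when the task or code segment ends. */
    void reset() { set(null); }

    BlockSketch get() { return block; }
}
```

Callers do not branch on whether a block is managed, because `retain` and `release` are no-ops for unmanaged blocks. In this sketch, a task would typically wrap its work in `try { holder.set(block); ... } finally { holder.reset(); }` so that the count is always returned.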

Code written according to this paradigm changes very little of the original logic and introduces no if/else. Some complexity remains, but fortunately the affected scope is limited to a small, low-level part of the code, which is acceptable for HBase. To be on the safe side and avoid memory leaks, we added a detection mechanism to this framework that looks for references that have been inactive for a long time. Once found, they are forcibly marked for deletion.

After adopting BucketCache, the promotion overhead of BlockCache dropped and young GC time was reduced.

The GC frequency of ordinary tenants is very high, but because few objects are promoted and there are few cross-generation references, GC time in the young area is well controlled. In a simulated laboratory environment, we optimized young GC to 5ms.

(Effect after ZenGC optimization. Note on units: the values here are in microseconds, us.)
