
Understanding volatile Variables: Java Concurrent Programming and Technology Insider

- php是最好的语言 (original article)

- 2018-07-26 15:29:52


1. Volatile variables

The Java language provides a synchronization mechanism that is weaker than locking: volatile variables, which ensure that updates to a variable are made visible to other threads. When a variable is declared volatile, the compiler and runtime know that the variable is shared, so operations on it are not reordered with other memory operations. Volatile variables are not cached in registers or anywhere else hidden from other processors, so a read of a volatile variable always returns the most recently written value.

No locking is performed when a volatile variable is accessed, so the accessing thread never blocks. This makes volatile a more lightweight synchronization mechanism than the synchronized keyword.

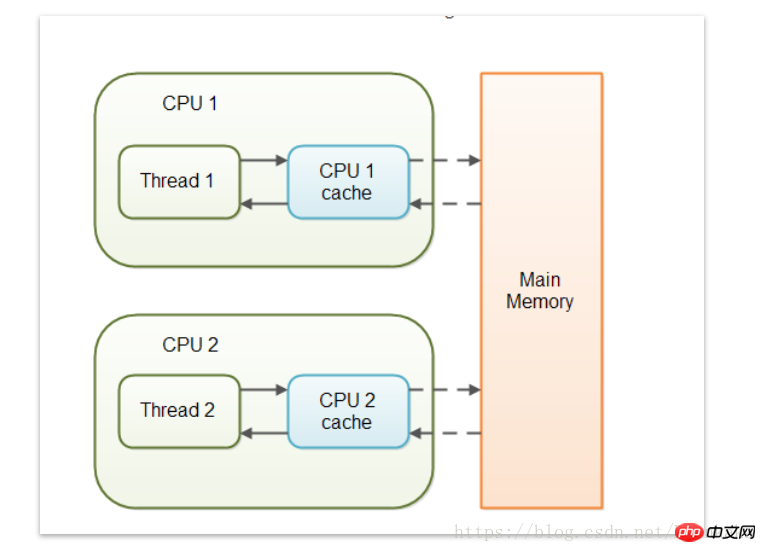

When a thread reads or writes a non-volatile variable, it first copies the variable from main memory into a CPU cache. On a machine with multiple CPUs, threads may run on different CPUs, so each thread may hold its own copy of the variable in a different CPU cache.

Declaring the variable volatile removes this problem. The JVM guarantees that every read of the variable sees the most recent write, as if the read skipped the CPU cache and went straight to main memory.

A variable declared volatile has two properties:

1. It is visible to all threads. As described above, when a thread modifies the variable, volatile ensures that the new value is immediately flushed to main memory and that every read refreshes the value from main memory first. Ordinary variables give no such guarantee. Their values pass between threads through main memory, and thread B sees thread A's update only after A has written the value back and B has re-read it (see the Java Memory Model for details).

2. Instruction-reordering optimizations are disabled for it. On x86, after each write to a volatile variable, HotSpot's JIT compiler emits an extra lock-prefixed instruction such as "lock addl $0x0,(%esp)". This instruction acts as a memory barrier: when instructions are reordered, later instructions cannot be moved ahead of the barrier. When only one CPU accesses the memory, no barrier is needed. (Instruction reordering means that the CPU may dispatch instructions to its execution units in an order different from the one the program specifies.)

Performance of volatile:

Reading a volatile variable costs almost the same as reading an ordinary variable. Writes are somewhat slower, because memory barrier instructions must be inserted into the generated native code to keep the processor from executing out of order.

2. Memory Visibility

Under the Java Memory Model (JMM), all variables are stored in main memory, and each thread has its own working memory (a cache).

While running, a thread copies the data it needs from main memory into its working memory. All of its operations then act on that copy, which is faster. A thread cannot directly manipulate main memory or the working memory of other threads. Updated data is flushed back to main memory later.

As a rough analogy, main memory can be thought of as heap memory and working memory as stack memory. At the hardware level, however, working memory is mostly realized by CPU registers and caches.

Under concurrency, thread B may therefore read a stale value: the value from before thread A updated it.

This clearly causes problems, and this is where volatile comes in:

When a variable is declared volatile, any thread's write to it is immediately flushed to main memory. Copies of the variable cached by other threads are invalidated, so those threads must re-read the latest value from main memory.

Note that volatile does not make a thread read directly from main memory. The thread still copies the variable into its working memory, but that copy is refreshed before each use.

Application of memory visibility

When two threads communicate through a variable in main memory, that variable must be declared volatile:

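```java
import java.util.concurrent.TimeUnit;

public class Test {

    // Uncomment volatile to make the main thread's write visible to the loop.
    private static /*volatile*/ boolean stop = false;

    public static void main(String[] args) throws Exception {
        Thread t = new Thread(() -> {
            int i = 0;
            // Without volatile, the JIT may hoist the read of stop out of the loop.
            // Printing inside the loop can mask the problem in practice,
            // because println acquires a lock internally.
            while (!stop) {
                i++;
            }
            System.out.println("Loop exited after " + i + " iterations");
        });
        t.start();

        TimeUnit.SECONDS.sleep(1);
        System.out.println("Stop Thread");
        stop = true;
    }
}
```

If stop is not declared volatile, the thread may never exit.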
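For comparison, here is a minimal sketch of the same pattern with the field declared volatile, restructured so that it can be run and checked. The class VolatileStop, the method runForMillis, the daemon flag, and the 5-second join timeout are illustrative assumptions, not part of the original example.

```java
import java.util.concurrent.TimeUnit;

// Sketch: the stop-flag pattern with the field declared volatile.
class VolatileStop {

    // volatile guarantees the worker sees the main thread's write to stop
    private static volatile boolean stop = false;

    // Lets a worker spin for roughly `millis` ms, stops it, and returns its iteration count.
    static long runForMillis(long millis) {
        stop = false;
        long[] iterations = new long[1]; // written by the worker, read only after join()
        Thread worker = new Thread(() -> {
            long i = 0;
            while (!stop) {          // volatile read on every iteration
                i++;
            }
            iterations[0] = i;
        });
        worker.setDaemon(true);      // a stuck worker cannot keep the JVM alive
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(millis);
            stop = true;             // volatile write: the worker will observe it
            worker.join(5_000);      // wait at most 5 seconds for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        if (worker.isAlive()) {
            throw new IllegalStateException("worker did not observe stop");
        }
        return iterations[0];
    }

    public static void main(String[] args) {
        System.out.println("Worker exited after " + runForMillis(100) + " iterations");
    }
}
```

A normal return from runForMillis shows that the volatile write reached the worker. It says nothing about whether the non-volatile version would actually hang on a particular JVM, which depends on what the JIT compiler does.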

However, this usage easily leads to a misconception: that concurrent operations on a volatile variable are thread-safe.

It must be emphasized that volatile does not guarantee thread safety, because it does not make compound operations atomic!

Consider the following program:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.IntStream;
// import java.util.concurrent.atomic.AtomicInteger;

public class VolatileInc implements Runnable {

    // Declaring a primitive volatile does not make operations on it atomic
    private static volatile int count = 0;

    // private static AtomicInteger count = new AtomicInteger();

    @Override
    public void run() {
        for (int i = 0; i < 1000; i++) {
            count++; // read, add 1, write back: three steps, not atomic
            // count.incrementAndGet();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        VolatileInc volatileInc = new VolatileInc();
        List<Thread> threads = new ArrayList<>();

        // Start all threads first so that they actually run concurrently
        IntStream.range(0, 100).forEach(i -> {
            Thread t = new Thread(volatileInc, "t" + i);
            threads.add(t);
            t.start();
        });

        // Then wait for all of them to finish
        for (Thread t : threads) {
            t.join();
        }

        System.out.println(count);
    }
}
```

When these 100 threads increment the shared int concurrently, the final value can end up less than 100000.

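This happens because count++ is not an atomic operation, even though volatile guarantees memory visibility and each thread reads the latest value. The increment consists of three steps (read the value, increment it, write it back), and these steps are not performed as a single indivisible action. If two threads both read 5, both compute 6, and both write 6, one of the two increments is lost.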
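To make the lost update concrete, the sketch below replays one possible interleaving of two threads executing count++. It does this by hand, in a single thread, so the result is deterministic. The class LostUpdateDemo and the starting value 5 are hypothetical and chosen only for illustration.

```java
// Sketch: replaying one unlucky interleaving of two count++ operations by hand.
class LostUpdateDemo {

    static int count = 0;

    // Each count++ is really: read the value, add 1, write the result back.
    static int replayInterleaving() {
        count = 5;
        int readByA = count;   // simulated thread A reads 5
        int readByB = count;   // simulated thread B reads 5 (A has not written yet)
        count = readByA + 1;   // A writes 6
        count = readByB + 1;   // B also writes 6, overwriting A's result
        return count;          // 6, although two increments ran
    }

    public static void main(String[] args) {
        System.out.println(replayInterleaving()); // prints 6
    }
}
```

volatile makes each individual read and write visible, but it cannot stop B from reading before A writes. That gap between the read and the write is exactly where the update disappears.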

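To make the code thread-safe, there are three options:

- Run the threads one after another. This is effectively single-threaded and gives up the benefit of multithreading.
- Use synchronized or a lock to make the increment atomic.
- Replace the int with AtomicInteger from the java.util.concurrent.atomic package, which uses the CAS (compare-and-swap) algorithm to guarantee atomicity.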
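Here is a minimal sketch of the lock-based and CAS-based fixes. It assumes a workload of 100 threads that each increment 1000 times (an arbitrary choice), and the names SafeCounters, SyncCounter, CasCounter and runConcurrently are illustrative. The CAS counter spells out a compareAndSet retry loop to show the idea behind AtomicInteger. In real code, calling incrementAndGet() directly is simpler.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch: two thread-safe replacements for "volatile int count; count++".
class SafeCounters {

    // Lock-based counter: read, add and write run as one critical section.
    static final class SyncCounter {
        private int value = 0;

        synchronized void increment() {
            value++;
        }

        synchronized int get() {
            return value;
        }
    }

    // CAS-based counter: retries until no other thread changed the value in between.
    static final class CasCounter {
        private final AtomicInteger value = new AtomicInteger();

        void increment() {
            int current;
            do {
                current = value.get();
            } while (!value.compareAndSet(current, current + 1));
        }

        int get() {
            return value.get();
        }
    }

    // Starts `threads` threads that each call `task` `perThread` times, then joins them all.
    static void runConcurrently(int threads, int perThread, Runnable task) {
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    task.run();
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
    }

    static int countWithSynchronized(int threads, int perThread) {
        SyncCounter counter = new SyncCounter();
        runConcurrently(threads, perThread, counter::increment);
        return counter.get();
    }

    static int countWithCas(int threads, int perThread) {
        CasCounter counter = new CasCounter();
        runConcurrently(threads, perThread, counter::increment);
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithSynchronized(100, 1000)); // 100000
        System.out.println(countWithCas(100, 1000));          // 100000
    }
}
```

The two approaches trade off differently. The lock makes threads wait for each other. The CAS loop never blocks, but it may retry under heavy contention.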

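3. Instruction Reordering

Memory visibility is only one of the semantics of volatile. It also prevents the JVM from applying instruction-reordering optimizations.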

Consider this pseudocode:

```java
int a = 10;    // 1
int b = 20;    // 2
int c = a + b; // 3
```

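This is a very simple piece of code. Ideally it executes in the order 1 > 2 > 3, but after JVM optimization the order might become 2 > 1 > 3.

However the JVM optimizes, it does so only on the condition that the final result within a single thread stays the same. That is why statement 3, which depends on 1 and 2, can never be moved ahead of them.

This may not reveal any problem yet, so look at the next example: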

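```java
import java.util.HashMap;
import java.util.Map;

public class MapInit {

    private static Map<String, String> value;
    private static volatile boolean flag = false;

    // Runs in thread A: initialize the map
    public void initMap() {
        value = getMapValue(); // 1: time-consuming operation
        flag = true;           // 2
    }

    // Runs in thread B: wait until the map is initialized, then use it
    public void doSomeThing() throws InterruptedException {
        while (!flag) {
            Thread.sleep(10);
        }
        useMap(value); // do something with the map
    }

    // Hypothetical stand-in for the time-consuming initialization
    private Map<String, String> getMapValue() {
        Map<String, String> map = new HashMap<>();
        map.put("key", "value");
        return map;
    }

    // Hypothetical stand-in for "do something"
    private void useMap(Map<String, String> map) {
        System.out.println(map.get("key"));
    }
}
```

This shows the problem. If flag is not declared volatile, the JVM may reorder statements 1 and 2, so thread B could use value before it has been initialized.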

Declaring flag volatile prevents this reordering and keeps the program logic correct.
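Here is a runnable sketch of the same hand-off between two threads. A HashMap with one hypothetical entry ("status" → "ready") stands in for the result of getMapValue(), and the class FlagPublication and method runOnce are illustrative names. Because flag is volatile, the write to value (step 1) cannot be moved after the write to flag (step 2), and a thread that reads flag as true is guaranteed to see value as well.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch: publishing a map from thread A to thread B through a volatile flag.
class FlagPublication {

    private static Map<String, String> value;      // plain field, written before the flag
    private static volatile boolean flag = false;  // volatile: orders and publishes the write above

    // Runs one writer/reader pair and returns what the reader found in the map.
    static String runOnce() {
        value = null;
        flag = false;
        String[] seen = new String[1];             // read by the caller only after join()

        Thread reader = new Thread(() -> {         // thread B
            while (!flag) {
                Thread.yield();
            }
            // Having read flag == true, thread B is guaranteed to see the map written before it.
            seen[0] = value.get("status");
        });
        Thread writer = new Thread(() -> {         // thread A
            Map<String, String> m = new HashMap<>();
            m.put("status", "ready");
            value = m;    // 1
            flag = true;  // 2: step 1 cannot be reordered after this volatile write
        });

        reader.start();
        writer.start();
        try {
            writer.join();
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // prints "ready"
    }
}
```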

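Application of preventing instruction reordering

A classic use case is the lazily initialized singleton with double-checked locking: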

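```java
class Singleton {

    // volatile keeps other threads from seeing a non-null but uninitialized instance
    private volatile static Singleton instance = null;

    private Singleton() {
    }

    public static Singleton getInstance() {
        if (instance == null) {                  // first check, without locking
            synchronized (Singleton.class) {
                if (instance == null) {          // second check, under the lock
                    instance = new Singleton();
                }
            }
        }
        return instance;
    }
}
```

Here the volatile keyword mainly serves to prevent instruction reordering. The critical statement is instance = new Singleton(), which is not an atomic operation. In the JVM, it roughly does the following three things: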

1. Allocate memory for the instance.

2. Call the Singleton constructor to initialize the member variables.

3. Point instance at the allocated memory (after this step, instance is no longer null).

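However, the JVM's just-in-time compiler performs instruction-reordering optimizations, so the order of steps 2 and 3 is not guaranteed: execution may be 1-2-3 or 1-3-2. Suppose the order is 1-3-2, and thread two takes over after step 3 has finished but before step 2 has run. At that point instance is already non-null but not yet initialized, so thread two returns instance directly, uses it, and fails. Declaring instance volatile forbids this reordering (under the Java Memory Model as revised in Java 5). No thread can then see a non-null reference to an object whose constructor has not finished.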
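To exercise the volatile version, the sketch below copies the double-checked getInstance() logic into a hypothetical LazySingleton class. It uses a CountDownLatch to release many threads at the same moment and counts how many distinct objects they receive. A test like this can only show that no duplicate instance appeared in one run. It cannot prove the absence of reordering bugs, which is why the reasoning above matters.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;

// Sketch: many threads call the double-checked getInstance() at the same moment.
class LazySingleton {

    private static volatile LazySingleton instance = null;

    private LazySingleton() {
    }

    static LazySingleton getInstance() {
        if (instance == null) {                    // first check, no lock
            synchronized (LazySingleton.class) {
                if (instance == null) {            // second check, under the lock
                    instance = new LazySingleton();
                }
            }
        }
        return instance;
    }

    // Releases `threads` threads together and returns how many distinct instances they received.
    static int distinctInstancesSeen(int threads) {
        Set<LazySingleton> seen = ConcurrentHashMap.newKeySet(); // identity-based: no equals override
        CountDownLatch gate = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    gate.await();                  // block until the gate opens
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                seen.add(getInstance());
            });
            workers[i].start();
        }
        gate.countDown();                          // release all started threads at once
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctInstancesSeen(50)); // prints 1
    }
}
```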


The above is the detailed content of Take you to understand volatile variables-Java concurrent programming and technology insider. For more information, please follow other related articles on the PHP Chinese website!