Backend Development

Backend Development PHP Tutorial

PHP Tutorial What are the differences between clustering, distribution and load balancing? (pictures and text)

What are the differences between clustering, distribution and load balancing? (pictures and text)There is a big difference between clustering, distribution and load balancing in php. In the following article, I will give you a detailed description of the specific differences between clustering, distribution and load balancing. Without further ado, let’s take a look.

The concept of cluster

Computer clusters are connected through a set of loosely integrated computer software and/or hardware to collaborate highly closely to complete computing work. In a sense, they can be thought of as a computer. The individual computers in a clustered system are usually called nodes and are usually connected via a local area network, but other connections are possible. Cluster computers are often used to improve the computing speed and/or reliability of a single computer. In general, cluster computers are much more cost-effective than single computers, such as workstations or supercomputers.

For example, a single heavy-load operation is shared among multiple node devices for parallel processing. After each node device completes processing, the results are summarized and returned to the user. This greatly improves the system's processing capabilities. Generally divided into several types:

High availability cluster: Generally means that when a node in the cluster fails, the tasks on it will automatically be transferred to other normal nodes. . It also means that a node in the cluster can be maintained offline and then brought online again. This process does not affect the operation of the entire cluster.

Load balancing cluster: When the load balancing cluster is running, the workload is generally distributed through one or more front-end load balancers. On a group of servers at the back end, thereby achieving high performance and high availability of the entire system.

High-performance computing cluster: High-performance computing cluster improves computing power by distributing computing tasks to different computing nodes in the cluster, thus Mainly used in the field of scientific computing.

Distributed

Cluster: The same business is deployed on multiple servers. Distributed: A business is split into multiple sub-businesses, or they are different businesses and are deployed on different servers.

Simply put, distribution improves efficiency by shortening the execution time of a single task, while clustering improves efficiency by increasing the number of tasks executed per unit time. For example: Take Sina.com, if there are more people visiting it, it can create a cluster, put a balancing server in the front, and several servers in the back to complete the same business. If there is a business access, the response server will see which server is not heavily loaded. If it is heavy, it will be assigned to which one will complete it. If one server collapses, other servers can step up. Each distributed node completes different services. If one node collapses, the business may fail.

Load Balancing

Concept

With the increase in business volume, the number of visits and data traffic in each core part of the existing network has increased rapidly. Its processing power and computing intensity have also increased accordingly, making it impossible for a single server device to bear it. In this case, if you throw away the existing equipment and do a lot of hardware upgrades, this will cause a waste of existing resources, and if you face the next increase in business volume, this will lead to high costs for another hardware upgrade. Cost investment, even the most excellent equipment cannot meet the needs of current business volume growth.

Load balancing technology virtualizes the application resources of multiple real servers at the backend into a high-performance application server by setting up a virtual server IP (VIP). Through the load balancing algorithm, the user's request is forwarded to the backend intranet server. The intranet server returns the response to the request to the load balancer, and the load balancer then sends the response to the user. This hides the intranet structure from Internet users and prevents users from directly accessing the backend (intranet) server, making the server more secure. , which can prevent attacks on the core network stack and services running on other ports. And the load balancing device (software or hardware) will continuously check the application status on the server and automatically isolate invalid application servers, realizing a simple, scalable and highly reliable application solution that solves the problem. A single server handles the problems of insufficient performance, insufficient scalability, and low reliability.

System expansion can be divided into vertical (vertical) expansion and horizontal (horizontal) expansion. Vertical expansion is to increase the processing power of the server from the perspective of a single machine by increasing hardware processing capabilities, such as CPU processing power, memory capacity, disk, etc., which cannot meet the needs of large distributed systems (websites), large traffic, high concurrency, and massive volumes. Data issues. Therefore, it is necessary to adopt a horizontal expansion method by adding machines to meet the processing capabilities of large-scale website services. For example, if one machine cannot meet the requirements, add two or more machines to share the access pressure.

One of the most important applications of load balancing is to use multiple servers to provide a single service. This solution is sometimes called a server farm. Usually, load balancing is mainly used in Web websites, large Internet Relay Chat networks, high-traffic file download websites, NNTP (Network News Transfer Protocol) services and DNS services. Now load balancers also start to support database services, called database load balancers.

Server load balancing has three basic features: load balancing algorithm, health check and session maintenance. These three features are the basic elements to ensure the normal operation of load balancing. Some other functions are some deepening on these three functions. Below we will introduce the functions and principles of each function in detail.

Before the load balancing device is deployed, users directly access the server address (the server address may be mapped to other addresses on the firewall, but it is essentially one-to-one access). When a single server cannot handle the access of many users due to insufficient performance, it is necessary to consider using multiple servers to provide services. The way to achieve this is load balancing. The implementation principle of the load balancing device is to map the addresses of multiple servers to an external service IP (we usually call it VIP. For server mapping, you can directly map the server IP to a VIP address, or you can map the server IP:Port. into VIP:Port. Different mapping methods will take corresponding health checks. During port mapping, the server port and VIP port can be different). This process is invisible to the user. The user actually does not know what the server has done. Load balancing, because they still access the same destination IP, then after the user's access reaches the load balancing device, how to distribute the user's access to the appropriate server is the job of the load balancing device. Specifically, it uses The three major features mentioned above.

Let’s do a detailed access process analysis:

When a user (IP: 207.17.117.20) accesses the domain name www.a10networks.com, he will first pass DNS query resolves the public address of this domain name: 199.237.202.124. Next, user 207.17.117.20 will access the address 199.237.202.124, so the data packet will reach the load balancing device, and then the load balancing device will distribute the data packet to the appropriate destination. Server, look at the picture below:

When the load balancing device sends the data packet to the server, the data packet has made some changes. As shown in the picture above, the data packet reaches the load balancing Before the device, the source address was: 207.17.117.20, and the destination address was: 199.237.202.124. When the load balancing device forwarded the data packet to the selected server, the source address was still: 207.17.117.20, and the destination address changed to 172.16.20.1. We This method is called destination address NAT (DNAT, destination address translation). Generally speaking, DNAT must be done in server load balancing (there is another mode called server direct return-DSR, which does not do DNAT, we will discuss it separately), and the source address depends on the deployment mode. Sometimes it is also necessary to convert to other addresses, which we call: source address NAT (SNAT). Generally speaking, bypass mode requires SNAT, but serial mode does not. This diagram is in serial mode, so the source address No NAT was done.

Let’s look at the return packet from the server, as shown in the figure below. It has also gone through the IP address conversion process, but the source/destination address in the response packet and the request packet are exactly swapped. The source address of the packet returned from the server is 172.16.20.1 , the destination address is 207.17.117.20. After reaching the load balancing device, the load balancing device changes the source address to 199.237.202.124, and then forwards it to the user, ensuring the consistency of access.

Load balancing algorithm

Generally speaking, load balancing equipment supports multiple load balancing distribution strategies by default, such as:

Polling (RoundRobin) sends requests to each server in a cyclical sequence. When one of the servers fails, AX takes it out of the sequential circular queue and does not participate in the next polling until it returns to normal.

Ratio (Ratio): Assign a weighted value to each server as a ratio. Based on this ratio, allocate user requests to each server. When one of the servers fails, AX takes it out of the server queue and does not participate in the distribution of the next user request until it returns to normal.

Priority: Group all servers, define priorities for each group, and assign user requests to priorities The highest-level server group (within the same group, using a preset polling or ratio algorithm to allocate user requests); when all servers or a specified number of servers in the highest priority level fail, AX will send the request to the next Priority server group. This method actually provides users with a hot backup method.

LeastConnection: AX will record the current number of connections on each server or service port, and new connections will be passed to the server with the smallest number of connections. When one of the servers fails, AX takes it out of the server queue and does not participate in the distribution of the next user request until it returns to normal.

Fast Response time: New connections are passed to those servers with the fastest response. When one of the servers fails, AX takes it out of the server queue and does not participate in the distribution of the next user request until it returns to normal.

Hash algorithm (hash): Hash the client’s source address and port, and forward the result to a Each server is processed. When one of the servers fails, it is taken out of the server queue and does not participate in the distribution of the next user request until it returns to normal.

Content distribution based on data packets: For example, judging the HTTP URL, if the URL has a .jpg extension, then The packet is forwarded to the specified server.

Health Check

Health check is used to check the availability status of various services opened by the server. Load balancing devices are generally configured with various health check methods, such as Ping, TCP, UDP, HTTP, FTP, DNS, etc. Ping belongs to the third layer of health check, used to check the connectivity of the server IP, while TCP/UDP belongs to the fourth layer of health check, used to check the UP/DOWN of the service port. If you want to check more accurately, you need to use To a health check based on layer 7, for example, create an HTTP health check, get a page back, and check whether the page content contains a specified string. If it does, the service is UP. If it does not contain or the page cannot be retrieved, It is considered that the server's Web service is unavailable (DOWN). For example, if the load balancing device detects that port 80 of the server 172.16.20.3 is DOWN, the load balancing device will not forward subsequent connections to this server, but will forward the data packets to other servers based on the algorithm. When creating a health check, you can set the check interval and number of attempts. For example, if you set the interval to 5 seconds and the number of attempts to 3, then the load balancing device will initiate a health check every 5 seconds. If the check fails, it will try 3 times. , if the check fails three times, the service will be marked as DOWN, and then the server will still check the DOWN server every 5 seconds. When it is found that the server health check is successful again at a certain moment, the server will be re-marked. for UP. The interval and number of health check attempts should be set according to the comprehensive situation. The principle is that it will neither affect the business nor cause a large burden on the load balancing equipment.

Session persistence

How to ensure that two http requests from a user are forwarded to the same server requires the load balancing device to configure session persistence.

Session persistence is used to maintain the continuity and consistency of sessions. Since it is difficult to synchronize user access information in real time between servers, it is required to keep the user's previous and subsequent access sessions on one server for processing. For example, when a user visits an e-commerce website, if the user logs in, it is processed by the first server, but the user's purchase of goods is processed by the second server. Since the second server does not know the user's information, So this purchase will not be successful. In this case, the session needs to be maintained, and the user's operations must be processed by the first server to succeed. Of course, not all access requires session maintenance. For example, if the server provides static pages such as the news channel of the website, and each server has the same content, such access does not require session maintenance.

Most load balancing products support two basic types of session persistence: source/destination address session persistence and cookie session persistence. In addition, hash, URL Persist, etc. are also commonly used methods, but not all devices support them. . Different session retention must be configured based on different applications, otherwise it will cause load imbalance or even access exceptions. We mainly analyze the session maintenance of the B/S structure.

Application based on B/S structure:

For application content with ordinary B/S structure, such as static pages of websites, you do not need to configure any session persistence. However, for a business system based on B/S structure, especially middleware platform, session persistence must be configured. , Under normal circumstances, we configure source address session retention to meet the needs, but considering that the client may have the above-mentioned environment that is not conducive to source address session retention, using Cookie session retention is a better way. Cookie session persistence will save the server information selected by the load balancing device in a cookie and send it to the client. When the client continues to visit, it will bring the cookie. The load balancer analyzes the cookie to maintain the session to the previously selected server. Cookies are divided into file cookies and memory cookies. File cookies are stored on the hard drive of the client computer. As long as the cookie file does not expire, it can be kept on the same server regardless of whether the browser is closed repeatedly or not. Memory cookies store cookie information in memory. The cookie's survival time begins when the browser is opened and ends when the browser is closed. Since current browsers have certain default security settings for cookies, some clients may stipulate that the use of file cookies is not allowed, so current application development mostly uses memory cookies.

However, memory cookies are not omnipotent. For example, the browser may completely disable cookies for security reasons, so that cookie session retention loses its effect. We can maintain the session through Session-id, that is, use session-id as a url parameter or put it in the hidden field <input type="hidden">, and then analyze the Session-id for distribution.

Another solution is to save each session information in a database. Since this solution will increase the load on the database, this solution is not good at improving performance. The database is best used to store session data for longer sessions. In order to avoid single points of failure in the database and improve its scalability, the database is usually replicated on multiple servers, and requests are distributed to the database servers through a load balancer.

Session persistence based on source/destination address is not very easy to use because customers may connect to the Internet through DHCP, NAT or Web proxy, and their IP addresses may change frequently, which makes the service quality of this solution unguaranteed.

NAT (Network Address Translation, Network Address Translation): When some hosts within the private network have been assigned local IP addresses (that is, private addresses used only within this private network), but Now when you want to communicate with a host on the Internet (without encryption), you can use the NAT method. This method requires NAT software to be installed on the router connecting the private network to the Internet. A router equipped with NAT software is called a NAT router, and it has at least one valid external global IP address. In this way, when all hosts using local addresses communicate with the outside world, their local addresses must be converted into global IP addresses on the NAT router before they can connect to the Internet.

Other benefits of load balancing

High scalability

By adding or reducing the number of servers, you can better cope with high concurrent requests.

(Server) Health Check

The load balancer can check the health of the backend server application layer and remove failed servers from the server pool to improve reliability.

TCP Connection Reuse

TCP connection reuse technology multiplexes HTTP requests from multiple clients on the front end to a TCP connection established between the back end and the server. This technology can greatly reduce the performance load of the server, reduce the delay caused by new TCP connections with the server, minimize the number of concurrent connection requests from the client to the back-end server, and reduce the resource occupation of the server.

Generally, before sending an HTTP request, the client needs to perform a TCP three-way handshake with the server, establish a TCP connection, and then send the HTTP request. The server processes the HTTP request after receiving it and sends the processing result back to the client. Then the client and the server send FIN to each other and close the connection after receiving the ACK confirmation of the FIN. In this way, a simple HTTP request requires more than a dozen TCP packets to be processed.

After adopting TCP connection reuse technology, a three-way handshake is performed between the client (such as ClientA) and the load balancing device and an HTTP request is sent. After receiving the request, the load balancing device will detect whether there is an idle long connection on the server. If it does not exist, the server will establish a new connection. When the HTTP request response is completed, the client negotiates with the load balancing device to close the connection, and the load balancing maintains the connection with the server. When another client (such as ClientB) needs to send an HTTP request, the load balancing device will directly send the HTTP request to the idle connection maintained with the server, avoiding the delay and server resource consumption caused by the new TCP connection.

In HTTP 1.1, the client can send multiple HTTP requests in a TCP connection. This technology is called HTTP Multiplexing. The most fundamental difference between it and TCP connection multiplexing is that TCP connection multiplexing is to multiplex multiple client HTTP requests to a server-side TCP connection, while HTTP multiplexing is to multiplex a client's HTTP requests through a TCP The connection is processed. The former is a unique feature of the load balancing device; while the latter is a new feature supported by the HTTP 1.1 protocol and is currently supported by most browsers.

HTTP Cache

Load balancers can store static content and respond directly to users when they request them without having to make requests to the backend server.

TCP Buffering

TCP buffering is to solve the problem of wasting server resources caused by the mismatch between the back-end server network speed and the customer's front-end network speed. The link between the client and the load balancer has high latency and low bandwidth, while the link between the load balancer and the server uses a LAN connection with low latency and high bandwidth. Since the load balancer can temporarily store the response data of the backend server to the client, and then forward them to those clients with longer response times and slower network speeds, the backend web server can release the corresponding threads to handle other tasks.

SSL Acceleration

Under normal circumstances, HTTP is transmitted on the network in clear text, which may be illegally eavesdropped, especially the password information used for authentication. In order to avoid such security issues, the SSL protocol (ie: HTTPS) is generally used to encrypt the HTTP protocol to ensure the security of the entire transmission process. In SSL communication, asymmetric key technology is first used to exchange authentication information, and the session key used to encrypt data between the server and the browser is exchanged, and then the key is used to encrypt and decrypt the information during the communication process.

SSL is a security technology that consumes a lot of CPU resources. Currently, most load balancing devices use SSL acceleration chips (hardware load balancers) to process SSL information. This method provides higher SSL processing performance than the traditional server-based SSL encryption method, thereby saving a large amount of server resources and allowing the server to focus on processing business requests. In addition, centralized SSL processing can also simplify the management of certificates and reduce the workload of daily management.

Content filtering

Some load balancers can modify the data passing through them as required.

Intrusion prevention function

On the basis of the firewall ensuring network layer/transport layer security, it provides application layer security prevention.

Classification

The following discusses the implementation of load balancing from different levels:

DNS Load Balancing

DNS is responsible for providing domain name resolution services. When accessing a certain site In fact, you first need to obtain the IP address pointed to by the domain name through the DNS server of the site domain name. In this process, the DNS server completes the mapping of the domain name to the IP address. Similarly, this mapping can also be one-to-many. At this time, the DNS server acts as a load balancing scheduler, distributing user requests to multiple servers. Use the dig command to take a look at the DNS settings of "baidu":

It can be seen that baidu has three A records.

The advantages of this technology are that it is simple to implement, easy to implement, low cost, suitable for most TCP/IP applications, and the DNS server can find the server closest to the user among all available A records. . However, its shortcomings are also very obvious. First of all, this solution is not load balancing in the true sense. The DNS server evenly distributes HTTP requests to the background Web servers (or according to geographical location), regardless of the current status of each Web server. Load situation; if the configuration and processing capabilities of the backend Web servers are different, the slowest Web server will become the bottleneck of the system, and the server with strong processing capabilities cannot fully play its role; secondly, fault tolerance is not considered, if a certain Web server in the background fails , the DNS server will still assign DNS requests to this failed server, resulting in the inability to respond to the client. The last point is fatal. It may cause a considerable number of customers to be unable to enjoy Web services, and due to DNS caching, the consequences will last for a long period of time (the general refresh cycle of DNS is about 24 hours). Therefore, in the latest foreign construction center Web site solutions, this solution is rarely used.

Link layer (OSI layer 2) load balancing

Modify the mac address in the data link layer of the communication protocol for load balancing.

When distributing data, do not modify the IP address (because the IP address cannot be seen yet), only modify the target mac address, and configure all back-end server virtual IPs to be consistent with the load balancer IP address, so that the source of the data packet is not modified. address and destination address for the purpose of data distribution.

The actual processing server IP is consistent with the data request destination IP. There is no need to go through the load balancing server for address translation. The response data packet can be returned directly to the user's browser to avoid the load balancing server network card bandwidth becoming a bottleneck. Also called direct routing mode (DR mode). As shown below:

The performance is very good, but the configuration is complicated and it is currently widely used.

Transport layer (OSI layer 4) load balancing

The transport layer is OSI layer 4, including TCP and UDP. Popular transport layer load balancers are HAProxy (this one is also used for application layer load balancing) and IPVS.

Mainly determine the final selected internal server through the destination address and port in the message, plus the server selection method set by the load balancing device.

Taking common TCP as an example, when the load balancing device receives the first SYN request from the client, it selects an optimal server through the above method and modifies the target IP address in the message (changed to Backend server IP), forwarded directly to the server. TCP connection establishment, that is, the three-way handshake is established directly between the client and the server, and the load balancing device only acts as a router-like forwarding action. In some deployment situations, in order to ensure that the server return packet can be correctly returned to the load balancing device, the original source address of the packet may be modified while forwarding the packet.

Application layer (OSI layer 7) load balancing

The application layer is OSI layer 7. It includes HTTP, HTTPS, and WebSockets. A very popular and proven application layer load balancer is Nginx [Engine X = Engine X].

The so-called seven-layer load balancing, also known as "content switching", is mainly based on the truly meaningful application layer content in the message, coupled with the server selection method set by the load balancing device, to determine the final internal selection. server. Note that you can see the complete URL of the specific http request at this time, so you can achieve the distribution shown in the figure below:

Taking common TCP as an example, if the load balancing device wants to be based on the real The application layer content and then select the server can only be seen after the final server and client are proxied to establish a connection (three-way handshake), and then the real application layer content message sent by the client can be seen. field, combined with the server selection method set by the load balancing device, determines the final selection of the internal server. The load balancing device in this case is more similar to a proxy server. The load balancing and front-end clients and back-end servers will establish TCP connections respectively. Therefore, from the perspective of this technical principle, seven-layer load balancing obviously has higher requirements for load balancing equipment, and the ability to handle seven layers will inevitably be lower than the four-layer mode deployment method. So, why do we need layer 7 load balancing?

The benefit of seven-layer load balancing is to make the entire network more "intelligent". For example, most of the benefits of load balancing listed above are based on seven-layer load balancing. For example, user traffic visiting a website can forward requests for images to a specific image server and use caching technology through the seven-layer approach; requests for text can be forwarded to a specific text server and compression can be used. technology. Of course, this is just a small case of a seven-layer application. From a technical perspective, this method can modify the client's request and the server's response in any sense, greatly improving the flexibility of the application system at the network layer.

Another feature that is often mentioned is security. The most common SYN Flood attack in the network is that hackers control many source clients and use false IP addresses to send SYN attacks to the same target. Usually, this attack will send a large number of SYN messages and exhaust related resources on the server to achieve Denial. of Service (DoS) purpose. It can also be seen from the technical principles that in the four-layer mode, these SYN attacks will be forwarded to the back-end server; in the seven-layer mode, these SYN attacks will naturally end on the load balancing device and will not affect the normal operation of the back-end server. . In addition, the load balancing device can set multiple policies at the seven-layer level to filter specific messages, such as SQL Injection and other application-level attack methods, to further improve the overall system security from the application level.

The current seven-layer load balancing mainly focuses on the widely used HTTP protocol, so its application scope is mainly systems developed based on B/S such as numerous websites or internal information platforms. Layer 4 load balancing corresponds to other TCP applications, such as ERP and other systems developed based on C/S.

Related recommendations:

Summarize the points to note about distributed clusters

The above is the detailed content of What are the differences between clustering, distribution and load balancing? (pictures and text). For more information, please follow other related articles on the PHP Chinese website!

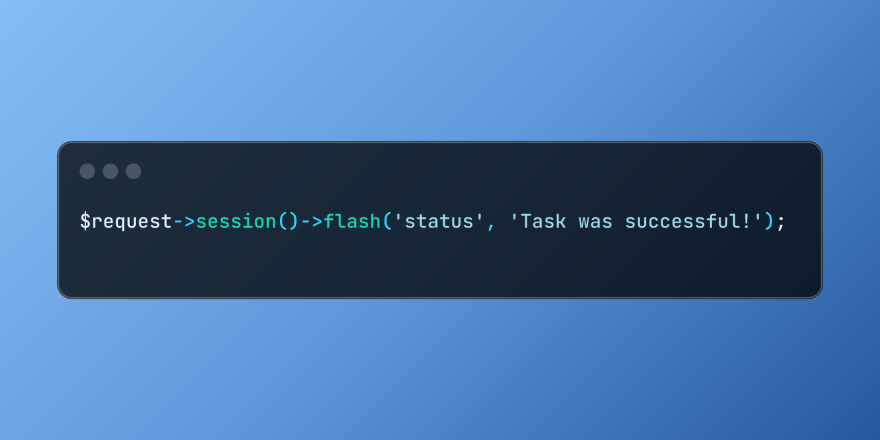

Working with Flash Session Data in LaravelMar 12, 2025 pm 05:08 PM

Working with Flash Session Data in LaravelMar 12, 2025 pm 05:08 PMLaravel simplifies handling temporary session data using its intuitive flash methods. This is perfect for displaying brief messages, alerts, or notifications within your application. Data persists only for the subsequent request by default: $request-

Build a React App With a Laravel Back End: Part 2, ReactMar 04, 2025 am 09:33 AM

Build a React App With a Laravel Back End: Part 2, ReactMar 04, 2025 am 09:33 AMThis is the second and final part of the series on building a React application with a Laravel back-end. In the first part of the series, we created a RESTful API using Laravel for a basic product-listing application. In this tutorial, we will be dev

Simplified HTTP Response Mocking in Laravel TestsMar 12, 2025 pm 05:09 PM

Simplified HTTP Response Mocking in Laravel TestsMar 12, 2025 pm 05:09 PMLaravel provides concise HTTP response simulation syntax, simplifying HTTP interaction testing. This approach significantly reduces code redundancy while making your test simulation more intuitive. The basic implementation provides a variety of response type shortcuts: use Illuminate\Support\Facades\Http; Http::fake([ 'google.com' => 'Hello World', 'github.com' => ['foo' => 'bar'], 'forge.laravel.com' =>

cURL in PHP: How to Use the PHP cURL Extension in REST APIsMar 14, 2025 am 11:42 AM

cURL in PHP: How to Use the PHP cURL Extension in REST APIsMar 14, 2025 am 11:42 AMThe PHP Client URL (cURL) extension is a powerful tool for developers, enabling seamless interaction with remote servers and REST APIs. By leveraging libcurl, a well-respected multi-protocol file transfer library, PHP cURL facilitates efficient execution of various network protocols, including HTTP, HTTPS, and FTP. This extension offers granular control over HTTP requests, supports multiple concurrent operations, and provides built-in security features.

12 Best PHP Chat Scripts on CodeCanyonMar 13, 2025 pm 12:08 PM

12 Best PHP Chat Scripts on CodeCanyonMar 13, 2025 pm 12:08 PMDo you want to provide real-time, instant solutions to your customers' most pressing problems? Live chat lets you have real-time conversations with customers and resolve their problems instantly. It allows you to provide faster service to your custom

Notifications in LaravelMar 04, 2025 am 09:22 AM

Notifications in LaravelMar 04, 2025 am 09:22 AMIn this article, we're going to explore the notification system in the Laravel web framework. The notification system in Laravel allows you to send notifications to users over different channels. Today, we'll discuss how you can send notifications ov

Explain the concept of late static binding in PHP.Mar 21, 2025 pm 01:33 PM

Explain the concept of late static binding in PHP.Mar 21, 2025 pm 01:33 PMArticle discusses late static binding (LSB) in PHP, introduced in PHP 5.3, allowing runtime resolution of static method calls for more flexible inheritance.Main issue: LSB vs. traditional polymorphism; LSB's practical applications and potential perfo

PHP Logging: Best Practices for PHP Log AnalysisMar 10, 2025 pm 02:32 PM

PHP Logging: Best Practices for PHP Log AnalysisMar 10, 2025 pm 02:32 PMPHP logging is essential for monitoring and debugging web applications, as well as capturing critical events, errors, and runtime behavior. It provides valuable insights into system performance, helps identify issues, and supports faster troubleshoot

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

SublimeText3 English version

Recommended: Win version, supports code prompts!

SublimeText3 Mac version

God-level code editing software (SublimeText3)