
How to solve the 403 problem in crawlers

- 零到壹度 (original)

- 2018-04-03 11:25:24

When writing a crawler in Python, a request may fail with HTTP Error 403: Forbidden instead of returning the page (getcode() reports 403). This usually means the website is blocking requests it identifies as coming from an automated crawler. This article explains how to get around the 403 error.

The approach below uses Python's urllib2 module (Python 2 only; in Python 3 its functionality lives in urllib.request and urllib.error).

urllib2 is a higher-level module for fetching URLs, with many methods for building and sending requests.

For example, take the URL http://blog.csdn.net/qysh123.

Requesting this URL directly may return 403 Forbidden.
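In Python 3, urllib2 was split into urllib.request and urllib.error. As a minimal sketch under that assumption, the helpers below show where the 403 surfaces: urlopen() raises urllib.error.HTTPError for 4xx and 5xx responses, and its code attribute holds the status. They also show the User-Agent that urllib sends by default (Python-urllib/<version>), which makes scripted requests easy for a site to recognize. The names default_user_agent and status_code are illustrative, not part of any library.

```python
import urllib.error
import urllib.request


def default_user_agent():
    """Return the User-Agent urllib.request sends when none is set explicitly."""
    # A fresh opener starts with one default header: ("User-agent", "Python-urllib/<version>")
    return dict(urllib.request.build_opener().addheaders)["User-agent"]


def status_code(url):
    """Return the HTTP status for url, including error statuses such as 403."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.getcode()
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses are raised as HTTPError; err.code is the status (e.g. 403)
        return err.code
```

Calling `status_code("http://blog.csdn.net/qysh123")` would return 403 if the site rejects the default User-Agent; network failures such as a DNS error still raise urllib.error.URLError.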

To solve this problem, the following steps are required:

<span style="font-size:18px;">req = urllib2.Request(url)

req.add_header("User-Agent","Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36")

req.add_header("GET",url)

req.add_header("Host","blog.csdn.net")

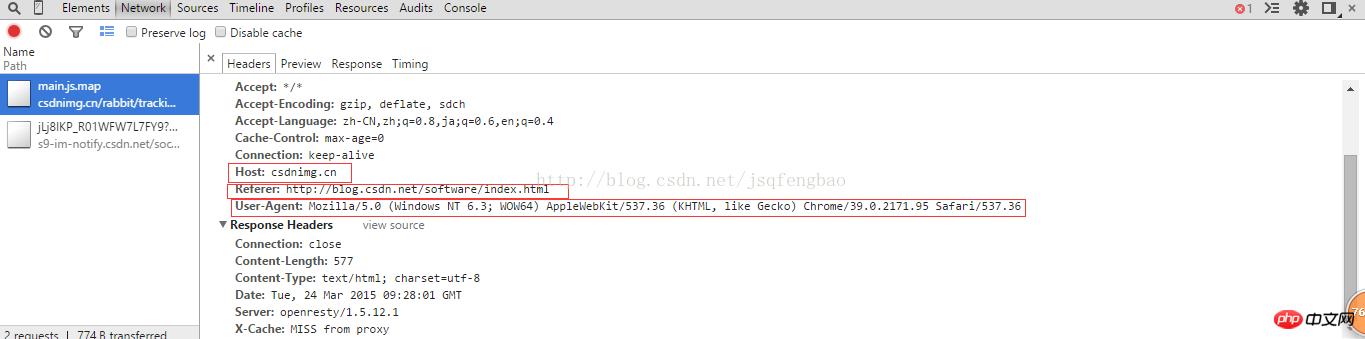

req.add_header("Referer","http://blog.csdn.net/")</span>User-Agent is a browser-specific attribute. You can view the source code through the browser to see

Then open the request and print the response:

```python
html = urllib2.urlopen(req)
print html.read()
```

This downloads the full page source without the 403 Forbidden error.
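A minimal Python 3 sketch of the same steps, assuming urllib.request in place of urllib2. The helper names build_request and fetch are hypothetical. It also illustrates two details: GET is the request method, chosen automatically when no data is sent, and urllib fills in Host from the URL, so neither needs to be added as a header.

```python
import urllib.request

# Header values from the example above; any current browser User-Agent works the same way
BROWSER_UA = ("Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36")


def build_request(url, user_agent=BROWSER_UA, referer="http://blog.csdn.net/"):
    """Build a GET request that carries browser-like headers."""
    # No data argument, so the method is GET; Host is derived from the URL when sent
    return urllib.request.Request(
        url,
        headers={"User-Agent": user_agent, "Referer": referer},
    )


def fetch(url):
    """Download the page and return its body as bytes."""
    with urllib.request.urlopen(build_request(url), timeout=10) as resp:
        return resp.read()
```

For example, `print(fetch("http://blog.csdn.net/qysh123").decode("utf-8", errors="replace"))` prints the page source. Note that urllib normalizes header names with str.capitalize(), so the stored key is "User-agent".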

For the above problems, it can be encapsulated into a function for easy use in future calls. The specific code is:


```python
# -*- coding: utf-8 -*-
import random
import urllib2

url = "http://blog.csdn.net/qysh123/article/details/44564943"

# Browser User-Agent strings to pick from at random
my_headers = [
    "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
    "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
]


def get_content(url, headers):
    """Fetch a page that returns 403 Forbidden to requests without browser headers."""
    random_header = random.choice(headers)
    req = urllib2.Request(url)
    req.add_header("User-Agent", random_header)
    req.add_header("Host", "blog.csdn.net")
    req.add_header("Referer", "http://blog.csdn.net/")
    # No GET header: urllib2 sends a GET request by default when no data is passed
    content = urllib2.urlopen(req).read()
    return content


print get_content(url, my_headers)
```

random.choice() picks one of the predefined browser User-Agent strings for each request. Inside the function, set the Host and Referer headers to match the site you are crawling; GET is the request method, which urllib2 uses by default, so it does not need to be set as a header. With these headers in place, the request goes through and the 403 error no longer appears.
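A Python 3 sketch of get_content(), assuming urllib.request in place of urllib2. It picks a random User-Agent from the same list for each request. The rng parameter and the browser_headers helper are additions for illustration: passing a seeded random.Random makes the choice reproducible, which helps when testing.

```python
import random
import urllib.request

# The same browser User-Agent strings as my_headers above
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36",
    "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:30.0) Gecko/20100101 Firefox/30.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.75.14 (KHTML, like Gecko) Version/7.0.3 Safari/537.75.14",
    "Mozilla/5.0 (compatible; MSIE 10.0; Windows NT 6.2; Win64; x64; Trident/6.0)",
]


def browser_headers(referer="http://blog.csdn.net/", rng=random):
    """Return request headers with a User-Agent chosen at random from USER_AGENTS."""
    return {"User-Agent": rng.choice(USER_AGENTS), "Referer": referer}


def get_content(url, referer="http://blog.csdn.net/", rng=random):
    """Fetch url with a randomly chosen browser User-Agent and return the body bytes."""
    req = urllib.request.Request(url, headers=browser_headers(referer, rng))
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read()
```

By default rng is the random module itself, so each call draws a fresh User-Agent just like the urllib2 version.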

That said, some websites will still block a crawler that sends requests too quickly. Getting around that requires sending requests through proxy IPs; the details are left to the reader.
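As one possible starting point, here is a sketch assuming Python 3's urllib.request: ProxyHandler routes requests through a proxy, and a random pause between requests lowers the request rate. The proxy address 127.0.0.1:8080 and the helper names build_proxy_opener and polite_pause are placeholders, not values from the original.

```python
import random
import time
import urllib.request

# Hypothetical proxy address; replace it with a proxy you are allowed to use
PROXIES = {"http": "http://127.0.0.1:8080", "https": "http://127.0.0.1:8080"}


def build_proxy_opener(proxies=PROXIES):
    """Return an opener that sends requests through the given proxies."""
    return urllib.request.build_opener(urllib.request.ProxyHandler(proxies))


def polite_pause(min_delay=1.0, max_delay=3.0):
    """Sleep for a random interval so requests are not sent back to back."""
    delay = random.uniform(min_delay, max_delay)
    time.sleep(delay)
    return delay
```

In a crawl loop you would call `opener.open(req, timeout=10).read()` on an opener from build_proxy_opener(), then `polite_pause()` before the next request. Rotating through several proxies means building one opener per proxy address.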


The above is the full content of How to solve the 403 problem in crawlers. For more, see other related articles on the PHP Chinese website.