What is load balancing

The more requests a server receives per unit of time, the heavier its load. When the load exceeds the server's capacity, the server crashes. To prevent crashes and give users a better experience, we use load balancing to share the load among several servers.

We can set up a number of servers to form a server cluster. When a user visits the website, the request first goes to an intermediate server. That server picks a lightly loaded server in the cluster and forwards the request to it. This keeps the load on the servers in the cluster roughly even, so the load is shared and no single server crashes.

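Load balancing is implemented with a reverse proxy. Clients send their requests to Nginx, the intermediate server, and Nginx forwards each request to one of the backend servers on the client's behalf.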
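The intermediate server's job can be sketched in a few lines of Python. This is a hedged illustration, not Nginx's implementation. It assumes "less pressure" means the fewest requests currently in flight (a least-connections strategy), and the `Backend` and `LeastLoadedBalancer` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    """A backend server as seen by the intermediate (proxy) server."""
    address: str
    active_requests: int = 0  # requests this backend is currently handling


class LeastLoadedBalancer:
    """Sketch: forward each request to the backend with the fewest in-flight requests."""

    def __init__(self, addresses):
        self.backends = [Backend(a) for a in addresses]

    def acquire(self) -> Backend:
        # min() returns the first minimal element, so ties go to the earlier server.
        backend = min(self.backends, key=lambda b: b.active_requests)
        backend.active_requests += 1
        return backend

    def release(self, backend: Backend) -> None:
        # Call when the backend has finished serving the request.
        backend.active_requests -= 1


balancer = LeastLoadedBalancer(["192.168.0.14", "192.168.0.15"])
first = balancer.acquire()   # 192.168.0.14 (both idle, first one wins)
second = balancer.acquire()  # 192.168.0.15 (fewer active requests)
balancer.release(first)
third = balancer.acquire()   # 192.168.0.14 again
```

`release` must be called when each request completes. Otherwise the counters only grow and the choice stops reflecting real load.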

Several common methods of load balancing

1. Round robin (default)

Requests are assigned to the backend servers one by one, in turn. If a backend server goes down, it is automatically removed from the rotation.
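A minimal Python sketch of round robin follows. It assumes a simple in-memory set records which servers are down; Nginx detects failures itself. The `RoundRobin` class and its `down` set are hypothetical.

```python
class RoundRobin:
    """Minimal round-robin sketch: hand out servers in turn, skipping ones marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.down = set()    # servers currently considered unavailable
        self.next_index = 0  # position of the next server to try

    def pick(self) -> str:
        # Try each server at most once per call, starting where the last call stopped.
        for _ in range(len(self.servers)):
            server = self.servers[self.next_index]
            self.next_index = (self.next_index + 1) % len(self.servers)
            if server not in self.down:
                return server
        raise RuntimeError("no backend server available")


rr = RoundRobin(["192.168.0.14", "192.168.0.15"])
print([rr.pick() for _ in range(4)])  # ['192.168.0.14', '192.168.0.15', '192.168.0.14', '192.168.0.15']
rr.down.add("192.168.0.15")
print([rr.pick() for _ in range(2)])  # ['192.168.0.14', '192.168.0.14']
```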

```nginx
upstream backserver {
    server 192.168.0.14;
    server 192.168.0.15;
}
```

2. Weight

Specifies each server's share of requests, which is proportional to its weight. This is useful when the backend servers differ in performance.

```nginx
upstream backserver {
    server 192.168.0.14 weight=3;
    server 192.168.0.15 weight=7;
}
```

The higher the weight, the larger the share of requests a server receives. In the example above, the two servers receive 30% (3 of 10) and 70% (7 of 10) of the requests, respectively.
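Nginx does not choose servers at random by weight. Its weighted round robin is based on a "smooth" scheme that spreads the picks evenly. The Python sketch below models that scheme with simplifying assumptions: fixed weights and no failure handling. The `WeightedRoundRobin` class is hypothetical.

```python
class WeightedRoundRobin:
    """Sketch of smooth weighted round robin."""

    def __init__(self, weights):
        self.weights = dict(weights)                 # server -> configured weight
        self.current = {s: 0 for s in self.weights}  # running "current weight" per server
        self.total = sum(self.weights.values())

    def pick(self) -> str:
        # Every server gains its weight; the one with the highest running total wins.
        for server, weight in self.weights.items():
            self.current[server] += weight
        best = max(self.current, key=self.current.get)
        # The winner pays back the total, so the other servers catch up over time.
        self.current[best] -= self.total
        return best


wrr = WeightedRoundRobin({"192.168.0.14": 3, "192.168.0.15": 7})
picks = [wrr.pick() for _ in range(10)]
print(picks.count("192.168.0.14"), picks.count("192.168.0.15"))  # 3 7
```

With weights 3 and 7, the heavier server does not get seven requests in a row. The picks interleave (.15, .14, .15, .15, .14, ...) and still total 3 and 7 over every cycle of 10.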

3. ip_hash

The methods above have a problem. In a load-balanced system, every request can be forwarded to any server in the cluster. If a user logs in on one server and a later request goes to a different server, the user's login information is lost. This is clearly unacceptable.

The ip_hash directive solves this problem. Once a client has visited a certain server, a hash algorithm routes its later requests back to that same server.

Each request is assigned according to a hash of the client's IP address. Each visitor therefore consistently reaches the same backend server, which solves the session problem.
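For IPv4 clients, Nginx's ip_hash uses the first three octets of the address as the hash key. For IPv6 it uses the whole address. Clients in the same /24 network therefore share a server. The Python sketch below mimics that keying. `zlib.crc32` and the modulo step are illustrative stand-ins, not Nginx's actual hash function, and `pick_by_client_ip` is a hypothetical name.

```python
import ipaddress
import zlib


def pick_by_client_ip(client_ip: str, servers: list[str]) -> str:
    """Sketch of IP-hash routing: the same client network always maps to the same server."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        # Like Nginx's ip_hash, key on the first three octets of an IPv4 address.
        key = addr.packed[:3]
    else:
        key = addr.packed  # the whole IPv6 address
    # crc32 + modulo is an illustrative stand-in for Nginx's internal hash.
    return servers[zlib.crc32(key) % len(servers)]


servers = ["192.168.0.14:88", "192.168.0.15:80"]
a = pick_by_client_ip("203.0.113.7", servers)
b = pick_by_client_ip("203.0.113.200", servers)  # same /24 network, so same server as a
```

The mapping depends on the number of servers. Adding or removing a server can therefore move existing clients, and their sessions, to a different backend.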

```nginx
upstream backserver {
    ip_hash;
    server 192.168.0.14:88;
    server 192.168.0.15:80;
}
```

4. fair (third party)

Requests are allocated according to the backend servers' response times, and servers with shorter response times are preferred.
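The idea can be sketched in Python as follows. This only illustrates the description above, and the third-party fair module's real algorithm may differ. The `FastestResponder` class and its smoothing factor `alpha` are assumptions.

```python
class FastestResponder:
    """Sketch: prefer the backend with the lowest smoothed response time."""

    def __init__(self, servers, alpha: float = 0.3):
        self.alpha = alpha  # weight of the newest sample in the moving average (assumed value)
        self.avg_ms = {s: None for s in servers}  # None means "not measured yet"

    def pick(self) -> str:
        # Try servers that have no measurements yet before comparing averages.
        untried = [s for s, avg in self.avg_ms.items() if avg is None]
        if untried:
            return untried[0]
        return min(self.avg_ms, key=self.avg_ms.get)

    def record(self, server: str, elapsed_ms: float) -> None:
        # Exponentially weighted moving average of observed response times.
        old = self.avg_ms[server]
        if old is None:
            self.avg_ms[server] = elapsed_ms
        else:
            self.avg_ms[server] = (1 - self.alpha) * old + self.alpha * elapsed_ms


fr = FastestResponder(["server1", "server2"])
fr.record("server1", 120.0)
fr.record("server2", 40.0)
print(fr.pick())  # server2
```

The moving average keeps one slow response from permanently excluding a server, while a sustained slowdown still shifts traffic away from it.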

```nginx
upstream backserver {
    server server1;
    server server2;
    fair;    # provided by a third-party module, not stock Nginx
}
```

5. url_hash (third party)

Requests are distributed according to a hash of the requested URL, so each URL is always directed to the same backend server. This is most effective when the backend servers are caches, because each URL is then cached on only one server.
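Here is a Python sketch of the mapping. It assumes the CRC32 of the URI is reduced modulo the number of servers, in the spirit of the `hash_method crc32` setting; the module's exact mapping may differ. `pick_by_url` is a hypothetical name.

```python
import zlib


def pick_by_url(request_uri: str, servers: list[str]) -> str:
    """Sketch of URL-hash routing: each URI always maps to the same cache server."""
    # CRC32 of the URI bytes, reduced modulo the number of servers.
    return servers[zlib.crc32(request_uri.encode("utf-8")) % len(servers)]


caches = ["squid1:3128", "squid2:3128"]
target = pick_by_url("/img/logo.png", caches)  # the same URI always lands on the same cache
```

Plain modulo mapping reassigns most URLs when a server is added or removed, which effectively empties the caches. Stock Nginx's built-in `hash` directive accepts a `consistent` parameter (ketama consistent hashing) to limit that reshuffling.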

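```nginx
upstream backserver {
    server squid1:3128;
    server squid2:3128;
    hash $request_uri;
    hash_method crc32;    # from the third-party upstream hash module, not stock Nginx
}
```

Each server in an upstream block can be given the following status parameters: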

1. down: the server is marked as unavailable and does not take part in load balancing.

2. weight: defaults to 1. The larger the weight, the larger the share of the load the server receives.

3. max_fails: the number of failed attempts allowed, 1 by default. When it is reached, the server is considered unavailable. What counts as a failed attempt is defined by the proxy_next_upstream directive, and setting max_fails=0 disables this accounting.

4. fail_timeout: the period within which max_fails failures must occur, and also how long the server is then paused (10 seconds by default).

5. backup: the server receives requests only when all non-backup servers are down or busy, so it carries the least load.
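The parameters above can be modelled as a small Python sketch of passive health checking. It is a simplification. `Peer` and `candidates` are hypothetical names, weights are left out, and the sketch only decides which servers are eligible, leaving the choice among them to a balancing method such as round robin. The `192.168.0.16` backup address is made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Peer:
    """One upstream server plus the passive health state a proxy would track."""
    address: str
    down: bool = False          # like "down": never selected
    backup: bool = False        # like "backup": used only when no primary is available
    max_fails: int = 1          # failures allowed within fail_timeout (Nginx default: 1)
    fail_timeout: float = 10.0  # seconds (Nginx default: 10s)
    fails: int = 0              # failures counted in the current window
    window_start: float = 0.0   # when the current failure window began
    unavailable_until: float = 0.0

    def available(self, now: float) -> bool:
        return not self.down and now >= self.unavailable_until

    def record_failure(self, now: float) -> None:
        if self.max_fails == 0:  # max_fails=0 turns failure accounting off
            return
        if self.fails == 0 or now - self.window_start > self.fail_timeout:
            # Start a new fail_timeout window at this failure.
            self.fails = 0
            self.window_start = now
        self.fails += 1
        if self.fails >= self.max_fails:
            # Too many failures in the window: pause the server for fail_timeout.
            self.unavailable_until = now + self.fail_timeout
            self.fails = 0


def candidates(peers: list[Peer], now: float) -> list[Peer]:
    """Available primary servers; backup servers only if no primary is available."""
    primary = [p for p in peers if not p.backup and p.available(now)]
    if primary:
        return primary
    return [p for p in peers if p.backup and p.available(now)]


peers = [
    Peer("192.168.0.14", max_fails=2, fail_timeout=30),
    Peer("192.168.0.15", down=True),
    Peer("192.168.0.16", backup=True),  # hypothetical backup server
]
peers[0].record_failure(now=1.0)
peers[0].record_failure(now=2.0)  # second failure within 30 s: paused until t=32
print([p.address for p in candidates(peers, now=3.0)])   # ['192.168.0.16']
print([p.address for p in candidates(peers, now=40.0)])  # ['192.168.0.14']
```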

Configuration example:

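```nginx
#user  nobody;
worker_processes 4;

events {
    # Maximum number of simultaneous connections per worker process
    worker_connections 1024;
}

http {
    # List of candidate upstream servers
    upstream myproject {
        # ip_hash directive: send requests from the same client to the same server
        ip_hash;
        server 125.219.42.4 fail_timeout=60s;
        server 172.31.2.183;
    }

    server {
        # Listening port
        listen 80;

        # Requests under the root path
        location / {
            # Which upstream server group to forward to
            proxy_pass http://myproject;
        }
    }
}
```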

Nginx reverse proxy and load balancing practice

##nginx four Layer load balancing configuration

Detailed explanation of load balancing Nginx

The above is the detailed content of Several ways for Nginx to achieve load balancing. For more information, please follow other related articles on the PHP Chinese website!

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM

内存飙升!记一次nginx拦截爬虫Mar 30, 2023 pm 04:35 PM本篇文章给大家带来了关于nginx的相关知识,其中主要介绍了nginx拦截爬虫相关的,感兴趣的朋友下面一起来看一下吧,希望对大家有帮助。

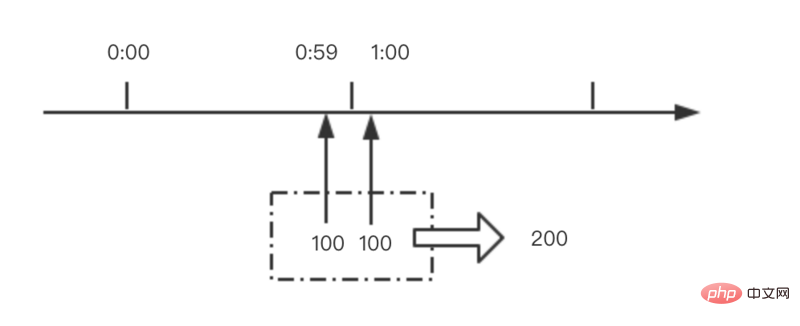

nginx限流模块源码分析May 11, 2023 pm 06:16 PM

nginx限流模块源码分析May 11, 2023 pm 06:16 PM高并发系统有三把利器:缓存、降级和限流;限流的目的是通过对并发访问/请求进行限速来保护系统,一旦达到限制速率则可以拒绝服务(定向到错误页)、排队等待(秒杀)、降级(返回兜底数据或默认数据);高并发系统常见的限流有:限制总并发数(数据库连接池)、限制瞬时并发数(如nginx的limit_conn模块,用来限制瞬时并发连接数)、限制时间窗口内的平均速率(nginx的limit_req模块,用来限制每秒的平均速率);另外还可以根据网络连接数、网络流量、cpu或内存负载等来限流。1.限流算法最简单粗暴的

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM

nginx+rsync+inotify怎么配置实现负载均衡May 11, 2023 pm 03:37 PM实验环境前端nginx:ip192.168.6.242,对后端的wordpress网站做反向代理实现复杂均衡后端nginx:ip192.168.6.36,192.168.6.205都部署wordpress,并使用相同的数据库1、在后端的两个wordpress上配置rsync+inotify,两服务器都开启rsync服务,并且通过inotify分别向对方同步数据下面配置192.168.6.205这台服务器vim/etc/rsyncd.confuid=nginxgid=nginxport=873ho

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AM

nginx php403错误怎么解决Nov 23, 2022 am 09:59 AMnginx php403错误的解决办法:1、修改文件权限或开启selinux;2、修改php-fpm.conf,加入需要的文件扩展名;3、修改php.ini内容为“cgi.fix_pathinfo = 0”;4、重启php-fpm即可。

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM

如何解决跨域?常见解决方案浅析Apr 25, 2023 pm 07:57 PM跨域是开发中经常会遇到的一个场景,也是面试中经常会讨论的一个问题。掌握常见的跨域解决方案及其背后的原理,不仅可以提高我们的开发效率,还能在面试中表现的更加

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PM

nginx部署react刷新404怎么办Jan 03, 2023 pm 01:41 PMnginx部署react刷新404的解决办法:1、修改Nginx配置为“server {listen 80;server_name https://www.xxx.com;location / {root xxx;index index.html index.htm;...}”;2、刷新路由,按当前路径去nginx加载页面即可。

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AM

nginx怎么禁止访问phpNov 22, 2022 am 09:52 AMnginx禁止访问php的方法:1、配置nginx,禁止解析指定目录下的指定程序;2、将“location ~^/images/.*\.(php|php5|sh|pl|py)${deny all...}”语句放置在server标签内即可。

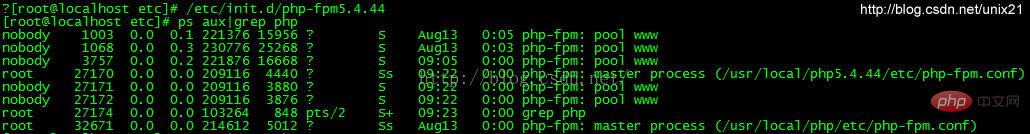

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PM

Linux系统下如何为Nginx安装多版本PHPMay 11, 2023 pm 07:34 PMlinux版本:64位centos6.4nginx版本:nginx1.8.0php版本:php5.5.28&php5.4.44注意假如php5.5是主版本已经安装在/usr/local/php目录下,那么再安装其他版本的php再指定不同安装目录即可。安装php#wgethttp://cn2.php.net/get/php-5.4.44.tar.gz/from/this/mirror#tarzxvfphp-5.4.44.tar.gz#cdphp-5.4.44#./configure--pr

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SublimeText3 Linux new version

SublimeText3 Linux latest version

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment