Backend Development

Backend Development Python Tutorial

Python Tutorial Detailed example of how python3 uses the requests module to crawl page content

Detailed example of how python3 uses the requests module to crawl page contentThis article mainly introduces the actual practice of using python3 to crawl page content using the requests module. It has certain reference value. If you are interested, you can learn more

1. Install pip

My personal desktop system uses linuxmint. The system does not have pip installed by default. Considering that pip will be used to install the requests module later, I will install pip as the first step here.

$ sudo apt install python-pip

The installation is successful, check the PIP version:

$ pip -V

2. Install the requests module

Here I installed it through pip:

$ pip install requests

Run import requests, if no error is prompted, then It means the installation has been successful!

Verify whether the installation is successful

3. Install beautifulsoup4

Beautiful Soup is a tool that can be downloaded from HTML or XML Python library for extracting data from files. It enables customary document navigation, ways to find and modify documents through your favorite converter. Beautiful Soup will save you hours or even days of work.

$ sudo apt-get install python3-bs4

Note: I am using the python3 installation method here. If you are using python2, you can use the following command to install it.

$ sudo pip install beautifulsoup4

4.A brief analysis of the requests module

1) Send a request

First of all, of course, you must import Requests Module:

>>> import requests

Then, get the target crawled web page. Here I take the following as an example:

>>> r = requests.get('http://www.jb51.net/article/124421.htm')

Here returns a response object named r. We can get all the information we want from this object. The get here is the response method of http, so you can also replace it with put, delete, post, and head by analogy.

2) Pass URL parameters

Sometimes we want to pass some kind of data for the query string of the URL. If you build the URL by hand, the data is placed in the URL as key/value pairs, followed by a question mark. For example, cnblogs.com/get?key=val. Requests allow you to use the params keyword argument to provide these parameters as a dictionary of strings.

For example, when we google search for the keyword "python crawler", parameters such as newwindow (new window opens), q and oq (search keywords) can be manually formed into the URL, then you can use the following code :

>>> payload = {'newwindow': '1', 'q': 'python爬虫', 'oq': 'python爬虫'}

>>> r = requests.get("https://www.google.com/search", params=payload)3) Response content

Get the page response content through r.text or r.content.

>>> import requests

>>> r = requests.get('https://github.com/timeline.json')

>>> r.textRequests automatically decode content from the server. Most unicode character sets can be decoded seamlessly. Here is a little addition about the difference between r.text and r.content. To put it simply:

resp.text returns Unicode data;

resp.content returns data of bytes type. It is binary data;

So if you want to get text, you can pass r.text. If you want to get pictures or files, you can pass r.content.

4) Get the web page encoding

>>> r = requests.get('http://www.cnblogs.com/') >>> r.encoding 'utf-8'

5) Get the response status code

We can detect the response status code:

>>> r = requests.get('http://www.cnblogs.com/') >>> r.status_code 200

5. Case Demonstration

The company has just introduced an OA system recently. Here I take its official documentation page as an example, and Only capture useful information such as article titles and content on the page.

Demo environment

Operating system: linuxmint

Python version: python 3.5.2

Using modules: requests, beautifulsoup4

Code As follows:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

_author_ = 'GavinHsueh'

import requests

import bs4

#要抓取的目标页码地址

url = 'http://www.ranzhi.org/book/ranzhi/about-ranzhi-4.html'

#抓取页码内容,返回响应对象

response = requests.get(url)

#查看响应状态码

status_code = response.status_code

#使用BeautifulSoup解析代码,并锁定页码指定标签内容

content = bs4.BeautifulSoup(response.content.decode("utf-8"), "lxml")

element = content.find_all(id='book')

print(status_code)

print(element)The program runs and returns the crawling result:

The crawl is successful

About the problem of garbled crawling results

In fact, at first I directly used the python2 that comes with the system by default, but I struggled for a long time with the problem of garbled encoding of the content returned by crawling, google Various solutions have failed. After being "made crazy" by python2, I had no choice but to use python3 honestly. Regarding the problem of garbled content in crawled pages in python2, seniors are welcome to share their experiences to help future generations like me avoid detours.

The above is the detailed content of Detailed example of how python3 uses the requests module to crawl page content. For more information, please follow other related articles on the PHP Chinese website!

python中CURL和python requests的相互转换如何实现May 03, 2023 pm 12:49 PM

python中CURL和python requests的相互转换如何实现May 03, 2023 pm 12:49 PMcurl和Pythonrequests都是发送HTTP请求的强大工具。虽然curl是一种命令行工具,可让您直接从终端发送请求,但Python的请求库提供了一种更具编程性的方式来从Python代码中发送请求。将curl转换为Pythonrequestscurl命令的基本语法如下所示:curl[OPTIONS]URL将curl命令转换为Python请求时,我们需要将选项和URL转换为Python代码。这是一个示例curlPOST命令:curl-XPOSThttps://example.com/api

Python爬虫Requests库怎么使用May 16, 2023 am 11:46 AM

Python爬虫Requests库怎么使用May 16, 2023 am 11:46 AM1、安装requests库因为学习过程使用的是Python语言,需要提前安装Python,我安装的是Python3.8,可以通过命令python--version查看自己安装的Python版本,建议安装Python3.X以上的版本。安装好Python以后可以直接通过以下命令安装requests库。pipinstallrequestsPs:可以切换到国内的pip源,例如阿里、豆瓣,速度快为了演示功能,我这里使用nginx模拟了一个简单网站。下载好了以后,直接运行根目录下的nginx.exe程序就可

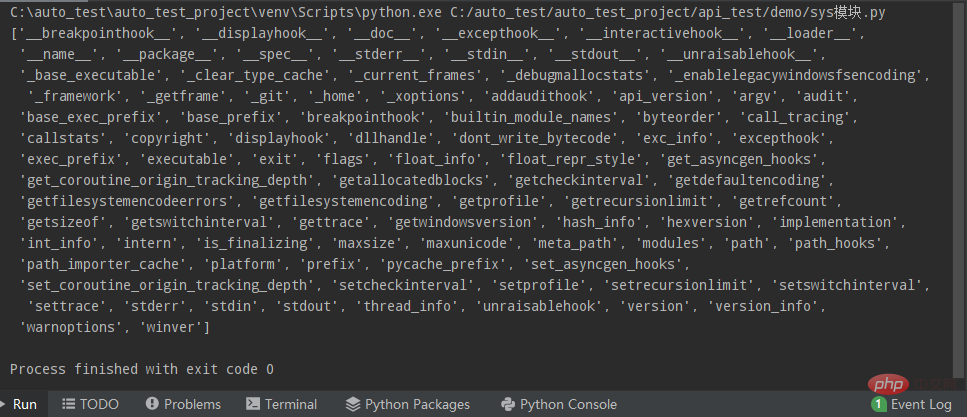

Python常用标准库及第三方库2-sys模块Apr 10, 2023 pm 02:56 PM

Python常用标准库及第三方库2-sys模块Apr 10, 2023 pm 02:56 PM一、sys模块简介前面介绍的os模块主要面向操作系统,而本篇的sys模块则主要针对的是Python解释器。sys模块是Python自带的模块,它是与Python解释器交互的一个接口。sys 模块提供了许多函数和变量来处理 Python 运行时环境的不同部分。二、sys模块常用方法通过dir()方法可以查看sys模块中带有哪些方法:import sys print(dir(sys))1.sys.argv-获取命令行参数sys.argv作用是实现从程序外部向程序传递参数,它能够获取命令行参数列

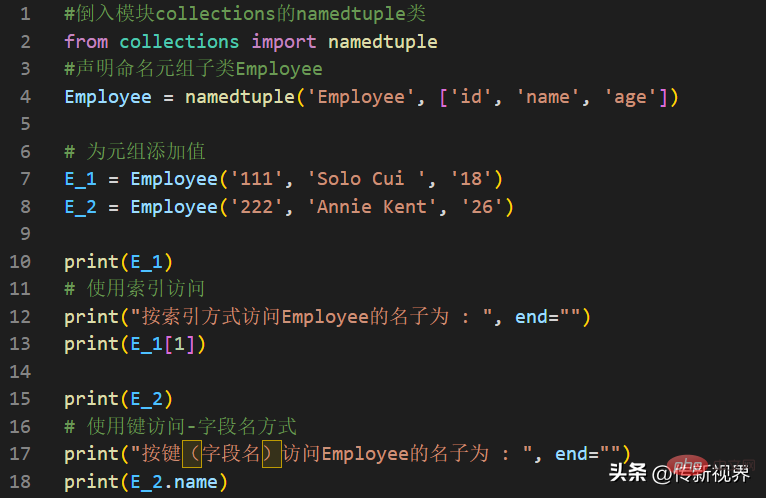

Python编程:详解命名元组(namedtuple)的使用要点Apr 11, 2023 pm 09:22 PM

Python编程:详解命名元组(namedtuple)的使用要点Apr 11, 2023 pm 09:22 PM前言本文继续来介绍Python集合模块,这次主要简明扼要的介绍其内的命名元组,即namedtuple的使用。闲话少叙,我们开始——记得点赞、关注和转发哦~ ^_^创建命名元组Python集合中的命名元组类namedTuples为元组中的每个位置赋予意义,并增强代码的可读性和描述性。它们可以在任何使用常规元组的地方使用,且增加了通过名称而不是位置索引方式访问字段的能力。其来自Python内置模块collections。其使用的常规语法方式为:import collections XxNamedT

Python如何使用Requests请求网页Apr 25, 2023 am 09:29 AM

Python如何使用Requests请求网页Apr 25, 2023 am 09:29 AMRequests继承了urllib2的所有特性。Requests支持HTTP连接保持和连接池,支持使用cookie保持会话,支持文件上传,支持自动确定响应内容的编码,支持国际化的URL和POST数据自动编码。安装方式利用pip安装$pipinstallrequestsGET请求基本GET请求(headers参数和parmas参数)1.最基本的GET请求可以直接用get方法'response=requests.get("http://www.baidu.com/"

python requests post如何使用Apr 29, 2023 pm 04:52 PM

python requests post如何使用Apr 29, 2023 pm 04:52 PMpython模拟浏览器发送post请求importrequests格式request.postrequest.post(url,data,json,kwargs)#post请求格式request.get(url,params,kwargs)#对比get请求发送post请求传参分为表单(x-www-form-urlencoded)json(application/json)data参数支持字典格式和字符串格式,字典格式用json.dumps()方法把data转换为合法的json格式字符串次方法需要

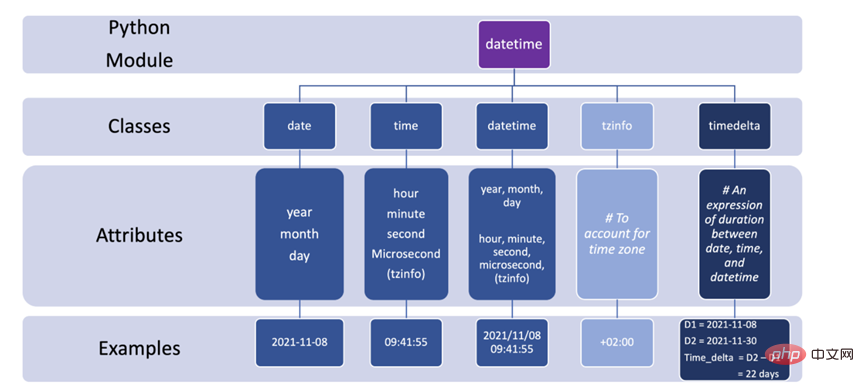

如何在 Python 中使用 DateTimeApr 19, 2023 pm 11:55 PM

如何在 Python 中使用 DateTimeApr 19, 2023 pm 11:55 PM所有数据在开始时都会自动分配一个“DOB”(出生日期)。因此,在某些时候处理数据时不可避免地会遇到日期和时间数据。本教程将带您了解Python中的datetime模块以及使用一些外围库,如pandas和pytz。在Python中,任何与日期和时间有关的事情都由datetime模块处理,它将模块进一步分为5个不同的类。类只是与对象相对应的数据类型。下图总结了Python中的5个日期时间类以及常用的属性和示例。3个有用的片段1.将字符串转换为日期时间格式,也许是使用datet

Python 的 import 是怎么工作的?May 15, 2023 pm 08:13 PM

Python 的 import 是怎么工作的?May 15, 2023 pm 08:13 PM你好,我是somenzz,可以叫我征哥。Python的import是非常直观的,但即使这样,有时候你会发现,明明包就在那里,我们仍会遇到ModuleNotFoundError,明明相对路径非常正确,就是报错ImportError:attemptedrelativeimportwithnoknownparentpackage导入同一个目录的模块和不同的目录的模块是完全不同的,本文通过分析使用import经常遇到的一些问题,来帮助你轻松搞定import,据此,你可以轻松创建属

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 Mac version

God-level code editing software (SublimeText3)

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

SublimeText3 English version

Recommended: Win version, supports code prompts!