How do you build a system that extracts structured information and data from unstructured text? Which methods are suited to this kind of task? Which corpora are suitable for this work? And how can a model be trained and evaluated?

Structured information can be compared to database records: each record ties an entity to its related data through a relation. Unstructured data such as natural language has no such records, so to recover them we have to search the text for the relations that hold between entities and record them in data structures such as strings and tuples.
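To make the database analogy concrete, here is a minimal sketch of relations stored as tuples and then queried. The `Relation` type, the `subjects_with` helper and the sample rows are all made up for illustration; they are not part of NLTK.

```python
from collections import namedtuple

# Hypothetical record type for this sketch: one row per extracted relation.
Relation = namedtuple("Relation", ["subject", "relation", "obj"])

# Made-up sample rows, as they might be extracted from sentences such as
# "Omnicom is located in New York."
records = [
    Relation("Omnicom", "located_in", "New York"),
    Relation("BBDO South", "located_in", "Atlanta"),
    Relation("Georgia-Pacific", "located_in", "Atlanta"),
]


def subjects_with(records, relation, obj):
    """Return the subjects that stand in `relation` to `obj`."""
    return [r.subject for r in records if r.relation == relation and r.obj == obj]


print(subjects_with(records, "located_in", "Atlanta"))
```

Once the text has been reduced to rows like these, a question such as "which organizations are located in Atlanta?" becomes a simple filter.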

Entity recognition: chunking technology

For example, take the sentence "We saw the yellow dog". Following the idea of chunking, the last three words are grouped into an NP chunk, and the words inside it are tagged DT, JJ and NN respectively; "saw" is tagged VBD, and "We" forms an NP of its own. For the last three words, NP is the chunk (the larger unit). To achieve this, you can use NLTK's built-in chunk grammar, which resembles regular expressions, to chunk sentences.
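The chunk structure described above can also be written out by hand as a tree, which may help to picture what a chunker is expected to produce. This is only an illustrative sketch: the PRP tag for "We" is an assumption, since the text does not give it.

```python
from nltk import Tree

# Hand-built chunk structure for "We saw the yellow dog".
# Leaves are (word, POS tag) pairs; the PRP tag for "We" is assumed.
sentence = Tree("S", [
    Tree("NP", [("We", "PRP")]),
    ("saw", "VBD"),
    Tree("NP", [("the", "DT"), ("yellow", "JJ"), ("dog", "NN")]),
])
print(sentence)

# Collect the text of every NP chunk.
chunks = [
    " ".join(word for word, tag in subtree.leaves())
    for subtree in sentence.subtrees(lambda t: t.label() == "NP")
]
print(chunks)
```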

Construction of chunking syntax

Just pay attention to three points:

- Basic chunking: `chunk name: {tag pattern of the tokens inside the chunk}` (a string such as `"NP: {<DT>?<JJ>*<NN>}"`). The symbols `?`, `*` and `+` keep their regular-expression meaning.

```python
import nltk

sentence = [('the', 'DT'), ('little', 'JJ'), ('yellow', 'JJ'), ('dog', 'NN'), ('bark', 'VBD')]

grammar = "NP: {<DT>?<JJ>*<NN>}"
cp = nltk.RegexpParser(grammar)  # build the chunker from the rule
result = cp.parse(sentence)      # chunk the sentence
print(result)

result.draw()  # draw the tree in a separate window (uses Tkinter)
```

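- You can define a chink (gap) for tag sequences that should not be included in a chunk. It is written with the braces reversed, for example `}<VBD|NN>+{`.

```python
import nltk

sentence = [('the', 'DT'), ('little', 'JJ'), ('yellow', 'JJ'), ('dog', 'NN'),
            ('bark', 'VBD'), ('at', 'IN'), ('the', 'DT'), ('cat', 'NN')]

# Add the chink; each rule must stay on its own line.
# Note that this chink also cuts the NN tokens out of the NP chunks.
grammar = """NP:
    {<DT>?<JJ>*<NN>}
    }<VBD|NN>+{
"""
cp = nltk.RegexpParser(grammar)  # build the chunker from the rules
result = cp.parse(sentence)      # chunk the sentence
print(result)
```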
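The effect of a chink is perhaps easier to see in a variant that first chunks everything and then cuts out the unwanted tags. The sketch below follows that pattern; the choice of `VBD` and `IN` as the tags to remove is an assumption made for this example.

```python
import nltk

sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN"),
            ("barked", "VBD"), ("at", "IN"), ("the", "DT"), ("cat", "NN")]

grammar = r"""
NP:
    {<.*>+}        # chunk every token into one big NP
    }<VBD|IN>+{    # then chink (cut out) runs of VBD and IN
"""
cp = nltk.RegexpParser(grammar)
result = cp.parse(sentence)
print(result)

# Words of each remaining NP chunk.
np_chunks = [
    [word for word, tag in subtree.leaves()]
    for subtree in result.subtrees(lambda t: t.label() == "NP")
]
print(np_chunks)
```

Cutting the verb and the preposition out of the single large chunk leaves two separate noun phrases on either side.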

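- Chunk rules can build on one another, for example a `VP` rule that is defined in terms of chunks produced by other rules. In that case, the `loop` parameter of the `RegexpParser` function can be set to 2 so that the rules are applied several times and no chunk is missed.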
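As a sketch of why `loop` matters, the grammar below (adapted from the cascaded-chunker example in the NLTK book, not from this article) contains rules that depend on each other. Parsing the same sentence with the default single pass and with `loop=2` lets you compare how many `CLAUSE` chunks each run finds; `count_label` is a helper written for this example.

```python
import nltk

grammar = r"""
NP: {<DT|JJ|NN.*>+}             # noun phrase
PP: {<IN><NP>}                  # preposition followed by an NP
VP: {<VB.*><NP|PP|CLAUSE>+$}    # verb followed by its arguments
CLAUSE: {<NP><VP>}              # NP followed by a VP
"""

sentence = [("John", "NNP"), ("thinks", "VBZ"), ("Mary", "NN"), ("saw", "VBD"),
            ("the", "DT"), ("cat", "NN"), ("sit", "VB"), ("on", "IN"),
            ("the", "DT"), ("mat", "NN")]

one_pass = nltk.RegexpParser(grammar).parse(sentence)
two_passes = nltk.RegexpParser(grammar, loop=2).parse(sentence)


def count_label(tree, label):
    """Count the subtrees of `tree` that carry the given label."""
    return sum(1 for _ in tree.subtrees(lambda t: t.label() == label))


print(one_pass)
print(two_passes)
print(count_label(one_pass, "CLAUSE"), count_label(two_passes, "CLAUSE"))
```

With a single pass, the `VP` rule runs before any `CLAUSE` exists, so a verb whose argument is a clause is missed; the second pass gives it a chance to match.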

Tree diagram

If you call `print(type(result))` to check the type, you will find that it is `nltk.tree.Tree`. As the name suggests, this is a tree-like structure. `nltk.Tree` implements a tree structure, supports combining trees, and provides node queries and tree drawing.

```python
import nltk

tree1 = nltk.Tree('NP', ['Alick'])
print(tree1)

tree2 = nltk.Tree('N', ['Alick', 'Rabbit'])
print(tree2)

tree3 = nltk.Tree('S', [tree1, tree2])
print(tree3.label())  # view the label of the root node
tree3.draw()
```
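The node queries mentioned above can be sketched as follows. The small tree is made up for this example; the calls used (`label`, `leaves`, `height`, indexing and `subtrees`) are standard `nltk.Tree` methods.

```python
from nltk import Tree

# A small hand-built tree used only for illustration.
tree = Tree("S", [
    Tree("NP", ["Alick"]),
    Tree("VP", ["likes", Tree("NP", ["rabbits"])]),
])

root_label = tree.label()   # label of the root node
words = tree.leaves()       # all leaves, left to right
depth = tree.height()       # a tree that holds only leaves has height 2
inner_np = tree[1][1]       # index like a nested list: second child of the VP

# Query every NP node, at any depth.
np_nodes = list(tree.subtrees(lambda t: t.label() == "NP"))

print(root_label, words, depth)
print(inner_np)
print(np_nodes)
```

Because a `Tree` behaves like a list of its children, the usual list operations such as indexing and `append` also work on it.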

IOB tags
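In the IOB scheme each token gets a chunk tag: B marks the beginning of a chunk, I marks a token inside a chunk, and O marks a token outside any chunk. As a minimal sketch, the example below chunks a made-up sentence with a rule-based chunker and converts the resulting tree to IOB triples and back.

```python
import nltk

sentence = [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"),
            ("dog", "NN"), ("barked", "VBD")]

cp = nltk.RegexpParser("NP: {<DT>?<JJ>*<NN>}")
tree = cp.parse(sentence)

# Tree -> list of (word, POS tag, IOB tag) triples
iob = nltk.chunk.tree2conlltags(tree)
for word, pos, chunk_tag in iob:
    print(word, pos, chunk_tag)

# IOB triples -> Tree again
restored = nltk.chunk.conlltags2tree(iob)
print(restored)
```

The first word of the noun phrase is tagged `B-NP`, the remaining words of the phrase `I-NP`, and the verb `O`.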

Developing and evaluating chunkers

NLTK already provides chunkers, which reduces the need to build rules by hand. It also provides corpora that have already been chunked, which serve as a reference when we build our own rules.

```python
import nltk
from nltk.corpus import conll2000

# View a sentence that has already been chunked
print(conll2000.chunked_sents('train.txt')[99])

# One token per line: word, POS tag, IOB chunk tag
text = """
he PRP B-NP
accepted VBD B-VP
the DT B-NP
position NN I-NP
of IN B-PP
vice NN B-NP
chairman NN I-NP
of IN B-PP
Carlyle NNP B-NP
Group NNP I-NP
, , O
a DT B-NP
merchant NN I-NP
banking NN I-NP
concern NN I-NP
. . O
"""
result = nltk.chunk.conllstr2tree(text, chunk_types=['NP'])
print(result)
```
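The `chunk_types` argument decides which chunk labels are kept when the IOB text is turned into a tree. A small sketch on a shortened version of the sentence above; the `labels` helper is written for this example only.

```python
import nltk

text = """
he PRP B-NP
accepted VBD B-VP
the DT B-NP
position NN I-NP
of IN B-PP
"""


def labels(tree):
    """Return the set of node labels that occur in the tree."""
    return {subtree.label() for subtree in tree.subtrees()}


# Keep only NP chunks; tokens of other chunk types are treated as outside (O).
np_only = nltk.chunk.conllstr2tree(text, chunk_types=["NP"])
# Keep NP, VP and PP chunks.
all_types = nltk.chunk.conllstr2tree(text, chunk_types=["NP", "VP", "PP"])

print(np_only)
print(all_types)
print(labels(np_only), labels(all_types))
```

Restricting `chunk_types` to `NP` is convenient when the chunker under test only produces noun phrases, because the gold data then contains nothing it could not possibly match.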

For the rule-based chunker `cp` defined earlier, you can use `cp.evaluate(conll2000.chunked_sents('train.txt')[99:100])` to test its accuracy (`evaluate` expects a list of chunked sentences, hence the slice). Using the unigram tagger we learned about before, we can also chunk noun phrases and test the accuracy:

```python
import nltk
from nltk.corpus import conll2000


class UnigramChunker(nltk.ChunkParserI):
    """
    Unigram chunker. From the training sentences it learns the most likely
    chunk tag for each part-of-speech tag, then uses that to chunk.
    """

    def __init__(self, train_sents):
        """
        :param train_sents: list of Tree objects
        """
        train_data = []
        for sent in train_sents:
            # Convert the Tree into a list of IOB triples [(word, tag, IOB-tag), ...]
            conlltags = nltk.chunk.tree2conlltags(sent)
            # Keep the IOB tag that goes with each part-of-speech tag
            ti_list = [(t, i) for w, t, i in conlltags]
            train_data.append(ti_list)
        # Train a unigram tagger on the (POS tag, IOB tag) pairs
        self.__tagger = nltk.UnigramTagger(train_data)

    def parse(self, tokens):
        """
        Chunk a sentence.
        :param tokens: list of POS-tagged words
        :return: Tree object
        """
        # Extract the part-of-speech tags
        tags = [tag for (word, tag) in tokens]
        # Assign a chunk tag to each part-of-speech tag
        ti_list = self.__tagger.tag(tags)
        # Extract the IOB tags
        iob_tags = [iob_tag for (tag, iob_tag) in ti_list]
        # Combine into CoNLL-style triples
        conlltags = [(word, pos, iob_tag)
                     for ((word, pos), iob_tag) in zip(tokens, iob_tags)]
        return nltk.chunk.conlltags2tree(conlltags)


test_sents = conll2000.chunked_sents("test.txt", chunk_types=["NP"])
train_sents = conll2000.chunked_sents("train.txt", chunk_types=["NP"])

unigram_chunker = UnigramChunker(train_sents)
print(unigram_chunker.evaluate(test_sents))
```
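To see what the evaluation measures without downloading a corpus, the sketch below scores a deliberately weak rule against one hand-built gold sentence using `nltk.chunk.util.ChunkScore`. The gold tree and the rule are made up for illustration.

```python
import nltk
from nltk.chunk.util import ChunkScore

# Hand-built gold standard with two NP chunks.
gold = nltk.Tree("S", [
    nltk.Tree("NP", [("the", "DT"), ("little", "JJ"), ("yellow", "JJ"), ("dog", "NN")]),
    ("barked", "VBD"),
    ("at", "IN"),
    nltk.Tree("NP", [("the", "DT"), ("cat", "NN")]),
])

# A weak rule that only finds a determiner directly followed by a noun.
cp = nltk.RegexpParser("NP: {<DT><NN>}")
guess = cp.parse(gold.leaves())

score = ChunkScore()
score.score(gold, guess)

precision = score.precision()  # share of guessed chunks that are correct
recall = score.recall()        # share of gold chunks that were found
f_measure = score.f_measure()  # harmonic mean of the two
print(precision, recall, f_measure)
```

The rule finds "the cat" but misses "the little yellow dog", so every chunk it proposes is right while only half of the gold chunks are recovered.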

Named entity recognition and information extraction

Named entity: an exact noun phrase that refers to a specific type of individual, such as a date, person, organization, etc.

Training a classifier for this yourself would be a real headache (ˉ▽ ̄~)~~. NLTK provides a trained classifier: `nltk.ne_chunk(tagged_sent[, binary=False])`. If `binary` is set to `True`, named entities are only tagged as NE; otherwise the labels are more specific, such as PERSON, ORGANIZATION and GPE.

```python
import nltk

sent = nltk.corpus.treebank.tagged_sents()[22]
print(nltk.ne_chunk(sent, binary=True))
```
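The tree returned by `nltk.ne_chunk` can be walked like any other chunk tree. The sketch below uses a hand-built tree that only mimics the shape of such output (so it runs without the trained model), and `extract_entities` is a helper written for this example.

```python
from nltk import Tree

# Hand-built stand-in for an NE-chunked sentence; the sentence is made up.
ne_tree = Tree("S", [
    Tree("PERSON", [("Alick", "NNP")]),
    ("works", "VBZ"),
    ("for", "IN"),
    Tree("ORGANIZATION", [("Carlyle", "NNP"), ("Group", "NNP")]),
    ("in", "IN"),
    Tree("GPE", [("Washington", "NNP")]),
])


def extract_entities(tree):
    """Return (label, text) for every chunk directly under the root."""
    entities = []
    for child in tree:
        if isinstance(child, Tree):  # plain (word, tag) tuples are not entities
            text = " ".join(word for word, tag in child.leaves())
            entities.append((child.label(), text))
    return entities


print(extract_entities(ne_tree))
```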

Relationship extraction

Once the named entities have been determined, relation extraction can be used to pull out the relations that hold between them.

```python
import re

import nltk

# "in" as a whole word, unless it is followed by a word ending in "-ing"
IN = re.compile(r'.*\bin\b(?!\b.+ing)')

for doc in nltk.corpus.ieer.parsed_docs('NYT_19980315'):
    for rel in nltk.sem.extract_rels('ORG', 'LOC', doc, corpus='ieer', pattern=IN):
        print(nltk.sem.rtuple(rel))
```
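To get a feel for what the `IN` pattern accepts, it can be tried on a few strings with the standard `re` module alone. The strings below are made up, and the sketch assumes the pattern is applied with `match` to the text that sits between the two entities.

```python
import re

IN = re.compile(r'.*\bin\b(?!\b.+ing)')

# Made-up examples of text found between an organization and a location.
fillers = [
    "is headquartered in",
    ", based in the city of",
    "success in supervising the transition of",
]

matches = {filler: bool(IN.match(filler)) for filler in fillers}
for filler, matched in matches.items():
    print(repr(filler), matched)
```

The negative lookahead rejects the last string because "in" is followed by a word ending in "-ing", which usually signals an activity rather than a location. It also rejects any text where "ing" appears later for other reasons, so the pattern is a rough filter, not a precise one.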

The above is the detailed content of How to build a system?. For more information, please follow other related articles on the PHP Chinese website!

如何在Microsoft Word中删除作者和上次修改的信息Apr 15, 2023 am 11:43 AM

如何在Microsoft Word中删除作者和上次修改的信息Apr 15, 2023 am 11:43 AMMicrosoft Word文档在保存时包含一些元数据。这些详细信息用于在文档上识别,例如创建时间、作者是谁、修改日期等。它还具有其他信息,例如字符数,字数,段落数等等。如果您可能想要删除作者或上次修改的信息或任何其他信息,以便其他人不知道这些值,那么有一种方法。在本文中,让我们看看如何删除文档的作者和上次修改的信息。删除微软Word文档中的作者和最后修改的信息步骤 1 –转到

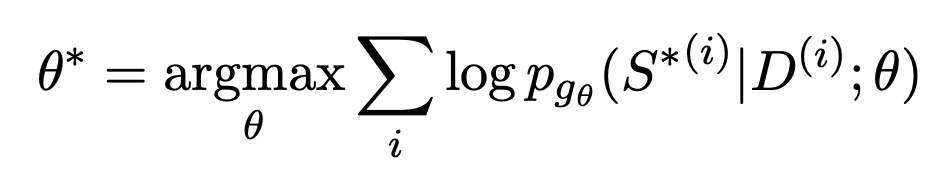

利用大模型打造文本摘要训练新范式Jun 10, 2023 am 09:43 AM

利用大模型打造文本摘要训练新范式Jun 10, 2023 am 09:43 AM1、文本任务这篇文章主要讨论的是生成式文本摘要的方法,如何利用对比学习和大模型实现最新的生成式文本摘要训练范式。主要涉及两篇文章,一篇是BRIO:BringingOrdertoAbstractiveSummarization(2022),利用对比学习在生成模型中引入ranking任务;另一篇是OnLearningtoSummarizewithLargeLanguageModelsasReferences(2023),在BRIO基础上进一步引入大模型生成高质量训练数据。2、生成式文本摘要训练方法和

获取 Windows 11 中 GPU 的方法及显卡详细信息检查Nov 07, 2023 am 11:21 AM

获取 Windows 11 中 GPU 的方法及显卡详细信息检查Nov 07, 2023 am 11:21 AM使用系统信息单击“开始”,然后输入“系统信息”。只需单击程序,如下图所示。在这里,您可以找到大多数系统信息,而显卡信息也是您可以找到的一件事。在“系统信息”程序中,展开“组件”,然后单击“显示”。让程序收集所有必要的信息,一旦准备就绪,您就可以在系统上找到特定于显卡的名称和其他信息。即使您有多个显卡,您也可以从这里找到与连接到计算机的专用和集成显卡相关的大多数内容。使用设备管理器Windows11就像大多数其他版本的Windows一样,您也可以从设备管理器中找到计算机上的显卡。单击“开始”,然后

如何与NameDrop共享联系人详细信息:iOS 17的操作指南Sep 16, 2023 pm 06:09 PM

如何与NameDrop共享联系人详细信息:iOS 17的操作指南Sep 16, 2023 pm 06:09 PM在iOS17中,有一个新的AirDrop功能,让你通过触摸两部iPhone来与某人交换联系信息。它被称为NameDrop,这是它的工作原理。NameDrop允许您简单地将iPhone放在他们的iPhone附近以交换联系方式,而不是输入新人的号码来给他们打电话或发短信,以便他们拥有您的号码。将两个设备放在一起将自动弹出联系人共享界面。点击弹出窗口会显示一个人的联系信息及其联系人海报(您可以自定义和编辑自己的照片,也是iOS17的新功能)。该屏幕还包括“仅接收”或共享您自己的联系信息作为响应的选项。

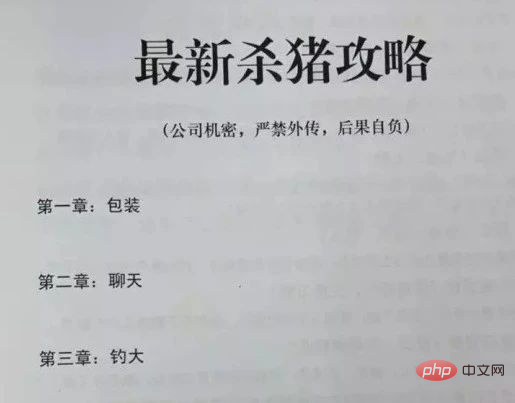

网聊一个月,杀猪盘骗子竟被AI整破防!200万网友大呼震撼Apr 12, 2023 am 09:40 AM

网聊一个月,杀猪盘骗子竟被AI整破防!200万网友大呼震撼Apr 12, 2023 am 09:40 AM说起「杀猪盘」,大家肯定都恨得牙痒痒。在这类交友婚恋类网络诈骗中,骗子会提前物色好容易上钩的受害者,而她们,往往是单纯善良、对爱情怀有美好幻想的高知乖乖女。而为了能和这些骗子大战500回合,B站大名鼎鼎的科技圈up主「图灵的猫」训练了一个聊起天来频出爆梗,甚至比真人还6的AI。结果,随着AI的一通操作,骗子竟然被这个以假乱真的小姐姐搞得方寸大乱,直接给「她」转了520。更好笑的是,发现根本无机可乘的骗子,最后不仅自己破了防,还被AI附送一段「名句」:视频一出,立刻爆火,在B站冲浪的小伙伴们纷纷被

win7系统无法打开txt文本怎么办Jul 06, 2023 pm 04:45 PM

win7系统无法打开txt文本怎么办Jul 06, 2023 pm 04:45 PMwin7系统无法打开txt文本怎么办?我们电脑中需要进行文本文件的编辑时,最简单的方式就是去使用文本工具。但是有的用户却发现自己的电脑无法打开txt文本文件了,那么这样的问题要怎么去解决呢?一起来看看详细的解决win7系统无法打开txt文本教程吧。解决win7系统无法打开txt文本教程 1、在桌面上右键点击桌面的任意一个txt文件,如果没有的可以右键点击新建一个文本文档,然后选择属性,如下图所示: 2、在打开的txt属性窗口中,常规选项下找到更改按钮,如下图所示: 3、在弹出的打开方式设置

ChatGPT是什么?G、P、T代表什么?May 08, 2023 pm 12:01 PM

ChatGPT是什么?G、P、T代表什么?May 08, 2023 pm 12:01 PM比尔盖茨:ChatGPT是1980年以来最具革命性的科技进步。身处这个AI变革的时代,唯有躬身入局,脚步跟上。这是一篇我的学习笔记,希望对你了解ChatGPT有帮助。1、ChatGPT里的GPT,分别代表什么?GPT,GenerativePre-trainedTransformer,生成式预训练变换模型。什么意思?Generative,生成式,是指它能自发的生成内容。Pre-trained,预训练,是不需要你拿到它再训练,它直接给你做好了一个通用的语言模型。Transformer,变换模型,谷歌

利用多光照信息的单视角NeRF算法S^3-NeRF,可恢复场景几何与材质信息Apr 13, 2023 am 10:58 AM

利用多光照信息的单视角NeRF算法S^3-NeRF,可恢复场景几何与材质信息Apr 13, 2023 am 10:58 AM目前图像 3D 重建工作通常采用恒定自然光照条件下从多个视点(multi-view)捕获目标场景的多视图立体重建方法(Multi-view Stereo)。然而,这些方法通常假设朗伯表面,并且难以恢复高频细节。另一种场景重建方法是利用固定视点但不同点光源下捕获的图像。例如光度立体 (Photometric Stereo) 方法就采用这种设置并利用其 shading 信息来重建非朗伯物体的表面细节。然而,现有的单视图方法通常采用法线贴图(normal map)或深度图(depth map)来表征可

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

Notepad++7.3.1

Easy-to-use and free code editor