Production model deployment: Docker image, Bazel workspace, model export, server, client

This tutorial deploys a model to a production environment by building a simple web app: users upload an image, the server runs the Inception model on it, and the image is classified automatically.

Build a TensorFlow Serving development environment. Install Docker, then use the configuration file to create a Docker image locally: docker build --pull -t $USER/tensorflow-serving-devel . Start a container from the image with docker run -v $HOME:/mnt/home -p 9999:9999 -it $USER/tensorflow-serving-devel. This mounts your home directory at /mnt/home inside the container and leaves you working in a terminal in the container. Edit the code on the host with an IDE or editor and use the container only to run the build tool; the host reaches the server built in the container through port 9999. The exit command leaves the container terminal and stops the container.
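When the setup is scripted, the two Docker commands above can be assembled programmatically. The following is a minimal sketch that only builds the argument lists and does not invoke Docker. The helper names build_image_cmd and run_container_cmd are hypothetical, the flags are the ones quoted in the text, and the context argument stands for whatever follows the image tag in the build command (the text shows a single dot).

```python
import os


def build_image_cmd(user, context="."):
    """Argument list for building the development image (flags from the text)."""
    tag = "%s/tensorflow-serving-devel" % user
    return ["docker", "build", "--pull", "-t", tag, context]


def run_container_cmd(user, home, port=9999):
    """Argument list for starting an interactive container.

    Mounts the host home directory at /mnt/home and publishes `port`
    on the same port number of the host.
    """
    tag = "%s/tensorflow-serving-devel" % user
    return [
        "docker", "run",
        "-v", "%s:/mnt/home" % home,
        "-p", "%d:%d" % (port, port),
        "-it", tag,
    ]


if __name__ == "__main__":
    user = os.environ.get("USER", "user")
    home = os.path.expanduser("~")
    print(" ".join(build_image_cmd(user)))
    print(" ".join(run_container_cmd(user, home)))
```

Either list can be handed to subprocess.check_call to run the command for real.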

TensorFlow Serving is written in C++ and built with Google's Bazel build tool, which is run inside the container. Bazel manages third-party dependencies at the source-code level and downloads and builds them automatically. The WORKSPACE file in the root directory of the project repository defines these dependencies. The TensorFlow models repository contains the Inception model code.

Include TensorFlow Serving in the project as a Git submodule: mkdir ~/serving_example, cd ~/serving_example, git init, git submodule add (with the TensorFlow Serving repository URL and tf_serving as the target directory), git submodule update --init --recursive.

In the WORKSPACE file, local_repository rules declare third-party dependencies that are stored as local files. The project also imports the tf_workspace rule, which initializes the TensorFlow dependencies.

workspace(name = "serving")

local_repository(
    name = "tf_serving",
    path = __workspace_dir__ + "/tf_serving",
)

local_repository(
    name = "org_tensorflow",
    path = __workspace_dir__ + "/tf_serving/tensorflow",
)

load('//tf_serving/tensorflow/tensorflow:workspace.bzl', 'tf_workspace')

tf_workspace("tf_serving/tensorflow/", "@org_tensorflow")

bind(
    name = "libssl",
    actual = "@boringssl_git//:ssl",
)

bind(
    name = "zlib",
    actual = "@zlib_archive//:zlib",
)

local_repository(
    name = "inception_model",
    path = __workspace_dir__ + "/tf_serving/tf_models/inception",
)

Export the trained model, that is, its data flow graph and its variables, for use in production. The exported graph must receive its input from placeholders and compute the output in a single inference step. In production the Inception model (like image recognition models in general) takes a JPEG-encoded image string as input, which differs from training, where input is read from TFRecord files. Define an input placeholder and call a function that converts the external input it represents into the format the original inference model expects: decode the image string into a pixel tensor with each component in [0, 1], resize the image to the width and height the model expects, and map the pixel values into the interval [-1, 1] that the model requires. Then call the original model's inference method on the transformed input.
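The last preprocessing step is plain arithmetic: subtract 0.5 and multiply by 2. The sketch below shows it on ordinary Python floats rather than tensors; the function names are hypothetical and chosen only for illustration.

```python
def to_model_range(pixel):
    """Map one pixel component from [0, 1] to [-1, 1]."""
    return (pixel - 0.5) * 2.0


def scale_row(row):
    """Apply the mapping to every component in a row of pixel values."""
    return [to_model_range(p) for p in row]


if __name__ == "__main__":
    print(scale_row([0.0, 0.25, 0.5, 1.0]))
```

The endpoints 0 and 1 map to -1 and 1, and the midpoint 0.5 maps to 0, so the result is centered around zero as the model expects.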

The inference method needs a value for each of its parameters; these are restored from a checkpoint. During training, checkpoint files containing the learned parameters are saved periodically, and the most recently saved checkpoint file contains the latest model parameters. Download the pretrained checkpoint file inception-v3-2016-03-01.tar.gz. In the Docker container: cd /tmp, curl -O (followed by the download URL), tar -xzf inception-v3-2016-03-01.tar.gz.

The tensorflow_serving.session_bundle.exporter.Exporter class exports the model. Create an instance by passing in the saver instance, and create the model signature with exporter.classification_signature, specifying input_tensor and the output tensors. classes_tensor holds the list of output class names, and scores_tensor holds the score (or probability) the model assigns to each class. For models with many classes, use tf.nn.top_k to return only a selection: the K classes with the highest scores, sorted in descending order. Pass the signature to the exporter.Exporter.init method. The export method exports the model; it receives the output path, the model version number, and the session object. Because the Exporter class depends on automatically generated code, run the exporter with bazel inside the Docker container. Save the code as export.py in the bazel workspace.

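import time
import sys

import tensorflow as tf
from tensorflow_serving.session_bundle import exporter
from inception import inception_model

NUM_CLASSES_TO_RETURN = 10

def convert_external_inputs(external_x):
    # Decode the JPEG string into a float image with components in [0, 1].
    image = tf.image.convert_image_dtype(tf.image.decode_jpeg(external_x, channels=3), tf.float32)
    # Add a batch dimension and resize to the 299x299 input the model expects.
    images = tf.image.resize_bilinear(tf.expand_dims(image, 0), [299, 299])
    # Map pixel values from [0, 1] to [-1, 1].
    images = tf.mul(tf.sub(images, 0.5), 2)
    return images

def inference(images):
    # Call the original model's inference method (1001 output classes).
    logits, _ = inception_model.inference(images, 1001)
    return logits

# The placeholder receives the JPEG-encoded image string from outside.
external_x = tf.placeholder(tf.string)
x = convert_external_inputs(external_x)
y = inference(x)

saver = tf.train.Saver()

with tf.Session() as sess:
    # Restore the parameter values from the latest checkpoint.
    ckpt = tf.train.get_checkpoint_state(sys.argv[1])
    if ckpt and ckpt.model_checkpoint_path:
        saver.restore(sess, sys.argv[1] + "/" + ckpt.model_checkpoint_path)
    else:
        print("Checkpoint file not found")
        raise SystemExit

    # Keep only the K best classes, sorted by descending score.
    scores, class_ids = tf.nn.top_k(y, NUM_CLASSES_TO_RETURN)

    # Use the class id itself as the class name.
    classes = tf.contrib.lookup.index_to_string(tf.to_int64(class_ids),
        mapping=tf.constant([str(i) for i in range(1001)]))

    model_exporter = exporter.Exporter(saver)
    signature = exporter.classification_signature(
        input_tensor=external_x, classes_tensor=classes, scores_tensor=scores)
    model_exporter.init(default_graph_signature=signature, init_op=tf.initialize_all_tables())
    # The current timestamp serves as the model version number.
    model_exporter.export(sys.argv[1] + "/export", tf.constant(time.time()), sess)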
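The top-K selection in the export script can be illustrated without TensorFlow. The sketch below is a plain-Python stand-in, not the tf.nn.top_k implementation: it returns the K highest scores in descending order together with their class ids, and then maps each id to its string form the way the script's mapping does. The function names are hypothetical.

```python
import heapq


def top_k(scores, k):
    """Return (top_scores, class_ids) for the k largest scores, highest first."""
    best = heapq.nlargest(k, enumerate(scores), key=lambda pair: pair[1])
    class_ids = [index for index, _ in best]
    top_scores = [score for _, score in best]
    return top_scores, class_ids


def ids_to_names(class_ids):
    """Mirror the export script's mapping: class id i becomes the string str(i)."""
    return [str(i) for i in class_ids]


if __name__ == "__main__":
    scores, ids = top_k([0.1, 0.7, 0.05, 0.15], 2)
    print(scores, ids_to_names(ids))
```

Returning scores and ids as two parallel lists matches the two output tensors that the classification signature exposes.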

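Next, write a BUILD file with the build rules. Run the exporter from the container command line: cd /mnt/home/serving_example, bazel run :export /tmp/inception-v3. Based on the checkpoint files found in /tmp/inception-v3, it creates the export in /tmp/inception-v3/export/{currenttimestamp}/. The first run has to compile TensorFlow. The load statement imports the cc_proto_library rule definition from the external protobuf library; it is used to define a build rule for the proto file. Run the inference server in the container with bazel run :server 9999 /tmp/inception-v3/export/{timestamp}.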

py_binary(
    name = "export",
    srcs = [
        "export.py",
    ],
    deps = [
        "@tf_serving//tensorflow_serving/session_bundle:exporter",
        "@org_tensorflow//tensorflow:tensorflow_py",
        "@inception_model//inception",
    ],
)

load("@protobuf//:protobuf.bzl", "cc_proto_library")

cc_proto_library(
    name = "classification_service_proto",
    srcs = ["classification_service.proto"],
    cc_libs = ["@protobuf//:protobuf"],
    protoc = "@protobuf//:protoc",
    default_runtime = "@protobuf//:protobuf",
    use_grpc_plugin = 1,
)

cc_binary(
    name = "server",
    srcs = [
        "server.cc",
    ],
    deps = [
        ":classification_service_proto",
        "@tf_serving//tensorflow_serving/servables/tensorflow:session_bundle_factory",
        "@grpc//:grpc++",
    ],
)
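Because each export lands in its own timestamped directory, the path passed to the server changes with every export. The sketch below shows one way to find the newest export, assuming that every version is a subdirectory of the export directory whose name consists only of digits; that layout and the helper name latest_export_dir are assumptions for illustration, not something the export tool guarantees here.

```python
import os


def latest_export_dir(export_base):
    """Return the path of the highest-numbered subdirectory, or None if there is none."""
    versions = [
        name for name in os.listdir(export_base)
        if name.isdigit() and os.path.isdir(os.path.join(export_base, name))
    ]
    if not versions:
        return None
    # Compare numerically so that zero-padded and unpadded names both work.
    newest = max(versions, key=int)
    return os.path.join(export_base, newest)


if __name__ == "__main__":
    base = "/tmp/inception-v3/export"
    if os.path.isdir(base):
        print(latest_export_dir(base))
```

The returned path could then be used as the second argument of the server command.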

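Define the server interface. TensorFlow Serving uses the gRPC protocol, a binary protocol built on HTTP/2, which supports creating servers and automatically generating client stubs in a variety of languages. The service contract is defined in protocol buffers, which serve as gRPC's IDL (interface definition language) and binary encoding. The service receives a JPEG-encoded image string to classify and returns a list of inferred classes ranked by score. It is defined in the classification_service.proto file. The same interface can be used for services that receive an image, an audio clip, or a piece of text. The proto compiler turns the proto file into class definitions for the client and the server. Run bazel build :classification_service_proto to build them, and inspect the result in bazel-genfiles/classification_service.grpc.pb.h. To provide the inference logic, the ClassificationService::Service interface must be implemented. See bazel-genfiles/classification_service.pb.h for the request and response message definitions; each type in the proto definition becomes a C++ interface.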

syntax = "proto3";

message ClassificationRequest {
    // JPEG-encoded string of the image to classify.
    bytes input = 1;
}

message ClassificationResponse {
    repeated ClassificationClass classes = 1;
}

message ClassificationClass {
    string name = 1;
    float score = 2;
}

service ClassificationService {
    rpc classify(ClassificationRequest) returns (ClassificationResponse);
}
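The service contract described above (a JPEG byte string in, a list of named and scored classes out) can be mirrored with plain Python dataclasses to make its shape concrete. This is only an illustrative model, not the code that the proto compiler generates, and build_response is a hypothetical helper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClassificationClass:
    name: str     # class name
    score: float  # score or probability assigned by the model


@dataclass
class ClassificationRequest:
    input: bytes  # JPEG-encoded image


@dataclass
class ClassificationResponse:
    classes: List[ClassificationClass] = field(default_factory=list)


def build_response(names, scores):
    """Pair each class name with its score, preserving the given order."""
    response = ClassificationResponse()
    for name, score in zip(names, scores):
        response.classes.append(ClassificationClass(name=name, score=score))
    return response


if __name__ == "__main__":
    print(build_response(["3", "1"], [0.7, 0.2]))
```

Keeping the request to a single byte string is what lets the same contract serve other input types, as the text notes.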

Implement the inference server. It loads the exported model, calls its inference method, and implements ClassificationService::Service. The exported model is loaded into a SessionBundle object, which contains a TF session with the fully loaded data flow graph together with the metadata of the classification signature defined by the export tool. The SessionBundleFactory class creates the SessionBundle object; it is configured to load the exported model from the path given in pathToExportFiles and returns a unique pointer to the created SessionBundle instance. Define ClassificationServiceImpl, which receives the SessionBundle instance as a parameter.

The classify method performs four steps. First, load the classification signature: the GetClassificationSignature function loads the ClassificationSignature from the model export metadata. The signature maps the logical name of the input tensor to the real name of the tensor that receives the image, and maps the logical names of the output tensors to the graph tensors that hold the inference results. Second, transform the protobuf input into the inference input tensor by copying the JPEG-encoded image string from the request parameter into the tensor. Third, run inference: obtain the TF session object from the SessionBundle and run it once, passing in the input tensor and the names of the output tensors. Fourth, transform the inference output tensors into the protobuf output by copying the results into the ClassificationResponse message, in the shape specified for the response output parameter. Finally, set up the gRPC server: create the SessionBundle object and use it to create the ClassificationServiceImpl instance, as in the sample code.

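#include <iostream>
#include <memory>
#include <string>
#include <vector>

#include <grpc++/grpc++.h>

#include "classification_service.grpc.pb.h"
#include "tensorflow_serving/servables/tensorflow/session_bundle_factory.h"

using namespace std;
using namespace tensorflow::serving;
using namespace grpc;

// Load the exported model at pathToExportFiles into a SessionBundle.
unique_ptr<SessionBundle> createSessionBundle(const string& pathToExportFiles) {
    SessionBundleConfig session_bundle_config = SessionBundleConfig();
    unique_ptr<SessionBundleFactory> bundle_factory;
    SessionBundleFactory::Create(session_bundle_config, &bundle_factory);

    unique_ptr<SessionBundle> sessionBundle;
    bundle_factory->CreateSessionBundle(pathToExportFiles, &sessionBundle);

    return sessionBundle;
}

class ClassificationServiceImpl final : public ClassificationService::Service {

  private:
    unique_ptr<SessionBundle> sessionBundle;

  public:
    ClassificationServiceImpl(unique_ptr<SessionBundle> sessionBundle) :
        sessionBundle(move(sessionBundle)) {};

    Status classify(ServerContext* context, const ClassificationRequest* request,
                    ClassificationResponse* response) override {

        // Load the classification signature.
        ClassificationSignature signature;
        const tensorflow::Status signatureStatus =
            GetClassificationSignature(sessionBundle->meta_graph_def, &signature);

        if (!signatureStatus.ok()) {
            return Status(StatusCode::INTERNAL, signatureStatus.error_message());
        }

        // Transform the protobuf input into the inference input tensor.
        tensorflow::Tensor input(tensorflow::DT_STRING, tensorflow::TensorShape());
        input.scalar<string>()() = request->input();

        vector<tensorflow::Tensor> outputs;

        // Run inference.
        const tensorflow::Status inferenceStatus = sessionBundle->session->Run(
            {{signature.input().tensor_name(), input}},
            {signature.classes().tensor_name(), signature.scores().tensor_name()},
            {},
            &outputs);

        if (!inferenceStatus.ok()) {
            return Status(StatusCode::INTERNAL, inferenceStatus.error_message());
        }

        // Transform the inference output tensors into the protobuf output.
        for (int i = 0; i < outputs[0].flat<string>().size(); ++i) {
            ClassificationClass *classificationClass = response->add_classes();
            classificationClass->set_name(outputs[0].flat<string>()(i));
            classificationClass->set_score(outputs[1].flat<float>()(i));
        }

        return Status::OK;
    }
};

int main(int argc, char** argv) {

    if (argc < 3) {
        cerr << "Usage: server <port> /path/to/export/files" << endl;
        return 1;
    }

    const string serverAddress(string("0.0.0.0:") + argv[1]);
    const string pathToExportFiles(argv[2]);

    unique_ptr<SessionBundle> sessionBundle = createSessionBundle(pathToExportFiles);

    ClassificationServiceImpl classificationServiceImpl(move(sessionBundle));

    ServerBuilder builder;
    builder.AddListeningPort(serverAddress, grpc::InsecureServerCredentials());
    builder.RegisterService(&classificationServiceImpl);

    unique_ptr<Server> server = builder.BuildAndStart();
    cout << "Server listening on " << serverAddress << endl;

    server->Wait();

    return 0;
}

Access the inference service from the web app through a server-side component. Run the Python protocol buffer compiler to generate the Python client for ClassificationService: pip install grpcio cython grpcio-tools, python -m grpc.tools.protoc -I. --python_out=. --grpc_python_out=. classification_service.proto. This generates classification_service_pb2.py, which contains the stub for calling the service. When the web server receives a POST request, it parses the submitted form and creates a ClassificationRequest object. It then sets up a channel to the classification server, submits the request, renders the classification response as HTML, and sends it back to the user. Outside the container, start the web server with python client.py, then browse to http://localhost:8080 to access the UI.

# Python 2 code (BaseHTTPServer, print statement, grpc.beta API).
from BaseHTTPServer import HTTPServer, BaseHTTPRequestHandler

import cgi
import classification_service_pb2
from grpc.beta import implementations

class ClientApp(BaseHTTPRequestHandler):

    def do_GET(self):
        self.respond_form()

    def respond_form(self, response=""):
        # Upload form; the classification result is inserted at %s.
        form = """
        <html><body>
        <h1>Image classification service</h1>
        <form enctype="multipart/form-data" method="post">
        <div>Image: <input type="file" name="file" accept="image/jpeg"></div>
        <div><input type="submit" value="Upload"></div>
        </form>
        %s
        </body></html>
        """

        response = form % response

        self.send_response(200)
        self.send_header("Content-type", "text/html")
        self.send_header("Content-length", len(response))
        self.end_headers()
        self.wfile.write(response)

    def do_POST(self):
        # Parse the submitted multipart form.
        form = cgi.FieldStorage(
            fp=self.rfile,
            headers=self.headers,
            environ={
                'REQUEST_METHOD': 'POST',
                'CONTENT_TYPE': self.headers['Content-Type'],
            })

        request = classification_service_pb2.ClassificationRequest()
        request.input = form['file'].file.read()

        # Send the request to the classification server over gRPC.
        channel = implementations.insecure_channel("127.0.0.1", 9999)
        stub = classification_service_pb2.beta_create_ClassificationService_stub(channel)
        response = stub.classify(request, 10)  # 10 secs timeout

        self.respond_form("<div>Response: %s</div>" % response)

if __name__ == '__main__':
    host_port = ('0.0.0.0', 8080)
    print "Serving in %s:%s" % host_port
    HTTPServer(host_port, ClientApp).serve_forever()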
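The form-rendering step of the client can be sketched for Python 3, where the response body must be bytes and the Content-Length header has to count encoded bytes rather than characters. The sketch below leaves out the HTTP server and the gRPC call. The HTML markup and the helper name render_page are assumptions for illustration; the only requirement taken from the client code is a multipart POST form with a file field named file.

```python
import html

# Assumed page markup; the classification result is inserted at %s.
PAGE = """<html><body>
<h1>Image classification service</h1>
<form enctype="multipart/form-data" method="post">
<div>Image: <input type="file" name="file" accept="image/jpeg"></div>
<div><input type="submit" value="Upload"></div>
</form>
%s
</body></html>
"""


def render_page(result_text=""):
    """Return (headers, body_bytes) for the form page.

    `result_text` is escaped before it is embedded, so text from the
    classification response cannot inject markup into the page.
    """
    fragment = ""
    if result_text:
        fragment = "<div>Response: %s</div>" % html.escape(result_text)
    body = (PAGE % fragment).encode("utf-8")
    headers = {
        "Content-Type": "text/html; charset=utf-8",
        "Content-Length": str(len(body)),
    }
    return headers, body


if __name__ == "__main__":
    headers, body = render_page("name: 285, score: 0.9")
    print(headers["Content-Length"])
```

A request handler would send the headers, call end_headers(), and write the body bytes to wfile.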

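Prepare for production by packaging the classification server. Copy the compiled server files to a permanent location in the container and clean up all temporary build files. In the container: mkdir /opt/classification_server, cd /mnt/home/serving_example, cp -R bazel-bin/. /opt/classification_server, bazel clean. Outside the container, commit the container's state to a new Docker image, which creates a snapshot recording the changes to its virtual file system: docker ps, docker commit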

Reference:

TensorFlow for Machine Intelligence (《面向机器智能的TensorFlow实践》)

欢迎付费咨询(150元每小时),我的微信:qingxingfengzi

The above is the detailed content of Product environment model deployment, Docker image, Bazel workspace, export model, server, client. For more information, please follow other related articles on the PHP Chinese website!

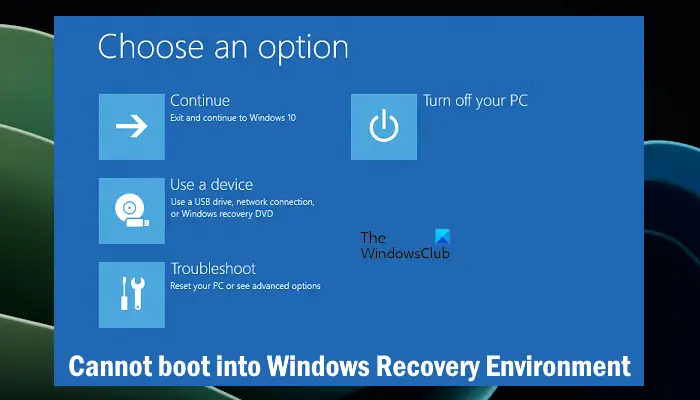

无法引导到Windows恢复环境Feb 19, 2024 pm 11:12 PM

无法引导到Windows恢复环境Feb 19, 2024 pm 11:12 PMWindows恢复环境(WinRE)是用于修复Windows操作系统错误的环境。进入WinRE后,您可以执行系统还原、出厂重置、卸载更新等操作。如果无法引导到WinRE,本文将指导您使用修复程序解决此问题。无法引导到Windows恢复环境如果无法引导至Windows恢复环境,请使用下面提供的修复程序:检查Windows恢复环境的状态使用其他方法进入Windows恢复环境您是否意外删除了Windows恢复分区?执行Windows的就地升级或全新安装下面,我们已经详细解释了所有这些修复。1]检查Wi

Python和Anaconda之间有什么区别?Sep 06, 2023 pm 08:37 PM

Python和Anaconda之间有什么区别?Sep 06, 2023 pm 08:37 PM在本文中,我们将了解Python和Anaconda之间的差异。Python是什么?Python是一种开源语言,非常重视使代码易于阅读并通过缩进行和提供空白来理解。Python的灵活性和易于使用使其非常适用于各种应用,包括但不限于对于科学计算、人工智能和数据科学,以及创造和发展的在线应用程序。当Python经过测试时,它会立即被翻译转化为机器语言,因为它是一种解释性语言。有些语言,比如C++,需要编译才能被理解。精通Python是一个重要的优势,因为它非常易于理解、开发,执行并读取。这使得Pyth

产品参数是什么意思Jul 05, 2023 am 11:13 AM

产品参数是什么意思Jul 05, 2023 am 11:13 AM产品参数是指产品属性的意思。比如服装参数有品牌、材质、型号、大小、风格、面料、适应人群和颜色等;食品参数有品牌、重量、材质、卫生许可证号、适应人群和颜色等;家电参数有品牌、尺寸、颜色、产地、适应电压、信号、接口和功率等。

小米 14 Ultra怎么设置拍照镜像?Mar 18, 2024 am 11:10 AM

小米 14 Ultra怎么设置拍照镜像?Mar 18, 2024 am 11:10 AM小米14Ultra发布之后,很多喜欢拍照的小伙伴都选择了下单,小米14Ultra提供了更多的选择,比如说是拍照镜像功能,可以选择开启“拍摄镜像旋转”功能。这样,当你在拍摄照片时,就可以以自己习惯的样子来进行自拍啦,但是小米14Ultra应该要怎么设置拍照镜像呢?小米14Ultra怎么设置拍照镜像?1、打开小米14Ultra的相机2、在屏幕上找到“设置”。3、在这个页面中,你将看到一个标有“拍摄设置”的选项。4、点击这个选项,然后在下拉菜单中找到“拍照镜像”选项。5、只需要将它打开即可。小米14U

php集成环境包有哪些Jul 24, 2023 am 09:36 AM

php集成环境包有哪些Jul 24, 2023 am 09:36 AMphp集成环境包有:1、PhpStorm,功能强大的PHP集成环境;2、Eclipse,开放源代码的集成开发环境;3、Visual Studio Code,轻量级的开源代码编辑器;4、Sublime Text,受欢迎的文本编辑器,广泛用于各种编程语言;5、NetBeans,由Apache软件基金会开发的集成开发环境;6、Zend Studio,为PHP开发者设计的集成开发环境。

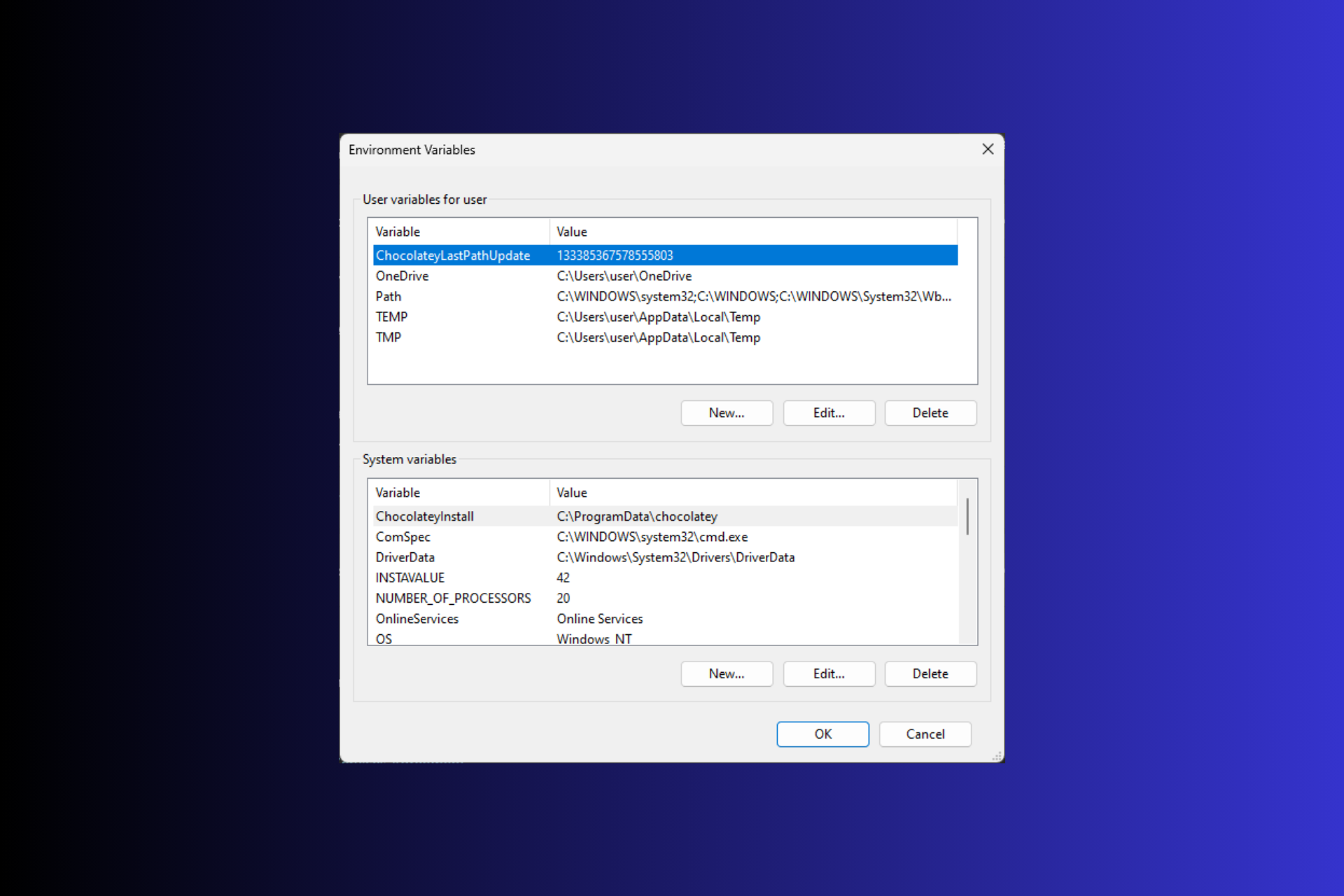

在 Windows 3 上设置环境变量的 11 种方法Sep 15, 2023 pm 12:21 PM

在 Windows 3 上设置环境变量的 11 种方法Sep 15, 2023 pm 12:21 PM在Windows11上设置环境变量可以帮助您自定义系统、运行脚本和配置应用程序。在本指南中,我们将讨论三种方法以及分步说明,以便您可以根据自己的喜好配置系统。有三种类型的环境变量系统环境变量–全局变量处于最低优先级,可由Windows上的所有用户和应用访问,通常用于定义系统范围的设置。用户环境变量–优先级越高,这些变量仅适用于在该帐户下运行的当前用户和进程,并由在该帐户下运行的用户或应用程序设置。进程环境变量–具有最高优先级,它们是临时的,适用于当前进程及其子进程,为程序提供

Laravel环境配置文件.env的常见问题及解决方法Mar 10, 2024 pm 12:51 PM

Laravel环境配置文件.env的常见问题及解决方法Mar 10, 2024 pm 12:51 PMLaravel环境配置文件.env的常见问题及解决方法在使用Laravel框架开发项目时,环境配置文件.env是非常重要的,它包含了项目的关键配置信息,如数据库连接信息、应用密钥等。然而,有时候在配置.env文件时会出现一些常见问题,本文将针对这些问题进行介绍并提供解决方法,同时附上具体的代码示例供参考。问题一:无法读取.env文件当我们配置好了.env文件

Java工厂模式解析:评估三种实现方式的优点、缺点和适用范围Dec 28, 2023 pm 06:32 PM

Java工厂模式解析:评估三种实现方式的优点、缺点和适用范围Dec 28, 2023 pm 06:32 PM探究Java工厂模式:详解三种实现方式的优缺点及适用场景引言:在软件开发过程中,经常会遇到对象的创建和管理问题。为了解决这个问题,设计模式中的工厂模式应运而生。工厂模式是一种创建型设计模式,通过将对象的创建过程封装在工厂类中,来实现对象的创建与使用的分离。Java中的工厂模式有三种常见的实现方式:简单工厂模式、工厂方法模式和抽象工厂模式。本文将详解这三种实现

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SublimeText3 Mac version

God-level code editing software (SublimeText3)

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

Zend Studio 13.0.1

Powerful PHP integrated development environment