Home >Backend Development >Python Tutorial >How should Python crawler data be processed?

How should Python crawler data be processed?

- PHP中文网Original

- 2017-06-20 16:27:572558browse

1. First understand the following functions

Set the variable length() function char_length() replace() function max() function

1.1. Set the variable set @variable name=value

set @address='中国-山东省-聊城市-莘县';select @address

1.2, length() function char_length() function difference

select length('a') ,char_length('a') ,length('中') ,char_length('中')

1.3, replace() function and length() function combination

set @address='中国-山东省-聊城市-莘县';select @address ,replace(@address,'-','') as address_1 ,length(@address) as len_add1 ,length(replace(@address,'-','')) as len_add2 ,length(@address)-length(replace(@address,'-','')) as _count

etlThere are obvious separators when cleaning fields How to determine how many split fields to add to a new data table

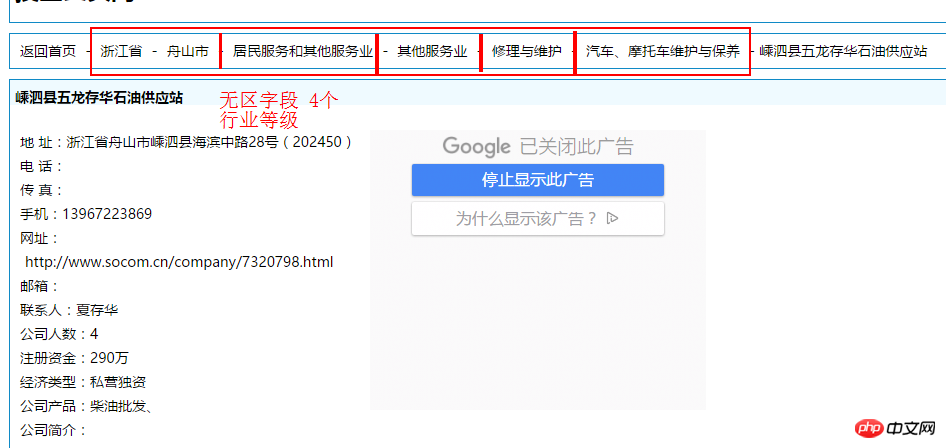

Calculate the maximum number of - characters in com_industry to determine how many fields to add. The maximum value + 1 is the number of fields that can be split into. This table is 3 Therefore, 4 industry fields can be split, that is, 4 industry levels

select max(length(com_industry)-length(replace(com_industry,'-',''))) as _max_count from etl1_socom_data

1.4. Set the variable substring_index() string interception function usage

set @address='中国-山东省-聊城市-莘县'; select substring_index(@address,'-',1) as china, substring_index(substring_index(@address,'-',2),'-',-1) as province, substring_index(substring_index(@address,'-',3),'-',-1) as city, substring_index(@address,'-',-1) as district

1.5. Conditional judgment function case when

case when then when then else value end as field name

select case when 89>101 then '大于' else '小于' end as betl1_socom_data

2. Kettle conversion etl1 cleaning

The first table creation step is in the video

Field index does not mention the index algorithm recommended BTREE algorithm enhances query efficiency

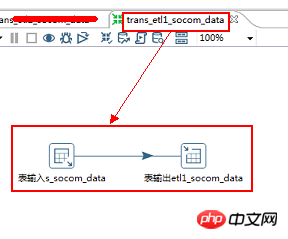

2.1.kettle file name: trans_etl1_socom_data

2.2.Including controls: table input>>>table output

2.3.Data flow direction: s_socom_data>>> ;>etl1_socom_data

2.4, table input 2.4, SQL script preliminary cleaning of com_district and com_industry fields

<code class="sql"><span class="hljs-keyword">select a.*,<span class="hljs-keyword">case <span class="hljs-keyword">when com_district <span class="hljs-keyword">like <span class="hljs-string">'%业' <span class="hljs-keyword">or com_district <span class="hljs-keyword">like <span class="hljs-string">'%织' <span class="hljs-keyword">or com_district <span class="hljs-keyword">like <span class="hljs-string">'%育' <span class="hljs-keyword">then <span class="hljs-literal">null <span class="hljs-keyword">else com_district <span class="hljs-keyword">end <span class="hljs-keyword">as com_district1 ,<span class="hljs-keyword">case <span class="hljs-keyword">when com_district <span class="hljs-keyword">like <span class="hljs-string">'%业' <span class="hljs-keyword">or com_district <span class="hljs-keyword">like <span class="hljs-string">'%织' <span class="hljs-keyword">or com_district <span class="hljs-keyword">like <span class="hljs-string">'%育' <span class="hljs-keyword">then <span class="hljs-keyword">concat(com_district,<span class="hljs-string">'-',com_industry) <span class="hljs-keyword">else com_industry <span class="hljs-keyword">end <span class="hljs-keyword">as com_industry_total ,<span class="hljs-keyword">replace(com_addr,<span class="hljs-string">'地 址:',<span class="hljs-string">'') <span class="hljs-keyword">as com_addr1 ,<span class="hljs-keyword">replace(com_phone,<span class="hljs-string">'电 话:',<span class="hljs-string">'') <span class="hljs-keyword">as com_phone1 ,<span class="hljs-keyword">replace(com_fax,<span class="hljs-string">'传 真:',<span class="hljs-string">'') <span class="hljs-keyword">as com_fax1 ,<span class="hljs-keyword">replace(com_mobile,<span class="hljs-string">'手机:',<span class="hljs-string">'') <span class="hljs-keyword">as com_mobile1 ,<span class="hljs-keyword">replace(com_url,<span class="hljs-string">'网址:',<span class="hljs-string">'') <span class="hljs-keyword">as com_url1 ,<span class="hljs-keyword">replace(com_email,<span class="hljs-string">'邮箱:',<span class="hljs-string">'') <span class="hljs-keyword">as com_email1 ,<span class="hljs-keyword">replace(com_contactor,<span class="hljs-string">'联系人:',<span class="hljs-string">'') <span class="hljs-keyword">as com_contactor1 ,<span class="hljs-keyword">replace(com_emploies_nums,<span class="hljs-string">'公司人数:',<span class="hljs-string">'') <span class="hljs-keyword">as com_emploies_nums1 ,<span class="hljs-keyword">replace(com_reg_capital,<span class="hljs-string">'注册资金:万',<span class="hljs-string">'') <span class="hljs-keyword">as com_reg_capital1 ,<span class="hljs-keyword">replace(com_type,<span class="hljs-string">'经济类型:',<span class="hljs-string">'') <span class="hljs-keyword">as com_type1 ,<span class="hljs-keyword">replace(com_product,<span class="hljs-string">'公司产品:',<span class="hljs-string">'') <span class="hljs-keyword">as com_product1 ,<span class="hljs-keyword">replace(com_desc,<span class="hljs-string">'公司简介:',<span class="hljs-string">'') <span class="hljs-keyword">as com_desc1<span class="hljs-keyword">from s_socom_data <span class="hljs-keyword">as a</span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></span></code><br/><br/><br/>

2.5. Table output

Notes:

①Involves crawler increment Do not check the cut table option during operation

②Data connection problem Select the database where the table in the table output is located

③Field mapping problem Ensure that the number of fields in the data stream and the physical table are consistent

3. Kettle conversion etl2 cleaning

First create a table and add 4 fields to demonstrate the steps in the video

The field index does not mention the index algorithm. It is recommended to use the BTREE algorithm to enhance query efficiency

Mainly for etl1 The generated new com_industry is field split and cleaned

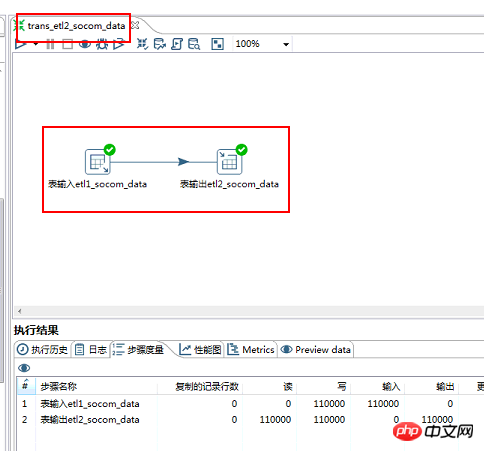

3.1.kettle file name: trans_etl2_socom_data

3.2. Includes controls: table input>>> table output

3.3. Data flow direction: etl1_socom_data>> ;>>etl2_socom_data

Notes:

① Do not check the clipping table option when it comes to crawler incremental operations

②Data connection issuesSelect the database where the table in the table output is located

③Field mapping issuesEnsure The number of fields in the data stream and the physical table are consistent

3.4. SQL script to dismantle com_industry Complete the cleaning of all fields in one step, the registered capital field and the time relationship without detailed disassembly and adjustment of the code

select a.*,case #行业为''的值 置为空when length(com_industry)=0 then null #其他的取第一个-分隔符之前else substring_index(com_industry,'-',1) end as com_industry1,case when length(com_industry)-length(replace(com_industry,'-',''))=0 then null #'交通运输、仓储和邮政业-' 这种值 行业2 也置为nullwhen length(com_industry)-length(replace(com_industry,'-',''))=1 and length(substring_index(com_industry,'-',-1))=0 then nullwhen length(com_industry)-length(replace(com_industry,'-',''))=1 then substring_index(com_industry,'-',-1)else substring_index(substring_index(com_industry,'-',2),'-',-1)end as com_industry2,case when length(com_industry)-length(replace(com_industry,'-',''))<=1 then nullwhen length(com_industry)-length(replace(com_industry,'-',''))=2 then substring_index(com_industry,'-',-1)else substring_index(substring_index(com_industry,'-',3),'-',-1)end as com_industry3,case when length(com_industry)-length(replace(com_industry,'-',''))<=2 then nullelse substring_index(com_industry,'-',-1)end as com_industry4from etl1_socom_data as a

4. Quality inspection of cleaning effect

4.1 Whether the crawler data source data is consistent with the website data

If your own work is to handle crawlers and data processing together, and the judgment has already been made when crawling, this step can be omitted. If you connect to the upstream crawler colleagues, this step must be judged first, otherwise the cleaning will be in vain, and the crawler colleagues are generally required. Storing the requested url is convenient for subsequent data processing to check the data quality

4.2 Calculate the crawler data source and the data volume of each etl cleaning data table

Note: There is no aggregation filtering of the data volume of the three tables in the SQL script Should be equal

4.2.1. The sql query for the following table is in the same database. If it is not in the same database, the name of the database where the table is located should be added after from.

Not recommended when the amount of data is large

select count(1) from s_socom_dataunion all select count(1) from etl1_socom_dataunion all select count(1) from etl2_socom_data

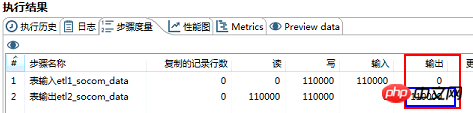

4.2.2 Comparison of the total data output of the table after the kettle conversion is completed

4.3 View etl cleaning quality

Ensure that the first two steps are correct. The self-inspection of the etl cleaning work responsible for data processing begins with writing a script to check the fields of data source cleaning. The socom website mainly cleans the region and industry and other fields. Replacement of redundant fields was done, so a script was used to check.

Find the page_url and website data for verification.

Writing like this makes it easy to check the cleaning status of a certain field.

select * from etl2_socom_data where com_district is null and length(com_industry)-length(replace(com_industry,'-',''))=3

This page data Comparison with the final cleaning data of the etl2_socom_data table

##etl2_socom_data table data

626062078, let’s learn Python together!

The above is the detailed content of How should Python crawler data be processed?. For more information, please follow other related articles on the PHP Chinese website!