Home >Backend Development >Python Tutorial >Detailed graphic explanation of Python set type (list tuple dict set generator)

Detailed graphic explanation of Python set type (list tuple dict set generator)

- 高洛峰Original

- 2017-03-20 10:24:002008browse

PythonThe built-in collection types include list, tuple, set, and dict.

List list: Looks like an array, but is more powerful than an array and supports functions such as indexing, slicing, search, and addition.

Tuple tuple: The function is similar to the list, but once generated, the length and elements are not variable (the elements of the elements are still variable), and it seems to be a more lightweight and safe list.

Dictionary dict: a key-value pair structure hash table, which has the same properties as a hash table. key is unordered and non-repeating, and addition, deletion and modification are convenient and fast.

set: An unordered and non-repeating set is a dict with only keys and no values. Java's HashSet is implemented using HashMap. I hope Python will not be like this. After all, set does not require value, so it is omitted. Lots of pointers.

Generator:

Called generator, or list comprehension, is a special data type# in python ##, is not actually a data structure, it only includes the algorithm and temporary status , and has the function of iteration.

First look at their memory usage, and use generators to generate set, dict, generator, tuple, and list of 100,000 elements. The memory consumed by dict, set, list, and tuple decreases in sequence, and the size of the generated objects is also the same. Since the generator does not generate a data table, there is no need to consume memory:import sys

from memory_profiler import profile

@profile

def create_data(data_size):

data_generator = (x for x in xrange(data_size))

data_set = {x for x in xrange(data_size)}

data_dict = {x:None for x in xrange(data_size)}

data_tuple = tuple(x for x in xrange(data_size))

data_list = [x for x in xrange(data_size)]

return data_set, data_dict, data_generator, data_tuple, data_list

data_size = 100000

for data in create_data(data_size):

print data.__class__, sys.getsizeof(data)

Line # Mem usage Increment Line Contents

================================================

14.6 MiB 0.0 MiB @profile

def create_data(data_size):

14.7 MiB 0.0 MiB data_generator = (x for x in xrange(data_size))

21.4 MiB 6.7 MiB data_set = {x for x in xrange(data_size)}

29.8 MiB 8.5 MiB data_dict = {x:None for x in xrange(data_size)}

33.4 MiB 3.6 MiB data_tuple = tuple(x for x in xrange(data_size))

38.2 MiB 4.8 MiB data_list = [x for x in xrange(data_size)]

38.2 MiB 0.0 MiB return data_set, data_dict, data_generator, data_tuple, data_list

<type 'set'> 4194528

<type 'dict'> 6291728

<type 'generator'> 72

<type 'tuple'> 800048

<type 'list'> 824464 Let’s look at the search performance again. dict and set are constant search times (O(1)), and list and tuple are linear search times (O (n)), use the generator to generate an object of specified size elements, and use randomly generated numbers to find: import time

import sys

import random

from memory_profiler import profile

def create_data(data_size):

data_set = {x for x in xrange(data_size)}

data_dict = {x:None for x in xrange(data_size)}

data_tuple = tuple(x for x in xrange(data_size))

data_list = [x for x in xrange(data_size)]

return data_set, data_dict, data_tuple, data_list

def cost_time(func):

def cost(*args, **kwargs):

start = time.time()

r = func(*args, **kwargs)

cost = time.time() - start

print 'find in %s cost time %s' % (r, cost)

return r, cost #返回数据的类型和方法执行消耗的时间

return cost

@cost_time

def test_find(test_data, data):

for d in test_data:

if d in data:

pass

return data.__class__.__name__

data_size = 100

test_size = 10000000

test_data = [random.randint(0, data_size) for x in xrange(test_size)]

#print test_data

for data in create_data(data_size):

test_find(test_data, data)

输出:

----------------------------------------------

find in <type 'set'> cost time 0.47200012207

find in <type 'dict'> cost time 0.429999828339

find in <type 'tuple'> cost time 5.36500000954

find in <type 'list'> cost time 5.53399991989A collection of 100 elements, each searched 1000W times, and the difference is very obvious. However, these random numbers can all be found in the collection. Modify the random number method so that half of the generated numbers can be found and half cannot be found. It can be seen from the printed information that list and tuple perform even worse when there are half of the worst search examples. def randint(index, data_size):

return random.randint(0, data_size) if (x % 2) == 0 else random.randint(data_size, data_size * 2)

test_data = [randint(x, data_size) for x in xrange(test_size)]

输出:

----------------------------------------------

find in <type 'set'> cost time 0.450000047684

find in <type 'dict'> cost time 0.397000074387

find in <type 'tuple'> cost time 7.83299994469

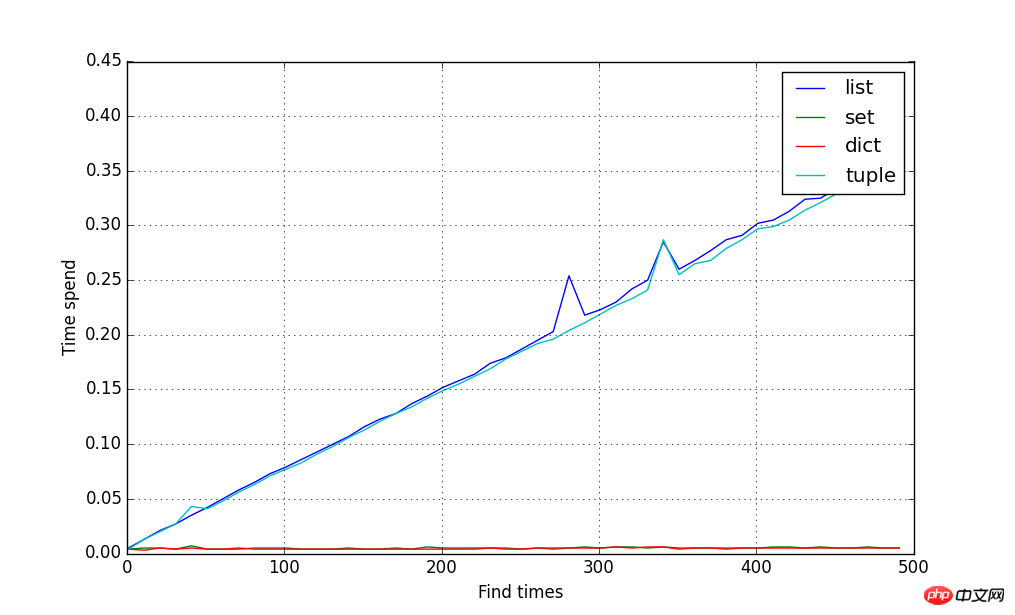

find in <type 'list'> cost time 8.27800011635The number of elements increases from 10 to 500. Statistics of the time required to search 100,000 times each time are used to fit the time consumption curve. The results are as shown below. The results prove that no matter how many elements there are in dict and set, they are always It is a constant search time. As the elements grow, dict and tuple show a linear growth time: import matplotlib.pyplot as plot

from numpy import *

data_size = array([x for x in xrange(10, 500, 10)])

test_size = 100000

cost_result = {}

for size in data_size:

test_data = [randint(x, size) for x in xrange(test_size)]

for data in create_data(size):

name, cost = test_find(test_data, data) #装饰器函数返回函数的执行时间

cost_result.setdefault(name, []).append(cost)

plot.figure(figsize=(10, 6))

xline = data_size

for data_type, result in cost_result.items():

yline = array(result)

plot.plot(xline, yline, label=data_type)

plot.ylabel('Time spend')

plot.xlabel('Find times')

plot.grid()

plot.legend()

plot.show()

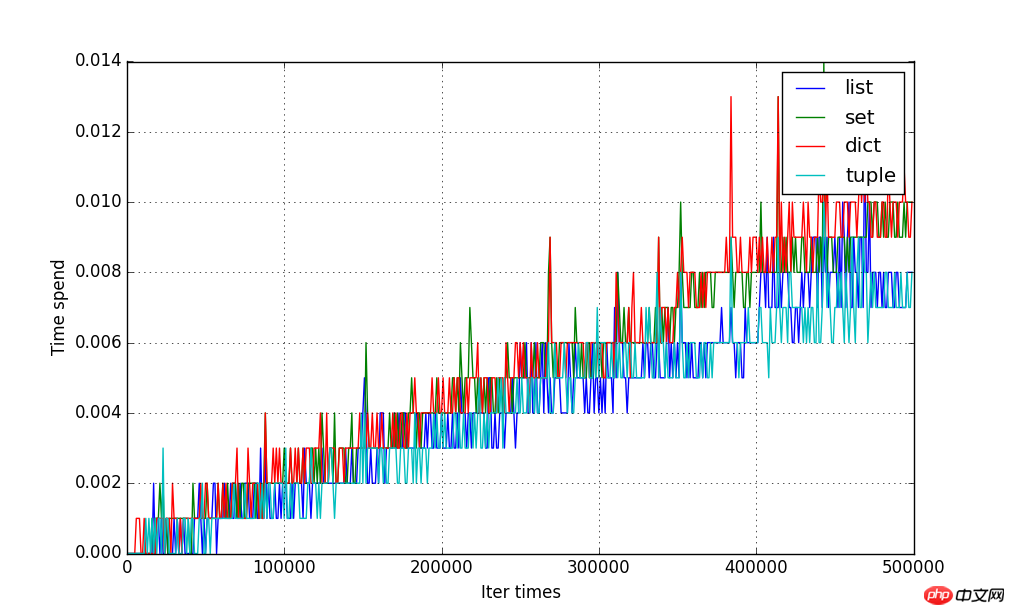

@cost_time

def test_iter(data):

for d in data:

pass

return data.__class__ .__name__

data_size = array([x for x in xrange(1, 500000, 1000)])

cost_result = {}

for size in data_size:

for data in create_data(size):

name, cost = test_iter(data)

cost_result.setdefault(name, []).append(cost)

#拟合曲线图

plot.figure(figsize=(10, 6))

xline = data_size

for data_type, result in cost_result.items():

yline = array(result)

plot.plot(xline, yline, label=data_type)

plot.ylabel('Time spend')

plot.xlabel('Iter times')

plot.grid()

plot.legend()

plot.show()

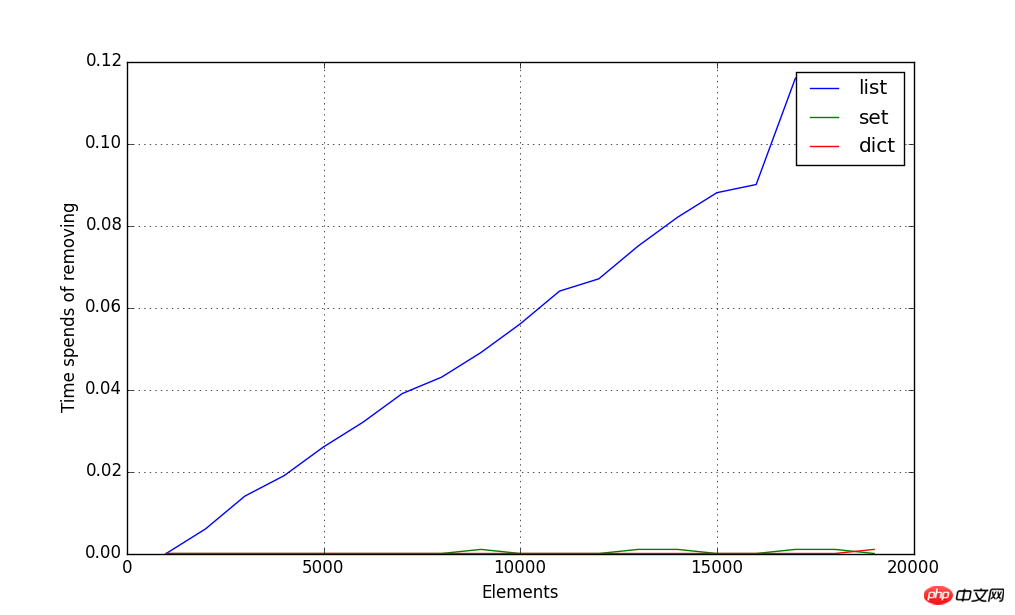

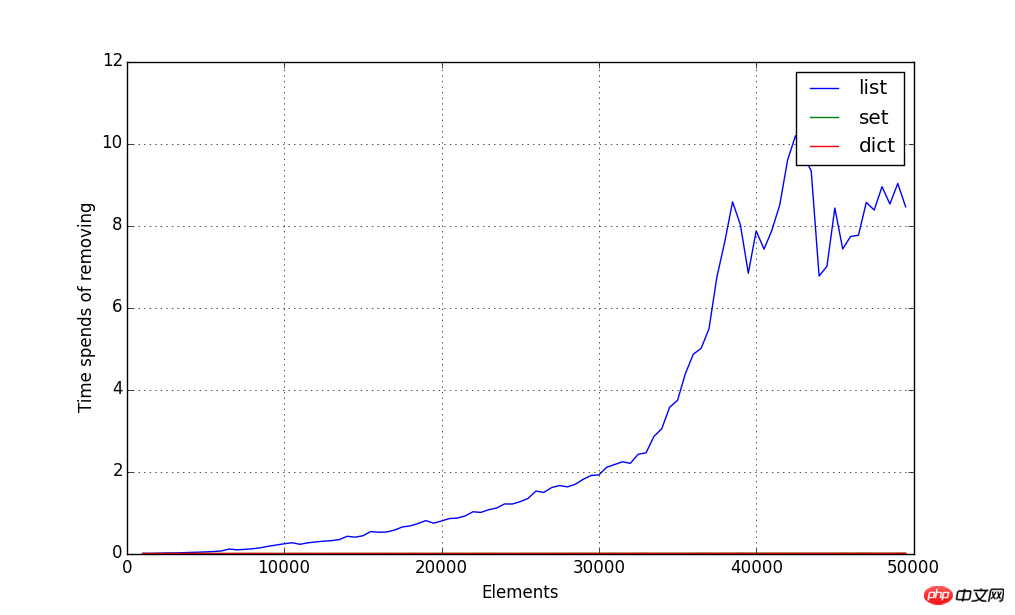

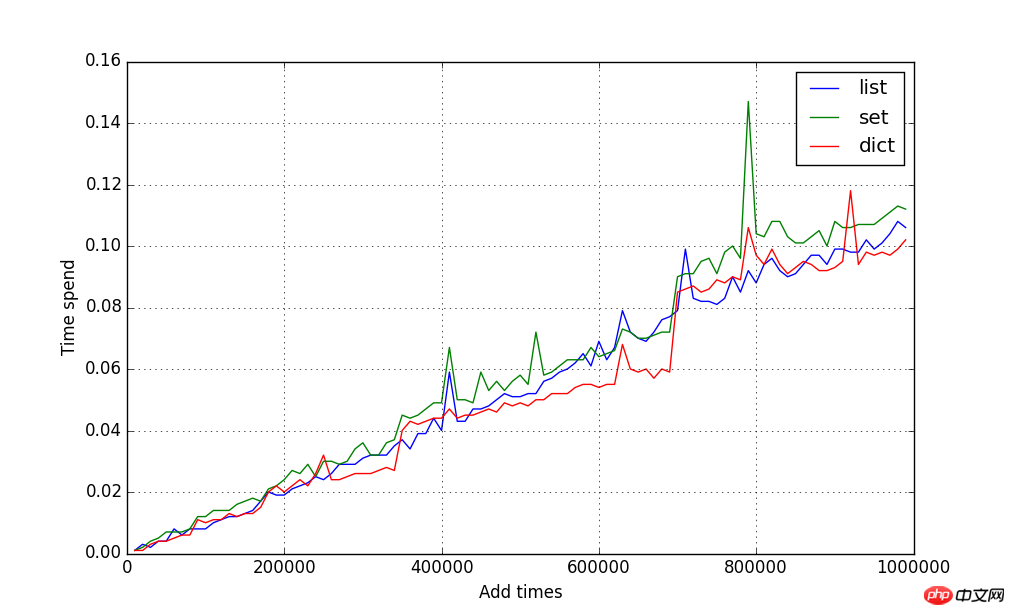

@cost_time

def test_dict_add(test_data, data):

for d in test_data:

data[d] = None

return data.__class__ .__name__

@cost_time

def test_set_add(test_data, data):

for d in test_data:

data.add(d)

return data.__class__ .__name__

@cost_time

def test_list_add(test_data, data):

for d in test_data:

data.append(d)

return data.__class__ .__name__

#初始化数据,指定每种类型对应它添加元素的方法

def init_data():

test_data = {

'list': (list(), test_list_add),

'set': (set(), test_set_add),

'dict': (dict(), test_dict_add)

}

return test_data

#每次检测10000增量大小的数据的添加时间

data_size = array([x for x in xrange(10000, 1000000, 10000)])

cost_result = {}

for size in data_size:

test_data = [x for x in xrange(size)]

for data_type, (data, add) in init_data().items():

name, cost = add(test_data, data) #返回方法的执行时间

cost_result.setdefault(data_type, []).append(cost)

plot.figure(figsize=(10, 6))

xline = data_size

for data_type, result in cost_result.items():

yline = array(result)

plot.plot(xline, yline, label=data_type)

plot.ylabel('Time spend')

plot.xlabel('Add times')

plot.grid()

plot.legend()

plot.show()

The above is the detailed content of Detailed graphic explanation of Python set type (list tuple dict set generator). For more information, please follow other related articles on the PHP Chinese website!