Home >Java >javaTutorial >Detailed explanation of JavaHashMap implementation principle

Detailed explanation of JavaHashMap implementation principle

- 高洛峰Original

- 2017-01-19 10:34:461375browse

HashMap is a Map interface implementation based on a hash table. It provides all optional mapping operations and allows the use of null values and null construction. It is not synchronized and does not guarantee the mapping order. Let's record the research on the implementation principle of HashMap.

HashMap internal storage

Inside HashMap, all key-value pair relationships are stored by maintaining a transient variable array table (also known as: bucket). The bucket is an array of Entry objects, and the size of the bucket Can be resized as needed, length must be a power of 2. The following code:

/**

* 一个空的entry数组,桶 的默认值

*/

static final Entry<?,?>[] EMPTY_TABLE = {};

/**

* 桶,按需调整大小,但必须是2的次幂

*/

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;Initial capacity and load factor

HashMap has two parameters that affect performance, initial capacity and load factor. Capacity is the number of buckets in the hash table, initial capacity is simply the capacity of the hash table when it is created, and load factor is a measure of how full the hash table can be before its capacity automatically increases. When the number of entries in the hash table exceeds the product of the load factor and the current capacity, the hash table needs to be rehashed (that is, the internal data structure is rebuilt), and new entries are created with twice the current capacity during reconstruction. The initial capacity and load factor can be set through the constructor. The default initial capacity is 16 entries, the maximum capacity is 2^30 entries, and the default load factor is 0.75.

The bucket is like a bucket that stores water. Its default initial water storage capacity is 16 units of water. By default, when the water is filled to 16*0.75, the capacity will be expanded first to 32 units the next time data is added. 0.75 is the load factor. The initial capacity and load factor can be set when creating the bucket. The maximum capacity of a bucket is 230 units of water. When the initial capacity setting quantity is greater than the maximum capacity, the maximum capacity shall prevail. When expanding, if it is greater than or equal to the maximum capacity, it will be returned directly.

The following is part of the source code of HashMap, which defines the default initial capacity, load factor and some other constants:

/**

* 默认初始化容量,必须为2的次幂The default initial capacity - MUST be a power of two.

*/

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**

* 最大容量,如果通过构造函数参数中传递初始化容量大于该最大容量了,也会使用该容量为初始化容量 * 必须是2的次幂且小于等于2的30次方 */

static final int MAXIMUM_CAPACITY = 1 << 30;

/**

* 默认的负载因子,可以通过构造函数指定

*/

static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**

* 一个空的数组表,当 桶没有初始化的时候

*/

static final Entry<?,?>[] EMPTY_TABLE = {};

/**

* 桶 , 存储所有的键值对条目,可以按需调整大小,长度大小必须为2的次幂

*/

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;

/**

* Map中键值对的数量,在每次新增或删除的时候都会对size进行+1或者-1操作.

*/

transient int size;

/**

* 负载值,需要调整大小的临界值,为:(capacity * load factor).在每次调整大小后会使用新的容量计算一下

* @serial

*/

// If table == EMPTY_TABLE then this is the initial capacity at which the

// table will be created when inflated.

int threshold;

/**

* 负载因子,如果构造函数中没有指定,则采用默认的负载因子,

*

* @serial

*/

final float loadFactor;

/**

* HashMap结构修改次数,结构修改时改变HashMap中的映射数量或修改其内部结构(例如,* rehash方法,重建内部数据结构),此字段用于在 * HashMap的集合视图上生成的迭代器都处理成快速失败的

*/

transient int modCount;Initial capacity and load factor performance adjustment

Usually, the default load The factor (0.75) seeks a compromise between time and space costs. Although the load factor is too high, it reduces the space overhead, but it also increases the query cost (this is reflected in most HashMap class operations, including get and put operations). The number of entries required in the map and its load factor should be taken into consideration when setting the initial capacity to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, no rehash operation will occur.

If many mapping relationships are to be stored in a HashMap instance, compared to performing automatic rehash operations on demand to increase the capacity of the table, creating it with a large enough initial capacity will make the mapping relationships more efficient. efficient storage.

The following is the code to reconstruct the HashMap data structure:

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) { // 如果容量已达最大限制,则设置下负载值后直接返回

threshold = Integer.MAX_VALUE;

return;

}

// 创建新的table存储数据

Entry[] newTable = new Entry[newCapacity];

// 将旧table中的数据转存到新table中去,这一步会花费比较多的时间

transfer(newTable, initHashSeedAsNeeded(newCapacity));

table = newTable;

// 最后设置下下次调整大小的负载值

threshold = (int) Math.min(newCapacity * loadFactor,

MAXIMUM_CAPACITY + 1);

}

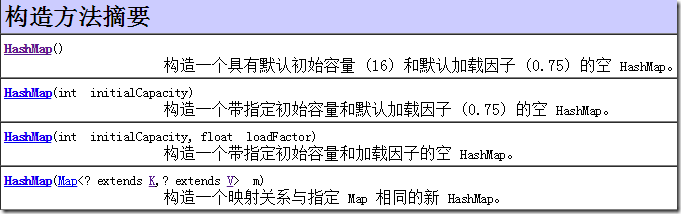

HashMap construction method

The four construction methods create a new HashMap from an existing Map. I will talk about it later. In fact, the first three construction methods ultimately call the third method with two parameters. If no parameters are passed, the default one is used. The numerical value and code are as follows:

/**

* Constructs an empty <tt>HashMap</tt> with the default initial capacity

* (16) and the default load factor (0.75).

*/

public HashMap() {

this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and the default load factor (0.75).

*

* @param initialCapacity the initial capacity.

* @throws IllegalArgumentException if the initial capacity is negative.

*/

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty <tt>HashMap</tt> with the specified initial

* capacity and load factor.

*

* @param initialCapacity the initial capacity

* @param loadFactor the load factor

* @throws IllegalArgumentException if the initial capacity is negative

* or the load factor is nonpositive

*/

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

threshold = initialCapacity;

init();

}As can be seen from the above, in the constructor, if the initial capacity is greater than the maximum capacity, it will be directly replaced by the maximum capacity.

put method

Let’s take a look at the more important parts of HashMap

/**

* 在此映射中关联指定值与指定建。如果该映射以前包含了一个该键的映射关系,则旧值被替换

*

* @param 指定将要关联的键

* @param 指定将要关联的值

* @return 与key关联的旧值,如果key没有任何映射关系,则返回null(返回null还可能表示该映射之前将null与key关联)

*/

public V put(K key, V value) {

if (table == EMPTY_TABLE) {

inflateTable(threshold);

}

if (key == null)

return putForNullKey(value);

int hash = hash(key);

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}1. First, in the put method, first determine whether the bucket is in the default uninitialized state. If it is not initialized, call the inflateTable method to initialize it, and then determine whether the parameter key is null. If it is null, call putForNullKey to specifically put data with a null key. The putForNullKey method is actually the same as the step 3 below. However, the default storage location of data with a null key is the first one, that is, the subscript defaults to 0.

2. If the key is not null, call the hash() method to obtain the hash value of the key. You can calculate the location where the key can be placed in the bucket based on the hash value and the length of the bucket through the indexFor method.

3. There is an attribute next in the Entry object, which can form a one-way linked list to store elements with the same hash value. Therefore, when the calculated hash value of the key is repeated, the storage location will also be repeated. You only need to determine whether the element in the storage location and the next attribute list of the element are completely consistent with the given key and the hash value of the key. If there is a completely consistent one, it means it already exists, replace the old value, and return the old value directly as the return value.

4. Increase the number of structure modifications by 1

5. Call the addEntry method to add the new key-value pair to the HashMap. The addEntity method first determines whether the current entry data is greater than or equal to the load value (bucket capacity * load factor) and the specified position of the bucket is not null. If it is greater than and the specified position is not null, adjust the bucket's capacity to the current capacity. 2 times. To adjust the bucket capacity, refer to the initial capacity and load factor performance adjustment catalog above. Recalculate the Hash value and calculate the storage location. Call the createEntry method and store it in the bucket

void addEntry(int hash, K key, V value, int bucketIndex) {

if ((size >= threshold) && (null != table[bucketIndex])) {

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

void createEntry(int hash, K key, V value, int bucketIndex) {

Entry<K,V> e = table[bucketIndex];

table[bucketIndex] = new Entry<>(hash, key, value, e);

size++;

}

/**

* Entry构造方法,创建一个新的Entry.

*/

Entry(int h, K k, V v, Entry<K,V> n) {

value = v;

next = n;

key = k;

hash = h;

}

6. 在 createEntry 方法中,首先获取指定位置的entry,然后新生成一个entry,在生成entry时把原有的entry存储到新生成的entry的next属性中(参考Entry的构造方法),并把指定位置的entry替换成新生成的。

因为新增条目的时候,需要计算hash值,长度不够时需要调整长度,当计算的存储位置已有元素的时候需要进行链表式的存储,所以使用HashMap新增操作的效率并不是太高。

get方法

首先看下get方法的源码:

/**

* 返回指定键所映射的值;如果对于该键来说,此映射不包含任何映射关系,则返回null

* 返回null值并不一定表明该映射不包含该键的映射,也可能改映射将该键显示的映射为null,可使用containsKey操作来区分这两种情况

* @see #put(Object, Object)

*/

public V get(Object key) {

if (key == null)

return getForNullKey();

Entry<K,V> entry = getEntry(key);

return null == entry ? null : entry.getValue();

}

final Entry<K,V> getEntry(Object key) {

if (size == 0) {

return null;

}

int hash = (key == null) ? 0 : hash(key);

for (Entry<K,V> e = table[indexFor(hash, table.length)];

e != null;

e = e.next) {

Object k;

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

return e;

}

return null;

}

get方法实现较简单,以下是几个步骤:

首先判断key是否为null,如果为null,则调用 getForNullKey 方法来获取,如果不为null则调用 getEntry 方法来获取。getForNullKey方法与getEntity基本上一致,只不过少了一个步骤,就是默认的key为null的存储位置在第一个,即下标为0,没有去计算位置而已。

getEntity方法根据key计算哈希值,然后用哈希值、桶的长度计算存储位置。

getEntity以获取指定位置的entry作为遍历的开始,遍历entry的next单链表,如果entry的哈希值与计算的哈希值一致且entry的key与指定的相等则返回entry

根据getEntity返回的值,get方法返回对应的值。

通过查看get的源码可以发现,get方法通过key的哈希值与桶的长度计算存储位置,基本上一下就能定位到要找的元素,即使再遍历几个重复哈希值的key,也是很快速的,因为哈希值相对唯一,所以HashMap对于查找性能是非常快的。

自定义对象作为HashMap的键

class User {

// 身份证号码

protected int idNumber;

public User(int id){

idNumber = id;

}

}

public class TestUser{

public static void main(String[] args) {

Map<User, String> map = new HashMap<User, String>();

for (int i=0; i<5; i++) {

map.put(new User(i), "姓名: " + i);

}

System.out.println("User3 的姓名:" + map.get(new User(3)));

}

}

输出:

User3 的姓名:null如上代码,通过自定义的User类实例作为HashMap的对象时,在打印的时候是无法找到User3的姓名的,因为User类自动继承基类Object,所以这里会自动使用Object的hashCode方法生成哈希值,而它默认是使用对象的地址计算哈希值的。因此new User(3)生成的第一个实例的哈希值与生成的第二个实例的哈希值是不一样的。但是如果只需要简单的覆盖hashCode方法,也是无法正常运作的,除非同时覆盖equals方法,它也是Object的一部分。HashMap使用equals()判断当前的键是否与表中存在的键相同,可以参考上面的get或put方法。

正确equals()方法必须满足下列5个条件:---参考《Java编程思想》—489页

自反性。对任意x,x.equals(x)一定返回true

对称性。对任意x和y,如果有y.equals(x)返回true,则x.equals(y)也返回true

传递性。对任意x,y,z,如果有x.equals(y)返回true,y.equals(z)返回true,则x.equals(z)一定返回true

一致性,对任意x和y,如果对象中用于等价比较的信息没有改变,那么无论调用x.equals(y)多少次,返回的结果应该保持一致,要么一致是true,要么一致是false.

对任何不是null的x,x.equals(null)一定返回false

再次强调:默认的Object.equals()只是比较对象的地址,所以一个new User(3)并不等于另一个new User(3)。因此,如果要使用自己的类作为HashMap的键,必须同时重载hashCode()和equals().

如下代码可以正常运作:

class User {

// 身份证号码

protected int idNumber;

public User(int id){

idNumber = id;

}

@Override

public int hashCode() {

return idNumber;

}

@Override

public boolean equals(Object obj) {

return obj instanceof User && (idNumber==((User)obj).idNumber);

}

}

public class TestUser{

public static void main(String[] args) {

Map<User, String> map = new HashMap<User, String>();

for (int i=0; i<5; i++) {

map.put(new User(i), "姓名: " + i);

}

System.out.println("User3 的姓名:" + map.get(new User(3)));

}

}

输出:

User3 的姓名:姓名: 3上面只是简单的在hashCode中返回了idNumber作为唯一的判别,用户也可以根据自己的业务实现自己的方法。在equals方法中,instanceof会悄悄的检查对象是否为null,如果instanceof左边的参数为null,则会返回false,如果equals()的参数不为null且类型正确,则基于每个对象中的实际的idNumber进行比较。从输出可以看出,现在的方式是正确的。

以上就是本文的全部内容,希望本文的内容对大家的学习或者工作能带来一定的帮助,同时也希望多多支持PHP中文网!

更多详解JavaHashMap实现原理相关文章请关注PHP中文网!