
Detailed explanation of Python crawler using cookies to implement simulated login examples

- 高洛峰 (Original)

- 2017-01-18 16:15:31

A cookie is data (usually encrypted) that a website stores on the user's local machine in order to identify the user and track the session.

For example, some websites require you to log in before you can see the information you want; without logging in, you only get guest mode. In that case we can use the urllib2 library to save the cookies from a previous login, load them again, and send them with our request to get the page we want and crawl it. Understanding cookies is mainly preparation for quickly simulating a login and crawling the target page.

In my previous post I used the urlopen() function to open a web page for crawling. It is just a simple page opener, and its only parameters are urlopen(url, data, timeout). These three parameters are far from enough to obtain the target page's cookies, so we need a different opener, one built around a CookieJar.

cookielib is another important module for Python crawlers. Combined with urllib2, it lets us crawl the content we want. A CookieJar object from this module captures cookies and resends them on subsequent requests, which is exactly what we need to simulate a login.
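To see what "capture and resend" means without touching a real site, here is a small sketch in Python 3, where cookielib is called http.cookiejar and urllib2's Request class lives in urllib.request. On every request and response, HTTPCookieProcessor does essentially this: it calls extract_cookies() on the response and add_cookie_header() on the next request. The FakeResponse class and the www.example.com URLs are hypothetical stand-ins for a real server reply.

```python
import email.message
import http.cookiejar
import urllib.request


class FakeResponse:
    """Hypothetical stand-in for a server reply; extract_cookies() only needs info()."""

    def __init__(self, set_cookie_values):
        self._headers = email.message.Message()
        for value in set_cookie_values:
            self._headers["Set-Cookie"] = value  # each assignment adds one header

    def info(self):
        return self._headers


jar = http.cookiejar.CookieJar()

# Capture: the "login" reply sets a session cookie, and the jar stores it.
login_req = urllib.request.Request("http://www.example.com/login")
jar.extract_cookies(FakeResponse(["SESSIONID=abc123; Path=/"]), login_req)

# Resend: the jar adds a matching Cookie header to the next request to the same host.
next_req = urllib.request.Request("http://www.example.com/grades")
jar.add_cookie_header(next_req)
print(next_req.get_header("Cookie"))
```

The second request goes out with `SESSIONID=abc123` even though our code never copied the value by hand. That is the behavior the login examples below rely on.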

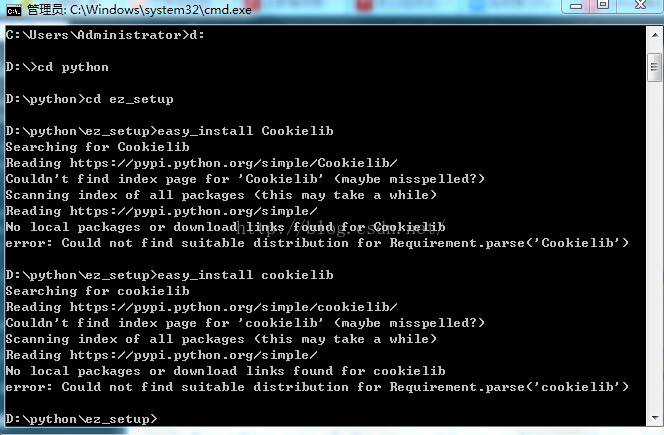

A special note: cookielib is a built-in module in Python 2.7, so there is no need to install it. To see which modules are available, look in the Lib folder of your Python installation, which contains the installed modules. I didn't think of this at first. When I couldn't find cookielib in PyCharm, I tried to install it with the quick-install option and got the error: Couldn't find index page for 'Cookielib' (maybe misspelled?)

Only then did I wonder whether it came with Python, and sure enough, it was in the Lib folder. I had wasted half an hour fiddling with all kinds of things~

Now let's introduce this module. Its main classes are CookieJar, FileCookieJar, MozillaCookieJar, and LWPCookieJar.

Their relationship: FileCookieJar derives from CookieJar, and MozillaCookieJar and LWPCookieJar both derive from FileCookieJar. We will cover their main uses below. The urllib2.urlopen() function does not support authentication, cookies, or other advanced HTTP features. To use them, you must call build_opener() (the function that can also make a Python program behave more like a browser, you know the one~) to create a custom Opener object.
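Here is a quick way to check the class relationships and the custom opener yourself, sketched in Python 3. In Python 3, cookielib became http.cookiejar and urllib2 became urllib.request, but the class names and their inheritance are unchanged. The sketch only inspects classes and builds an opener, so it needs no network access.

```python
import http.cookiejar
import urllib.request

# Print each file-backed cookie jar class with its direct base class.
for cls in (http.cookiejar.FileCookieJar,
            http.cookiejar.MozillaCookieJar,
            http.cookiejar.LWPCookieJar):
    print(cls.__name__, "derives from", cls.__bases__[0].__name__)

# build_opener() adds our HTTPCookieProcessor alongside its default handlers.
jar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
cookie_handlers = [h for h in opener.handlers
                   if isinstance(h, urllib.request.HTTPCookieProcessor)]
print(len(cookie_handlers), cookie_handlers[0].cookiejar is jar)
```

The opener holds exactly one cookie processor, and that processor points at our jar. This is why every request made through `opener` shares the same cookies.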

1. First, let's get the website's cookies

Example:

#coding=utf-8
import cookielib
import urllib2

mycookie = cookielib.CookieJar()  # create a CookieJar object to hold the cookies (mind the capitalization of CookieJar)
handler = urllib2.HTTPCookieProcessor(mycookie)  # use urllib2's HTTPCookieProcessor to create a handler that processes cookies
opener = urllib2.build_opener(handler)  # build an opener from the handler; it is used much like urlopen()
response = opener.open("http://www.baidu.com")  # the opener returns a response object

for item in mycookie:
    print "name=" + item.name
    print "value=" + item.value

Result:

name=BAIDUID
value=73BD718962A6EA0DAD4CB9578A08FDD0:FG=1
name=BIDUPSID
value=73BD718962A6EA0DAD4CB9578A08FDD0
name=H_PS_PSSID
value=1450_19035_21122_17001_21454_21409_21394_21377_21526_21189_21398
name=PSTM
value=1478834132
name=BDSVRTM
value=0
name=BD_HOME
value=0

That is the simplest way to get a site's cookies.
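If you are on Python 3, example 1 translates almost line for line. The sketch below is one way to try it without depending on www.baidu.com. It starts a tiny local server, a hypothetical stand-in that sets two made-up cookies named after Baidu's. It also passes an empty ProxyHandler so that any proxy configured in the environment is bypassed. Both of these are assumptions of this demo, not part of the original example.

```python
import http.cookiejar
import http.server
import threading
import urllib.request


class CookieSettingHandler(http.server.BaseHTTPRequestHandler):
    """Hypothetical local server standing in for www.baidu.com; it sets two made-up cookies."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Set-Cookie", "BAIDUID=demo-id; Path=/")
        self.send_header("Set-Cookie", "BD_HOME=0; Path=/")
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


# Port 0 lets the OS pick a free port; the server runs in a background thread.
server = http.server.HTTPServer(("127.0.0.1", 0), CookieSettingHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

mycookie = http.cookiejar.CookieJar()  # Python 3 name for cookielib.CookieJar
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler({}),  # assumption: bypass any proxy set in the environment
    urllib.request.HTTPCookieProcessor(mycookie),
)
with opener.open("http://127.0.0.1:%d/" % server.server_port) as response:
    response.read()

for item in mycookie:
    print("name=" + item.name)
    print("value=" + item.value)

server.shutdown()
server.server_close()
```

Apart from the local server, the only changes from the Python 2 version are the module names and `print` becoming a function.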

2. Save the cookie to the file

Above we obtained the cookies; now let's learn how to save them. For that we use MozillaCookieJar, a subclass of CookieJar.

Example:

#coding=utf-8
import cookielib
import urllib2

mycookie = cookielib.MozillaCookieJar()  # create a MozillaCookieJar object to hold the cookies (mind the capitalization of MozillaCookieJar)
handler = urllib2.HTTPCookieProcessor(mycookie)  # use urllib2's HTTPCookieProcessor to create a handler that processes cookies
opener = urllib2.build_opener(handler)  # build an opener from the handler; it is used much like urlopen()
response = opener.open("http://www.baidu.com")  # the opener returns a response object

for item in mycookie:
    print "name=" + item.name
    print "value=" + item.value

filename = 'mycookie.txt'  # name of the file to save the cookies in
mycookie.save(filename, ignore_discard=True, ignore_expires=True)

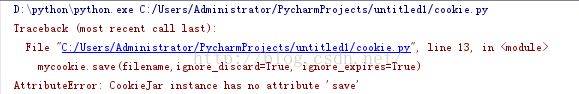

This example is a small change to the previous one: it uses MozillaCookieJar, a subclass of CookieJar. Why? Try replacing MozillaCookieJar with CookieJar, and the picture below makes the reason clear:

CookieJar has no save() method, so the call fails with an AttributeError~

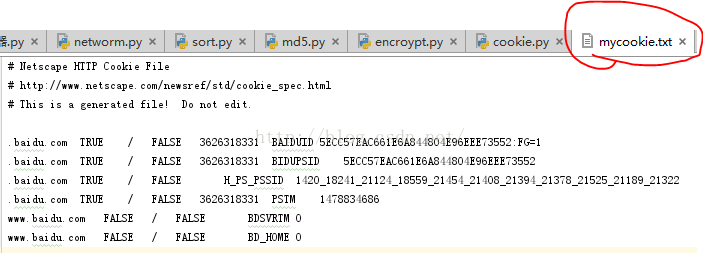
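You can confirm the missing save() without any network access. Here is a sketch in Python 3, where cookielib is named http.cookiejar. It checks which classes have a save() method and shows that FileCookieJar only declares it, leaving the actual file format to subclasses such as MozillaCookieJar.

```python
import http.cookiejar

# hasattr() on the class tells us whether a save() method exists at all.
has_save = {
    cls.__name__: hasattr(cls, "save")
    for cls in (http.cookiejar.CookieJar,
                http.cookiejar.FileCookieJar,
                http.cookiejar.MozillaCookieJar)
}
print(has_save)

# FileCookieJar.save() exists but raises NotImplementedError before writing anything.
try:
    http.cookiejar.FileCookieJar().save("mycookie.txt")
    base_save_works = True
except NotImplementedError:
    base_save_works = False
print("FileCookieJar.save() implemented:", base_save_works)
```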

In the save() method, ignore_discard=True means cookies are saved even if they are marked to be discarded (such as session cookies), and ignore_expires=True means cookies are saved even if they have already expired. If the file already exists, it is overwritten. Here we set both to True. After running, the cookies are saved to the mycookie.txt file. Let's check its contents:

In this way, we have successfully saved the cookies we want.
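To see what the two flags actually change, here is a small offline sketch in Python 3 (http.cookiejar). It builds three cookies by hand for a hypothetical host, www.example.com: a session cookie with no expiry time, one that has already expired, and one that is still valid. It then saves the jar with different flag combinations and lists which cookie names end up in the file. The helpers make_cookie and saved_names are made up for this demo.

```python
import http.cookiejar
import os
import tempfile
import time


def make_cookie(name, value, expires=None):
    """Hypothetical helper: a cookie for www.example.com; no expiry means a session cookie (discard=True)."""
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain="www.example.com", domain_specified=False, domain_initial_dot=False,
        path="/", path_specified=True,
        secure=False, expires=expires, discard=expires is None,
        comment=None, comment_url=None, rest={},
    )


now = int(time.time())
jar = http.cookiejar.MozillaCookieJar()
jar.set_cookie(make_cookie("SESSIONID", "abc123"))              # session cookie
jar.set_cookie(make_cookie("OLD", "stale", expires=now - 60))    # already expired
jar.set_cookie(make_cookie("KEEP", "yes", expires=now + 3600))   # still valid


def saved_names(**flags):
    """Save the jar to a temporary file with the given flags and return the cookie names written."""
    fd, path = tempfile.mkstemp(suffix=".txt")
    os.close(fd)
    try:
        jar.save(path, **flags)  # overwrites the file each time
        with open(path) as f:
            lines = [line for line in f if line.strip() and not line.startswith("#")]
        # Netscape format: domain, flag, path, secure, expires, name, value
        return sorted(line.split("\t")[5] for line in lines)
    finally:
        os.remove(path)


print(saved_names())
print(saved_names(ignore_discard=True))
print(saved_names(ignore_discard=True, ignore_expires=True))
```

With the defaults, only KEEP is written. Adding ignore_discard=True also writes the session cookie, and adding ignore_expires=True also keeps the expired one. Login state is often held in session cookies, which is one reason the examples here pass both flags.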

3. Get the cookie from the file and visit

#coding=utf-8
import urllib2
import cookielib
import urllib

# Step 1: prepare the account, password, and URL for the simulated login
postdata = urllib.urlencode({
    'stuid': '1605122162',
    'pwd': 'xxxxxxxxx'  # not revealing the password here, hehe
})
loginUrl = 'http://ids.xidian.edu.cn/authserver/login?service=http%3A%2F%2Fjwxt.xidian.edu.cn%2Fcaslogin.jsp'  # login URL of the academic affairs system (grade query site)

# Step 2: simulate the login and save the login cookies
filename = 'cookie.txt'  # text file to save the cookies in
mycookie = cookielib.MozillaCookieJar(filename)  # MozillaCookieJar instance that holds the cookies and later writes them to the file
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(mycookie))  # build an opener that handles cookies through mycookie
result = opener.open(loginUrl, postdata)  # passing postdata makes this a POST request
mycookie.save(ignore_discard=True, ignore_expires=True)  # save the cookies to cookie.txt

# Step 3: use the cookies to request another URL, the main academic affairs site
gradeUrl = 'http://ids.xidian.edu.cn/authserver/login?service'  # any URL that uses the same account and password works; request the grade query URL
result = opener.open(gradeUrl)
print result.read()

This creates an opener that carries cookies: when it visits the login URL, the cookies set by the login are saved, and those cookies are then used to visit other URLs.
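One thing to watch if you port the login step to Python 3: urllib.urlencode moved to urllib.parse.urlencode, and the request body must be bytes. Passing a str as POST data raises a TypeError, so the encoded string needs an .encode(). Below is a minimal sketch that reuses the form field names from the example above. The URL is a hypothetical placeholder, and a real login form may expect different fields.

```python
import urllib.parse
import urllib.request

# Form fields from the example above; the values are placeholders.
postdata = urllib.parse.urlencode({
    "stuid": "1605122162",
    "pwd": "xxxxxxxxx",
}).encode("utf-8")  # Python 3 expects the request body as bytes

# Hypothetical login URL; supplying data is what makes this a POST request.
login_req = urllib.request.Request("http://www.example.com/login", data=postdata)
print(login_req.get_method())
print(login_req.data)
```

Passing login_req to an opener built with HTTPCookieProcessor would send it with cookie handling attached, just as `opener.open(loginUrl, postdata)` does in the Python 2 code.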

Core idea: create an opener that holds the cookies. From then on, whenever we use that opener, it automatically sends the cookies saved earlier.
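The code above saves cookie.txt but keeps using the same opener, so it never reads the file back. Loading the file in a later run, as the section title describes, could look like the sketch below (Python 3, http.cookiejar). The temporary file, the SESSIONID cookie, and www.example.com are hypothetical stand-ins for a real login result. To stay offline, the sketch asks the jar to add the Cookie header to a Request directly instead of calling opener.open(), which would do the same thing through HTTPCookieProcessor.

```python
import http.cookiejar
import os
import tempfile
import urllib.request

# Hypothetical cookie file, standing in for the cookie.txt written by an earlier login run.
fd, cookie_file = tempfile.mkstemp(suffix=".txt")
os.close(fd)

# First run: pretend the login set a session cookie, then save it to the file.
first_run = http.cookiejar.MozillaCookieJar(cookie_file)
first_run.set_cookie(http.cookiejar.Cookie(
    version=0, name="SESSIONID", value="abc123",
    port=None, port_specified=False,
    domain="www.example.com", domain_specified=False, domain_initial_dot=False,
    path="/", path_specified=True, secure=False,
    expires=None, discard=True,  # a session cookie
    comment=None, comment_url=None, rest={},
))
first_run.save(ignore_discard=True, ignore_expires=True)

# Later run: load the saved cookies into a fresh jar. ignore_discard=True is needed
# here too; otherwise the session cookie would be skipped on load.
mycookie = http.cookiejar.MozillaCookieJar()
mycookie.load(cookie_file, ignore_discard=True, ignore_expires=True)
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(mycookie))

# opener.open(grade_url) would send the cookie automatically; offline, we ask the jar directly.
grade_req = urllib.request.Request("http://www.example.com/grades")
mycookie.add_cookie_header(grade_req)
print(grade_req.get_header("Cookie"))

os.remove(cookie_file)
```

Because the cookies now come from the file, the login step can be skipped in later runs for as long as the server still accepts the saved session.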

Thanks for reading. I hope this helps everyone, and thank you for supporting this site!
