
In-depth analysis of python Chinese garbled problem

- Author: 高洛峰 (original)

- Published: 2017-01-13 16:07:13

This article uses the character 哈 ("ha") as the example throughout. Its encodings are:

1. Unicode: code point U+54C8, stored as C854 in UTF-16 (little-endian byte order);

2. UTF-8: E59388;

3. GBK: B9FE.
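These byte values can be checked with a short sketch. It assumes Python 3, where `str` plays the role of Python 2's `unicode` and `bytes` plays the role of Python 2's `str`. The `utf-16-le` codec is used because C854 is the little-endian byte order; big-endian order would be 54C8.

```python
# Python 3 sketch: the code point and byte encodings of 哈 (U+54C8).
ha = "\u54c8"  # the character 哈


def hex_bytes(b: bytes) -> str:
    """Format bytes as uppercase hex, e.g. b'\\xb9\\xfe' -> 'B9FE'."""
    return b.hex().upper()


code_point = f"U+{ord(ha):04X}"
utf16le = hex_bytes(ha.encode("utf-16-le"))
utf8 = hex_bytes(ha.encode("utf-8"))
gbk = hex_bytes(ha.encode("gbk"))

print(code_point, utf16le, utf8, gbk)  # U+54C8 C854 E59388 B9FE
```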

1. str and unicode in Python

Chinese text has always been a major headache in Python, and encoding-conversion exceptions are thrown all the time. So what exactly are str and unicode in Python?

When people talk about "unicode" in Python, they usually mean a unicode object. For example, the unicode object for '哈哈' ("haha") is:

u'\u54c8\u54c8'

A str, by contrast, is a byte array. It holds the stored form of a unicode object after encoding (with UTF-8, GBK, cp936, GB2312, or some other encoding). By itself it is just a byte stream with no further meaning; to make its content display meaningfully, you must decode it with the correct encoding.

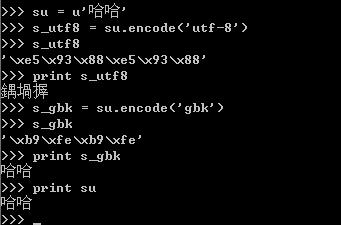
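The same distinction can be seen in a minimal sketch, assuming Python 3, where `str` corresponds to Python 2's unicode object and `bytes` to Python 2's str. One piece of text yields different byte arrays depending on the encoding, and each array only turns back into 哈哈 when decoded with the codec that produced it.

```python
# Python 3 sketch: text (Python 2's unicode) vs. bytes (Python 2's str).
text = "\u54c8\u54c8"           # 哈哈 as text: two code points
s_utf8 = text.encode("utf-8")   # the same text as a UTF-8 byte array
s_gbk = text.encode("gbk")      # ... and as a GBK byte array

print(len(text), len(s_utf8), len(s_gbk))  # 2 6 4
print(s_utf8)                              # b'\xe5\x93\x88\xe5\x93\x88'

# The bytes only mean 哈哈 again when decoded with the matching codec.
assert s_utf8.decode("utf-8") == text
assert s_gbk.decode("gbk") == text
```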

For example, encode the unicode object 哈哈 as UTF-8 to get a str named s_utf8. s_utf8 is a byte array that stores '\xe5\x93\x88\xe5\x93\x88', and nothing more. If you expect print s_utf8 to output 哈哈, you will be disappointed. Why?

Because print simply passes the bytes on to the operating system's console, and the console decodes that byte stream using the system's encoding. This explains why the UTF-8 string "哈哈" is printed as "鍝堝搱" on a Chinese Windows console: '\xe5\x93\x88\xe5\x93\x88' is interpreted as GB2312/GBK, and those bytes decode to "鍝堝搱". To emphasize it again: a str records a byte array, which is just one encoding's storage format. What you see when it is written to a file or printed depends entirely on which encoding is used to decode it.
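The misinterpretation can be reproduced in a small sketch, assuming Python 3 (`bytes` standing in for Python 2's str) and using an explicit `decode("gbk")` to imitate what a GBK console does with the bytes it receives. The six UTF-8 bytes are read as three two-byte GBK characters instead of 哈哈.

```python
# Python 3 sketch of the "wrong decoder" problem.
s_utf8 = "哈哈".encode("utf-8")    # b'\xe5\x93\x88\xe5\x93\x88'

garbled = s_utf8.decode("gbk")     # imitates a GBK console reading the bytes
correct = s_utf8.decode("utf-8")   # decode with the encoding actually used

# 6 bytes read as GBK give 3 double-byte characters, not the original 2.
print(len(garbled), len(correct))  # 3 2
```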

One more note on print: when a unicode object is passed to print, Python 2 first encodes it into bytes using the output stream's encoding (sys.stdout.encoding, which for a console reflects the console's code page, such as GBK on Chinese Windows).

2. Conversion of str and unicode objects

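Conversion between str and unicode objects is done with decode and encode: str.decode(encoding) turns a byte string into a unicode object, and unicode.encode(encoding) turns a unicode object into a byte string. The specific usage is as follows: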

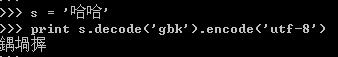

Converting the GBK-encoded '哈哈' to unicode, and then to UTF-8.
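The same round trip looks like this in a Python 3 sketch (an assumption: in Python 3, `bytes.decode` and `str.encode` take the places of Python 2's `str.decode` and `unicode.encode`). The GBK bytes are decoded to text first, and only then encoded as UTF-8.

```python
# Python 3 sketch: GBK bytes -> text -> UTF-8 bytes.
s_gbk = b"\xb9\xfe\xb9\xfe"      # 哈哈 encoded as GBK

text = s_gbk.decode("gbk")       # Python 2: str.decode -> unicode
s_utf8 = text.encode("utf-8")    # Python 2: unicode.encode -> str

print(s_utf8)  # b'\xe5\x93\x88\xe5\x93\x88'
```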

3. setdefaultencoding

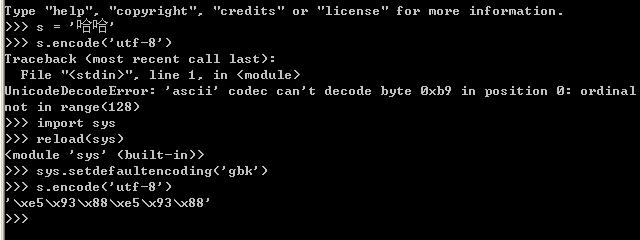

As shown in the demo code above:

When s (a GBK str) is encoded directly to UTF-8, an exception is thrown. After running the following code, however:

```python
import sys

reload(sys)  # Python 2 only: brings back sys.setdefaultencoding
sys.setdefaultencoding('gbk')
```

the conversion succeeds. Why? In Python 2, when you call encode on a str to convert it to another encoding, the str is first implicitly decoded into unicode using the default encoding (sys.getdefaultencoding()), which is normally 'ascii'. That is why the first conversion in the example above fails: the GBK bytes are not valid ASCII. Once the default encoding is set to 'gbk', the implicit decode succeeds, and so does the conversion.

As for reload(sys): Python 2's site module deletes sys.setdefaultencoding during startup, so sys has to be reloaded to get the method back.
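Python 3 removed this implicit step, so it cannot be shown directly. The sketch below imitates it instead: `py2_style_encode` and its `default_encoding` parameter are hypothetical helpers written only for this illustration, modelling Python 2's "decode with the default encoding, then encode" behaviour of `str.encode`.

```python
# Python 3 sketch imitating Python 2's implicit decode inside str.encode().
# py2_style_encode is a hypothetical helper, not part of any library.


def py2_style_encode(raw: bytes, target: str, default_encoding: str = "ascii") -> bytes:
    """Mimic Python 2's raw.encode(target) for a byte string raw."""
    text = raw.decode(default_encoding)  # the hidden first step
    return text.encode(target)           # the step the caller asked for


s = "哈哈".encode("gbk")  # b'\xb9\xfe\xb9\xfe'

# With the usual default ('ascii'), the hidden decode fails...
try:
    py2_style_encode(s, "utf-8")
    failed = False
except UnicodeDecodeError:
    failed = True

# ...but with 'gbk' as the default (as after sys.setdefaultencoding('gbk'))
# the conversion succeeds.
converted = py2_style_encode(s, "utf-8", default_encoding="gbk")
print(failed, converted)  # True b'\xe5\x93\x88\xe5\x93\x88'
```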

4. Reading files saved in different encodings

Create a file test.txt, saved in ANSI format (GBK on a Chinese-language Windows system), with the content:

abc中文

Read it with Python:

```python
# coding=gbk
print open("Test.txt").read()
```

Result: abc中文

Now save the file as UTF-8 and run the same code:

Result: abc涓枃

Obviously, decoding is required here:

```python
# coding=gbk
import codecs

print open("Test.txt").read().decode("utf-8")
```

Result: abc中文
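In Python 3 the decode step can also be handed to `open()` through its `encoding` argument. The sketch below assumes Python 3 and uses hypothetical file names inside a temporary directory: it writes abc中文 once as GBK ("ANSI" on Chinese Windows) and once as UTF-8, reads each back with its real encoding, and shows that decoding the UTF-8 file as GBK gives garbled text.

```python
# Python 3 sketch: the same text saved in two encodings and read back.
# File names are hypothetical; a temporary directory keeps it self-contained.
import os
import tempfile

content = "abc中文"

with tempfile.TemporaryDirectory() as tmp:
    gbk_path = os.path.join(tmp, "test_gbk.txt")
    utf8_path = os.path.join(tmp, "test_utf8.txt")

    # Write raw bytes so the on-disk encoding is explicit.
    with open(gbk_path, "wb") as f:
        f.write(content.encode("gbk"))
    with open(utf8_path, "wb") as f:
        f.write(content.encode("utf-8"))

    # Option 1: read bytes, then decode with the file's real encoding.
    with open(gbk_path, "rb") as f:
        from_gbk = f.read().decode("gbk")

    # Option 2: let open() decode by passing encoding=...
    with open(utf8_path, encoding="utf-8") as f:
        from_utf8 = f.read()

    # Decoding the UTF-8 file as GBK reproduces the garbled result;
    # errors="replace" avoids an exception on byte pairs GBK cannot map.
    with open(utf8_path, "rb") as f:
        wrong = f.read().decode("gbk", errors="replace")
```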

The file above was edited with EditPlus. But after editing test.txt with the Notepad that comes with Windows and saving it as UTF-8, running the script raised an error:

```
Traceback (most recent call last):
  File "ChineseTest.py", line 3, in
    print open("Test.txt").read().decode("utf-8")
UnicodeEncodeError: 'gbk' codec can't encode character u'\ufeff' in position 0: illegal multibyte sequence
```

It turns out that some programs, such as Notepad, insert three invisible bytes (0xEF 0xBB 0xBF, the byte order mark or BOM) at the start of a file when saving it as UTF-8. Decoded as UTF-8, these bytes become the character u'\ufeff', which the GBK console cannot encode.

So we need to strip these bytes ourselves when reading. Python's codecs module defines the constant codecs.BOM_UTF8 for this:

```python
# coding=gbk
import codecs

data = open("Test.txt").read()
if data[:3] == codecs.BOM_UTF8:
    data = data[3:]  # drop the 3-byte UTF-8 BOM
print data.decode("utf-8")
```

Result: abc中文
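Both the BOM problem and two ways around it fit in one Python 3 sketch. Assumptions: Python 3, with an in-memory byte string standing in for a Notepad-saved file; `strip_utf8_bom` is a hypothetical helper mirroring the manual slicing above, while `utf-8-sig` is a standard Python codec that skips a leading BOM.

```python
# Python 3 sketch: the UTF-8 BOM problem and two ways to handle it.
import codecs

with_bom = codecs.BOM_UTF8 + "abc中文".encode("utf-8")  # what Notepad writes
without_bom = "abc中文".encode("utf-8")

# Problem: plain "utf-8" keeps the BOM as the invisible character U+FEFF,
# which GBK cannot encode (the error in the traceback above).
text = with_bom.decode("utf-8")
starts_with_bom = text.startswith("\ufeff")
try:
    text.encode("gbk")
    gbk_ok = True
except UnicodeEncodeError:
    gbk_ok = False


def strip_utf8_bom(data: bytes) -> str:
    """Hypothetical helper: slice off the BOM if present, then decode."""
    if data.startswith(codecs.BOM_UTF8):
        data = data[len(codecs.BOM_UTF8):]
    return data.decode("utf-8")


manual = strip_utf8_bom(with_bom)
# The "utf-8-sig" codec drops a leading BOM and also accepts data without one.
builtin = with_bom.decode("utf-8-sig")
```

When reading a file in Python 3, `open(path, encoding="utf-8-sig")` applies the same codec while reading.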

5. The source file's encoding and the role of the coding declaration

What effect does the source file's encoding have on the strings declared in it? This question bothered me for a long time, and I finally have some clues. The file's encoding determines the byte encoding of the plain string literals declared in the source file. For example:

```python
str = '哈哈'
print repr(str)
```

a. If the file is saved as UTF-8, the value of str is '\xe5\x93\x88\xe5\x93\x88' (the UTF-8 encoding of 哈哈);

b. If the file is saved as GBK, the value of str is '\xb9\xfe\xb9\xfe' (the GBK encoding of 哈哈).

As mentioned in the first section, a string in Python is just a byte array. So when the str from case a is printed to a GBK console, it is displayed as garbled characters (鍝堝搱). When the str from case b is printed to a UTF-8 console, it is also garbled, or nothing is shown at all: '\xb9\xfe\xb9\xfe' is not valid UTF-8, so the console cannot decode it into any real characters.
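Case b can be checked with a Python 3 sketch (assumption: Python 3 `bytes` standing in for the Python 2 str). A strict UTF-8 decoder rejects the GBK bytes outright, and a lenient one (`errors="replace"`) yields only U+FFFD replacement characters, which matches the "garbled or blank" output described above.

```python
# Python 3 sketch of case b: GBK bytes shown on a UTF-8 console.
s_gbk = b"\xb9\xfe\xb9\xfe"  # 哈哈 in GBK

try:
    s_gbk.decode("utf-8")
    valid_utf8 = True
except UnicodeDecodeError:
    valid_utf8 = False

# A lenient decoder substitutes U+FFFD for bytes it cannot decode.
shown = s_gbk.decode("utf-8", errors="replace")
print(valid_utf8, set(shown) == {"\ufffd"})  # False True
```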

Having covered the file's encoding, let's look at the coding declaration. A line such as #coding=gbk is placed at the top of a file to declare its encoding, but what does it actually do? As far as I can tell, it has only three functions:

- It declares that non-ASCII characters, usually Chinese, appear in the source file. Without it, Python 2 rejects a source file containing non-ASCII bytes with a SyntaxError.

- In more advanced IDEs, the IDE saves the file in the encoding you specify.

- For literals like u'哈' in the source code, it determines the encoding used to decode '哈' into unicode. This is also a confusing point; see the example:

#coding:gbk