Home >Java >javaTutorial >Write Java Zhihu crawler with zero foundation to obtain content recommended by Zhihu editors (2)

Write Java Zhihu crawler with zero foundation to obtain content recommended by Zhihu editors (2)

- 黄舟Original

- 2016-12-24 11:26:511253browse

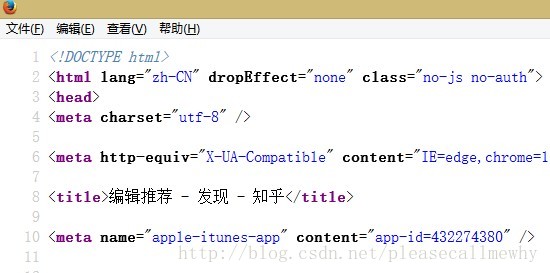

The webpage can set the webpage encoding through the charset of the meta tag, for example:

<meta charset="utf-8" />

We right-click to view the page source code:

You can see that Zhihu uses UTF-8 encoding.

Here I will explain to you the difference between viewing the page source code and inspecting elements.

Viewing the page source code displays all the code of the entire page. It is not formatted according to HTML tags. It is equivalent to viewing the source code directly. This method is more useful for viewing the information of the entire web page, such as meta.

Inspect element, or some browsers call it view element, which is to view the element you right-click, such as a div or img. It is more suitable for viewing the attributes and tags of an object individually.

Okay, now we know that the problem lies in the encoding, and the next step is to convert the encoding of the captured content.

It is very simple to implement in java. You only need to specify the encoding method in the InputStreamReader:

// 初始化 BufferedReader输入流来读取URL的响应

in = new BufferedReader(new InputStreamReader(

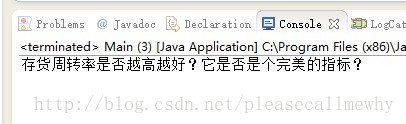

connection.getInputStream(),"UTF-8"));Run the program again at this time, and you will find that the title can be displayed normally:

Okay! very good!

But now there is only one title, what we need is all the titles.

We slightly modify the regular expression and store the searched results in an ArrayList:

import java.io.*;

import java.net.*;

import java.util.ArrayList;

import java.util.regex.*;

public class Main {

static String SendGet(String url) {

// 定义一个字符串用来存储网页内容

String result = "";

// 定义一个缓冲字符输入流

BufferedReader in = null;

try {

// 将string转成url对象

URL realUrl = new URL(url);

// 初始化一个链接到那个url的连接

URLConnection connection = realUrl.openConnection();

// 开始实际的连接

connection.connect();

// 初始化 BufferedReader输入流来读取URL的响应

in = new BufferedReader(new InputStreamReader(

connection.getInputStream(), "UTF-8"));

// 用来临时存储抓取到的每一行的数据

String line;

while ((line = in.readLine()) != null) {

// 遍历抓取到的每一行并将其存储到result里面

result += line;

}

} catch (Exception e) {

System.out.println("发送GET请求出现异常!" + e);

e.printStackTrace();

}

// 使用finally来关闭输入流

finally {

try {

if (in != null) {

in.close();

}

} catch (Exception e2) {

e2.printStackTrace();

}

}

return result;

}

static ArrayList<String> RegexString(String targetStr, String patternStr) {

// 预定义一个ArrayList来存储结果

ArrayList<String> results = new ArrayList<String>();

// 定义一个样式模板,此中使用正则表达式,括号中是要抓的内容

Pattern pattern = Pattern.compile(patternStr);

// 定义一个matcher用来做匹配

Matcher matcher = pattern.matcher(targetStr);

// 如果找到了

boolean isFind = matcher.find();

// 使用循环将句子里所有的kelvin找出并替换再将内容加到sb里

while (isFind) {

//添加成功匹配的结果

results.add(matcher.group(1));

// 继续查找下一个匹配对象

isFind = matcher.find();

}

return results;

}

public static void main(String[] args) {

// 定义即将访问的链接

String url = "http://www.zhihu.com/explore/recommendations";

// 访问链接并获取页面内容

String result = SendGet(url);

// 使用正则匹配图片的src内容

ArrayList<String> imgSrc = RegexString(result, "question_link.+?>(.+?)<");

// 打印结果

System.out.println(imgSrc);

}

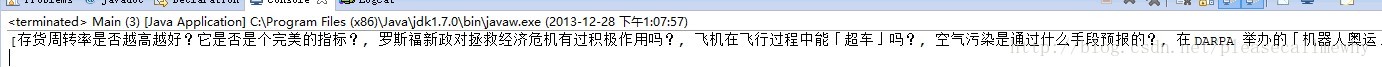

}In this way, all results can be matched (because the ArrayList is printed directly, there will be some square brackets and commas):

OK, this is the first step of Zhihu crawler.

But we can see that there is no way to capture all the questions and answers in this way.

We need to design a Zhihu encapsulation class to store all captured objects.

The above is the content of writing Java Zhihu crawler with zero basic knowledge to obtain content recommended by Zhihu editors (2). For more related content, please pay attention to the PHP Chinese website (www.php.cn)!