Last time we discussed multi-level caching. This chapter explains in detail how to design the in-memory cache level.

1: Analysis and Design

Suppose a project with a fair amount of concurrency needs a multi-level cache, as follows:

Before actually designing the memory cache, we need to consider the following issues:

1: Data replacement between memory and Redis: keep the in-memory hit rate as high as possible to reduce the pressure on the next level.

2: Memory capacity is limited, so the number of cached entries must be bounded.

3: Hot data is updated at different rates, so the expiration time of each key must be configurable.

4: A good strategy for deleting expired entries.

5: Keep the complexity of the cache data structure as low as possible.

About replacement and hit rate: we use the LRU algorithm because it is simple to implement and gives a good key hit rate.

LRU means: evict the data that has gone unused for the longest time. Data that is accessed frequently is hot data.

About the LRU data structure: because keys must be promoted and evicted by priority, an ordered structure is required. Most implementations I have seen use a linked list: new data is inserted at the head, and data that is hit is moved to the head. Insertion is O(1), but finding an entry in order to move or read it is O(N).
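The linked-list approach described above can be sketched as follows. This is a minimal, single-threaded illustration rather than any particular library's implementation; the class name `LinkedListLru` and its members are hypothetical, and `maxSize` is assumed to be at least 1. The `FindNode` helper walks the list from the head, which is where the O(N) cost on every hit comes from.

```csharp
using System.Collections.Generic;

// Hypothetical, single-threaded LRU built only on a linked list.
public class LinkedListLru
{
    private readonly LinkedList<KeyValuePair<string, string>> list =
        new LinkedList<KeyValuePair<string, string>>();
    private readonly int maxSize; // assumed to be >= 1

    public LinkedListLru(int maxSize) { this.maxSize = maxSize; }

    public int Count { get { return list.Count; } }

    // O(N): walk the list until the node for this key is found.
    private LinkedListNode<KeyValuePair<string, string>> FindNode(string key)
    {
        for (var node = list.First; node != null; node = node.Next)
        {
            if (node.Value.Key == key) return node;
        }
        return null;
    }

    public void Add(string key, string value)
    {
        // The duplicate check is a scan; inserting at the head itself is O(1).
        var existing = FindNode(key);
        if (existing != null) list.Remove(existing);        // replace an old entry
        else if (list.Count >= maxSize) list.RemoveLast();  // evict the least recently used
        list.AddFirst(new KeyValuePair<string, string>(key, value));
    }

    public bool TryGet(string key, out string value)
    {
        var node = FindNode(key);
        if (node == null) { value = null; return false; }
        list.Remove(node);   // O(1) once the node is known
        list.AddFirst(node); // a hit moves the entry back to the head
        value = node.Value.Value;
        return true;
    }
}
```

With a capacity of 2, adding `a` and `b`, reading `a`, and then adding `c` evicts `b`, because `a` was moved to the head by the read.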

Is there something with lower complexity? A Dictionary offers O(1) average lookup and insertion, which is the best we can get. The question is then how to keep track of each key's priority.

2: O(1) LRU implementation

We define an LRUCache class and use ConcurrentDictionary as the cache container to ensure thread safety.

    using System.Collections.Concurrent;
    using System.Collections.Generic;
    using System.Threading;

    public class LRUCache<TValue> : IEnumerable<KeyValuePair<string, TValue>>
    {
        private long ageToDiscard = 0; // age from which eviction starts
        private long currentAge = 0;   // newest age currently in the cache
        private int maxSize = 0;       // maximum cache capacity
        private readonly ConcurrentDictionary<string, TrackValue> cache;

        public LRUCache(int maxKeySize)
        {
            cache = new ConcurrentDictionary<string, TrackValue>();
            maxSize = maxKeySize;
        }

        // GetEnumerator and the other members are omitted in this excerpt
    }

The two auto-incrementing counters ageToDiscard and currentAge defined above mark how recent each key in the cache is.

The core implementation steps are as follows:

1: Each time a key is added, currentAge is incremented and the new value is assigned to the Age of that cache entry. currentAge only ever increases.

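    public void Add(string key, TValue value)
    {
        Adjust(key); // make room first if the cache is full
        var result = new TrackValue(this, value);
        cache.AddOrUpdate(key, result, (k, o) => result);
    }

    // TrackValue is nested inside LRUCache<TValue>, which is why it can
    // use TValue and read the private currentAge field
    public class TrackValue
    {
        public readonly TValue Value;
        public long Age;

        public TrackValue(LRUCache<TValue> lv, TValue tv)
        {
            Age = Interlocked.Increment(ref lv.currentAge); // stamp with the newest age
            Value = tv;
        }
    }

2: When adding, if the maximum count has been reached, check whether the dictionary contains a key whose age equals ageToDiscard. If it does not, keep incrementing ageToDiscard and checking again. Once such a key is found, delete it, and the add can proceed.

In the simple case where the cache is full and no key has been re-added, ageToDiscard + maxSize = currentAge. The oldest entry can therefore be identified from its age alone, which is what lets the design aim at O(1) eviction instead of moving linked-list nodes.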
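To make the age bookkeeping concrete, here is a hypothetical single-threaded sketch that is separate from the article's `LRUCache`. It adds one thing that is an assumption of this sketch and not part of the original design: a second dictionary, `keyOfAge`, mapping each age to its key, so that the entry with age `ageToDiscard` is found by a direct lookup. The names `AgeWindowLru` and `Touch` are invented for illustration, and `Touch` stands for both adding a key and hitting an existing one.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical single-threaded sketch of age-based eviction.
public class AgeWindowLru
{
    private long ageToDiscard = 0; // every age up to this one has been checked for eviction
    private long currentAge = 0;   // newest age handed out so far
    private readonly int maxSize;
    private readonly Dictionary<string, long> ageOfKey = new Dictionary<string, long>();
    // Assumed extra index (not in the article): age -> key.
    private readonly Dictionary<long, string> keyOfAge = new Dictionary<long, string>();

    public AgeWindowLru(int maxSize)
    {
        if (maxSize < 1) throw new ArgumentOutOfRangeException(nameof(maxSize));
        this.maxSize = maxSize;
    }

    public long AgeToDiscard => ageToDiscard;
    public long CurrentAge => currentAge;
    public bool Contains(string key) => ageOfKey.ContainsKey(key);

    // Adding a new key or hitting an existing one gives it the newest age.
    public void Touch(string key)
    {
        if (ageOfKey.TryGetValue(key, out long oldAge))
        {
            keyOfAge.Remove(oldAge); // leaves a gap that eviction skips later
        }
        else
        {
            while (ageOfKey.Count >= maxSize)
            {
                ageToDiscard++;
                if (keyOfAge.TryGetValue(ageToDiscard, out string victim))
                {
                    keyOfAge.Remove(ageToDiscard);
                    ageOfKey.Remove(victim); // evict the oldest live entry
                }
            }
        }
        currentAge++;
        ageOfKey[key] = currentAge;
        keyOfAge[currentAge] = key;
    }
}
```

With `maxSize` 3, touching `a`, `b`, `c` assigns ages 1, 2, 3. Touching `d` advances `ageToDiscard` to 1, evicts `a`, and gives `d` age 4, so `ageToDiscard + maxSize` equals `currentAge`. Because `ageToDiscard` only moves forward and never passes `currentAge`, the total eviction work in this sketch is bounded by the number of ages handed out. The article's own `Adjust` method, which works directly on the concurrent dictionary, follows.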

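    public void Adjust(string key)
    {
        while (cache.Count >= maxSize)
        {
            long ageToDelete = Interlocked.Increment(ref ageToDiscard);
            // Note: FirstOrDefault enumerates the dictionary, so as written
            // this lookup by age is a linear scan rather than O(1)
            var toDiscard =
                cache.FirstOrDefault(p => p.Value.Age == ageToDelete);
            if (toDiscard.Key == null)
                continue; // no entry has this age, try the next one
            TrackValue old;
            cache.TryRemove(toDiscard.Key, out old);
        }
    }

Expiration and deletion strategy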

In most cases, the LRU algorithm has a high hit rate for hot data. However, a sudden burst of one-off accesses fills memory with cold data. This is cache pollution: LRU can no longer hit the hot data, and the hit rate of the cache system drops sharply.

Variant algorithms such as LRU-K, 2Q, and MQ can also be used to improve the hit rate.

Expiration configuration

1: We set a maximum expiration time to keep cold data from staying resident in memory.

2: In most cases, each cached item has different freshness requirements, so we also add a per-key expiration time.

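    private TimeSpan maxTime; // maximum lifetime of any entry

    public LRUCache(int maxKeySize, TimeSpan maxExpireTime)
    {
        cache = new ConcurrentDictionary<string, TrackValue>();
        maxSize = maxKeySize;
        maxTime = maxExpireTime;
    }

    // TrackValue gains a creation time and a per-key expiration time
    public readonly DateTime CreateTime;
    public readonly TimeSpan ExpireTime;

Deletion strategy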

1: For expired keys, timed deletion would be ideal because it frees the occupied memory as quickly as possible, but a large number of timers is clearly too heavy on the CPU.

2: So we use lazy deletion: when a key is read, check whether it has expired, and delete it immediately if it has.

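    public Tuple<TrackValue, bool> CheckExpire(string key)
    {
        TrackValue result;
        if (cache.TryGetValue(key, out result))
        {
            var age = DateTime.Now.Subtract(result.CreateTime);
            // Expired if older than the global maximum or the key's own limit
            if (age >= maxTime || age >= result.ExpireTime)
            {
                TrackValue old;
                cache.TryRemove(key, out old);
                return Tuple.Create(default(TrackValue), false);
            }
        }
        // Not expired; note that result is null when the key was not found
        return Tuple.Create(result, true);
    }

3: Although lazy deletion performs best, it still does not solve the cache pollution problem for cold data, so we also need periodic cleanup.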

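For example, start a thread that walks through all keys and checks them every 5 minutes. This policy should be configurable to suit the actual scenario.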
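The article does not show how the periodic check is scheduled. One possible way, sketched here with `System.Threading.Timer`, is a small helper that runs a sweep callback at a configurable interval. The class name `PeriodicInspector` and its parameter names are hypothetical, and skipping a tick while the previous sweep is still running is a design choice of this sketch, not something the article specifies.

```csharp
using System;
using System.Threading;

// Hypothetical helper: runs a sweep callback at a fixed, configurable interval.
public sealed class PeriodicInspector : IDisposable
{
    private readonly Timer timer;
    private int running = 0; // 1 while a sweep is in progress

    public PeriodicInspector(Action sweep, TimeSpan interval)
    {
        if (sweep == null) throw new ArgumentNullException(nameof(sweep));
        timer = new Timer(_ =>
        {
            // Skip this tick if the previous sweep has not finished yet.
            if (Interlocked.Exchange(ref running, 1) == 1) return;
            try { sweep(); }
            finally { Interlocked.Exchange(ref running, 0); }
        }, null, interval, interval);
    }

    // Stops future ticks.
    public void Dispose() { timer.Dispose(); }
}
```

With the cache from this article, the callback would be its `Inspection` method, for example `new PeriodicInspector(cache.Inspection, TimeSpan.FromMinutes(5))`, where `cache` is an `LRUCache` instance.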

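    public void Inspection()
    {
        // Walk every key; CheckExpire removes the ones that have expired
        foreach (var item in this)
        {
            CheckExpire(item.Key);
        }
    }

Lazy deletion plus periodic deletion basically meets our needs.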
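Putting lazy deletion together with the LRU age idea, a read path might look like the sketch below. The article does not show its own Get method, so everything here is an assumption: the class `ReadPathSketch`, its nested `Entry` type, the injectable clock, and the `AgeOf` helper are invented for illustration. Capacity eviction and the global maximum lifetime are left out to keep the example short. The point is that a hit both passes the expiry check and receives the newest age, which plays the role of "move to the head" in the linked-list version.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical read path: lazy expiry check plus age refresh on a hit.
public class ReadPathSketch<TValue>
{
    private sealed class Entry
    {
        public TValue Value;
        public long Age;
        public DateTime CreateTime;
        public TimeSpan ExpireTime;
    }

    private long currentAge = 0;
    private readonly ConcurrentDictionary<string, Entry> cache =
        new ConcurrentDictionary<string, Entry>();
    private readonly Func<DateTime> now; // injectable clock, assumed for testability

    public ReadPathSketch(Func<DateTime> clock = null)
    {
        now = clock ?? (() => DateTime.UtcNow);
    }

    public void Add(string key, TValue value, TimeSpan expireTime)
    {
        cache[key] = new Entry
        {
            Value = value,
            Age = Interlocked.Increment(ref currentAge),
            CreateTime = now(),
            ExpireTime = expireTime
        };
    }

    // Returns the entry's current age, or -1 if the key is absent.
    public long AgeOf(string key)
    {
        return cache.TryGetValue(key, out var e) ? Interlocked.Read(ref e.Age) : -1;
    }

    public bool TryGet(string key, out TValue value)
    {
        value = default(TValue);
        if (!cache.TryGetValue(key, out var entry)) return false; // miss

        if (now() - entry.CreateTime >= entry.ExpireTime)
        {
            cache.TryRemove(key, out _); // lazy deletion on read
            return false;
        }

        // Hit: refresh the age so this entry becomes the most recently used.
        Interlocked.Exchange(ref entry.Age, Interlocked.Increment(ref currentAge));
        value = entry.Value;
        return true;
    }
}
```

A caller that gets `false` back cannot tell a miss from an expired entry, which is usually fine for a cache: in both cases the value has to be loaded from the next level.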

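Summary

If we kept refining this, it would become the prototype of an in-memory database, similar to Redis.

For example, we could add notifications when keys are deleted and support more data types. This article also draws on the implementations of Redis and Orleans.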

计算机编程中常见的if语句是什么Jan 29, 2023 pm 04:31 PM

计算机编程中常见的if语句是什么Jan 29, 2023 pm 04:31 PM计算机编程中常见的if语句是条件判断语句。if语句是一种选择分支结构,它是依据明确的条件选择选择执行路径,而不是严格按照顺序执行,在编程实际运用中要根据程序流程选择适合的分支语句,它是依照条件的结果改变执行的程序;if语句的简单语法“if(条件表达式){// 要执行的代码;}”。

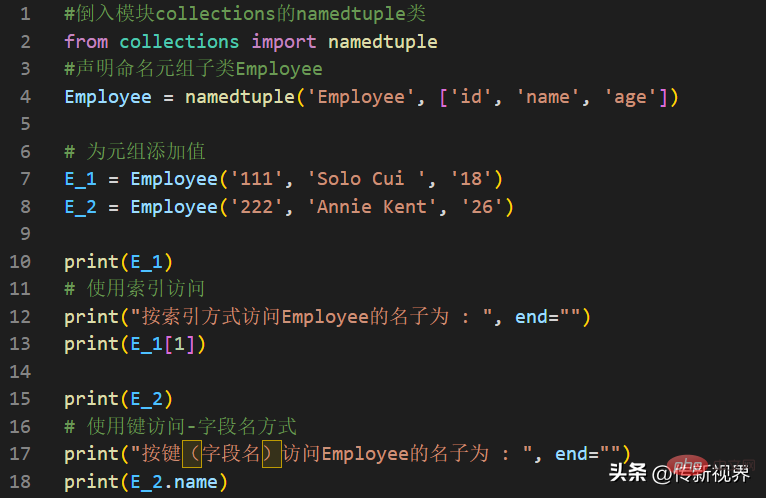

Python编程:详解命名元组(namedtuple)的使用要点Apr 11, 2023 pm 09:22 PM

Python编程:详解命名元组(namedtuple)的使用要点Apr 11, 2023 pm 09:22 PM前言本文继续来介绍Python集合模块,这次主要简明扼要的介绍其内的命名元组,即namedtuple的使用。闲话少叙,我们开始——记得点赞、关注和转发哦~ ^_^创建命名元组Python集合中的命名元组类namedTuples为元组中的每个位置赋予意义,并增强代码的可读性和描述性。它们可以在任何使用常规元组的地方使用,且增加了通过名称而不是位置索引方式访问字段的能力。其来自Python内置模块collections。其使用的常规语法方式为:import collections XxNamedT

如何在Go中进行图像处理?May 11, 2023 pm 04:45 PM

如何在Go中进行图像处理?May 11, 2023 pm 04:45 PM作为一门高效的编程语言,Go在图像处理领域也有着不错的表现。虽然Go本身的标准库中没有提供专门的图像处理相关的API,但是有一些优秀的第三方库可以供我们使用,比如GoCV、ImageMagick和GraphicsMagick等。本文将重点介绍使用GoCV进行图像处理的方法。GoCV是一个高度依赖于OpenCV的Go语言绑定库,其

PHP8.0中的邮件库May 14, 2023 am 08:49 AM

PHP8.0中的邮件库May 14, 2023 am 08:49 AM最近,PHP8.0发布了一个新的邮件库,使得在PHP中发送和接收电子邮件变得更加容易。这个库具有强大的功能,包括构建电子邮件,发送电子邮件,解析电子邮件,获取附件和解决电子邮件获得卡住的问题。在很多项目中,我们都需要使用电子邮件来进行通信和一些必备的业务操作。而PHP8.0中的邮件库可以让我们轻松地实现这一点。接下来,我们将探索这个新的邮件库,并了解如何在我

PHP8.0中的DOMDocumentMay 14, 2023 am 08:18 AM

PHP8.0中的DOMDocumentMay 14, 2023 am 08:18 AM随着PHP8.0的发布,DOMDocument作为PHP内置的XML解析库,也有了新的变化和增强。DOMDocument在PHP中的重要性不言而喻,尤其在处理XML文档方面,它的功能十分强大,而且使用起来也十分简单。本文将介绍PHP8.0中DOMDocument的新特性和应用。一、DOMDocument概述DOM(DocumentObjectModel)

学Python,还不知道main函数吗Apr 12, 2023 pm 02:58 PM

学Python,还不知道main函数吗Apr 12, 2023 pm 02:58 PMPython 中的 main 函数充当程序的执行点,在 Python 编程中定义 main 函数是启动程序执行的必要条件,不过它仅在程序直接运行时才执行,而在作为模块导入时不会执行。要了解有关 Python main 函数的更多信息,我们将从如下几点逐步学习:什么是 Python 函数Python 中 main 函数的功能是什么一个基本的 Python main() 是怎样的Python 执行模式Let’s get started什么是 Python 函数相信很多小伙伴对函数都不陌生了,函数是可

PHP8.0中的Symbol类型May 14, 2023 am 08:39 AM

PHP8.0中的Symbol类型May 14, 2023 am 08:39 AMPHP8.0是PHP语言的最新版本,自发布以来已经引发了广泛的关注和争议。其中,最引人瞩目的新特性之一就是Symbol类型。Symbol类型是PHP8.0中新增的一种数据类型,它类似于JavaScript中的Symbol类型,可用于表示独一无二的值。这意味着,两个Symbol类型的值即使完全相同,它们也是不相等的。Symbol类型的使用可以避免在不同的代码段

为拯救童年回忆,开发者决定采用古法编程:用Flash高清重制了一款游戏Apr 11, 2023 pm 10:16 PM

为拯救童年回忆,开发者决定采用古法编程:用Flash高清重制了一款游戏Apr 11, 2023 pm 10:16 PM两年多前,Adobe 发布了一则引人关注的公告 —— 将在 2020 年 12 月 31 日终止支持 Flash,宣告了一个时代的结束。一晃两年过去了,Adobe 早已从官方网站中删除了 Flash Player 早期版本的所有存档,并阻止基于 Flash 的内容运行。微软也已经终止对 Adobe Flash Player 的支持,并禁止其在任何 Microsoft 浏览器上运行。Adobe Flash Player 组件于 2021 年 7 月通过 Windows 更新永久删除。当 Flash

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Dreamweaver Mac version

Visual web development tools

Notepad++7.3.1

Easy-to-use and free code editor

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft