
Programming techniques: ways to write cache code (2)

- 伊谢尔伦 (original)

- 2016-11-30 09:22:07

The previous article mainly covered the various code patterns for reading from and writing to a cache. This article picks up where that one left off and looks at the different ways we have used caches over the years.

One: Cache warm-up

A reader asked about this last time: when the application loads for the first time, the cache is empty, so how do we warm it up?

On a stand-alone web server we generally use the in-process runtime cache (RunTimeCache). In that case there are two options:

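1: Refresh the cache in the application startup event:

```csharp
// Global.asax: runs once when the ASP.NET application starts
void Application_Start(object sender, EventArgs e)
{
    // Refresh (pre-load) the cache here
}
```

2: Write a dedicated cache-refresh page. You can call it manually after going live, call it automatically as part of each release, or simply let the first user request trigger it.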

With a distributed cache (Redis, memcached):

For example, when the cache is spread across dozens of servers, filling it up takes quite a while.

This kind of warm-up is more complicated. Some teams write a separate application to run it. Others build a dedicated mechanism into the framework to handle it (the smarter option).

Either way, the goal is the same: all caches are pre-loaded before the system goes live.
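A warm-up job along these lines could be sketched as follows, in Java (the article's own snippets are .NET). This is a minimal sketch under stated assumptions. `CacheWarmer`, the hot-key list, and the `loader` function are hypothetical. A thread-safe `Map` stands in for a real Redis or memcached client. The keys are loaded in parallel because filling a large cache one key at a time takes a while.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Hypothetical warm-up helper: pre-loads a known list of hot keys into the
// cache before the application starts taking traffic.
class CacheWarmer {
    private final Map<String, String> cache;       // stand-in for a Redis/memcached client; must be thread-safe
    private final Function<String, String> loader; // stand-in for the DB query

    CacheWarmer(Map<String, String> cache, Function<String, String> loader) {
        this.cache = cache;
        this.loader = loader;
    }

    // Loads all keys on a small thread pool. Returns true if every load task
    // finished within the timeout. A load that throws is not reported here:
    // the executor keeps the exception inside the discarded Future.
    boolean warmUp(List<String> hotKeys, int threads, long timeoutSeconds) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (String key : hotKeys) {
            pool.submit(() -> { cache.put(key, loader.apply(key)); });
        }
        pool.shutdown(); // accept no new tasks; already-submitted loads keep running
        try {
            return pool.awaitTermination(timeoutSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

You could call `warmUp` from the deployment script or from a startup hook, before traffic is switched over. A real warmer would also inspect the returned futures and retry or alert on failed loads.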

Two: Multi-level cache

2.1 Introduction

Between the CPU and main memory there are usually a first-level (L1) and a second-level (L2) cache that speed up data exchange.

When the CPU reads data, it can then often skip main memory and read directly from the CPU cache, which is much faster.

A multi-level cache modeled on the CPU cache has these characteristics:

1: Each level of cache holds part of the data in the next level.

2: Moving down the levels, read speed decreases, cost per unit of storage decreases, and capacity increases.

3: When the current level misses, the lookup goes on to the next level.

In enterprise application development, a multi-level cache has the same purpose and design, but its granularity is coarser and it is more flexible.
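The three rules above can be sketched as a chain of maps in Java. Each level holds part of the next, slower levels are bigger, and a miss falls through to the next level. This is a sketch under stated assumptions. `MultiLevelCache` and the `source` function are hypothetical names. Plain `HashMap`s stand in for real tiers such as a thread cache, local memory, and Redis. There is no eviction and no thread safety.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Minimal multi-level cache sketch. Levels are ordered fastest-first.
// A miss at one level falls through to the next; a hit is copied back into
// the faster levels that missed, so recently read data moves toward level 0.
// No eviction or capacity limits, and not thread-safe (plain HashMaps).
class MultiLevelCache {
    private final List<Map<String, String>> levels = new ArrayList<>();
    private final Function<String, String> source; // hypothetical slowest tier, e.g. the DB

    MultiLevelCache(int levelCount, Function<String, String> source) {
        for (int i = 0; i < levelCount; i++) {
            levels.add(new HashMap<>());
        }
        this.source = source;
    }

    String get(String key) {
        for (int i = 0; i < levels.size(); i++) {
            String value = levels.get(i).get(key);
            if (value != null) {
                fill(key, value, i); // copy into the faster levels above
                return value;
            }
        }
        String value = source.apply(key); // missed every level
        if (value != null) {
            fill(key, value, levels.size());
        }
        return value;
    }

    // Writes the value into levels 0 .. upTo-1.
    private void fill(String key, String value, int upTo) {
        for (int i = 0; i < upTo; i++) {
            levels.get(i).put(key, value);
        }
    }

    // Exposes one level so callers (and tests) can inspect or clear it.
    Map<String, String> level(int i) {
        return levels.get(i);
    }
}
```

Copying a hit back into the faster levels is what keeps hot data near the top. A real implementation would also bound each level's size and choose an eviction policy, so that capacity really does grow level by level.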

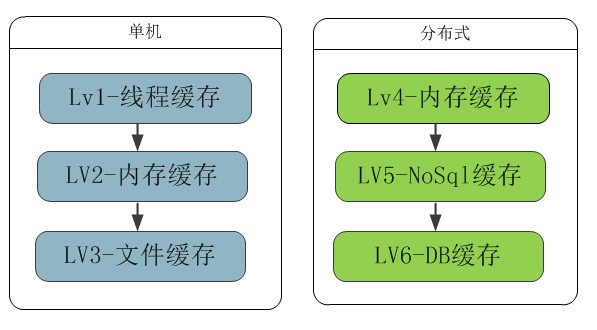

Cache type diagram of lv1-lv6 arranged according to speed:

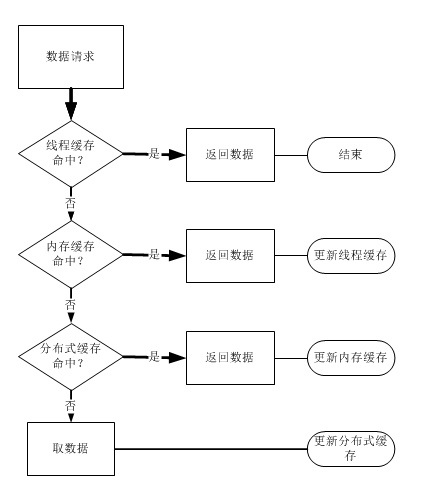

Example of hit flow chart for level 3 cache:

2.2 Thread cache

Web applications are inherently multi-threaded. For shared resources we must consider thread safety, so we have to use locks to guarantee the integrity and correctness of the data.

In practice, a web server handles hundreds or thousands of requests at a time. Imagine a complex business process in which every function call has to take a lock.

That is a big waste of server resources. With a thread cache, the thread handling the current user's request takes only the data it needs and keeps its own copy.

```csharp
// Each thread gets its own UserScore slot (System.Threading.ThreadLocal<T>)
public static ThreadLocal<UserScore> localUserInfo = new ThreadLocal<UserScore>();
```

Using the thread-local variables provided by .NET, we can load the current user's data once at the request entry point.

For the rest of the request, our business logic can use this data freely without considering thread safety.

Because we do not fetch the data again mid-request, we also do not have to worry about data tearing (reading an inconsistent mix of old and new values).

The data stays complete and consistent for the whole request. Fresh data is only loaded when the user sends the next request.

This can improve server throughput considerably. Be sure to clear the data when the request finishes. Web servers reuse threads from a pool, so a value left in a thread-local variable would leak into the next request served by that thread.
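The thread-cache pattern is: load once at the entry, read freely, and clear on exit. In Java it looks like the sketch below; Java's `ThreadLocal` plays the same role as the .NET class above. This is a sketch, not the author's code. `RequestContext`, `handle`, and the `load` function are hypothetical, and the `UserScore` fields are invented for the example.

```java
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical per-user data, standing in for the article's UserScore type.
class UserScore {
    final String userId;
    final int score;

    UserScore(String userId, int score) {
        this.userId = userId;
        this.score = score;
    }
}

class RequestContext {
    // Each thread sees only the value it set itself, so no locking is needed.
    private static final ThreadLocal<UserScore> localUserInfo = new ThreadLocal<>();

    // Business code anywhere in the request reads the snapshot from here.
    static UserScore current() {
        return localUserInfo.get();
    }

    // Request entry point: load the user's data once, run the handler, and
    // always clear the slot so a pooled thread does not carry this user's
    // data into the next request it serves.
    static <T> T handle(String userId, Function<String, UserScore> load, Supplier<T> handler) {
        localUserInfo.set(load.apply(userId));
        try {
            return handler.get();
        } finally {
            localUserInfo.remove();
        }
    }
}
```

The `finally` block is the important part. Clearing the slot even when the handler throws keeps one user's data from surfacing in another user's request on a reused thread.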

2.3 Memory cache

Whether we read from a remote database or from a cache server, the call inevitably crosses process boundaries, the network, and sometimes data centers.

Frequent reads and writes from the application are costly for both the web and DB servers, and far slower than reading local memory.

Adding locks, going asynchronous, or even adding servers is not a good answer on its own, because loading speed is critical to the user experience.

So in projects that need it, a second-level cache in local memory is well worth having. It serves two purposes: 1) protecting the backend against concurrent requests, and 2) speeding up reads.

There is a well-known five-minute rule: data that is accessed frequently (roughly once every five minutes or more often) should be kept in memory.

For example, suppose there are 100 concurrent requests for the same entry. A lock makes 99 front-end threads wait while one thread loads the data, and those 99 waiting threads still consume web server resources. Without the lock, all 100 requests hit the backend at once, which is a cache avalanche.

If instead we pull the data from the cache server once a minute and keep it in local memory, the 99 threads are served from memory and their waiting time drops sharply.
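In Java, one way to get the "pull once a minute, serve the rest from memory" behavior is a small TTL cache built on `ConcurrentHashMap.compute`, which runs its remapping function atomically for a key. This is a sketch under stated assumptions. `LocalTtlCache`, the `loader` (standing in for the Redis or DB call), and the injectable `clock` are hypothetical. A TTL of `60_000` ms would match the one-minute example.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Minimal local (in-process) cache with a fixed time-to-live.
// When an entry expires, compute() lets one thread reload that key while
// other threads asking for the same key wait and then reuse its result,
// instead of all of them hitting the backend at once.
class LocalTtlCache {
    private static final class Entry {
        final String value;
        final long expiresAt;

        Entry(String value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final ConcurrentHashMap<String, Entry> map = new ConcurrentHashMap<>();
    private final long ttlMillis;
    private final Function<String, String> loader; // hypothetical call to Redis/DB
    private final LongSupplier clock;               // injectable time source, in ms

    LocalTtlCache(long ttlMillis, Function<String, String> loader, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.loader = loader;
        this.clock = clock;
    }

    String get(String key) {
        Entry e = map.get(key);
        if (e != null && e.expiresAt > clock.getAsLong()) {
            return e.value; // fast path: fresh hit, no locking
        }
        return map.compute(key, (k, old) -> {
            // Re-check: another thread may have reloaded while we waited.
            long now = clock.getAsLong();
            if (old != null && old.expiresAt > now) {
                return old;
            }
            return new Entry(loader.apply(k), now + ttlMillis);
        }).value;
    }
}
```

While the loader runs, `compute` may block other updates to the map, so the loader should be quick. For slow loads, a dedicated caching library or a per-key future is the safer choice.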

2.4 File Cache

Compared with memory, a hard disk offers much larger capacity, and reading from it is usually still faster than going over the network.

So data that rarely changes, and that would waste memory if kept there, can be cached on the local disk.

For example, a file-based database such as SQLite makes this easy.
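Using SQLite from Java requires a third-party JDBC driver. To stay self-contained, this sketch swaps in plain files via `java.nio.file`: one file per key, treated as expired once it is older than `maxAge`. `FileCache` and the `loader` function are hypothetical names, and the sketch assumes a single writer per key.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import java.time.Instant;
import java.util.function.Function;

// Minimal file-cache sketch: one file per key in a local directory.
// A file older than maxAge counts as expired and is reloaded from the source.
// Not safe for concurrent writers: a real version would write to a temp file
// and move it into place atomically.
class FileCache {
    private final Path dir;
    private final Duration maxAge;
    private final Function<String, String> loader; // hypothetical slow source

    FileCache(Path dir, Duration maxAge, Function<String, String> loader) {
        try {
            this.dir = Files.createDirectories(dir);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        this.maxAge = maxAge;
        this.loader = loader;
    }

    String get(String key) {
        Path file = dir.resolve(fileName(key));
        try {
            if (Files.exists(file)) {
                Instant modified = Files.getLastModifiedTime(file).toInstant();
                if (modified.plus(maxAge).isAfter(Instant.now())) {
                    return Files.readString(file, StandardCharsets.UTF_8); // fresh hit
                }
            }
            String value = loader.apply(key); // miss or expired: reload and rewrite
            Files.writeString(file, value, StandardCharsets.UTF_8);
            return value;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Hex-encodes the key so any reasonably short key is a safe file name.
    private static String fileName(String key) {
        StringBuilder sb = new StringBuilder();
        for (byte b : key.getBytes(StandardCharsets.UTF_8)) {
            sb.append(String.format("%02x", b));
        }
        return sb.append(".cache").toString();
    }
}
```

A second `FileCache` pointed at the same directory reads the data straight from disk. The cache therefore survives a process restart, which an in-memory cache does not.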

2.5 Distributed cache

Redis, memcached, etc.: memory-based caches.

Cassandra, MongoDB, etc.: file-based NoSQL stores.

Redis and memcached are the mainstream distributed in-memory caches, and they form the largest cache layer between the application and the DB.

NoSQL stores are not used only for caching. They also serve as the DB layer for some non-core businesses.

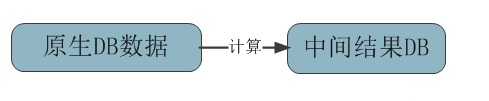

2.6 DB cache

This layer caches results computed from the raw data inside the DB itself (for example, in precomputed summary tables). The web application then does not have to calculate them with SQL, or in code, every time they are used.

Of course, the computed data can also be stored in Redis.
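The idea of serving precomputed results instead of recomputing them on every request can be sketched in Java, using an in-memory stand-in for a summary table. `Order`, `SalesSummary`, and the category totals are hypothetical. In a real system the refresh would be a scheduled job writing to a DB table or to Redis.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical raw row, e.g. one line of an orders table.
class Order {
    final String category;
    final long amountCents;

    Order(String category, long amountCents) {
        this.category = category;
        this.amountCents = amountCents;
    }
}

// Holds precomputed totals so readers never aggregate raw rows themselves.
class SalesSummary {
    private volatile Map<String, Long> totals = Collections.emptyMap();

    // Recomputes every total from the raw rows, then swaps the whole map in
    // at once, so readers see either the old summary or the new one.
    void refresh(List<Order> rawOrders) {
        Map<String, Long> fresh = new HashMap<>();
        for (Order o : rawOrders) {
            fresh.merge(o.category, o.amountCents, Long::sum);
        }
        totals = Collections.unmodifiableMap(fresh);
    }

    // Cheap lookup on the request path.
    long totalFor(String category) {
        return totals.getOrDefault(category, 0L);
    }
}
```

Swapping in a whole new map keeps readers from ever seeing a half-rebuilt summary. The cost is that the data is only as fresh as the last refresh.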

Three: Multi-layer cache

The term "multi-layer cache" is used in several senses:

1: The multi-level cache described above, which places content in different caches according to how often it is read. Storage is tiered, and the more frequently data is read, the closer it sits to the application.

2: A multi-layer cache index, similar to a B-tree search, which improves retrieval efficiency.

3: At the architecture level, client caching, CDN caching, reverse-proxy caching, and server-side caching together also form a multi-layer cache.

Four: Summary

In practice, you can combine these approaches in whatever way fits your actual scenario. This article is mostly theoretical, and many details are not covered.

Examples include how to use a distributed cache, cache replacement strategies and algorithms, and cache expiration mechanisms. I will cover these in later articles.