
Python Scrapy crawler: DEMO of synchronous and asynchronous paging

- 高洛峰 (original)

- 2016-11-22 14:03:26

Paged interfaces request data in one of two ways: synchronously or asynchronously. With synchronous paging the whole page is refreshed; with asynchronous paging only part of the page is refreshed. The two kinds of paginated data are handled differently when crawling. This demo is for learning purposes only, and all domain names are anonymized as test.

Synchronous paging

With synchronous paging, the whole page is refreshed and the URL in the address bar changes.

The data the crawler parses is HTML.

Test scenario: crawl Java job postings in the Beijing area from a recruitment website.

    # coding=utf-8
    import scrapy
    from scrapy.spidermiddlewares.httperror import HttpError
    from twisted.internet.error import DNSLookupError, TimeoutError, TCPTimedOutError


    class TestSpider(scrapy.Spider):
        name = 'test'
        download_delay = 3
        user_agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36'
        page_url = 'http://www.test.com/zhaopin/Java/{0}/?filterOption=2'
        page = 1

        # Entry point
        def start_requests(self):
            # First page
            yield scrapy.Request(self.page_url.format('1'),
                                 headers={'User-Agent': self.user_agent},
                                 callback=self.parse,
                                 errback=self.errback_httpbin)

        # Parse the returned data
        def parse(self, response):
            for li in response.xpath('//*[@id="s_position_list"]/ul/li'):
                yield {
                    'company': li.xpath('@data-company').extract(),
                    'salary': li.xpath('@data-salary').extract()
                }

            # Decide whether this is the last page from the CSS class of the "next page" button
            if response.css('a.page_no.pager_next_disabled'):
                print('---is the last page,stop!---')
            else:
                self.page = self.page + 1
                # Fetch the next page
                yield scrapy.Request(self.page_url.format(str(self.page)),
                                     headers={'User-Agent': self.user_agent},
                                     callback=self.parse,
                                     errback=self.errback_httpbin)

        # Error handling
        def errback_httpbin(self, failure):
            if failure.check(HttpError):
                response = failure.value.response
                print('HttpError on {0}'.format(response.url))
            elif failure.check(DNSLookupError):
                request = failure.request
                print('DNSLookupError on {0}'.format(request.url))
            elif failure.check(TimeoutError, TCPTimedOutError):
                request = failure.request
                print('TimeoutError on {0}'.format(request.url))

Start the crawler:

    scrapy runspider //spiders//test_spider.py -o test.csv

After it completes, a CSV file is generated.
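The extraction and the last-page check in parse can be tried offline. The following is a minimal sketch, not the spider itself: it uses the standard library's ElementTree in place of Scrapy's selectors, and the sample markup, company names, and salaries are hypothetical stand-ins rather than real site data. Unlike Scrapy's extract(), which returns lists, this sketch returns plain strings.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for one listing page (not real site markup).
SAMPLE_PAGE = """
<html><body>
  <div id="s_position_list">
    <ul>
      <li data-company="Acme" data-salary="10k-15k"></li>
      <li data-company="Globex" data-salary="15k-20k"></li>
    </ul>
  </div>
  <a class="page_no pager_next_disabled">Next</a>
</body></html>
""".strip()


def extract_jobs(root):
    """Collect the data-* attributes of each <li>, mirroring the spider's XPath."""
    items = root.findall(".//*[@id='s_position_list']/ul/li")
    return [
        {"company": li.get("data-company"), "salary": li.get("data-salary")}
        for li in items
    ]


def is_last_page(root):
    """Return True when a link carries both the pager and the disabled class."""
    for a in root.iter("a"):
        classes = (a.get("class") or "").split()
        if "page_no" in classes and "pager_next_disabled" in classes:
            return True
    return False


root = ET.fromstring(SAMPLE_PAGE)
jobs = extract_jobs(root)
last = is_last_page(root)
print(jobs)
print(last)
```

ElementTree only accepts well-formed markup and a limited XPath subset, so real pages are better handled by Scrapy's own selectors; the sketch only illustrates the control flow.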

Asynchronous paging

With asynchronous paging, only part of the page is refreshed and the URL in the address bar does not change.

The data the crawler parses is usually JSON.

Test scenario: crawl the top 100 classic movies from a movie website.

#coding=utf-8import scrapyimport jsonclass TestSpider(scrapy.Spider):

name ='test'

download_delay = 3

user_agent = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36'

pre_url = 'https://movie.douban.com/j/search_subjects?type=movie&tag=%E7%BB%8F%E5%85%B8&sort=recommend&page_limit=20&page_start='

page=0

cnt=0

def start_requests(self):

url= self.pre_url+str(0*20) yield scrapy.Request(url,headers={'User-Agent':self.user_agent},callback=self.parse) def parse(self,response):

if response.body: # json字符串转换成Python对象

python_obj=json.loads(response.body)

subjects=python_obj['subjects'] if len(subjects)>0: for sub in subjects:

self.cnt=self.cnt+1

yield { 'title':sub["title"], 'rate':sub["rate"]

} if self.cnt<100: print 'next page-------'

self.page=self.page+1

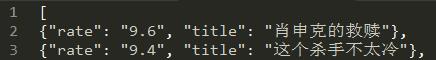

url= self.pre_url+str(self.page*20) yield scrapy.Request(url,headers={'User-Agent':self.user_agent},callback=self.parse)Start the crawler: scrapy runspider //spiders//test_spider.py -o test After the .json is completed, a json format file is generated:
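The JSON handling and the offset arithmetic behind page_start can be checked without sending any requests. This is a minimal sketch under stated assumptions: the sample body and movie titles are hypothetical, only the subjects, title, and rate keys are taken from the spider, and the helper names parse_subjects and page_starts are introduced here for illustration.

```python
import json

PAGE_LIMIT = 20   # matches page_limit=20 in the spider's URL
MAX_ITEMS = 100   # the demo stops once 100 movies have been collected

# Hypothetical response body; only the key names come from the spider.
SAMPLE_BODY = (
    '{"subjects": ['
    '{"title": "Movie A", "rate": "9.1"}, '
    '{"title": "Movie B", "rate": "8.7"}]}'
)


def parse_subjects(body):
    """Turn a JSON response body into a list of {'title', 'rate'} dicts."""
    data = json.loads(body)
    return [
        {"title": s["title"], "rate": s["rate"]}
        for s in data.get("subjects", [])
    ]


def page_starts(max_items=MAX_ITEMS, page_limit=PAGE_LIMIT):
    """List the page_start offsets needed to cover max_items results."""
    return list(range(0, max_items, page_limit))


items = parse_subjects(SAMPLE_BODY)
offsets = page_starts()
print(items)
print(offsets)
```

With 20 results per page, five requests at offsets 0, 20, 40, 60, and 80 cover the 100 items, which is what the spider's page counter produces as long as every page comes back full.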

The difference between Scrapy and BeautifulSoup or lxml

Scrapy is a complete framework for writing crawlers and extracting data, whereas BeautifulSoup and lxml are only libraries for parsing HTML/XML. Their role is comparable to that of Scrapy's XPath and CSS selectors. They can also be used inside Scrapy, although this tends to be less efficient (BeautifulSoup in particular is comparatively slow). When using Scrapy's selectors, the browser's developer tools (F12) can be used to copy the XPath or CSS selector of any node directly.