
Python crawler practice crawling V2EX website posts

- 高洛峰 (original)

- 2016-11-07 16:43:35

Background:

PySpider: a powerful web crawler system with a powerful WebUI, written by a Chinese developer. It is written in Python, has a distributed architecture, and supports multiple database backends. The WebUI provides a script editor, task monitor, project manager, and result viewer. Online example: http://demo.pyspider.org/

Official documentation: http://docs.pyspider.org/en/l...

Github: https://github.com/binux/pysp. ..

The Github address of the crawler code of this article: https://github.com/zhisheng17...


With that out of the way, let's get to the main text!

Prerequisite:

You have already installed pyspider and MySQL-python (used to save the data).

If you haven't installed them yet, please take a look at my previous articles, which should save you some detours:

- Some pitfalls I went through when learning the Pyspider framework

- HTTP 599: SSL certificate problem: unable to get local issuer certificate error

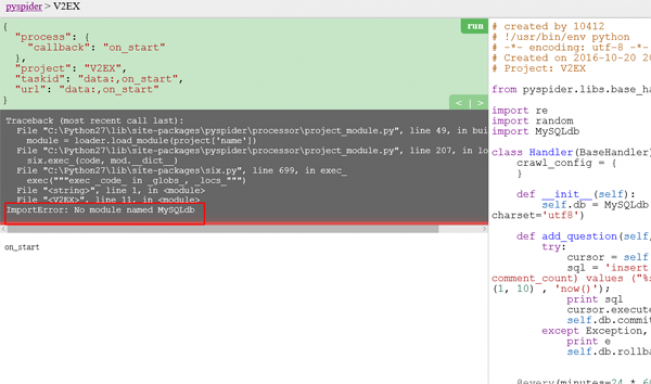

Some errors I encountered:

First, the goal of this crawler: use the pyspider framework to crawl the questions and their content from posts on the V2EX website, and then save the crawled data locally.

Most posts on V2EX can be viewed without logging in, but some require a login. (I kept hitting errors while crawling, and when I looked into the cause I found that those posts can only be viewed after logging in.) I don't think cookies are necessary for this crawler. If you do need to log in, a simple approach is to attach the cookies from your logged-in session to the requests.

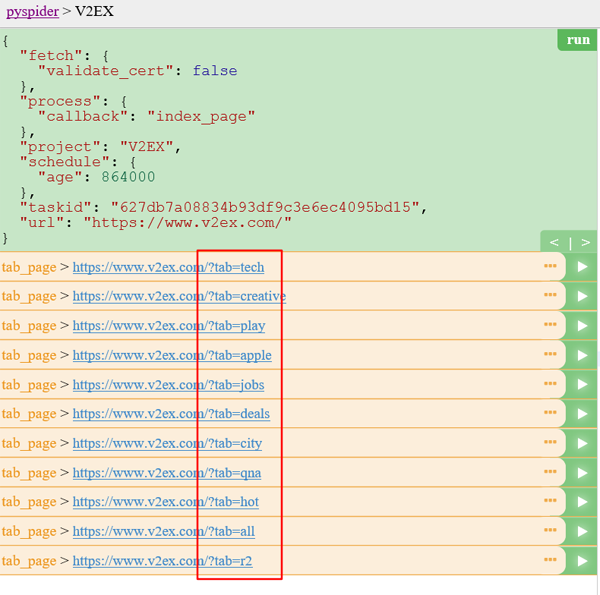
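If you do want to crawl posts that require a login, one possible approach is to copy the Cookie header from your browser after logging in and turn it into a dictionary. The sketch below is only an illustration: the helper name `parse_cookie_header` and the cookie names and values are made up, and it uses only the Python 3 standard library. According to the pyspider documentation, self.crawl accepts a cookies dictionary, so the result could be passed along that way.

```python
from http.cookies import SimpleCookie


def parse_cookie_header(raw_header):
    """Turn a raw 'Cookie' header string into a plain dict."""
    jar = SimpleCookie()
    jar.load(raw_header)
    return {name: morsel.value for name, morsel in jar.items()}


# Hypothetical cookie names and values, for illustration only.
cookies = parse_cookie_header("session_id=abc123; lang=en")
print(cookies)

# Inside a pyspider handler this dict could then be passed as, for example:
#   self.crawl(url, callback=self.detail_page, cookies=cookies, validate_cert=False)
```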

Browsing https://www.v2ex.com/, we found that no single list contains all the posts. The next best thing is to traverse the site by crawling every node list page under each tab. Start from a tab page such as https://www.v2ex.com/?tab=tech, then follow the node pages such as https://www.v2ex.com/go/progr..., and finally reach the detail page of each post, for example https://www.v2ex.com/t/314683...

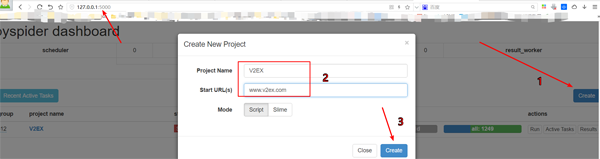
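The three levels described above (tab page, node page under /go/, post page under /t/) can be told apart from the URL alone. The following is a minimal sketch using only the Python 3 standard library; the function name `classify` and the sample node name and post id are assumptions made for illustration, not part of the crawler itself.

```python
from urllib.parse import urlparse, parse_qs


def classify(url):
    """Return 'tab', 'node', 'topic', or None for a V2EX URL."""
    parts = urlparse(url)
    if parts.netloc != "www.v2ex.com":
        return None
    if parts.path in ("", "/") and "tab" in parse_qs(parts.query):
        return "tab"
    if parts.path.startswith("/go/"):
        return "node"
    if parts.path.startswith("/t/"):
        return "topic"
    return None


# The node name and post id below are hypothetical examples.
for sample in (
    "https://www.v2ex.com/?tab=tech",
    "https://www.v2ex.com/go/example",
    "https://www.v2ex.com/t/123456",
):
    print(sample, "->", classify(sample))
```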

Create a project

In the lower right corner of the pyspider dashboard, click the "Create" button

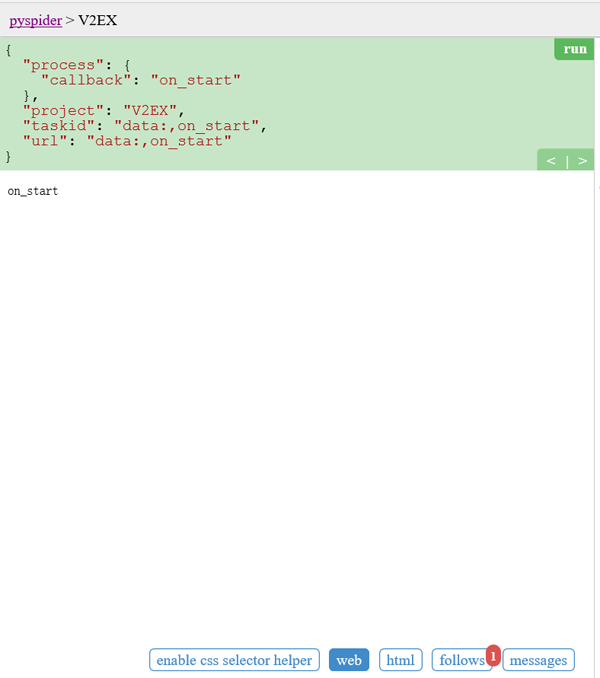

Replace the URL passed to self.crawl in the on_start function:

    @every(minutes=24 * 60)
    def on_start(self):
        self.crawl('https://www.v2ex.com/', callback=self.index_page, validate_cert=False)

self.crawl tells pyspider to fetch the specified page and then parse the result with the callback function.

The @every decorator means that on_start is executed once a day, so that the latest posts are picked up.

validate_cert=False is required here; otherwise the request fails with HTTP 599: SSL certificate problem: unable to get local issuer certificate.

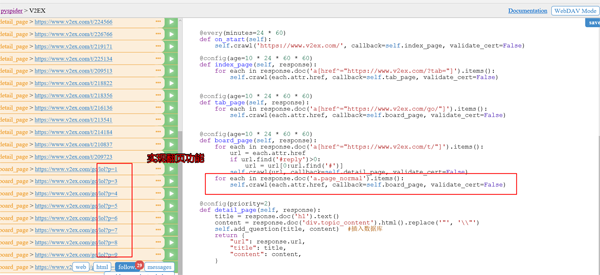

Home page:

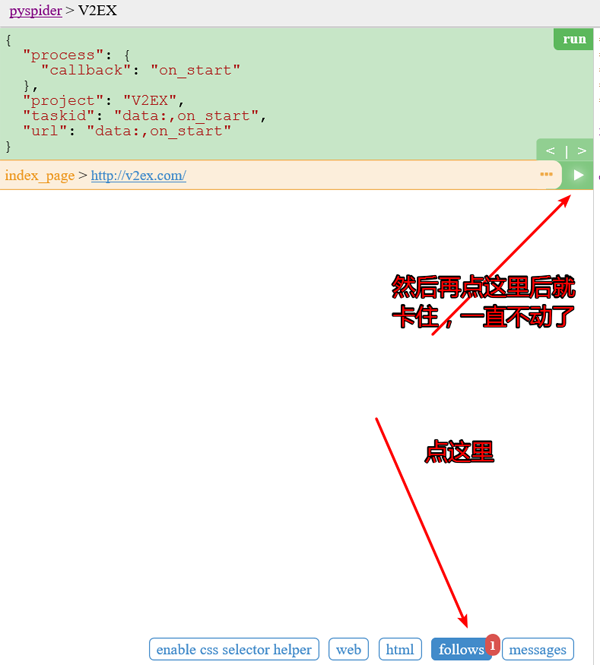

Click the green run button to execute it. A red 1 appears on the follows tab; switch to the follows panel and click the green play button:

The problem shown in the second screenshot appeared at the beginning. For the solution, see the earlier article mentioned above; once it is fixed, the problem no longer occurs.

Tab list page:

On the tab list page, we need to extract the URLs of all the topic list pages. You may have noticed that the sample handler extracts a very large number of URLs.

Code:

    @config(age=10 * 24 * 60 * 60)
    def index_page(self, response):
        for each in response.doc('a[href^="https://www.v2ex.com/?tab="]').items():
            self.crawl(each.attr.href, callback=self.tab_page, validate_cert=False)

Since the post list page and the tab list page look different, a new callback, self.tab_page, is created here.

@config(age=10 * 24 * 60 * 60) means that we consider the page valid for 10 days, so it will not be crawled again within that period.

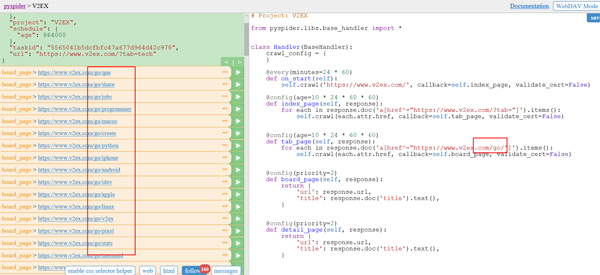
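To make the age value concrete: it is expressed in seconds, so 10 * 24 * 60 * 60 is ten days. The sketch below is a simplified model of that freshness check, not pyspider's scheduler code; the function name `needs_recrawl` and the timestamps are assumptions for illustration.

```python
AGE = 10 * 24 * 60 * 60  # ten days, expressed in seconds


def needs_recrawl(last_crawled, now, age=AGE):
    """A page is fetched again only once its last crawl is older than `age`."""
    return (now - last_crawled) > age


print(AGE)  # 864000
print(needs_recrawl(last_crawled=0, now=9 * 24 * 60 * 60))   # crawled 9 days ago
print(needs_recrawl(last_crawled=0, now=11 * 24 * 60 * 60))  # crawled 11 days ago
```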

Go list page:

Code:

    @config(age=10 * 24 * 60 * 60)
    def tab_page(self, response):
        for each in response.doc('a[href^="https://www.v2ex.com/go/"]').items():
            self.crawl(each.attr.href, callback=self.board_page, validate_cert=False)

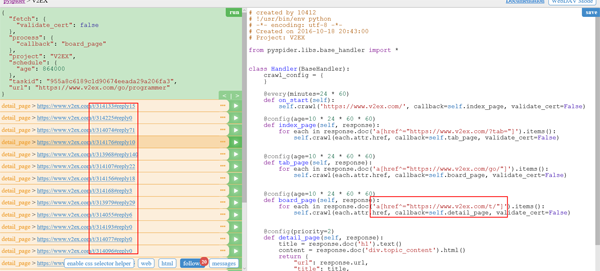
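The selector a[href^="..."] used in these callbacks matches links whose href starts with the given prefix. As a rough illustration of the same idea without pyspider, here is a sketch built on the Python 3 standard library; the class and function names and the sample HTML are made up for this example.

```python
from html.parser import HTMLParser


class PrefixLinkParser(HTMLParser):
    """Collect href values of <a> tags that start with a given prefix."""

    def __init__(self, prefix):
        super().__init__()
        self.prefix = prefix
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and href.startswith(self.prefix):
            self.links.append(href)


def links_with_prefix(html, prefix):
    parser = PrefixLinkParser(prefix)
    parser.feed(html)
    return parser.links


# Hypothetical page fragment; the node name and post id are invented.
sample_html = (
    '<a href="https://www.v2ex.com/go/example">node</a>'
    '<a href="https://www.v2ex.com/t/123456">post</a>'
)
print(links_with_prefix(sample_html, "https://www.v2ex.com/go/"))
```

Note that this sketch compares the raw href text, so it only finds absolute links like the ones in the sample.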

Post details page (T):

You can see that the results contain some reply links (URLs with a #reply anchor). We don't need these, so we can remove them.

At the same time, we also want the crawler to turn pages automatically.

Code:

    @config(age=10 * 24 * 60 * 60)
    def board_page(self, response):
        for each in response.doc('a[href^="https://www.v2ex.com/t/"]').items():
            url = each.attr.href
            # Strip the '#reply...' anchor so each post is crawled under one URL
            if url.find('#reply') > 0:
                url = url[0:url.find('#')]
            self.crawl(url, callback=self.detail_page, validate_cert=False)
        # Turn pages automatically
        for each in response.doc('a.page_normal').items():
            self.crawl(each.attr.href, callback=self.board_page, validate_cert=False)

Screenshot after removing the reply links:

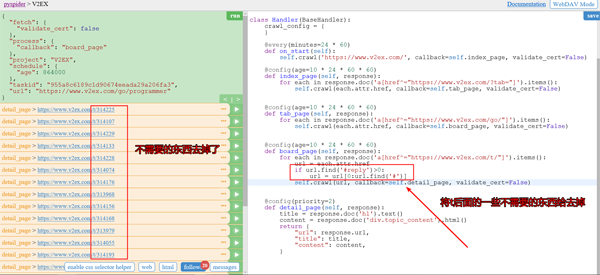
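Removing the #reply anchor can also be done with the standard library instead of find and slicing. The sketch below assumes Python 3 and a hypothetical helper name `unique_topic_urls`; it strips any fragment and keeps each post URL once, in the order first seen.

```python
from urllib.parse import urldefrag


def unique_topic_urls(hrefs):
    """Drop '#...' fragments and keep each topic URL once, in order."""
    seen = set()
    result = []
    for href in hrefs:
        url, _fragment = urldefrag(href)
        if url not in seen:
            seen.add(url)
            result.append(url)
    return result


# Hypothetical post ids, for illustration only.
print(unique_topic_urls([
    "https://www.v2ex.com/t/123456#reply5",
    "https://www.v2ex.com/t/123456",
    "https://www.v2ex.com/t/654321#reply1",
]))
```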

Screenshot after realizing automatic page turning:

此时我们已经可以匹配了所有的帖子的 url 了。

点击每个帖子后面的按钮就可以查看帖子具体详情了。

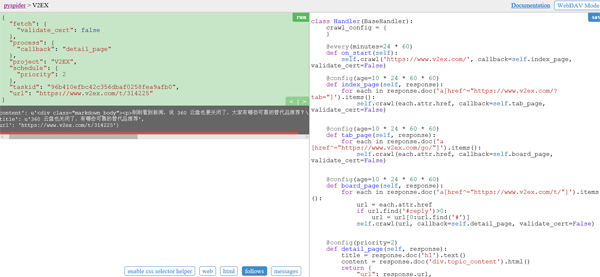

代码:

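    @config(priority=2)
    def detail_page(self, response):
        title = response.doc('h1').text()
        # Escape double quotes, because add_question places the text inside a quoted SQL string
        content = response.doc('p.topic_content').html().replace('"', '\\"')
        self.add_question(title, content)  # insert into the database
        return {
            "url": response.url,
            "title": title,
            "content": content,
        }

To insert into the database, we first need to define an add_question function: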

#连接数据库

def __init__(self):

self.db = MySQLdb.connect('localhost', 'root', 'root', 'wenda', charset='utf8')

def add_question(self, title, content):

try:

cursor = self.db.cursor()

sql = 'insert into question(title, content, user_id, created_date, comment_count)

values ("%s","%s",%d, %s, 0)' %

(title, content, random.randint(1, 10) , 'now()');

#插入数据库的SQL语句

print sql

cursor.execute(sql)

print cursor.lastrowid

self.db.commit()

except Exception, e:

print e

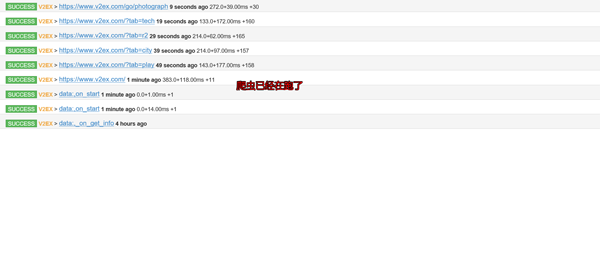

self.db.rollback()查看爬虫运行结果:

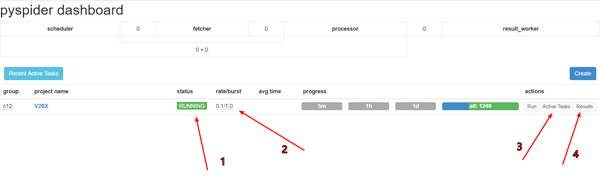

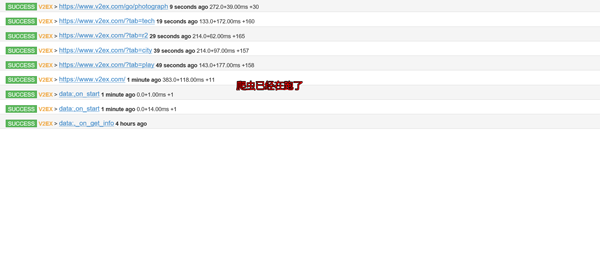

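Debug first, then switch the status to running. A bug in the pyspider framework on Windows

Set the crawl rate. It is best not to crawl too fast; otherwise the site can easily tell that you are a crawler and will ban your IP.

Check the running tasks

View the crawled content

Then query the local database with a GUI client, and you can see that the data has been saved locally.

You can export it if you need to use it yourself.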
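On the advice about crawl speed: the pyspider dashboard lets you set a rate and burst for each project. The sketch below shows the general token-bucket idea behind that kind of limit; it is a simplified illustration under my own assumptions, not pyspider's implementation, and the class name and parameters are hypothetical. Time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Allow about `rate` requests per second, with bursts of up to `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate      # tokens added per second
        self.burst = burst    # maximum number of stored tokens
        self.tokens = burst   # start with a full bucket
        self.last = 0.0       # time of the previous check, in seconds

    def allow(self, now):
        """Return True if a request may be sent at time `now` (seconds)."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(rate=1, burst=2)
# Three requests at t=0 and one more at t=1 second.
print([bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)])
```

With a low rate and a small burst, the crawler sends only a few requests at once and then settles at a steady pace.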

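At the beginning I gave the link to the crawler code. If you look through that project in detail, you will also find the crawled data I uploaded. (For learning purposes only; do not use it commercially!)

You will also see other crawler code there. If you think it is good, you can give it a star, or if you are interested, fork the project and learn along with me. The project will be updated over the long term.

Finally:

Code: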

    #!/usr/bin/env python
    # -*- encoding: utf-8 -*-
    # created by 10412
    # Created on 2016-10-20 20:43:00
    # Project: V2EX

    from pyspider.libs.base_handler import *
    import re
    import random
    import MySQLdb


    class Handler(BaseHandler):
        crawl_config = {
        }

        def __init__(self):
            # Connect to the local MySQL database
            self.db = MySQLdb.connect('localhost', 'root', 'root', 'wenda', charset='utf8')

        def add_question(self, title, content):
            try:
                cursor = self.db.cursor()
                # SQL statement that inserts the post into the question table
                sql = ('insert into question(title, content, user_id, created_date, comment_count) '
                       'values ("%s", "%s", %d, %s, 0)'
                       % (title, content, random.randint(1, 10), 'now()'))
                print(sql)
                cursor.execute(sql)
                print(cursor.lastrowid)
                self.db.commit()
            except Exception as e:
                print(e)
                self.db.rollback()

        @every(minutes=24 * 60)
        def on_start(self):
            self.crawl('https://www.v2ex.com/', callback=self.index_page, validate_cert=False)

        @config(age=10 * 24 * 60 * 60)
        def index_page(self, response):
            for each in response.doc('a[href^="https://www.v2ex.com/?tab="]').items():
                self.crawl(each.attr.href, callback=self.tab_page, validate_cert=False)

        @config(age=10 * 24 * 60 * 60)
        def tab_page(self, response):
            for each in response.doc('a[href^="https://www.v2ex.com/go/"]').items():
                self.crawl(each.attr.href, callback=self.board_page, validate_cert=False)

        @config(age=10 * 24 * 60 * 60)
        def board_page(self, response):
            for each in response.doc('a[href^="https://www.v2ex.com/t/"]').items():
                url = each.attr.href
                # Strip the '#reply...' anchor so each post is crawled under one URL
                if url.find('#reply') > 0:
                    url = url[0:url.find('#')]
                self.crawl(url, callback=self.detail_page, validate_cert=False)
            # Turn pages automatically
            for each in response.doc('a.page_normal').items():
                self.crawl(each.attr.href, callback=self.board_page, validate_cert=False)

        @config(priority=2)
        def detail_page(self, response):
            title = response.doc('h1').text()
            # Escape double quotes, because add_question places the text inside a quoted SQL string
            content = response.doc('p.topic_content').html().replace('"', '\\"')
            self.add_question(title, content)  # insert into the database
            return {
                "url": response.url,
                "title": title,
                "content": content,
            }
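The add_question method above builds its SQL by string formatting and escapes double quotes by hand, which can break on unusual input and leaves room for SQL injection. A common alternative is to let the database driver fill in the values through placeholders. The sketch below is an illustration under stated assumptions, not the article's code: it uses the standard-library sqlite3 module with an in-memory database so it runs anywhere, and the table layout is guessed from the column names in the insert statement. With MySQLdb the placeholder would be %s instead of ?, and now() could stay in the SQL text.

```python
import random
import sqlite3


def add_question(db, title, content):
    """Insert one post using placeholders instead of string formatting."""
    cursor = db.cursor()
    cursor.execute(
        "INSERT INTO question (title, content, user_id, created_date, comment_count) "
        "VALUES (?, ?, ?, CURRENT_TIMESTAMP, 0)",
        (title, content, random.randint(1, 10)),
    )
    db.commit()
    return cursor.lastrowid


# Assumed table layout, inferred from the column names only.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE question (id INTEGER PRIMARY KEY, title TEXT, content TEXT, "
    "user_id INTEGER, created_date TEXT, comment_count INTEGER)"
)

# Quotes in the text need no manual escaping here.
row_id = add_question(db, 'He said "hi"', "<p>it's fine</p>")
print(db.execute("SELECT title, content FROM question WHERE id = ?", (row_id,)).fetchone())
```

If you switch to placeholders, the manual quote escaping in detail_page is no longer needed and should be dropped so that no extra backslashes end up in the stored content.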