Code for using ADODB.Stream to determine file encoding in JScript

At first, I read the file as text with the ASCII charset to simulate reading binary data. However, I found that whenever a byte value is greater than 127, the character I get back has a code below 128 (the byte value modulo 128), so the ASCII charset cannot be used for this.

I continued searching and found an article, "Reading And Writing Binary Files Using JScript", on CodeProject.com, which contained exactly what I needed.

The fix is simple: change the charset to 437. This is the extended ASCII encoding from IBM (code page 437). It also uses the highest bit of each byte, which expands the character set from 128 to 256 characters, so character data read with this charset is equivalent to the original binary data.
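The difference between the two charsets can be sketched in plain JavaScript, without ADODB.Stream. The helper names (`asciiView`, `CP437_TO_BYTE`, `byteFromCp437Char`) are hypothetical, the modulo in `asciiView` only models the behaviour described above, and the table holds just four of the 256 code page 437 entries.

```javascript
// Models the observation above: with an ASCII charset the high bit is lost,
// so two different bytes can end up with the same character code.
function asciiView(byteValue) {
    return byteValue % 128;
}

// Partial code page 437 table (assumption: only four of 256 entries shown).
// Key: Unicode code point of the decoded character. Value: original byte.
var CP437_TO_BYTE = {};
CP437_TO_BYTE[0x2229] = 0xEF; // intersection sign
CP437_TO_BYTE[0x2557] = 0xBB; // box-drawing corner
CP437_TO_BYTE[0x25A0] = 0xFE; // black square
CP437_TO_BYTE[0x00A0] = 0xFF; // no-break space

// Recover the original byte from a character read with Charset = "437".
// Returns -1 for characters that are not in this partial table.
function byteFromCp437Char(ch) {
    var code = ch.charCodeAt(0);
    if (code < 128) {
        return code; // the ASCII range maps to itself
    }
    return CP437_TO_BYTE.hasOwnProperty(code) ? CP437_TO_BYTE[code] : -1;
}
```

With the ASCII model, `asciiView(0xEF)` and `asciiView(0x6F)` give the same result, so the original byte cannot be recovered. With code page 437, every byte decodes to a distinct character, so a lookup like `byteFromCp437Char` can map it back.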

With that obstacle out of the way, it is time to identify the encoding of the file. Use the ADODB.Stream object to read the first two bytes of the file; from these two bytes you can determine what the file's encoding is.

If a UTF-8 file has a BOM, its first two bytes are 0xEF and 0xBB. Likewise, the first two bytes of a Unicode (UTF-16 little endian) file are 0xFF and 0xFE, and those of a Unicode big endian file are 0xFE and 0xFF. These bytes are the basis for judging the file encoding.
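The rule above can be sketched as a small function that works directly on byte values. The name `encodingFromBom` is hypothetical, and returning "GBK" when no BOM is recognised is an assumption taken from the fallback this article mentions for undetermined files.

```javascript
// Classify a file by its first two bytes.
// b0 and b1 are numbers in the range 0..255.
function encodingFromBom(b0, b1) {
    if (b0 === 0xEF && b1 === 0xBB) {
        return "UTF-8";
    }
    if (b0 === 0xFF && b1 === 0xFE) {
        return "Unicode"; // little endian
    }
    if (b0 === 0xFE && b1 === 0xFF) {
        return "Unicode"; // big endian
    }
    return "GBK"; // assumption: fallback when no BOM is found
}
```

Two bytes are enough to tell these three BOMs apart, but a file without a BOM always falls through to the fallback, whatever its real encoding is.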

Note that the character ADODB.Stream returns does not have the same code as the byte it was read from. For example, if the byte is 0xEF, calling charCodeAt on the character read does not return 0xEF but another value (0x2229 under code page 437). The full correspondence table can be found in the article mentioned above.
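As an illustration of that mapping, the sketch below builds the two-character strings that code page 437 produces for each BOM and passes them through `escape`, the same built-in the article's code uses. The variable names are hypothetical.

```javascript
// Under code page 437: 0xEF -> U+2229, 0xBB -> U+2557,
//                      0xFF -> U+00A0, 0xFE -> U+25A0.
var utf8Key = escape(String.fromCharCode(0x2229, 0x2557));
var unicodeKey = escape(String.fromCharCode(0x00A0, 0x25A0));
var unicodeBigEndianKey = escape(String.fromCharCode(0x25A0, 0x00A0));

// utf8Key             is "%u2229%u2557"
// unicodeKey          is "%A0%u25A0"
// unicodeBigEndianKey is "%u25A0%A0"
```

`escape` writes character codes below 256 as `%XX` and larger ones as `%uXXXX`, which is why the no-break space appears as `%A0` while the other characters use the `%u` form.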

Program code:

function CheckEncoding(filename) {
    var encoding;
    var stream = new ActiveXObject("ADODB.Stream");
    stream.Mode = 3;        // adModeReadWrite
    stream.Type = 2;        // adTypeText
    stream.Open();
    stream.Charset = "437"; // code page 437 keeps every byte distinguishable
    stream.LoadFromFile(filename);
    // Read the first two characters (one per byte) and escape them for comparison.
    var bom = escape(stream.ReadText(2));
    switch (bom) {
        // 0xEF,0xBB => UTF-8
        case "%u2229%u2557":
            encoding = "UTF-8";
            break;
        // 0xFF,0xFE => Unicode
        case "%A0%u25A0":
        // 0xFE,0xFF => Unicode big endian
        case "%u25A0%A0":
            encoding = "Unicode";
            break;
        // Can't tell: just use GBK, so that Chinese can be processed correctly in most cases.
        default:
            encoding = "GBK";
            break;
    }
    stream.Close();
    stream = null;
    return encoding;
}

In this way, when needed, the encoding of the file can be obtained by calling the CheckEncoding function.

I hope this article is helpful to you.

Python vs. JavaScript: The Learning Curve and Ease of UseApr 16, 2025 am 12:12 AM

Python vs. JavaScript: The Learning Curve and Ease of UseApr 16, 2025 am 12:12 AMPython is more suitable for beginners, with a smooth learning curve and concise syntax; JavaScript is suitable for front-end development, with a steep learning curve and flexible syntax. 1. Python syntax is intuitive and suitable for data science and back-end development. 2. JavaScript is flexible and widely used in front-end and server-side programming.

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

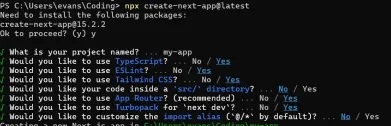

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.