(This does not mean that jQuery performs well. On the contrary, it is simply a relatively closed library that is hard to optimize from the outside.) This article records a failed optimization attempt.

Optimization ideas

The idea for this optimization came from databases. When optimizing a database, people often say that "committing a large number of operations together in one transaction can effectively improve efficiency." I don't know much about databases, so I can't explain why, but the idea of a "transaction" gave me a direction (albeit the wrong one...).

So I tried to introduce the concept of a "transaction" into jQuery, optimizing it from the outside by "beginning" and "committing" transactions. The main goal was to reduce the number of loops each function performs.

As we all know, jQuery's DOM operations follow a "get first, set all" principle: getters read only the first selected element, while the operations that set DOM attributes or styles almost all traverse every selected element. The jQuery.access function is at the core of this, and its looping code is as follows:

// Setting one attribute
if ( value !== undefined ) {
    // Optionally, function values get executed if exec is true
    exec = !pass && exec && jQuery.isFunction(value);

    // length is elems.length, assigned at the top of jQuery.access
    for ( var i = 0; i < length; i++ ) {
        fn(
            elems[i],
            key,
            exec ? value.call(elems[i], i, fn(elems[i], key)) : value,
            pass
        );
    }

    return elems;
}
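To make the looping cost concrete, here is a stripped-down sketch of the same "get first, set all" pattern in plain JavaScript. The names accessSketch and styleFn, and the use of plain objects instead of DOM nodes, are hypothetical; it also omits jQuery's exec and pass flags. The point is only that every setter call walks the whole collection once, while a getter reads the first element.

```javascript
// Hypothetical, simplified stand-in for the "get first, set all" pattern of
// jQuery.access. Elements are plain objects here, not DOM nodes.
function accessSketch(elems, key, value, fn) {
  // Setting: visit every element once, resolving function values per element
  if (value !== undefined) {
    for (let i = 0; i < elems.length; i++) {
      const v = typeof value === "function"
        ? value.call(elems[i], i, fn(elems[i], key))
        : value;
      fn(elems[i], key, v);
    }
    return elems;
  }
  // Getting: only the first element is read
  return elems.length ? fn(elems[0], key) : undefined;
}

// Accessor used for both reads and writes, like the callback passed by css()
function styleFn(elem, name, value) {
  if (value === undefined) return elem.style[name];
  elem.style[name] = value;
}

const elems = [{ style: {} }, { style: {} }, { style: {} }];
accessSketch(elems, "height", 300, styleFn);
accessSketch(elems, "width", (i) => 100 * (i + 1), styleFn);
console.log(accessSketch(elems, "width", undefined, styleFn)); // 100
```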

For example, jQuery.fn.css looks like this:

jQuery.fn.css = function( name, value ) {
    // Setting 'undefined' is a no-op
    if ( arguments.length === 2 && value === undefined ) {
        return this;
    }

    return jQuery.access( this, name, value, true, function( elem, name, value ) {
        return value !== undefined ?
            jQuery.style( elem, name, value ) :
            jQuery.css( elem, name );
    });
};

Therefore, if 5,000 div elements are selected, the following code loops over 10,000 nodes:

jQuery('div').css('height', 300).css('width', 200);

My idea was that within a "transaction", as with database operations, all operations would be saved and then performed together when the transaction is committed. That would reduce 10,000 node visits to 5,000, roughly doubling performance.
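The expected saving can be modeled with a simple visit counter. This sketch is hypothetical (plain objects instead of DOM nodes, made-up function names) and counts only iterations over the element list:

```javascript
// Hypothetical model: count how many element visits each strategy makes.
// Each "operation" is a [key, value] pair applied to a plain-object element.
function applyChained(elems, ops) {
  let visits = 0;
  for (const [key, value] of ops) {   // one full loop per operation
    for (const el of elems) {
      el[key] = value;
      visits++;
    }
  }
  return visits;
}

function applyBatched(elems, ops) {
  let visits = 0;
  for (const el of elems) {           // a single loop over the elements
    visits++;
    for (const [key, value] of ops) {
      el[key] = value;
    }
  }
  return visits;
}

const nodes = Array.from({ length: 5000 }, () => ({}));
const ops = [["height", 300], ["width", 200]];
console.log(applyChained(nodes, ops)); // 10000
console.log(applyBatched(nodes, ops)); // 5000
```

Both strategies still perform the same 10,000 property writes; batching only removes the second pass over the element list, which is the saving the transaction idea was counting on.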

Simple implementation

The "transactional" jQuery operations provide two functions:

begin: opens a "transaction" and returns a transaction object. This object has all of jQuery's functions, but calling them does not take effect immediately; the calls only take effect after the transaction is committed.

commit: commits the "transaction", making all previously called functions take effect, and returns the original jQuery object.

The implementation is also straightforward:

Create a "transaction object" and copy all functions on jQuery.fn to the object.

When a function is called, add its name and arguments to a prepared "queue".

When the transaction is committed, a traversal is performed on the selected elements, and all functions in the "queue" are applied to each node in the traversal.
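To show the record-then-replay mechanism on its own, here is a framework-free sketch in modern JavaScript. Collection, Transaction and set are hypothetical stand-ins for a jQuery object, the transaction proxy and a setter such as .css(); unlike the jQuery version, which copies every function on jQuery.fn, only one method is proxied here.

```javascript
// Hypothetical, framework-free sketch of begin/commit: method calls on the
// transaction are recorded, then replayed per element in a single pass.
class Collection {
  constructor(items) { this.items = items; }
  set(key, value) {
    for (const item of this.items) item[key] = value;
    return this;
  }
  begin() { return new Transaction(this); }
}

class Transaction {
  constructor(core) {
    this.core = core;
    this.queue = [];                  // recorded [methodName, args] pairs
  }
  set(...args) {
    this.queue.push(["set", args]);   // record instead of executing
    return this;
  }
  commit() {
    for (const item of this.core.items) {
      const single = new Collection([item]);  // one-element wrapper, like jQuery(this)
      for (const [name, args] of this.queue) single[name](...args);
    }
    return this.core;
  }
}

const coll = new Collection([{}, {}]);
const result = coll.begin().set("height", 300).set("width", 200).commit();
console.log(result === coll, coll.items[1]); // true { height: 300, width: 200 }
```

Note that commit wraps each element in a new one-element collection, just as the jQuery version calls jQuery(this); that per-element wrapping is part of the extra overhead the transaction approach pays.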

In short, the code is as follows:

var slice = Array.prototype.slice;

jQuery.fn.begin = function() {
    var proxy = {
            _core: this,   // the original jQuery object
            _queue: []     // recorded [functionName, arguments] pairs
        },
        key,
        func;

    // Copy the functions on jQuery.fn
    for (key in jQuery.fn) {
        func = jQuery.fn[key];
        if (typeof func == 'function') {
            // Without a closure, every proxy function would see the last value
            // of key from the for loop, so an IIFE captures the current key
            (function(key) {
                proxy[key] = function() {
                    // Put the function call into the queue instead of running it
                    this._queue.push([key, slice.call(arguments, 0)]);
                    return this;
                };
            })(key);
        }
    }

    // Keep commit itself from being intercepted
    proxy.commit = jQuery.fn.commit;

    return proxy;
};

jQuery.fn.commit = function() {
    var core = this._core,
        queue = this._queue;

    // Only one each loop
    core.each(function() {
        var i = 0,
            item,
            jq = jQuery(this);

        // Replay all queued calls on this single element
        for (; item = queue[i]; i++) {
            jq[item[0]].apply(jq, item[1]);
        }
    });

    return this._core;
};

Test environment

The test uses the following conditions:

5,000 divs placed inside a container element (id="container").

Use $('#container>div') to select these 5000 divs.

Each div is given a random background color (the randomColor function) and a random width below 800px (the randomWidth function).

Four calling methods were tested:

Normal usage:

$('#container>div')

.css('background-color', randomColor)

.css('width', randomWidth);

Single loop method:

$('#container>div').each(function() {

$(this).css('background-color', randomColor).css('width', randomWidth);

});

Transaction method:

$('#container>div')

.begin()

.css('background-color', randomColor)

.css('width', randomWidth)

.commit();

Object assignment method:

$('#container>div').css({

'background-color': randomColor,

'width' : randomWidth

});

Chrome 8 was chosen as the test browser (IE simply hung during the test).
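The article does not show randomColor, randomWidth or the timing code. One plausible version is sketched below, assuming the two functions are passed to .css() as value callbacks (jQuery calls them per element and uses the return value). The function bodies and the timeIt helper are hypothetical.

```javascript
// Hypothetical helpers for the benchmark; the original implementations are not shown.
// Returns a random hex color such as "#3fa2c0".
function randomColor() {
  const n = Math.floor(Math.random() * 0x1000000);  // 0 .. 0xFFFFFF
  return "#" + n.toString(16).padStart(6, "0");
}

// Returns a random pixel width strictly below 800px.
function randomWidth() {
  return Math.floor(Math.random() * 800) + "px";
}

// Runs fn once and returns the elapsed wall-clock time in milliseconds.
function timeIt(label, fn) {
  const start = Date.now();
  fn();
  const elapsed = Date.now() - start;
  console.log(label + ": " + elapsed + "ms");
  return elapsed;
}

// Usage in the browser (requires jQuery and the #container markup):
// timeIt("normal usage", () => {
//   $('#container>div').css('background-color', randomColor).css('width', randomWidth);
// });
timeIt("random values", () => { randomColor(); randomWidth(); });
```

Date.now() is coarse but adequate at the multi-second scale measured here; performance.now() would give finer resolution.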

Sad result

My original prediction was that the single loop method would be much more efficient than normal usage; that the transaction method, although slower than the single loop method, would still be faster than normal usage; and that the object assignment method, being effectively a single loop supported internally by jQuery, would be the most efficient.

Unfortunately, the results are as follows:

Method               Time
Normal usage         18435 ms
Single loop          18233 ms
Transaction          18918 ms
Object assignment    17748 ms

As the results show, the transaction method turned out to be the slowest. Meanwhile, the single loop method has no clear advantage over normal usage, and even the object assignment method, implemented inside jQuery, does not open up a large gap.

Since 5,000 elements is already a very large loop and even that cannot widen the performance gap, the most common case of operating on about 10 elements is even less likely to show an advantage, and the disadvantage may even grow.

The reason is that the single loop method itself brings no obvious improvement, and the transaction method is built externally on top of a single loop. On top of that loop it adds extra overhead: creating the transaction object, saving the function queue, traversing the function queue, and so on. It is only natural that it loses to normal usage.

At this point, the optimization that imitates "transactions" can be declared a failure. The result is still worth analyzing further, though.

Where does the time go?

First, compare normal usage with the fastest approach in the test, object assignment. The only difference between them is the number of elements looped over (I am setting aside jQuery's internal problems here; the poor implementation of jQuery.access does hold back the object assignment method, but fortunately not seriously): normal usage visits 10,000 elements, object assignment 5,000. So, simply put, 18435 - 17748 = 687ms can be taken as the time needed to loop over 5,000 elements, which is under 4% of the total execution time. That is not where the bulk of the time goes, and it really does not need optimizing.
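A quick check of that arithmetic, using the measured timings above. Treating the whole difference as loop cost is the article's own simplifying assumption, not something the code can verify:

```javascript
// Measured timings from the test (ms).
const timings = {
  normal: 18435,
  singleLoop: 18233,
  transaction: 18918,
  objectAssignment: 17748,
};

// 10,000 vs 5,000 element visits: the difference is attributed to the extra loop.
const loopCost = timings.normal - timings.objectAssignment;
const share = loopCost / timings.normal;

console.log(loopCost);                        // 687
console.log((share * 100).toFixed(1) + "%");  // 3.7%
```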

So where does the remaining 96% or so of the cost go? Remember what Douglas said: "JavaScript is not actually slow; what is slow is DOM manipulation." Of that remaining cost, excluding basics such as function calls, at least 95% of the time is spent re-rendering after the DOM elements' styles change.

With this fact in hand, we have a better optimization direction, which is also one of the basic principles of front-end performance: when modifying a large number of child elements, first remove their root parent node from the DOM tree. Testing again with the following code:

// Cache the container: once detached, $('#container') can no longer find it
var container = $('#container').detach();

container.find('div')
    .css('background-color', randomColor)
    .css('width', randomWidth);

container.appendTo(document.body);

The result consistently stays at about 900ms, a full order of magnitude faster than the earlier figures. This time the optimization truly succeeded.
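The same principle can also be applied without jQuery. As a sketch, the hypothetical helper withDetached below removes a node, runs an update on it while it is out of the document, and then reinserts it at its original position using nextSibling, rather than appending it to document.body as the jQuery version does. It relies only on the standard DOM methods removeChild and insertBefore.

```javascript
// Hypothetical helper: detach `node` from its parent, run `update` on it while
// it is out of the document, then put it back exactly where it was.
function withDetached(node, update) {
  const parent = node.parentNode;
  const next = node.nextSibling;  // null if node was the last child
  if (parent) parent.removeChild(node);
  try {
    update(node);
  } finally {
    // insertBefore with a null reference appends at the end, which is correct
    // when the node was originally the last child
    if (parent) parent.insertBefore(node, next);
  }
  return node;
}

// Usage in a browser, assuming randomColor() and randomWidth() return CSS strings:
// withDetached(document.getElementById('container'), (container) => {
//   for (const div of container.children) {
//     div.style.backgroundColor = randomColor();
//     div.style.width = randomWidth();
//   }
// });
```

Restoring the original position matters when the container is not the last child of body, and the try/finally puts the node back even if the update throws.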

Lessons and Summary

Be sure to find the real performance bottleneck before optimizing. Blind guessing only leads down a wrong and extreme path.

Let the data speak; nobody should argue in the face of data!

I still don't think the "transaction" direction is wrong in itself. If jQuery supported "transactions" natively, could other optimizations become possible? For example, a transaction could automatically remove the parent element from the DOM tree...

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AM

Python vs. JavaScript: Community, Libraries, and ResourcesApr 15, 2025 am 12:16 AMPython and JavaScript have their own advantages and disadvantages in terms of community, libraries and resources. 1) The Python community is friendly and suitable for beginners, but the front-end development resources are not as rich as JavaScript. 2) Python is powerful in data science and machine learning libraries, while JavaScript is better in front-end development libraries and frameworks. 3) Both have rich learning resources, but Python is suitable for starting with official documents, while JavaScript is better with MDNWebDocs. The choice should be based on project needs and personal interests.

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AM

From C/C to JavaScript: How It All WorksApr 14, 2025 am 12:05 AMThe shift from C/C to JavaScript requires adapting to dynamic typing, garbage collection and asynchronous programming. 1) C/C is a statically typed language that requires manual memory management, while JavaScript is dynamically typed and garbage collection is automatically processed. 2) C/C needs to be compiled into machine code, while JavaScript is an interpreted language. 3) JavaScript introduces concepts such as closures, prototype chains and Promise, which enhances flexibility and asynchronous programming capabilities.

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AM

JavaScript Engines: Comparing ImplementationsApr 13, 2025 am 12:05 AMDifferent JavaScript engines have different effects when parsing and executing JavaScript code, because the implementation principles and optimization strategies of each engine differ. 1. Lexical analysis: convert source code into lexical unit. 2. Grammar analysis: Generate an abstract syntax tree. 3. Optimization and compilation: Generate machine code through the JIT compiler. 4. Execute: Run the machine code. V8 engine optimizes through instant compilation and hidden class, SpiderMonkey uses a type inference system, resulting in different performance performance on the same code.

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AM

Beyond the Browser: JavaScript in the Real WorldApr 12, 2025 am 12:06 AMJavaScript's applications in the real world include server-side programming, mobile application development and Internet of Things control: 1. Server-side programming is realized through Node.js, suitable for high concurrent request processing. 2. Mobile application development is carried out through ReactNative and supports cross-platform deployment. 3. Used for IoT device control through Johnny-Five library, suitable for hardware interaction.

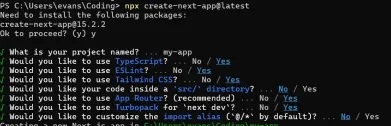

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Chinese version

Chinese version, very easy to use

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver Mac version

Visual web development tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool