Introduction

Large Language Models (LLMs) contributed to the progress of Natural Language Processing (NLP), but they also raised some important questions about computational efficiency. These models have become too large, so the training and inference cost is no longer within reasonable limits.

To address this, the Chinchilla Scaling Law, introduced by Hoffmann et al. in 2022, provides a groundbreaking framework for optimizing the training of LLMs. The Chinchilla Scaling Law offers an essential guide to efficiently scaling LLMs without compromising performance by establishing relationships between model size, training data, and computational resources. We will discuss it in detail in this article.

Overview

- The Chinchilla Scaling Law optimizes LLM training by balancing model size and data volume for enhanced efficiency.

- New scaling insights suggest that smaller language models like Chinchilla can outperform larger ones when trained on more data.

- Chinchilla’s approach challenges traditional LLM scaling by prioritizing data quantity over model size for compute efficiency.

- The Chinchilla Scaling Law offers a new roadmap for NLP, guiding the development of high-performing, resource-efficient models.

- The Chinchilla Scaling Law maximizes language model performance with minimal compute costs by doubling the model size and training data.

Table of contents

- What is Chinchilla Scaling Law?

- A Shift in Focus: From Model Size to Data

- Overview of the Chinchilla Scaling Law

- Key Findings of the Chinchilla Scaling Law

- Compute-Optimal Training

- Empirical Evidence from Over 400 Models

- Revised Estimates and Continuous Improvement

- Benefits of the Chinchilla Approach

- Improved Performance

- Lower Computational Costs

- Implications for Future Research and Model Development

- Challenges and Considerations

- Frequently Asked Questions

What is Chinchilla Scaling Law?

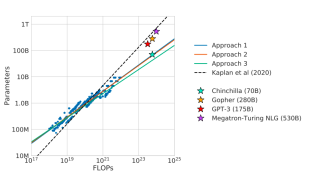

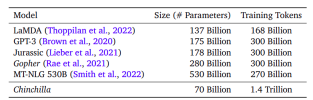

The paper “Training Compute-Optimal Large Language Models,” published in 2022, focuses on identifying the relationship between three key factors: model size, number of tokens, and compute budget. The authors found that existing large language models (LLMs) like GPT-3 (175B parameters), Gopher (280B), and Megatron (530B) are significantly undertrained. While these models increased in size, the amount of training data remained largely constant, leading to suboptimal performance. The authors propose that model size and the number of training tokens must be scaled equally for compute-optimal training. To prove this, they trained around 400 models, ranging from 70 million to over 16 billion parameters, using between 5 and 500 billion tokens.

Based on these findings, the authors trained a new model called Chinchilla, which uses the same compute budget as Gopher (280B) but with only 70B parameters and four times more training data. Chinchilla outperformed several well-known LLMs, including Gopher (280B), GPT-3 (175B), Jurassic-1 (178B), and Megatron (530B). This result contradicts the scaling laws proposed by OpenAI in “Scaling Laws for LLMs,” which suggested that larger models would always perform better. The Chinchilla Scaling Laws demonstrate that smaller models when trained on more data, can achieve superior performance. This approach also makes smaller models easier to fine-tune and reduces inference latency.

The graph shows that, despite being smaller, Chinchilla (70B) follows a different compute-to-parameter ratio and outperforms larger models like Gopher and GPT-3.

The other approaches (1, 2, and 3) explore different ways to optimize model performance based on compute allocation.

From this figure we can see Chinchilla’s Advantage even though Chinchilla is smaller in size (70B parameters), it was trained on a much larger dataset (1.4 trillion tokens), which follows the principle introduced in the Chinchilla Scaling Laws—smaller models can outperform larger ones if they are trained on more data.Other models like Gopher, GPT-3, and MT-NLG 530B have significantly more parameters but were trained on relatively fewer tokens, suggesting that these models may not have fully optimized their compute potential.

A Shift in Focus: From Model Size to Data

Historically, the focus in enhancing LLM performance has been on increasing model size, as seen in models like GPT-3 and Gopher. This was driven by the research of Kaplan et al. (2020), which proposed a power-law relationship between model size and performance. However, as models grew larger, the amount of training data did not scale accordingly, resulting in underutilized compute potential. The Chinchilla Scaling Laws challenge this by showing that a more balanced allocation of resources, particularly in terms of data and model size, can lead to compute-optimal models that perform better without reaching their lowest possible loss.

Overview of the Chinchilla Scaling Law

The trade-off between model size, training tokens, and computational cost is at the core of the Chinchilla Scaling Law. The law establishes a compute-optimal balance between these three parameters:

- Model Size (N):The number of parameters in the model.

- Training Tokens (D):The total number of tokens used during training.

- Computational Cost (C): The total compute resources allocated for training, usually measured in FLOPs (floating point operations per second).

The Chinchilla Scaling Law suggests that for optimal performance, both model size and the amount of training data should scale at equal rates. Specifically, the number of training tokens should also double for every doubling of model size. This approach contrasts earlier methods, which emphasized increasing model size without sufficiently increasing the training data.

This relationship is mathematically expressed as:

Where:

- L is the model’s final loss.

- L_0 is the irreducible loss, representing the best possible performance.

- A and B are constants that capture the model’s underperformance compared to an ideal generative process.

- α and β are exponents that describe how loss scales with respect to model size and data size, respectively.

Key Findings of the Chinchilla Scaling Law

Here are the key findings of the Chinchilla scaling law:

Compute-Optimal Training

The Chinchilla Scaling Law highlights an optimal balance between model size and the amount of training data. Specifically, the study found that an approximate ratio of 20 training tokens per model parameter is ideal for achieving the best performance with a given compute budget. For example, the Chinchilla model, with 70 billion parameters, was trained on 1.4 trillion tokens—four times more than Gopher but with far fewer parameters. This balance resulted in a model significantly outperforming larger models on several benchmarks.

Empirical Evidence from Over 400 Models

To derive the Chinchilla Scaling Laws, Hoffmann et al. trained over 400 transformer models, ranging in size from 70 million to 16 billion parameters, on datasets of up to 500 billion tokens. The empirical evidence strongly supported the hypothesis that models trained with more data (at a fixed compute budget) perform better than simply increasing model size alone.

Revised Estimates and Continuous Improvement

Subsequent research has sought to refine Hoffmann et al.’s initial findings, identifying possible adjustments in the parameter estimates. Some studies have suggested minor inconsistencies in the original results and have proposed revised estimates to fit the observed data better. These adjustments indicate that further research is needed to understand the dynamics of model scaling fully, but the core insights of the Chinchilla Scaling Law remain a valuable guideline.

Benefits of the Chinchilla Approach

Here are the benefits of the Chinchilla approach:

Improved Performance

Chinchilla’s equal scaling of model size and training data yielded remarkable results. Despite being smaller than many other large models, Chinchilla outperformed GPT-3, Gopher, and even the massive Megatron-Turing NLG model (530 billion parameters) on various benchmarks. For instance, on the Massive Multitask Language Understanding (MMLU) benchmark, Chinchilla achieved an average accuracy of 67.5%, a significant improvement over Gopher’s 60%.

Lower Computational Costs

The Chinchilla approach optimizes performance and reduces computational and energy costs for training and inference. Training models like GPT-3 and Gopher require enormous computing resources, making their use in real-world applications prohibitively expensive. In contrast, Chinchilla’s smaller model size and more extensive training data result in lower compute requirements for fine-tuning and inference, making it more accessible for downstream applications.

Implications for Future Research and Model Development

The Chinchilla Scaling Laws offer valuable insights for the future of LLM development. Key implications include:

- Guiding Model Design:Understanding how to balance model size and training data allows researchers and developers to make more informed decisions when designing new models. By adhering to the principles outlined in the Chinchilla Scaling Law, developers can ensure that their models are both compute-efficient and high-performing.

- Guiding Model Design:Knowledge on optimizing the volume and so the training data informs the models’ research and design. Within this guideline scale, the development of their ideas will operate within broad definitions of high efficiency without excessive consumption of computer resources.

- Performance Optimization:The Chinchilla Scaling Law provides a roadmap for optimizing LLMs. By focusing on equal scaling, developers can avoid the pitfalls of under-training large models and ensure that models are optimized for training and inference tasks.

- Exploration Beyond Chinchilla:As research continues, new strategies are emerging to extend the ideas of the Chinchilla Scaling Law. For example, some researchers are investigating ways to achieve similar performance levels with fewer computational resources or to further enhance model performance in data-constrained environments. These explorations are likely to result in even more efficient training pipelines.

Challenges and Considerations

While the Chinchilla Scaling Law marks a significant step forward in understanding LLM scaling, it also raises new questions and challenges:

- Data Collection: As was the case for Chinchilla, training a model with 1.4 trillion tokens implies the availability of many high-quality datasets. However, such a scale of data collection and processing raises organizational problems for researchers and developers, as well as ethical problems, such as privacy and bias.

- Bias and Toxicity:However, proportional reduction of regular bias and toxicity of a model trained using the Chinchilla Scaling law is easier and more efficient than all these inefficiency issues. As LLMs grow in power and reach, ensuring fairness and mitigating harmful outputs will be crucial focus areas for future research.

Conclusion

The Chinchilla Scaling Law represents a pivotal advancement in our understanding of optimizing the training of large language models. By establishing clear relationships between model size, training data, and computational cost, the law provides a compute-optimal framework for efficiently scaling LLMs. The success of the Chinchilla model demonstrates the practical benefits of this approach, both in terms of performance and resource efficiency.

As research in this area continues, the principles of the Chinchilla Scaling Law will likely shape the future of LLM development, guiding the design of models that push the boundaries of what’s possible in natural language processing while maintaining sustainability and accessibility.

Also, if you are looking for a Generative AI course online, then explore: the GenAI Pinnacle Program!

Frequently Asked Questions

Q1. What is the Chinchilla scaling law?Ans. The Chinchilla scaling law is an empirical framework that describes the optimal relationship between the size of a language model (number of parameters), the amount of training data (tokens), and the computational resources required for training. It aims to minimize training compute while maximizing model performance.

Q2. What are the key parameters in the Chinchilla scaling law?Ans. The key parameters include:

1. N: Number of parameters in the model.

2. D: Number of training tokens.

3. C: Total computational cost in FLOPS.

4. L: Average loss achieved by the model on a test dataset.

5. A and B: Constants reflecting underperformance compared to an ideal generative process.

6. α and β: Exponents describing how loss scales concerning model and data size, respectively.

Ans. The law suggests that both model size and training tokens should scale at equal rates for optimal performance. Specifically, for every doubling of model size, the number of training tokens should also double, typically aiming for a ratio of around 20 tokens per parameter.

Q4. What are some criticisms or limitations of the Chinchilla scaling law?Ans. Recent studies have indicated potential issues with Hoffmann et al.’s original estimates, including inconsistencies in reported data and overly tight confidence intervals. Some researchers argue that the scaling law may be too simplistic and does not account for various practical considerations in model training.

Q5. How has the Chinchilla scaling law influenced recent language model development?Ans. The findings from the Chinchilla scaling law have informed several notable models’ design and training processes, including Google’s Gemini suite. It has also prompted discussions about “beyond Chinchilla” strategies, where researchers explore training models larger than optimal according to the original scaling laws.

The above is the detailed content of What is the Chinchilla Scaling Law?. For more information, please follow other related articles on the PHP Chinese website!

![Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]](https://img.php.cn/upload/article/001/242/473/174717025174979.jpg?x-oss-process=image/resize,p_40) Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]May 14, 2025 am 05:04 AM

Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]May 14, 2025 am 05:04 AMChatGPT is not accessible? This article provides a variety of practical solutions! Many users may encounter problems such as inaccessibility or slow response when using ChatGPT on a daily basis. This article will guide you to solve these problems step by step based on different situations. Causes of ChatGPT's inaccessibility and preliminary troubleshooting First, we need to determine whether the problem lies in the OpenAI server side, or the user's own network or device problems. Please follow the steps below to troubleshoot: Step 1: Check the official status of OpenAI Visit the OpenAI Status page (status.openai.com) to see if the ChatGPT service is running normally. If a red or yellow alarm is displayed, it means Open

Calculating The Risk Of ASI Starts With Human MindsMay 14, 2025 am 05:02 AM

Calculating The Risk Of ASI Starts With Human MindsMay 14, 2025 am 05:02 AMOn 10 May 2025, MIT physicist Max Tegmark told The Guardian that AI labs should emulate Oppenheimer’s Trinity-test calculus before releasing Artificial Super-Intelligence. “My assessment is that the 'Compton constant', the probability that a race to

An easy-to-understand explanation of how to write and compose lyrics and recommended tools in ChatGPTMay 14, 2025 am 05:01 AM

An easy-to-understand explanation of how to write and compose lyrics and recommended tools in ChatGPTMay 14, 2025 am 05:01 AMAI music creation technology is changing with each passing day. This article will use AI models such as ChatGPT as an example to explain in detail how to use AI to assist music creation, and explain it with actual cases. We will introduce how to create music through SunoAI, AI jukebox on Hugging Face, and Python's Music21 library. Through these technologies, everyone can easily create original music. However, it should be noted that the copyright issue of AI-generated content cannot be ignored, and you must be cautious when using it. Let’s explore the infinite possibilities of AI in the music field together! OpenAI's latest AI agent "OpenAI Deep Research" introduces: [ChatGPT]Ope

What is ChatGPT-4? A thorough explanation of what you can do, the pricing, and the differences from GPT-3.5!May 14, 2025 am 05:00 AM

What is ChatGPT-4? A thorough explanation of what you can do, the pricing, and the differences from GPT-3.5!May 14, 2025 am 05:00 AMThe emergence of ChatGPT-4 has greatly expanded the possibility of AI applications. Compared with GPT-3.5, ChatGPT-4 has significantly improved. It has powerful context comprehension capabilities and can also recognize and generate images. It is a universal AI assistant. It has shown great potential in many fields such as improving business efficiency and assisting creation. However, at the same time, we must also pay attention to the precautions in its use. This article will explain the characteristics of ChatGPT-4 in detail and introduce effective usage methods for different scenarios. The article contains skills to make full use of the latest AI technologies, please refer to it. OpenAI's latest AI agent, please click the link below for details of "OpenAI Deep Research"

Explaining how to use the ChatGPT app! Japanese support and voice conversation functionMay 14, 2025 am 04:59 AM

Explaining how to use the ChatGPT app! Japanese support and voice conversation functionMay 14, 2025 am 04:59 AMChatGPT App: Unleash your creativity with the AI assistant! Beginner's Guide The ChatGPT app is an innovative AI assistant that handles a wide range of tasks, including writing, translation, and question answering. It is a tool with endless possibilities that is useful for creative activities and information gathering. In this article, we will explain in an easy-to-understand way for beginners, from how to install the ChatGPT smartphone app, to the features unique to apps such as voice input functions and plugins, as well as the points to keep in mind when using the app. We'll also be taking a closer look at plugin restrictions and device-to-device configuration synchronization

How do I use the Chinese version of ChatGPT? Explanation of registration procedures and feesMay 14, 2025 am 04:56 AM

How do I use the Chinese version of ChatGPT? Explanation of registration procedures and feesMay 14, 2025 am 04:56 AMChatGPT Chinese version: Unlock new experience of Chinese AI dialogue ChatGPT is popular all over the world, did you know it also offers a Chinese version? This powerful AI tool not only supports daily conversations, but also handles professional content and is compatible with Simplified and Traditional Chinese. Whether it is a user in China or a friend who is learning Chinese, you can benefit from it. This article will introduce in detail how to use ChatGPT Chinese version, including account settings, Chinese prompt word input, filter use, and selection of different packages, and analyze potential risks and response strategies. In addition, we will also compare ChatGPT Chinese version with other Chinese AI tools to help you better understand its advantages and application scenarios. OpenAI's latest AI intelligence

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AM

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AMThese can be thought of as the next leap forward in the field of generative AI, which gave us ChatGPT and other large-language-model chatbots. Rather than simply answering questions or generating information, they can take action on our behalf, inter

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AM

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AMEfficient multiple account management techniques using ChatGPT | A thorough explanation of how to use business and private life! ChatGPT is used in a variety of situations, but some people may be worried about managing multiple accounts. This article will explain in detail how to create multiple accounts for ChatGPT, what to do when using it, and how to operate it safely and efficiently. We also cover important points such as the difference in business and private use, and complying with OpenAI's terms of use, and provide a guide to help you safely utilize multiple accounts. OpenAI

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Zend Studio 13.0.1

Powerful PHP integrated development environment

Notepad++7.3.1

Easy-to-use and free code editor