Introduction

Gender detection from facial images is one of the many fascinating applications of computer vision. In this project, we combine OpenCV for face detection and the Roboflow API for gender classification, building a tool that finds faces, crops them, and predicts their gender. We'll use Python in Google Colab to write and run the code. This guide gives an easy-to-follow walkthrough of the code, explaining each step so you can understand it and apply it to your own projects.

Learning Objectives

- Understand how to implement face detection using OpenCV’s Haar Cascade.

- Learn how to integrate Roboflow API for gender classification.

- Explore methods to process and manipulate images in Python.

- Visualize detection results using Matplotlib.

- Develop practical skills in combining AI and computer vision for real-world applications.

This article was published as a part of the Data Science Blogathon.

Table of contents

- How to Detect Gender Using OpenCV and Roboflow in Python?

- Step 1: Importing Libraries and Uploading Image

- Step 2: Loading Haar Cascade Model for Face Detection

- Step 3: Detecting Faces in the Image

- Step 4: Setting Up the Gender Detection API

- Step 5: Processing Each Detected Face

- Step 6: Displaying the Results

- Frequently Asked Questions

How to Detect Gender Using OpenCV and Roboflow in Python?

Let us learn how to implement OpenCV and Roboflow in Python for gender detection:

Step 1: Importing Libraries and Uploading Image

The first step is to import the necessary libraries. We use OpenCV for image processing, NumPy for handling arrays, and Matplotlib to visualize the results. We also upload an image containing the faces we want to analyze.

from google.colab import files

import cv2

import numpy as np

from matplotlib import pyplot as plt

from inference_sdk import InferenceHTTPClient

# Upload image

uploaded = files.upload()

# Load the image

for filename in uploaded.keys():

    img_path = filename

In Google Colab, the files.upload() function lets users upload files, such as images, from their local machine into the Colab environment. After uploading, the image is stored in a dictionary named uploaded, where the keys are the file names. A for loop then extracts the file path for further processing. OpenCV is used to detect faces and draw bounding boxes around them, while Matplotlib is used to visualize the results, including the annotated image and the cropped faces.
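Note that the loop above keeps whichever file name comes last in the dictionary. As a minimal sketch, assuming you may upload several files and want the first one that looks like an image, the selection could be made explicit. The helper name `pick_image_name` and the extension list are illustrative assumptions, and a plain dict stands in for the value returned by files.upload().

```python
from pathlib import Path

# Extensions treated as images here; this list is an assumption for illustration.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def pick_image_name(uploaded):
    """Return the first uploaded file name that has an image extension.

    `uploaded` maps file names to file contents (bytes), which is the
    shape of the dictionary described above.
    """
    for name in uploaded:
        if Path(name).suffix.lower() in IMAGE_EXTENSIONS:
            return name
    raise ValueError("no image file found in the upload")


# A plain dict standing in for the result of files.upload()
example_upload = {"notes.txt": b"hello", "group_photo.JPG": b"\xff\xd8"}
img_path = pick_image_name(example_upload)
print(img_path)
```

In a notebook, the same helper could be called as `pick_image_name(uploaded)` right after the upload cell.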

Step 2: Loading Haar Cascade Model for Face Detection

Next, we load OpenCV’s Haar Cascade model, which is pre-trained to detect faces. This model scans the image for patterns resembling human faces and returns their coordinates.

# Load the Haar Cascade model for face detection

face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

The Haar Cascade is a popular method for object detection. It identifies edges, textures, and patterns associated with the object (in this case, faces). OpenCV provides a pre-trained face detection model, which is loaded using `CascadeClassifier`.
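To make "edges and patterns" more concrete, the sketch below computes one Haar-like feature on a toy image: the sum of pixel values in the left half of a window minus the sum in the right half, using a summed-area table (integral image) for fast rectangle sums. This is a simplified, pure-Python illustration of the idea, not OpenCV's implementation, and all function names are hypothetical.

```python
def integral_image(gray):
    """Build a summed-area table with one extra row and column of zeros."""
    height, width = len(gray), len(gray[0])
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += gray[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, x, y, w, h):
    """Sum of pixels in the rectangle with top-left (x, y), width w, height h."""
    return table[y + h][x + w] - table[y][x + w] - table[y + h][x] + table[y][x]


def two_rect_edge_feature(table, x, y, w, h):
    """Left half minus right half: large magnitude at a vertical edge."""
    half = w // 2
    return rect_sum(table, x, y, half, h) - rect_sum(table, x + half, y, half, h)


# 4x4 toy image: bright left half, dark right half
toy = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
table = integral_image(toy)
print(two_rect_edge_feature(table, 0, 0, 4, 4))
```

A cascade combines many such features, evaluated in stages, to decide whether a window is likely to contain a face.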

Step 3: Detecting Faces in the Image

We load the uploaded image and convert it to grayscale, as this helps improve face detection accuracy. Afterward, we use the face detector to find faces in the image.

# Load the image and convert to grayscale

img = cv2.imread(img_path)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# Detect faces in the image

faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30))
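For intuition, grayscale conversion collapses the three colour channels into a single brightness value. The sketch below applies the standard luma weights (0.299 for red, 0.587 for green, 0.114 for blue) that OpenCV documents for its colour-to-gray conversion to one pixel at a time. The function name is illustrative; cv2.cvtColor() does this for the whole image at once.

```python
def bgr_pixel_to_gray(b, g, r):
    """Weighted sum of the channels, rounded to the nearest integer."""
    return int(round(0.114 * b + 0.587 * g + 0.299 * r))


# Pixels are given in OpenCV's channel order: blue, green, red
print(bgr_pixel_to_gray(255, 255, 255))  # white pixel
print(bgr_pixel_to_gray(0, 0, 255))      # pure red pixel
print(bgr_pixel_to_gray(0, 0, 0))        # black pixel
```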

- Image Loading and Conversion:

  - Use cv2.imread() to load the uploaded image.

  - Convert the image to grayscale with cv2.cvtColor() to reduce complexity and improve detection.

- Detecting Faces:

  - Use detectMultiScale() to find faces in the grayscale image.

  - The function scales the image and checks different areas for face patterns.

  - Parameters like scaleFactor and minNeighbors adjust detection sensitivity and accuracy.
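The effect of scaleFactor can be sketched with a simplified model of a multi-scale search. OpenCV shrinks the image step by step rather than growing the window, and its internals differ in detail, so treat the code below as a conceptual illustration only: it lists the window sizes that would be tried, starting at minSize and growing by scaleFactor until the window no longer fits. The function name and the 640x480 image size are assumptions.

```python
def pyramid_scales(image_size, min_size=(30, 30), scale_factor=1.1):
    """List the window sizes a multi-scale search would try.

    Starts at min_size and grows by scale_factor until the window no
    longer fits inside the image. Sizes are (width, height) tuples.
    """
    img_w, img_h = image_size
    win_w, win_h = float(min_size[0]), float(min_size[1])
    sizes = []
    while win_w <= img_w and win_h <= img_h:
        sizes.append((int(win_w), int(win_h)))
        win_w *= scale_factor
        win_h *= scale_factor
    return sizes


fine = pyramid_scales((640, 480), scale_factor=1.1)
coarse = pyramid_scales((640, 480), scale_factor=1.5)
print(len(fine), "scales with scaleFactor=1.1")
print(len(coarse), "scales with scaleFactor=1.5")
```

A smaller scaleFactor means more scales are checked, which tends to find more faces at the cost of speed. minNeighbors then controls how many overlapping detections are required before a face is reported.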

Step 4: Setting Up the Gender Detection API

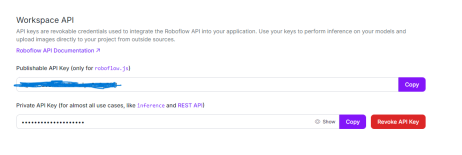

Now that we have detected the faces, we initialize the Roboflow API using InferenceHTTPClient to predict the gender of each detected face.

# Initialize InferenceHTTPClient for gender detection

CLIENT = InferenceHTTPClient(

    api_url="https://detect.roboflow.com",

    api_key="USE_YOUR_API"

)

The InferenceHTTPClient simplifies interaction with Roboflow’s pre-trained models by configuring a client with the Roboflow API URL and API key. This setup enables requests to be sent to the gender detection model hosted on Roboflow. The API key serves as a unique identifier for authentication, allowing secure access to and utilization of the Roboflow API.
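Because the API key authenticates your account, it is usually safer not to paste it into a shared notebook. A minimal sketch, assuming the key is stored in an environment variable (the name `ROBOFLOW_API_KEY` and the helper `load_api_key` are assumptions made for this example):

```python
import os


def load_api_key(env_var="ROBOFLOW_API_KEY", environ=None):
    """Read the API key from an environment variable instead of the source code.

    The variable name is an assumption for this sketch; use whatever name
    you set in your own environment.
    """
    environ = os.environ if environ is None else environ
    key = environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


# Usage with an explicit mapping so the example runs anywhere
print(load_api_key(environ={"ROBOFLOW_API_KEY": "demo-key"}))
```

The returned value can then be passed as `api_key=load_api_key()` when constructing InferenceHTTPClient.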

Step 5: Processing Each Detected Face

We loop through each detected face, draw a rectangle around it, and crop the face image for further processing. Each cropped face image is temporarily saved and sent to the Roboflow API, where the gender-detection-qiyyg/2 model is used to predict the gender.

The gender-detection-qiyyg/2 model is a pre-trained deep learning model optimized for classifying gender as male or female based on facial features. It provides predictions with a confidence score, indicating how certain the model is about the classification. The model is trained on a robust dataset, allowing it to make accurate predictions across a wide range of facial images. These predictions are returned by the API and used to label each face with the identified gender and confidence level.
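Before looking at the loop, it may help to see the response shape the code relies on: a dictionary with a predictions list whose entries carry a class and a confidence. The sketch below picks the most confident entry and ignores weak ones. The sample response is hand-written for illustration (not real API output), and the class names, the `best_prediction` helper, and the 0.5 threshold are assumptions.

```python
def best_prediction(result, min_confidence=0.5):
    """Return (label, confidence) for the most confident prediction, or None.

    `result` is expected to look like
    {"predictions": [{"class": ..., "confidence": ...}, ...]}.
    """
    predictions = result.get("predictions") or []
    if not predictions:
        return None
    top = max(predictions, key=lambda p: p["confidence"])
    if top["confidence"] < min_confidence:
        return None
    return top["class"], top["confidence"]


# Hand-written response in the shape described above (not real API output)
sample = {"predictions": [
    {"class": "female", "confidence": 0.62},
    {"class": "male", "confidence": 0.91},
]}
label, confidence = best_prediction(sample)
print(f"{label} ({confidence:.2f})")
```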

# Initialize face count

face_count = 0

# List to store cropped face images with labels

cropped_faces = []

# Process each detected face

for (x, y, w, h) in faces:

    face_count += 1

    # Draw rectangles around the detected faces

    cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)

    # Extract the face region

    face_img = img[y:y + h, x:x + w]

    # Save the face image temporarily

    face_img_path = 'temp_face.jpg'

    cv2.imwrite(face_img_path, face_img)

    # Detect gender using the InferenceHTTPClient

    result = CLIENT.infer(face_img_path, model_id="gender-detection-qiyyg/2")

    if 'predictions' in result and result['predictions']:

        prediction = result['predictions'][0]

        gender = prediction['class']

        confidence = prediction['confidence']

        # Label the rectangle with the gender and confidence

        label = f'{gender} ({confidence:.2f})'

        cv2.putText(img, label, (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 0, 0), 2)

        # Add the cropped face with label to the list

        cropped_faces.append((face_img, label))

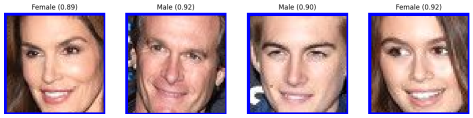

For each detected face, the system draws a bounding box using cv2.rectangle() to visually highlight the face in the image. It then crops the face region using slicing (face_img = img[y:y + h, x:x + w]), isolating it for further processing. After temporarily saving the cropped face, the system sends it to the Roboflow model via CLIENT.infer(), which returns the gender prediction along with a confidence score. The system adds these results as text labels above each face using cv2.putText(), providing a clear and informative overlay.
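Two small details of the cropping and labelling step can be handled with plain coordinate arithmetic. The sketch below is an optional refinement, not part of the original code: it grows the face box by a margin while keeping it inside the image, and moves the label inside the box when a face touches the top edge. The helper names, the 10% padding, and the rough 20-pixel text height are all assumptions.

```python
def padded_crop_box(x, y, w, h, image_width, image_height, padding=0.1):
    """Grow a face box by `padding` (fraction of its size) and clamp it to the image.

    Returns (x1, y1, x2, y2), usable as img[y1:y2, x1:x2].
    """
    pad_x = int(w * padding)
    pad_y = int(h * padding)
    x1 = max(0, x - pad_x)
    y1 = max(0, y - pad_y)
    x2 = min(image_width, x + w + pad_x)
    y2 = min(image_height, y + h + pad_y)
    return x1, y1, x2, y2


def label_origin(x, y, offset=10, text_height=20):
    """Put the label above the box; if there is no room, put it just inside the top edge."""
    above = y - offset
    if above - text_height >= 0:
        return x, above
    return x, y + text_height + offset


print(padded_crop_box(100, 100, 100, 100, 150, 150))
print(label_origin(50, 100))
print(label_origin(50, 5))
```

The tuple from `label_origin` could be passed as the position argument of cv2.putText() in place of (x, y - 10).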

Step 6: Displaying the Results

Finally, we visualize the output. We first convert the image from BGR to RGB (as OpenCV uses BGR by default), then display the detected faces and gender predictions. After that, we show the individual cropped faces with their respective labels.

# Convert image from BGR to RGB for display

img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

# Display the image with detected faces and gender labels

plt.figure(figsize=(10, 10))

plt.imshow(img_rgb)

plt.axis('off')

plt.title(f"Detected Faces: {face_count}")

plt.show()

# Display each cropped face with its label horizontally

fig, axes = plt.subplots(1, face_count, figsize=(15, 5))

for i, (face_img, label) in enumerate(cropped_faces):

    face_rgb = cv2.cvtColor(face_img, cv2.COLOR_BGR2RGB)

    axes[i].imshow(face_rgb)

    axes[i].axis('off')

    axes[i].set_title(label)

plt.show()

- Image Conversion: Since OpenCV uses the BGR format by default, we convert the image to RGB using cv2.cvtColor() for correct color display in Matplotlib.

- Displaying Results:

  - We use Matplotlib to display the image with the detected faces and the gender labels on top of them.

  - We also show each cropped face image and the predicted gender label in a separate subplot.
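The BGR-to-RGB conversion mentioned above amounts to reversing the channel order of every pixel. A tiny pure-Python sketch on a nested-list "image" shows the idea; the function name is illustrative, and in practice cv2.cvtColor() (or slicing a NumPy array with `img[..., ::-1]`) does the same on real images.

```python
def bgr_to_rgb(image):
    """Reverse the channel order of every pixel in a nested-list image."""
    return [[pixel[::-1] for pixel in row] for row in image]


# One row with a pure-blue and a pure-red pixel, both in BGR order
bgr_image = [[(255, 0, 0), (0, 0, 255)]]
rgb_image = bgr_to_rgb(bgr_image)
print(rgb_image)
```

Without this step, Matplotlib would interpret the blue channel as red, so faces would appear with a blue tint.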

Original data

Output Result data

Conclusion

In this guide, we built a gender detection pipeline with OpenCV and Roboflow in Python. By using OpenCV for face detection and Roboflow for gender prediction, we created a system that can find faces and classify gender in images. The addition of Matplotlib for visualization further enhanced our project, providing clear displays of the results. This project highlights the effectiveness of combining these technologies and demonstrates their practical benefits in real-world applications.

Key Takeaways

- The project demonstrates an effective approach to detecting and classifying gender from images using a pre-trained AI model. The model classifies gender with high confidence, demonstrating its reliability.

- By combining tools such as Roboflow for AI inference, OpenCV for image processing, and Matplotlib for visualization, the project successfully integrates different technologies to achieve its goals.

- The system’s ability to detect and classify the gender of multiple people in a single image highlights its robustness and flexibility, making it suitable for various applications.

- Using a pre-trained model gives high-confidence predictions, as shown by the confidence scores in the results. This accuracy is crucial for applications requiring reliable gender classification.

- The project uses visualization techniques to annotate images with detected faces and predicted genders. This makes the results more interpretable and valuable for further analysis.

Frequently Asked Questions

Q1. What is the purpose of the project?
A. The project aims to detect and classify gender from images using AI. It leverages pre-trained models to identify and label individuals’ genders in photos.

Q2. What technologies and tools were used?
A. The project utilized the Roboflow gender detection model for AI inference, OpenCV for image processing, and Matplotlib for visualization. It also used Python for scripting and data handling.

Q3. How does the gender detection model work?
A. The model analyzes images to detect faces and then classifies each detected face as male or female based on the trained AI algorithms. It outputs confidence scores for the predictions.

Q4. How accurate is gender detection?
A. The model demonstrates high accuracy with confidence scores indicating reliable predictions. For example, the confidence scores in the results were above 80%, showing strong performance.

Q5. What kind of images can the model process?

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.
