Introduction

Artificial Intelligence has entered a new era. Gone are the days when models would simply output information based on predefined rules. A leading approach in AI today revolves around RAG (Retrieval-Augmented Generation) systems, and more specifically, the use of agents to intelligently retrieve, analyze, and verify information. This points toward the future of intelligent data retrieval, where models not only answer questions but ground those answers in retrieved evidence, improving their accuracy and depth.

In this blog, we’ll walk through building your own agent-powered RAG system using CrewAI and LangChain, two popular frameworks for building applications on top of large language models. But before we get to the code, let’s get familiar with these technologies.

Learning Outcomes

- Learn the fundamentals of RAG and its role in improving AI accuracy through real-time data retrieval.

- Explore the functionality of CrewAI and how its specialized agents contribute to efficient task automation in AI systems.

- Understand how LangChain enables task chaining, creating logical workflows that enhance AI-driven processes.

- Discover how to build an agentic RAG system using tools like LLaMA 3, the Groq API, CrewAI, and LangChain for reliable and intelligent information retrieval.

This article was published as a part of the Data Science Blogathon.

Table of contents

- Introduction

- What is Retrieval-Augmented Generation?

- What is CrewAI?

- What is LangChain?

- CrewAI LangChain: The Dream Team for RAG

- Building Your Own Agentic RAG System

- Step 1: Setting Up the Environment

- Step 2: Adding GROQ API key

- Step 3: Setting Up the LLM

- Step 3: Retrieving Data from a PDF

- Step 4: Creating a RAG Tool to Pass PDF

- Step 5: Adding Web Search with Tavily

- Step 6: Defining a Router Tool

- Step 7: Creating the Agents

- Step 8: Defining Tasks

- Step 9: Building the Crew

- Step 10: Running the Pipeline

- Conclusion

- Frequently Asked Questions

What is Retrieval-Augmented Generation?

RAG represents a hybrid approach in modern AI. Unlike traditional models that solely rely on pre-existing knowledge baked into their training, RAG systems pull real-time information from external data sources (like databases, documents, or the web) to augment their responses.

In simple terms, a RAG system doesn’t just rely on what it “knows”: it actively retrieves relevant, up-to-date information and then generates a response based on it. This grounds the AI’s answers in verifiable sources, which makes them more likely to be accurate and easier to check.
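To make the retrieve-then-generate idea concrete, here is a toy sketch in plain Python. It is an illustration under simplifying assumptions, not how the pipeline in this tutorial works: counting shared words stands in for a real retriever (the tutorial later uses embeddings), the three documents are made-up examples, and the generation step is left as a comment because it needs an LLM.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many question words they share (a toy retriever)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augment the question with retrieved passages so the model answers from them."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below. If the answer is not there, say you don't know.\n"
            f"Context:\n{context}\n\nQuestion: {question}")


docs = [  # made-up example documents
    "The Transformer architecture is based solely on attention mechanisms.",
    "Paris is the capital of France.",
    "Self-attention relates different positions of a single sequence.",
]
question = "What does self-attention relate?"
prompt = build_prompt(question, retrieve(question, docs))
# Generation step: send `prompt` to an LLM of your choice.
```

The instruction to answer only from the supplied context is what ties the generated answer to the retrieved facts; the irrelevant document about Paris never reaches the prompt.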

Why Does RAG Matter?

- Dynamic Information: RAG allows the AI to fetch current, real-time data from external sources, making it more responsive and up-to-date.

- Improved Accuracy: By retrieving and referencing external documents, RAG reduces the likelihood of the model generating hallucinated or inaccurate answers.

- Enhanced Comprehension: The retrieval of relevant background information improves the AI’s ability to provide detailed, informed responses.

Now that you understand what RAG is, imagine supercharging it with agents—AI entities that handle specific tasks like retrieving data, evaluating its relevance, or verifying its accuracy. This is where CrewAI and LangChain come into play, making the process even more streamlined and powerful.

What is CrewAI?

Think of CrewAI as an intelligent manager that orchestrates a team of agents. Each agent specializes in a particular task, whether it’s retrieving information, grading its relevance, or filtering out errors. The value comes from collaboration: agents pass their results to one another, working through complex queries step by step to deliver precise, accurate answers.

Why Is CrewAI Revolutionary?

- Agentic Intelligence: CrewAI breaks down tasks into specialized sub-tasks, assigning each to a unique AI agent.

- Collaborative AI: These agents interact, passing information and tasks between each other to ensure that the final result is robust and trustworthy.

- Customizable and Scalable: CrewAI is highly modular, allowing you to build systems that can adapt to a wide range of tasks—whether it’s answering simple questions or performing in-depth research.
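As a rough mental model of that collaboration, the sketch below runs a list of role-specialized "agents" in order and hands each one the previous agent's output as context. `SimpleAgent` and `run_crew` are hypothetical names invented for this illustration; they are not CrewAI's API (the real `Agent`, `Task`, and `Crew` classes appear in Steps 7 to 9), and the lambdas stand in for LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleAgent:
    """Hypothetical stand-in for a CrewAI agent: a role plus a function that does its job."""
    role: str
    work: Callable[[str, str], str]  # (question, context from previous agent) -> output


def run_crew(agents: list[SimpleAgent], question: str) -> list[tuple[str, str]]:
    """Run agents in order, handing each one the previous agent's output as context."""
    context, trace = "", []
    for agent in agents:
        context = agent.work(question, context)
        trace.append((agent.role, context))
    return trace


crew = [
    SimpleAgent("Retriever", lambda q, ctx: f"docs about {q}"),
    SimpleAgent("Grader", lambda q, ctx: "yes" if q in ctx else "no"),
]
trace = run_crew(crew, "self-attention")
```

Keeping each role narrow (retrieve, then grade) is what makes the intermediate outputs easy to inspect in the trace.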

What is LangChain?

While CrewAI brings the intelligence of agents, LangChain enables you to build workflows that chain together complex AI tasks. It ensures that agents perform their tasks in the right order, creating seamless, highly orchestrated AI processes.

Why Is LangChain Essential?

- LLM Orchestration: LangChain works with a wide variety of large language models (LLMs), from OpenAI to Hugging Face, enabling complex natural language processing.

- Data Flexibility: You can connect LangChain to diverse data sources, from PDFs to databases and web searches, ensuring the AI has access to the most relevant information.

- Scalability: With LangChain, you can build pipelines where each task leads into the next—perfect for sophisticated AI operations like multi-step question answering or research.
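The chaining idea can be sketched as plain function composition, where each step's output becomes the next step's input. This is a framework-free illustration, not LangChain code: in LangChain itself the same pipeline is usually written with the `|` operator (for example `prompt | llm | parser`), and the three step functions below are hypothetical stand-ins, with a fake LLM that appends a canned reply.

```python
from functools import reduce
from typing import Any, Callable


def chain(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps left to right: each step's output becomes the next step's input."""
    def run(value: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


# Hypothetical stand-ins for a prompt template, an LLM call, and an output parser
def format_prompt(question: str) -> str:
    return f"Question: {question}\nAnswer:"


def fake_llm(prompt: str) -> str:
    # A real chain would call a model here; this one appends a canned reply
    return prompt + " Self-attention lets each token weigh every other token. "


def parse_output(text: str) -> str:
    # Keep only the text after "Answer:" and trim surrounding whitespace
    return text.split("Answer:", 1)[1].strip()


qa_chain = chain(format_prompt, fake_llm, parse_output)
answer = qa_chain("What is self-attention?")
```

Because every step has the same "one input, one output" shape, steps can be swapped (a real model for `fake_llm`, a JSON parser for `parse_output`) without touching the rest of the chain.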

CrewAI LangChain: The Dream Team for RAG

By combining CrewAI’s agent-based framework with LangChain’s task orchestration, you can create a robust Agentic RAG system. In this system, each agent plays a role, whether it’s fetching relevant documents, verifying the quality of retrieved information, or grading answers for accuracy. This layered approach makes it more likely that responses are accurate and grounded in the most relevant and recent information available.

Let’s move forward and build an Agent-Powered RAG System that answers complex questions using a pipeline of AI agents.

Building Your Own Agentic RAG System

We will now build our own agentic RAG system step by step.

Before diving into the code, let’s install the necessary libraries:

```
!pip install crewai==0.28.8 crewai_tools==0.1.6 langchain_community==0.0.29 sentence-transformers langchain-groq --quiet
!pip install langchain_huggingface --quiet
# Note: this line upgrades crewai, langchain and langchain_community past the versions pinned above
!pip install --upgrade crewai langchain langchain_community
```

Step 1: Setting Up the Environment

We start by importing the necessary libraries:

```python
import os

from langchain_openai import ChatOpenAI
from langchain_community.tools.tavily_search import TavilySearchResults
from crewai_tools import PDFSearchTool, tool
from crewai import Agent, Crew, Task
```

In this step, we imported:

- ChatOpenAI: LangChain’s client for OpenAI-compatible chat APIs; we point it at Groq’s endpoint to call LLaMA 3.

- PDFSearchTool: A tool to search and retrieve information from PDFs.

- TavilySearchResults: For retrieving web-based search results.

- tool: A decorator from crewai_tools that turns a plain Python function into a tool an agent can call.

- Crew, Task, Agent: Core components of CrewAI that allow us to orchestrate agents and tasks.

Step 2: Adding GROQ API key

To access the Groq API, you typically need to authenticate by generating an API key. You can generate this key by logging into the Groq Console. Here’s a general outline of the process:

- Log in to the Groq Console using your credentials.

- Navigate to API Keys: Go to the section where you can manage your API keys.

- Generate a New Key: Select the option to create or generate a new API key.

- Save the API Key: Once generated, make sure to copy and securely store the key, as it will be required for authenticating API requests.

The key is sent as a bearer token in the HTTP Authorization header of each API request; in this tutorial, LangChain’s client adds that header for you once the key is configured.

Always refer to the official Groq documentation for specific details or additional steps related to accessing the API.

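```python
import os

# Replace the placeholder with your own key; avoid sharing notebooks that contain real keys
os.environ['GROQ_API_KEY'] = 'Add Your Groq API Key'
```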

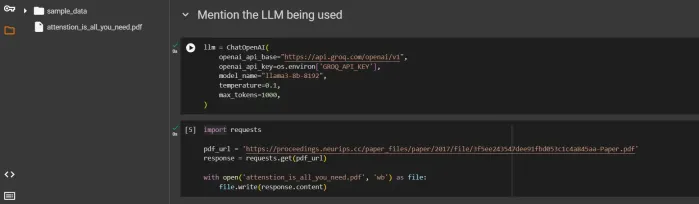
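Hardcoding the key in a notebook cell makes it easy to leak when the notebook is shared. A minimal alternative, sketched below with the standard library, prompts for the key with `getpass` only when the environment variable is missing. `ensure_env_key` is a hypothetical helper name, not part of any library used in this tutorial.

```python
import os
from getpass import getpass


def ensure_env_key(name: str) -> str:
    """Return the environment variable `name`, prompting for it (hidden input) if unset.

    Hypothetical helper: getpass does not echo the typed key, so it never
    appears in notebook output or cell source.
    """
    value = os.environ.get(name)
    if not value:
        value = getpass(f"Enter {name}: ")
        os.environ[name] = value
    return value


# Usage (prompts only when the variable is missing):
# ensure_env_key("GROQ_API_KEY")
```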

Step 3: Setting Up the LLM

```python
# Groq exposes an OpenAI-compatible endpoint, so LangChain's ChatOpenAI client can talk to it directly
llm = ChatOpenAI(
    openai_api_base="https://api.groq.com/openai/v1",
    openai_api_key=os.environ['GROQ_API_KEY'],
    model_name="llama3-8b-8192",
    temperature=0.1,
    max_tokens=1000,
)
```

Here, we define the language model that will be used by the system:

- llama3-8b-8192: Meta’s LLaMA 3 model with 8 billion parameters and an 8,192-token context window, served by Groq.

- Temperature: Set to 0.1 so the outputs are close to deterministic, which suits routing and grading steps that expect short, exact answers.

- Max tokens: Caps each generated response at 1000 tokens, keeping answers concise.
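Because Groq exposes an OpenAI-compatible API, the `ChatOpenAI` configuration above roughly amounts to sending OpenAI-style chat-completion requests to Groq's base URL. The sketch below builds (but does not send) such a request with the standard library to show where the model name, `temperature`, `max_tokens`, and API key end up. It assumes the endpoint path `/chat/completions` under the base URL used above, and `build_chat_request` is a hypothetical helper. Groq's model catalog also changes over time, so `llama3-8b-8192` may need to be replaced with a currently listed model.

```python
import json
import urllib.request

# Assumption: the chat endpoint sits at <base>/chat/completions, as in the OpenAI API
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(api_key: str, question: str,
                       model: str = "llama3-8b-8192",
                       temperature: float = 0.1,
                       max_tokens: int = 1000) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The API key travels as a bearer token in the Authorization header
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("YOUR_KEY", "What is self-attention?")
# To actually send it: json.load(urllib.request.urlopen(req))
```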

Step 3: Retrieving Data from a PDF

To demonstrate how RAG works, we download a PDF and search through it:

```python
import requests

pdf_url = 'https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf'

response = requests.get(pdf_url, timeout=60)
response.raise_for_status()  # fail loudly instead of saving an HTML error page as a "PDF"

# Saved to the working directory (/content on Google Colab); Step 4 reuses this exact filename
with open('attenstion_is_all_you_need.pdf', 'wb') as file:
    file.write(response.content)
```

This downloads the famous “Attention Is All You Need” paper and saves it locally. We’ll search this PDF in the next step.

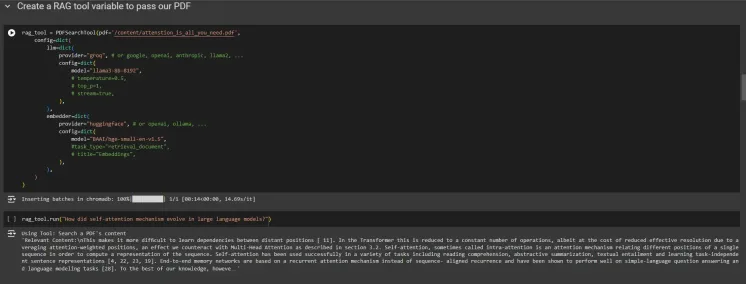

Step 4: Creating a RAG Tool to Pass PDF

In this step, we create a RAG tool that searches the PDF, using an embedding model to find relevant passages and a language model to answer from them.

- PDF Integration: The PDFSearchTool loads the downloaded paper for querying, allowing the system to extract information from the document.

- LLM Configuration: We use LLaMA 3 8B (via Groq’s API) as the language model that processes user queries and turns the retrieved PDF content into detailed answers.

- Embedder Setup: Hugging Face’s BAAI/bge-small-en-v1.5 model embeds the query and the PDF’s text, enabling the tool to match each query with the most semantically similar sections of the PDF.

Finally, we call rag_tool.run() with a query such as “How did the self-attention mechanism evolve in large language models?” to retrieve information.

```python
rag_tool = PDFSearchTool(
    pdf='/content/attenstion_is_all_you_need.pdf',  # Colab path of the file saved in Step 3
    config=dict(
        llm=dict(
            provider="groq",  # or google, openai, anthropic, llama2, ...
            config=dict(
                model="llama3-8b-8192",
                # temperature=0.5,
                # top_p=1,
                # stream=True,
            ),
        ),
        embedder=dict(
            provider="huggingface",  # or openai, ollama, ...
            config=dict(
                model="BAAI/bge-small-en-v1.5",
                # task_type="retrieval_document",
                # title="Embeddings",
            ),
        ),
    ),
)

rag_tool.run("How did self-attention mechanism evolve in large language models?")
```

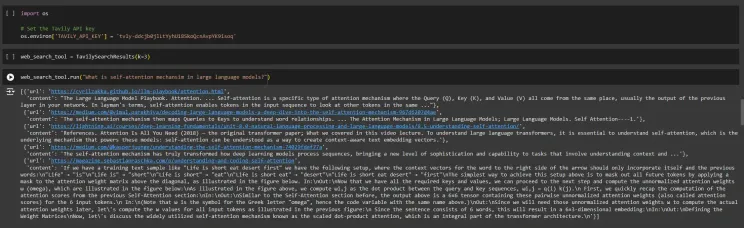
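`PDFSearchTool` hides chunking, embedding, and similarity search behind a single `run()` call. The sketch below approximates those steps in plain Python so the mechanics are visible. It is not the tool's actual implementation: word-count vectors stand in for BAAI/bge-small-en-v1.5 embeddings, the chunk size and overlap are arbitrary assumptions, and the sample text is a short made-up paraphrase rather than the real paper.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9-]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 3) -> list[tuple[float, str]]:
    """Return the k chunks most similar to the query, best first."""
    q = vectorize(query)
    scored = [(cosine(q, vectorize(c)), c) for c in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


paper = ("The Transformer relies entirely on self-attention to compute representations of its input and output. "
         "Recurrent models process tokens one step at a time. "
         "Multi-head attention lets the model attend to information from different representation subspaces.")
chunks = chunk_words(paper, size=12, overlap=4)
best_score, best_chunk = top_k("How does self-attention work?", chunks, k=1)[0]
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk; real embeddings would also match paraphrases that this word-count stand-in misses.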

Step 5: Adding Web Search with Tavily

Set your Tavily API key to enable web search:

```python
import os

# Set the Tavily API key
os.environ['TAVILY_API_KEY'] = "Add Your Tavily API Key"

# max_results caps how many search hits the tool returns
web_search_tool = TavilySearchResults(max_results=3)

web_search_tool.run("What is self-attention mechanism in large language models?")
```

This tool lets us perform a web search and retrieve up to 3 results.
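Before search hits reach an LLM, they usually need to be flattened into a prompt-friendly context block. The helper below is a hypothetical sketch that numbers each hit and tags it with its URL so later grading steps can refer to sources. It assumes each hit is a dict with `url` and `content` keys; check what your installed version of `TavilySearchResults` actually returns. The sample hits are made up.

```python
def format_search_results(results: list[dict], max_chars: int = 500) -> str:
    """Turn search hits into a numbered, source-tagged context block for a prompt."""
    lines = []
    for i, hit in enumerate(results, start=1):
        # Collapse whitespace and truncate long snippets to keep the prompt small
        snippet = " ".join(hit.get("content", "").split())[:max_chars]
        lines.append(f"[{i}] {hit.get('url', 'unknown source')}\n{snippet}")
    return "\n\n".join(lines)


sample_hits = [  # made-up sample data, not real search output
    {"url": "https://example.com/a", "content": "Self-attention relates  positions\nof a sequence."},
    {"url": "https://example.com/b", "content": "Transformers stack attention layers."},
]
context = format_search_results(sample_hits)
```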

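Step 6: Defining a Router Tool

```python
@tool
def router_tool(question: str) -> str:
    """Route a question: return 'vectorstore' for questions about self-attention
    (answered from the PDF), otherwise 'websearch'."""
    # The docstring doubles as the tool description the agent sees
    if 'self-attention' in question.lower():
        return 'vectorstore'
    return 'websearch'
```

The router tool directs queries either to the vectorstore built from the PDF or to a web search. It checks whether the question mentions self-attention (the topic of the downloaded paper) and sends everything else to the web. Its return values match the 'vectorstore' and 'websearch' labels that the router task in Step 8 expects.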
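A single hard-coded keyword is brittle: a question about "multi-head attention" would go to the web even though the paper covers it. One option, sketched below under the assumption that a keyword list is good enough for your documents, is to keep the routing logic in a plain function that is easy to unit-test and only wrap it with `@tool` afterwards. `route` and `VECTORSTORE_KEYWORDS` are hypothetical names, and the keywords are simply topics from the Attention paper.

```python
# Hypothetical keyword list: tune it to whatever documents your vectorstore actually holds
VECTORSTORE_KEYWORDS = ("self-attention", "multi-head attention", "transformer", "positional encoding")


def route(question: str, keywords: tuple[str, ...] = VECTORSTORE_KEYWORDS) -> str:
    """Return 'vectorstore' if any keyword appears in the question, else 'websearch'."""
    text = question.lower()
    return 'vectorstore' if any(k in text for k in keywords) else 'websearch'
```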

Step 7: Creating the Agents

We define a series of agents to handle different parts of the query-answering pipeline:

Router Agent:

Routes questions to the right retrieval tool (PDF or web search).

```python
Router_Agent = Agent(
    role='Router',
    goal='Route user question to a vectorstore or web search',
    backstory=(
        # Trailing spaces keep the concatenated sentences from running together
        "You are an expert at routing a user question to a vectorstore or web search. "
        "Use the vectorstore for questions on concepts related to self-attention and the Transformer "
        "architecture, which is what the indexed PDF covers. "
        "You do not need to be stringent with the keywords in the question related to these topics. "
        "Otherwise, use web search."
    ),
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Retriever Agent:

Retrieves the information from the chosen source (PDF or web search).

```python
Retriever_Agent = Agent(
    role="Retriever",
    goal="Use the information retrieved from the vectorstore or the web to answer the question",
    backstory=(
        "You are an assistant for question-answering tasks. "
        "Use the information present in the retrieved context to answer the question. "
        "You have to provide a clear concise answer."
    ),
    # The retriever task refers to these tools by name, so the agent needs access to them
    tools=[rag_tool, web_search_tool],
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Grader Agent:

Ensures the retrieved information is relevant.

```python
Grader_agent = Agent(
    role='Retrieval Grader',  # distinct from the final 'Answer Grader' role below
    goal='Filter out erroneous retrievals',
    backstory=(
        "You are a grader assessing relevance of a retrieved document to a user question. "
        "If the document contains keywords related to the user question, grade it as relevant. "
        "It does not need to be a stringent test. You have to make sure that the answer is relevant to the question."
    ),
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Hallucination Grader:

Filters out hallucinations (claims that are not supported by the retrieved information).

```python
hallucination_grader = Agent(
    role="Hallucination Grader",
    goal="Filter out hallucination",
    backstory=(
        "You are a hallucination grader assessing whether an answer is grounded in / supported by a set of facts. "
        "Make sure you meticulously review the answer and check if the response provided is in alignment "
        "with the question asked."
    ),
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Answer Grader:

Grades the final answer and ensures it’s useful.

```python
answer_grader = Agent(
    role="Answer Grader",
    goal="Check that the final answer is useful for resolving the question",
    backstory=(
        "You are a grader assessing whether an answer is useful to resolve a question. "
        "Make sure you meticulously review the answer and check if it makes sense for the question asked. "
        "If the answer is relevant, generate a clear and concise response. "
        "If the answer generated is not relevant, perform a web search using 'web_search_tool'."
    ),
    tools=[web_search_tool],  # needed for the web-search fallback described in the backstory
    verbose=True,
    allow_delegation=False,
    llm=llm,
)
```

Step 8: Defining Tasks

Each task assigns a specific job to one of the agents:

Router Task:

Determines whether the query should go to the PDF search or web search.

```python
router_task = Task(
    description=("Analyse the keywords in the question {question}. "
                 "Based on the keywords decide whether it is eligible for a vectorstore search or a web search. "
                 "Return a single word 'vectorstore' if it is eligible for vectorstore search. "
                 "Return a single word 'websearch' if it is eligible for web search. "
                 "Do not provide any other preamble or explanation."
                 ),
    expected_output=("Give a binary choice 'websearch' or 'vectorstore' based on the question. "
                     "Do not provide any other preamble or explanation."),
    agent=Router_Agent,
    tools=[router_tool],
)
```

Retriever Task:

Retrieves the necessary information.

```python
retriever_task = Task(
    description=("Based on the response from the router task, extract information for the question {question} "
                 "with the help of the respective tool. "
                 "Use the web_search_tool to retrieve information from the web in case the router task output is 'websearch'. "
                 "Use the rag_tool to retrieve information from the vectorstore in case the router task output is 'vectorstore'."
                 ),
    expected_output=("You should analyse the output of the 'router_task'. "
                     "If the response is 'websearch' then use the web_search_tool to retrieve information from the web. "
                     "If the response is 'vectorstore' then use the rag_tool to retrieve information from the vectorstore. "
                     "Return a clear and concise text as response."),
    agent=Retriever_Agent,
    context=[router_task],  # receives the router's 'vectorstore' / 'websearch' decision
)
```

Grader Task:

Grades the retrieved information.

```python
grader_task = Task(
    description=("Based on the response from the retriever task for the question {question}, "
                 "evaluate whether the retrieved content is relevant to the question."
                 ),
    expected_output=("Binary score 'yes' or 'no' to indicate whether the document is relevant to the question. "
                     "You must answer 'yes' if the response from the 'retriever_task' is in alignment with the question asked. "
                     "You must answer 'no' if the response from the 'retriever_task' is not in alignment with the question asked. "
                     "Do not provide any preamble or explanations except for 'yes' or 'no'."),
    agent=Grader_agent,
    context=[retriever_task],
)
```
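The grader tasks ask for a bare 'yes' or 'no', but LLMs do not always comply and may reply "Yes." or "Answer: no". If you inspect these verdicts in Python, for example to log them or branch on them, a tolerant parser like the hypothetical one below helps. It returns `None` rather than guessing when the reply contains both words or neither.

```python
import re
from typing import Optional


def parse_binary_verdict(output: str) -> Optional[bool]:
    """Map a grader's free-text reply to True ('yes'), False ('no') or None (unclear)."""
    words = set(re.findall(r"[a-z]+", output.lower()))
    has_yes, has_no = "yes" in words, "no" in words
    if has_yes == has_no:  # both or neither present: ambiguous, so refuse to guess
        return None
    return has_yes
```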

Hallucination Task:

Ensures the answer is grounded in facts.

```python
hallucination_task = Task(
    description=("Based on the response from the grader task for the question {question}, "
                 "evaluate whether the answer is grounded in / supported by a set of facts."),
    expected_output=("Binary score 'yes' or 'no' to indicate whether the answer is in sync with the question asked. "
                     "Respond 'yes' if the answer is useful and contains facts about the question asked. "
                     "Respond 'no' if the answer is not useful and does not contain facts about the question asked. "
                     "Do not provide any preamble or explanations except for 'yes' or 'no'."),
    agent=hallucination_grader,
    # Include the retriever output so the grader sees the answer itself, not only the 'yes'/'no' relevance score
    context=[retriever_task, grader_task],
)
```
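The hallucination task relies on the LLM's own judgment of grounding. For a cheap, deterministic cross-check, one option is a lexical heuristic: count how many answer sentences have most of their words present in the retrieved context. The sketch below is a hypothetical helper with an arbitrary 0.5 threshold. It cannot detect paraphrased or subtly wrong claims, so treat it as a sanity check rather than a replacement for the grader agent.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounded_fraction(answer: str, context: str, min_overlap: float = 0.5) -> float:
    """Share of answer sentences whose words mostly (>= min_overlap) appear in the context."""
    context_words = _tokens(context)
    # Split after sentence-ending punctuation followed by whitespace
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _tokens(sentence)
        if words and len(words & context_words) / len(words) >= min_overlap:
            supported += 1
    return supported / len(sentences)
```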

Answer Task:

Provides the final answer or performs a web search if needed.

```python
answer_task = Task(
    description=("Based on the response from the hallucination task for the question {question}, "
                 "evaluate whether the answer is useful to resolve the question. "
                 "If the answer is 'yes' return a clear and concise answer. "
                 "If the answer is 'no' then perform a 'websearch' and return the response."),
    expected_output=("Return a clear and concise response if the response from 'hallucination_task' is 'yes'. "
                     "Perform a web search using 'web_search_tool' and return a clear and concise response "
                     "only if the response from 'hallucination_task' is 'no'. "
                     "Otherwise respond as 'Sorry! unable to find a valid response'."),
    # The retriever output supplies the answer text; the hallucination verdict decides what to do with it
    context=[retriever_task, hallucination_task],
    agent=answer_grader,
)
```
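In CrewAI's sequential process, the branching in the answer task ('yes' keeps the answer, 'no' triggers a web search) is an instruction the LLM interprets, not logic the framework enforces. If you need guaranteed behavior, one option is to make that decision in ordinary Python, as in the hypothetical sketch below. The `web_search` argument is a placeholder for any callable, such as a wrapper around `web_search_tool.run`.

```python
from typing import Callable

FALLBACK = "Sorry! unable to find a valid response"


def final_answer(answer: str, hallucination_verdict: str,
                 web_search: Callable[[str], str], question: str) -> str:
    """Mirror the answer task's instructions: 'yes' keeps the answer, 'no' falls back to search."""
    verdict = hallucination_verdict.strip().lower().rstrip(".")
    if verdict == "yes":
        return answer
    if verdict == "no":
        result = web_search(question)
        return result if result else FALLBACK
    return FALLBACK  # unclear verdict: do not guess
```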

Step 9: Building the Crew

We group the agents and tasks into a Crew that will manage the overall pipeline:

```python
# Tasks run in the order listed (CrewAI's default sequential process)
rag_crew = Crew(
    agents=[Router_Agent, Retriever_Agent, Grader_agent, hallucination_grader, answer_grader],
    tasks=[router_task, retriever_task, grader_task, hallucination_task, answer_task],
    verbose=True,
)
```

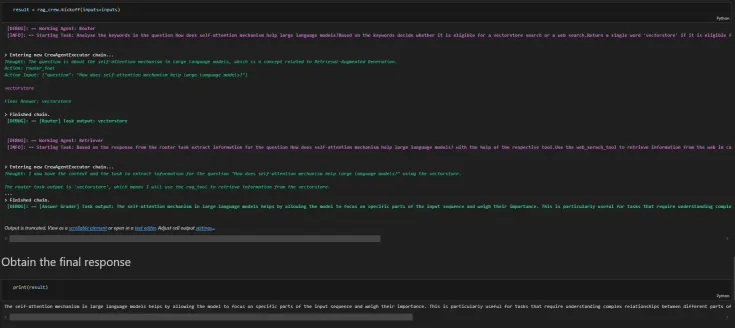

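Step 10: Running the Pipeline

Finally, we ask a question and kick off the RAG system:

```python
# The {question} placeholders in the task descriptions are filled from this dict
inputs = {"question": "How does self-attention mechanism help large language models?"}
result = rag_crew.kickoff(inputs=inputs)
print(result)
```

This pipeline passes the question through the agents in turn: it routes the query, retrieves relevant information, grades it for relevance and grounding, and returns a concise answer. Because every check is itself an LLM judgment, the grading steps reduce hallucinations rather than eliminate them.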

Conclusion

The combination of RAG, CrewAI, and LangChain offers a glimpse of where AI applications are heading. By leveraging agentic intelligence and task chaining, we can build systems whose answers are better grounded and easier to verify. These systems don’t just generate information: they actively retrieve, verify, and filter it to improve the quality of their responses.

With tools like CrewAI and LangChain at your disposal, building intelligent, agent-driven AI systems is well within reach. Whether you’re working in AI research, automated customer support, or any other data-intensive field, Agentic RAG systems can help you reach new levels of efficiency and accuracy.


Key Takeaways

- RAG systems combine natural language generation with real-time data retrieval, ensuring AI can pull accurate, up-to-date information from external sources for more reliable responses.

- CrewAI employs a team of specialized AI agents, each responsible for different tasks like data retrieval, evaluation, and verification, resulting in a highly efficient, agentic system.

- LangChain enables the creation of multi-step workflows that connect various tasks, allowing AI systems to process information more effectively through logical sequencing and orchestration of large language models (LLMs).

- By combining CrewAI’s agentic framework with LangChain’s task chaining, you can build intelligent AI systems that retrieve and verify information in real time, which can make responses noticeably more accurate and reliable.

- The blog walked through the process of creating your own Agentic RAG system using advanced tools like LLaMA 3, Groq API, CrewAI, and LangChain, making it clear how these technologies work together to automate and enhance AI-driven solutions.

Frequently Asked Questions

Q1. How does CrewAI contribute to building agentic systems?

A. CrewAI orchestrates multiple AI agents, each specializing in tasks like retrieving information, verifying relevance, and ensuring accuracy.

Q2. What is LangChain used for in a RAG system?

A. LangChain creates workflows that chain AI tasks together, ensuring each step of data processing and retrieval happens in the right order.

Q3. What is the role of agents in a RAG system?

A. Agents handle specific tasks like retrieving data, verifying its accuracy, and grading responses, making the system more reliable and precise.

Q4. Why should I use the Groq API in my RAG system?

A. The Groq API provides access to powerful language models like LLaMA 3, enabling high-performance AI for complex tasks.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

