XML/RSS Data Integration: Practical Guide for Developers & Architects

XML/RSS data integration can be achieved by parsing and generating XML/RSS files: 1) use Python's xml.etree.ElementTree module or the feedparser library to parse XML/RSS files and extract data; 2) use ElementTree to generate XML/RSS files, adding nodes and data step by step.

Introduction

In today's data-driven world, XML and RSS remain an important part of many applications, especially for content aggregation and data exchange. For developers and architects, knowing how to integrate XML/RSS data effectively not only improves productivity but also makes projects more flexible and scalable. This article is a hands-on guide to XML/RSS data integration that will help you master this key skill.

By reading this article, you will learn how to parse and generate XML/RSS data, where these formats fit in modern applications, and some practical best practices and performance optimization techniques. Drawing on my own experience, I will also share problems I have run into in real projects and how I solved them, to help you avoid common pitfalls.

Review of basic knowledge

XML (Extensible Markup Language) and RSS (Really Simple Syndication) are two common data formats. XML is used for the storage and transmission of structured data, while RSS is a standard format for content distribution and aggregation. Understanding the basics of these two formats is the first step in integrating them.

An XML document is made up of elements, delimited by tags, that can be nested to form a tree structure. RSS is a specific XML-based format used to publish frequently updated content such as blog posts and news. An RSS feed usually contains elements such as title, link and description, which make it easy for other applications to aggregate the content.
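To make that structure concrete, here is a minimal RSS 2.0 document parsed with xml.etree.ElementTree. The feed contents are made up for illustration; the point is the <rss> → <channel> → <item> nesting and the title, link and description elements described above.

```python
import xml.etree.ElementTree as ET

# A minimal RSS 2.0 document (illustrative content): <rss> wraps one <channel>,
# which holds channel metadata plus any number of <item> elements.
RSS_SAMPLE = """<rss version="2.0">
  <channel>
    <title>Example Blog</title>
    <link>https://example.com</link>
    <description>Posts from an example blog</description>
    <item>
      <title>First post</title>
      <link>https://example.com/first</link>
      <description>Hello, RSS</description>
    </item>
  </channel>
</rss>"""

root = ET.fromstring(RSS_SAMPLE)       # the <rss> element
channel = root.find('channel')         # its single <channel> child

print(root.tag, root.get('version'))
print(channel.findtext('title'))
for item in channel.findall('item'):
    print(item.findtext('title'), item.findtext('link'))
```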

When processing XML/RSS data, we usually rely on libraries such as Python's built-in xml.etree.ElementTree module or the third-party feedparser library. These tools parse XML/RSS files and let us extract the data they contain.

Core concept or function analysis

XML/RSS parsing and generation

Parsing XML/RSS data is one of the core tasks in integrating it. Let's look at a simple example that uses Python's xml.etree.ElementTree to parse an RSS file:

```python
import xml.etree.ElementTree as ET

# Read the RSS file into an element tree
tree = ET.parse('example.rss')
root = tree.getroot()  # the <rss> element

# Traverse the RSS items under <channel>
for item in root.findall('./channel/item'):
    # findtext() returns None instead of raising if the element is missing
    title = item.findtext('title')
    link = item.findtext('link')
    print(f'Title: {title}, Link: {link}')
```

This code reads an RSS file and iterates over its items, extracting each title and link. Similarly, we can use ElementTree to generate XML/RSS files:

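```python
import xml.etree.ElementTree as ET

# Create the root element; RSS 2.0 requires version="2.0" on <rss>
root = ET.Element('rss', version='2.0')
channel = ET.SubElement(root, 'channel')
# A complete RSS 2.0 channel also needs title, link and description (omitted for brevity)
item = ET.SubElement(channel, 'item')

# Add child elements to the item
ET.SubElement(item, 'title').text = 'Example Title'
ET.SubElement(item, 'link').text = 'https://example.com'

# Write the tree to a file, including an XML declaration
tree = ET.ElementTree(root)
tree.write('output.rss', encoding='utf-8', xml_declaration=True)
```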

How it works

At its core, XML/RSS parsing is a matter of traversing a tree and operating on its nodes. The parser reads the XML file into a tree structure, and we then access and manipulate its nodes by traversing that tree. For an RSS file, we usually locate the channel node first, then iterate over its item nodes and extract their data.
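The traversal described above can be sketched as a small recursive function. The walk() helper is hypothetical, written only for this illustration; it relies on the fact that iterating over an ElementTree Element yields its direct children, so a depth-first visit needs nothing more than recursion.

```python
import xml.etree.ElementTree as ET

def walk(element, depth=0, out=None):
    """Depth-first (pre-order) traversal recording (depth, tag, text) per node."""
    if out is None:
        out = []
    text = (element.text or '').strip()
    out.append((depth, element.tag, text))
    for child in element:  # iterating an Element yields its direct children
        walk(child, depth + 1, out)
    return out

doc = ET.fromstring(
    '<rss version="2.0"><channel><title>Blog</title>'
    '<item><title>Post</title><link>https://example.com/p</link></item>'
    '</channel></rss>'
)

# Print the tree with indentation proportional to depth
for depth, tag, text in walk(doc):
    print('  ' * depth + tag, text)
```

For RSS specifically, a path query such as root.findall('./channel/item') performs the same walk but stops at the nodes you actually need.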

Generation works the other way around: we start from the root node, add child nodes and data step by step until the XML tree is complete, and then write it to a file.
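These top-down generation steps can be wrapped in a reusable function. This is a minimal sketch: build_rss() and its list-of-dicts input shape are assumptions for illustration, not part of any library. It also fills in the title, link and description elements that RSS 2.0 requires on every channel.

```python
import xml.etree.ElementTree as ET

def build_rss(title, link, description, items):
    """Build an RSS 2.0 document top-down and return it as UTF-8 bytes.

    `items` is assumed to be a list of dicts with 'title', 'link' and an
    optional 'description' key (a hypothetical input shape for this sketch).
    """
    rss = ET.Element('rss', version='2.0')
    channel = ET.SubElement(rss, 'channel')
    # Channel elements required by RSS 2.0
    for tag, value in (('title', title), ('link', link), ('description', description)):
        ET.SubElement(channel, tag).text = value
    # One <item> per entry, adding only the fields that are present
    for entry in items:
        item = ET.SubElement(channel, 'item')
        for tag in ('title', 'link', 'description'):
            if entry.get(tag):
                ET.SubElement(item, tag).text = entry[tag]
    # Serialize the finished tree (xml_declaration requires Python 3.8+)
    return ET.tostring(rss, encoding='utf-8', xml_declaration=True)

data = build_rss('Example Blog', 'https://example.com', 'Example feed', [
    {'title': 'First post', 'link': 'https://example.com/first'},
    {'title': 'Second post', 'link': 'https://example.com/second', 'description': 'More'},
])
print(data.decode('utf-8'))
```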

In terms of performance, the efficiency of XML/RSS parsing and generation mainly depends on the file size and parser implementation. For large files, you may want to consider using a streaming parser to reduce memory footprint.
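One streaming option in the standard library is ET.iterparse(), which emits events while reading the input instead of building the full tree first. In this sketch, iter_items() is a hypothetical helper that handles each <item> as soon as its end tag is read and then clears it. Note that clear() empties the item but leaves the empty element attached to its parent, so for very large feeds you may also want to remove processed items from the channel.

```python
import io
import xml.etree.ElementTree as ET

def iter_items(source):
    """Yield (title, link) for each <item> without keeping the whole tree.

    `source` can be a filename or a binary file object.
    """
    for event, elem in ET.iterparse(source, events=('end',)):
        # An 'end' event means the element and all its children are complete
        if elem.tag == 'item':
            yield elem.findtext('title'), elem.findtext('link')
            elem.clear()  # drop the item's children and text to free memory

# Usage with an in-memory document; a large file path works the same way
sample = io.BytesIO(
    b'<rss version="2.0"><channel>'
    b'<item><title>A</title><link>https://example.com/a</link></item>'
    b'<item><title>B</title><link>https://example.com/b</link></item>'
    b'</channel></rss>'
)
for title, link in iter_items(sample):
    print(title, link)
```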

Usage examples

Basic usage

Let's look at a more practical example, using Python's feedparser library to parse an RSS feed and extract the contents:

```python
import feedparser

# Parse the RSS feed (feedparser accepts a URL, a file path or a string)
feed = feedparser.parse('https://example.com/feed')

# Traverse the feed entries; .get() avoids an error when a field is missing
for entry in feed.entries:
    print(f"Title: {entry.get('title')}, Link: {entry.get('link')}, "
          f"Published: {entry.get('published', 'n/a')}")
```

This code uses the feedparser library to parse an RSS feed and extract each entry's title, link and publication time. feedparser is a very convenient tool that handles the various RSS and Atom formats, which simplifies parsing considerably.

Advanced Usage

In some complex scenarios, we may need to handle RSS feeds more deeply. For example, we could write a script that automatically extracts content from multiple RSS feeds and generates a summary report:

```python
import feedparser
from collections import defaultdict

# List of RSS feeds to process
feeds = [
    'https://example1.com/feed',
    'https://example2.com/feed',
]

# Map each feed URL to the list of entries extracted from it
data = defaultdict(list)

# Traverse the RSS feeds
for feed_url in feeds:
    feed = feedparser.parse(feed_url)
    for entry in feed.entries:
        data[feed_url].append({
            'title': entry.get('title', ''),
            'link': entry.get('link', ''),
            'published': entry.get('published', 'n/a'),
        })

# Generate the summary report
for feed_url, entries in data.items():
    print(f'Feed: {feed_url}')
    for entry in entries:
        print(f' - Title: {entry["title"]}, Link: {entry["link"]}, '
              f'Published: {entry["published"]}')
```

This example extracts content from multiple RSS feeds and produces a summary report. It uses defaultdict to group entries by feed while iterating over each feed and processing its entries.

Common Errors and Debugging Tips

Common problems when processing XML/RSS data include:

- XML format errors: An XML file must strictly follow the specification, otherwise the parser reports an error. You can avoid this kind of problem by validating or checking the format before parsing.

- Encoding issues: XML/RSS files may use different character encodings, so make sure the parser handles them correctly. xml.etree.ElementTree normally takes the encoding from the file's XML declaration; you can override it by passing a parser created with ET.XMLParser(encoding=...) to ET.parse(), and choose the output encoding with the encoding parameter of ElementTree.write().

- Missing data: Some fields may be absent or empty, so you need appropriate error handling and default values.
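The format-error and missing-data cases can be handled in one place. This sketch assumes a hypothetical parse_feed() helper that logs malformed input (ET.ParseError carries a (line, column) position) and falls back to default values when an item lacks a field.

```python
import logging
import xml.etree.ElementTree as ET

logger = logging.getLogger(__name__)

def parse_feed(xml_text):
    """Return a list of item dicts, or an empty list if the XML is malformed."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        # Malformed XML: log where the parser stopped and give up on this feed
        logger.error('Malformed XML at line %s, column %s: %s', *exc.position, exc)
        return []
    items = []
    for item in root.iterfind('./channel/item'):
        items.append({
            # findtext() returns the default when the child element is missing
            'title': item.findtext('title', default='(untitled)'),
            'link': item.findtext('link', default=''),
        })
    return items
```

Returning an empty list keeps one broken feed from stopping a batch job; if the caller needs to distinguish "broken" from "empty", re-raising after logging is the alternative.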

When debugging these problems, you can use the following tips:

- Use debugging tools: Many IDEs and debuggers let you step through code execution, inspect variable values and pinpoint the problem.

- Logging: Adding logging to your code helps you trace the program's execution and find exactly where an exception occurs.

- Unit testing: Unit tests verify that your code is correct and ensure that changes don't introduce new problems.
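As an illustration of the unit-testing tip, here is a minimal unittest sketch. extract_links() is a hypothetical helper under test; the tests pin down its normal output, its behaviour when a field is missing, and that malformed XML raises ET.ParseError.

```python
import unittest
import xml.etree.ElementTree as ET

def extract_links(xml_text):
    """Hypothetical helper under test: return every item link in an RSS document."""
    root = ET.fromstring(xml_text)
    return [item.findtext('link', default='') for item in root.iterfind('./channel/item')]

class ExtractLinksTest(unittest.TestCase):
    def test_returns_links_in_document_order(self):
        xml_text = ('<rss><channel>'
                    '<item><link>https://example.com/a</link></item>'
                    '<item><link>https://example.com/b</link></item>'
                    '</channel></rss>')
        self.assertEqual(extract_links(xml_text),
                         ['https://example.com/a', 'https://example.com/b'])

    def test_missing_link_defaults_to_empty_string(self):
        self.assertEqual(extract_links('<rss><channel><item/></channel></rss>'), [''])

    def test_malformed_xml_raises_parse_error(self):
        with self.assertRaises(ET.ParseError):
            extract_links('<rss><channel>')
```

Saved as a module, these tests can be run with python -m unittest.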

Performance optimization and best practices

In practical applications, it is very important to optimize the performance of XML/RSS data integration. Here are some optimization tips and best practices:

- Use a streaming parser: For large XML/RSS files, a streaming parser reduces memory usage because it processes the document incrementally instead of loading the whole tree, and it lets you start working before the entire file has been read. Python's xml.sax module (as well as xml.etree.ElementTree.iterparse()) supports streaming parsing.

- Cache results: If you repeatedly parse the same XML/RSS file, consider caching the parsed results to avoid the overhead of parsing it again.

- Parallel processing: If you need to process multiple RSS feeds, consider handling them in parallel with multiple threads or processes to improve overall throughput.
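The three tips can be combined in one sketch: an xml.sax handler streams each document, functools.lru_cache avoids re-parsing identical input, and a ThreadPoolExecutor processes several documents concurrently. The class and function names are hypothetical, and the feeds are assumed to be already downloaded as bytes.

```python
import xml.sax
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache

class ItemTitleHandler(xml.sax.ContentHandler):
    """Collect the text of every <title> inside an <item>, event by event."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_item = False
        self._in_title = False
        self._chunks = []

    def startElement(self, name, attrs):
        if name == 'item':
            self._in_item = True
        elif name == 'title' and self._in_item:
            self._in_title = True
            self._chunks = []

    def characters(self, content):
        # characters() may be called several times for a single text node
        if self._in_title:
            self._chunks.append(content)

    def endElement(self, name):
        if name == 'title' and self._in_title:
            self.titles.append(''.join(self._chunks).strip())
            self._in_title = False
        elif name == 'item':
            self._in_item = False

@lru_cache(maxsize=128)
def extract_titles(xml_bytes):
    """Stream-parse one document; identical inputs are served from the cache."""
    handler = ItemTitleHandler()
    xml.sax.parseString(xml_bytes, handler)
    return tuple(handler.titles)  # immutable, so cached results can't be mutated

def extract_all(documents, max_workers=4):
    """Process several documents concurrently with a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(extract_titles, documents))
```

Threads pay off mainly when the work waits on I/O, such as downloading feeds; pure parsing is CPU-bound and limited by the GIL, so ProcessPoolExecutor may be the better choice there. Caching on the full document bytes also keeps those bytes in memory, so for files on disk a key such as the path plus modification time may be more economical.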

There are some best practices to note when writing code:

- Code readability: Use meaningful variable names and comments to make the code easier to read and maintain.

- Error handling: Add appropriate error handling so that the program handles exceptions gracefully instead of crashing.

- Modular design: Split the code into modules or functions to make it more reusable and maintainable.

Through these tips and practices, you can integrate XML/RSS data more effectively to improve the performance and reliability of your project.

Summary

XML/RSS data integration is an important part of many applications. After reading this article, you should know how to parse and generate XML/RSS data, understand where these formats are used, and be familiar with several practical best practices and performance optimization techniques. I hope this knowledge and experience helps you process XML/RSS data in real projects and improves both your development efficiency and the quality of your projects.

The above is the detailed content of XML/RSS Data Integration: Practical Guide for Developers & Architects. For more information, please follow other related articles on the PHP Chinese website!

XML外部实体注入漏洞的示例分析May 11, 2023 pm 04:55 PM

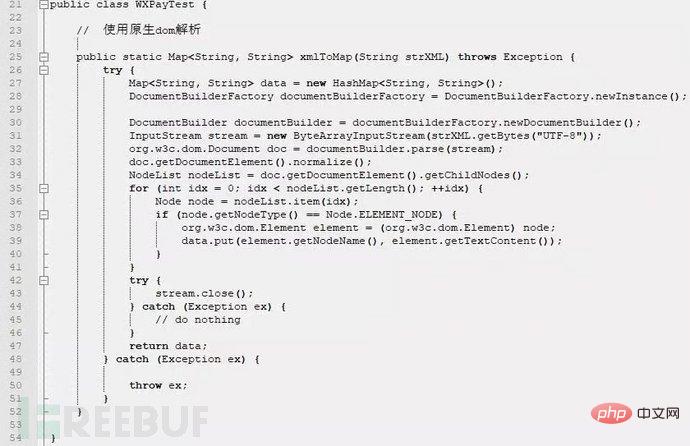

XML外部实体注入漏洞的示例分析May 11, 2023 pm 04:55 PM一、XML外部实体注入XML外部实体注入漏洞也就是我们常说的XXE漏洞。XML作为一种使用较为广泛的数据传输格式,很多应用程序都包含有处理xml数据的代码,默认情况下,许多过时的或配置不当的XML处理器都会对外部实体进行引用。如果攻击者可以上传XML文档或者在XML文档中添加恶意内容,通过易受攻击的代码、依赖项或集成,就能够攻击包含缺陷的XML处理器。XXE漏洞的出现和开发语言无关,只要是应用程序中对xml数据做了解析,而这些数据又受用户控制,那么应用程序都可能受到XXE攻击。本篇文章以java

php如何将xml转为json格式?3种方法分享Mar 22, 2023 am 10:38 AM

php如何将xml转为json格式?3种方法分享Mar 22, 2023 am 10:38 AM当我们处理数据时经常会遇到将XML格式转换为JSON格式的需求。PHP有许多内置函数可以帮助我们执行这个操作。在本文中,我们将讨论将XML格式转换为JSON格式的不同方法。

Python中xmltodict对xml的操作方式是什么May 04, 2023 pm 06:04 PM

Python中xmltodict对xml的操作方式是什么May 04, 2023 pm 06:04 PMPythonxmltodict对xml的操作xmltodict是另一个简易的库,它致力于将XML变得像JSON.下面是一个简单的示例XML文件:elementsmoreelementselementaswell这是第三方包,在处理前先用pip来安装pipinstallxmltodict可以像下面这样访问里面的元素,属性及值:importxmltodictwithopen("test.xml")asfd:#将XML文件装载到dict里面doc=xmltodict.parse(f

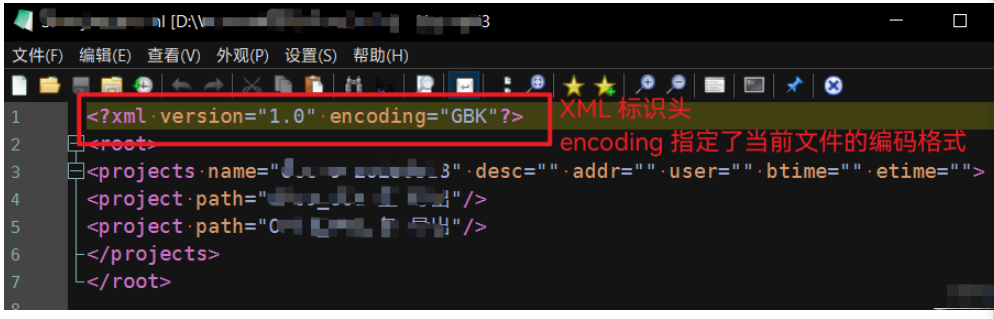

Python中怎么对XML文件的编码进行转换May 21, 2023 pm 12:22 PM

Python中怎么对XML文件的编码进行转换May 21, 2023 pm 12:22 PM1.在Python中XML文件的编码问题1.Python使用的xml.etree.ElementTree库只支持解析和生成标准的UTF-8格式的编码2.常见GBK或GB2312等中文编码的XML文件,用以在老旧系统中保证XML对中文字符的记录能力3.XML文件开头有标识头,标识头指定了程序处理XML时应该使用的编码4.要修改编码,不仅要修改文件整体的编码,还要将标识头中encoding部分的值修改2.处理PythonXML文件的思路1.读取&解码:使用二进制模式读取XML文件,将文件变为

xml中node和element的区别是什么Apr 19, 2022 pm 06:06 PM

xml中node和element的区别是什么Apr 19, 2022 pm 06:06 PMxml中node和element的区别是:Element是元素,是一个小范围的定义,是数据的组成部分之一,必须是包含完整信息的结点才是元素;而Node是节点,是相对于TREE数据结构而言的,一个结点不一定是一个元素,一个元素一定是一个结点。

使用nmap-converter将nmap扫描结果XML转化为XLS实战的示例分析May 17, 2023 pm 01:04 PM

使用nmap-converter将nmap扫描结果XML转化为XLS实战的示例分析May 17, 2023 pm 01:04 PM使用nmap-converter将nmap扫描结果XML转化为XLS实战1、前言作为网络安全从业人员,有时候需要使用端口扫描利器nmap进行大批量端口扫描,但Nmap的输出结果为.nmap、.xml和.gnmap三种格式,还有夹杂很多不需要的信息,处理起来十分不方便,而将输出结果转换为Excel表格,方面处理后期输出。因此,有技术大牛分享了将nmap报告转换为XLS的Python脚本。2、nmap-converter1)项目地址:https://github.com/mrschyte/nmap-

深度使用Scrapy:如何爬取HTML、XML、JSON数据?Jun 22, 2023 pm 05:58 PM

深度使用Scrapy:如何爬取HTML、XML、JSON数据?Jun 22, 2023 pm 05:58 PMScrapy是一款强大的Python爬虫框架,可以帮助我们快速、灵活地获取互联网上的数据。在实际爬取过程中,我们会经常遇到HTML、XML、JSON等各种数据格式。在这篇文章中,我们将介绍如何使用Scrapy分别爬取这三种数据格式的方法。一、爬取HTML数据创建Scrapy项目首先,我们需要创建一个Scrapy项目。打开命令行,输入以下命令:scrapys

Python如何使用Beautiful Soup(BS4)库解析HTML和XMLMay 13, 2023 pm 09:55 PM

Python如何使用Beautiful Soup(BS4)库解析HTML和XMLMay 13, 2023 pm 09:55 PM一、BeautifulSoup概述:BeautifulSoup支持从HTML或XML文件中提取数据的Python库;它支持Python标准库中的HTML解析器,还支持一些第三方的解析器lxml。BeautifulSoup自动将输入文档转换为Unicode编码,输出文档转换为utf-8编码。安装:pipinstallbeautifulsoup4可选择安装解析器pipinstalllxmlpipinstallhtml5lib二、BeautifulSoup4简单使用假设有这样一个Html,具体内容如下

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Zend Studio 13.0.1

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.