LLM Chatbots: Revolutionizing Conversational AI with Retrieval Augmented Generation (RAG)

Since ChatGPT launched in November 2022, chatbots built on Large Language Models (LLMs) have become ubiquitous across a wide range of applications. Chatbots themselves are not new, but many older ones were overly complex and frustrating to use; LLMs have revitalized the field. This blog explores the power of LLMs, the Retrieval Augmented Generation (RAG) technique, and how to build your own chatbot using OpenAI's GPT API and Pinecone.

This guide covers:

- Retrieval Augmented Generation (RAG)

- Large Language Models (LLMs)

- Utilizing OpenAI GPT and other APIs

- Vector Databases and their necessity

- Creating a chatbot with Pinecone and OpenAI in Python

For a deeper dive, explore our courses on Vector Databases for Embeddings with Pinecone and the code-along on Building Chatbots with OpenAI API and Pinecone.

Large Language Models (LLMs)

LLMs, such as GPT-4, are machine learning models that use deep learning (specifically, the transformer architecture) to understand and generate human language. Trained on massive datasets (trillions of words from diverse online sources), they can handle complex language tasks.

LLMs excel at text generation in various styles and formats, from creative writing to technical documentation. Their capabilities include summarization, conversational AI, and language translation, often capturing nuanced language features.

However, LLMs have limitations. "Hallucinations" (plausible but incorrect output) and bias inherited from training data are significant challenges. LLMs represent a major AI advancement, but their output needs careful checking to mitigate these risks.

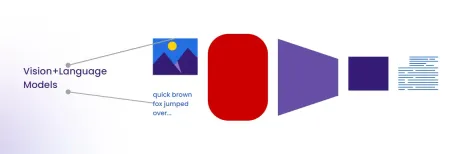

Retrieval Augmented Generation (RAG)

RAG addresses the limitations of LLMs: outdated, generic, or false answers caused by gaps in the training data or by "hallucinations". It improves accuracy and trustworthiness by retrieving relevant information from specified sources and supplying it to the LLM alongside the user's question. This gives developers more control over LLM responses.

The RAG Process (Simplified)

(A detailed RAG tutorial is available separately.)

- Data Preparation: External data (e.g., current research, news) is prepared and converted by an embedding model into embeddings, numerical vectors that capture the meaning of the text.

- Embedding Storage: Embeddings are stored in a Vector Database (like Pinecone), optimized for efficient vector data retrieval.

- Information Retrieval: A semantic search using the user's query (converted into a vector) retrieves the most relevant information from the database.

- Prompt Augmentation: Retrieved data and the user query augment the LLM prompt, leading to more accurate responses.

- Data Updates: External data is regularly updated to maintain accuracy.
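
The steps above can be sketched end to end in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: `embed` is a toy bag-of-words stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical replacement for a vector database such as Pinecone, and the augmented prompt is only printed rather than sent to an LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): word counts instead of a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (vector, original text)

    def upsert(self, text):
        # Data preparation + embedding storage (also used for data updates).
        self.items.append((embed(text), text))

    def search(self, query, k=2):
        # Information retrieval: rank stored texts by similarity to the query.
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def augment_prompt(query, passages):
    """Prompt augmentation: place retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {query}"


store = InMemoryVectorStore()
for doc in [
    "Pinecone is a managed vector database.",
    "Random forests combine many decision trees.",
    "RAG retrieves documents and adds them to the prompt.",
]:
    store.upsert(doc)

query = "What is a vector database?"
retrieved = store.search(query, k=1)
prompt = augment_prompt(query, retrieved)
print(prompt)
```

In a real system, the printed prompt would be passed to the LLM, and `upsert` would be called again whenever the external data changes.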

Vector Databases

Vector databases manage high-dimensional vectors (mathematical representations of data). They excel at similarity searches based on vector distance, which enables semantic querying: items with similar meaning sit close together in the vector space. Applications include finding similar images, documents, or products. Pinecone is a popular managed example whose indexing is designed for fast similarity search, which makes it a good fit for RAG applications.
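
To make "similarity search based on vector distance" concrete, here is a small brute-force sketch. The three-dimensional vectors and the `catalog` and `nearest` names are made up for illustration; real embeddings have hundreds or thousands of dimensions, and a vector database uses specialized indexes instead of scanning every item.

```python
import math

# Hypothetical 3-dimensional embeddings for a tiny product catalog.
catalog = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "espresso machine": [0.0, 0.9, 0.4],
}


def nearest(query_vec, vectors, k=2):
    """Return the k item names whose vectors are closest (Euclidean distance)."""
    return sorted(vectors, key=lambda name: math.dist(query_vec, vectors[name]))[:k]


# A query vector that lies near the two footwear items.
neighbors = nearest([0.9, 0.1, 0.1], catalog)
print(neighbors)
```

Euclidean distance is only one option; cosine similarity and dot product are other common metrics, and the right choice usually depends on the embedding model.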

OpenAI API

OpenAI's API provides access to models such as GPT, DALL-E, and Whisper. It is served over HTTP, so it can be called from any programming language; in Python, the official openai library wraps the requests for you.

Python Example:

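    import os

    from openai import OpenAI

    # Placeholder key; in practice, set OPENAI_API_KEY in your shell, not in code.
    os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    completion = client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are an expert in machine learning."},
            {"role": "user", "content": "Explain how a random forest works."},
        ],
    )

    # Print only the generated text rather than the whole message object.
    print(completion.choices[0].message.content)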
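
For comparison, the same kind of call can be expressed as a raw HTTP request, which is roughly what the library sends on your behalf. The sketch below only builds the request with Python's standard library and does not send it; `build_chat_request` is a hypothetical helper name, and the key is a placeholder.

```python
import json
import os
import urllib.request


def build_chat_request(messages, model="gpt-4", api_key=None):
    """Build (but do not send) a POST request to the chat completions endpoint."""
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    [{"role": "user", "content": "Explain how a random forest works."}],
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
print(request.get_method(), request.full_url)

# Sending the request needs a valid key and network access:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Any language with an HTTP client can make the same request, which is why the API is easy to integrate outside Python.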

LangChain (Framework Overview)

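LangChain is an open-source framework that simplifies LLM application development by providing reusable components such as prompt templates, chains, retrievers, and vector store integrations. It is powerful but still under active development, so expect API changes between versions.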

End-to-End Python Example: Building an LLM Chatbot

This section builds a chatbot using OpenAI GPT-4 and Pinecone. (Note: Much of this code is adapted from the official Pinecone LangChain guide.)

1. OpenAI and Pinecone Setup: Obtain API keys.

2. Install Libraries: Use pip to install langchain, langchain-community, openai, tiktoken, pinecone-client, and pinecone-datasets.

3. Sample Dataset: Load a pre-embedded dataset (e.g., wikipedia-simple-text-embedding-ada-002-100K from pinecone-datasets). (Sampling a subset is recommended for faster processing.)

4. Pinecone Index Setup: Create a Pinecone index (langchain-retrieval-augmentation-fast in this example).

5. Data Insertion: Upsert the sampled data into the Pinecone index.

6. LangChain Integration: Initialize a LangChain vector store using the Pinecone index and OpenAI embeddings.

7. Querying: Use the vector store to perform similarity searches.

8. LLM Integration: Use ChatOpenAI and RetrievalQA (or RetrievalQAWithSourcesChain for source attribution) to integrate the LLM with the vector store.
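
The steps above can be sketched as follows. Note the swap: to keep the example short and less sensitive to LangChain version changes, this sketch calls the OpenAI and Pinecone clients directly instead of going through a LangChain vector store and RetrievalQA. It assumes the v1+ openai package, a v3+ pinecone-client with a serverless index, and API keys in the `OPENAI_API_KEY` and `PINECONE_API_KEY` environment variables. The cloud and region values, the `text` metadata field, the cosine metric, the prompt wording, and all function names are assumptions for illustration.

```python
import os
import time

INDEX_NAME = "langchain-retrieval-augmentation-fast"  # index name from step 4
DATASET_NAME = "wikipedia-simple-text-embedding-ada-002-100K"  # dataset from step 3
EMBED_MODEL = "text-embedding-ada-002"  # model the dataset was embedded with
EMBED_DIM = 1536  # vector size produced by text-embedding-ada-002


def build_prompt(question, contexts):
    """Combine retrieved passages and the user question into one prompt."""
    context_block = "\n---\n".join(contexts)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


def prepare_index(sample_size=30_000):
    """Steps 3-5: load a sample of the dataset and upsert it into Pinecone."""
    # Imported lazily so build_prompt can be used without these packages.
    from pinecone import Pinecone, ServerlessSpec
    from pinecone_datasets import load_dataset

    dataset = load_dataset(DATASET_NAME)
    # Following the Pinecone guide: use the text blob as metadata and keep a subset.
    dataset.documents.drop(["metadata"], axis=1, inplace=True)
    dataset.documents.rename(columns={"blob": "metadata"}, inplace=True)
    dataset.documents.drop(dataset.documents.index[sample_size:], inplace=True)

    pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])
    if INDEX_NAME not in pc.list_indexes().names():
        pc.create_index(
            name=INDEX_NAME,
            dimension=EMBED_DIM,
            metric="cosine",
            spec=ServerlessSpec(cloud="aws", region="us-east-1"),  # assumed region
        )
        while not pc.describe_index(INDEX_NAME).status["ready"]:
            time.sleep(1)

    index = pc.Index(INDEX_NAME)
    for batch in dataset.iter_documents(batch_size=100):
        index.upsert(batch)
    return index


def answer(index, question, top_k=3):
    """Steps 7-8: retrieve similar passages, then ask the LLM with that context."""
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    embedding = client.embeddings.create(model=EMBED_MODEL, input=[question])
    result = index.query(
        vector=embedding.data[0].embedding, top_k=top_k, include_metadata=True
    )
    # Assumes each record stores its passage under the "text" metadata key.
    contexts = [match["metadata"].get("text", "") for match in result["matches"]]
    completion = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": build_prompt(question, contexts)}],
    )
    return completion.choices[0].message.content


# Example usage (requires both API keys and network access):
# index = prepare_index()
# print(answer(index, "Who was Benito Mussolini?"))
```

Using LangChain as in steps 6 to 8 replaces the `answer` function with a vector store plus a RetrievalQA chain, which also makes it easier to return sources alongside the answer.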

Conclusion

This blog demonstrated the power of RAG for building reliable and relevant LLM-powered chatbots. The combination of LLMs, vector databases (like Pinecone), and frameworks like LangChain empowers developers to create sophisticated conversational AI applications. Our courses provide further learning opportunities in these areas.

The above is the detailed content of How to Build a Chatbot Using the OpenAI API & Pinecone. For more information, please follow other related articles on the PHP Chinese website!

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

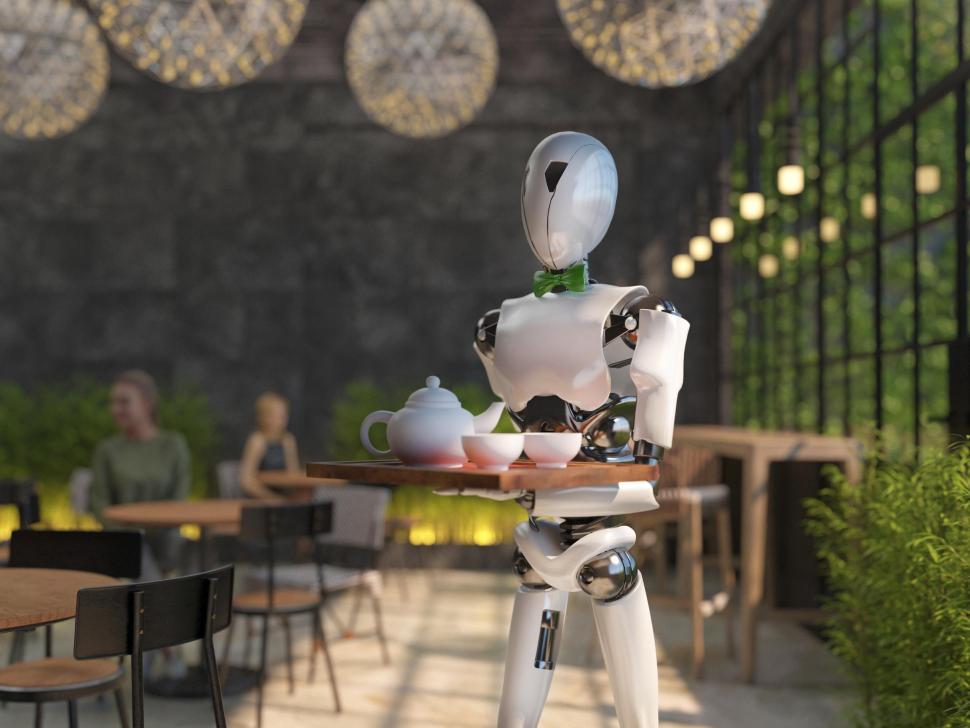

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Linux new version

SublimeText3 Linux latest version

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

Notepad++7.3.1

Easy-to-use and free code editor

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.