Most Large Language Models (LLMs) like GPT-4 are trained on generalized, often outdated datasets. While they excel at responding to general questions, they struggle with queries about recent news, latest developments, and domain-specific topics. In such cases, they may hallucinate or provide inaccurate responses.

Despite the emergence of better-performing models like Claude 3.5 Sonnet, we still need to either model fine-tuning for generating customized responses or use Retrieval-Augmented Generation (RAG) systems to provide extra context to the base model.

In this tutorial, we will explore RAG and fine-tuning, two distinct techniques used to improve LLM responses. We will examine their differences and put theory into practice by evaluating results.

Additionally, we will dive into hybrid techniques that combine fine-tuned models with RAG systems to leverage the best of both worlds. Finally, we will learn how to choose between these three approaches based on specific use cases and requirements.

Overview of RAG and Fine-Tuning

RAG and fine-tuning techniques improve the response generation for domain-specific queries, but they are inherently completely different techniques. Let's learn about them.

Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation is a process where large language models like GPT-4o become context-aware using external data sources. It is a combination of a retriever and a generator. The retriever fetches data from the Internet or a vector database and provides it to the generator with the original user’s query. The generator uses additional context to generate a highly accurate and relevant response.

To find out more, read our article, What is Retrieval Augmented Generation (RAG)? A Guide to the Basics and understand the inner workings of RAG application and various use cases.

Fine-Tuning

Fine-tuning is the process of tuning the pre-trained model using the domain-specific dataset. The pre-trained model is trained on multiple large corpses of general datasets scrapped from the Internet. They are good at responding to general questions, but they will struggle or even hallucinate while responding to domain-specific questions.

For example, a pre-trained model might be proficient in general conversational abilities but could produce wrong answers when asked about intricate medical procedures or legal precedents.

Fine-tuning it on a medical or legal dataset enables the model to understand and respond to questions within those fields with greater accuracy and relevance.

Follow the An Introductory Guide to Fine-Tuning LLMs tutorial to learn about customizing the pre-trained model with visual guides.

RAG vs. Fine-Tuning

We have learned about each methodology for improving the LLMs' response generation. Let’s examine the differences to understand them better.

1. Learning style

RAG uses a dynamic learning style, which allows language models to access and use the latest and most accurate data from databases, the Internet, or even APIs. This approach ensures that the generated responses are always up-to-date and relevant.

Fine-tuning involves static learning, where the model learns through a new dataset during the training phase. While this method allows the model to adapt to domain-specific response generation, it cannot integrate new information post-training without re-training.

2. Adaptability

RAG is best for generalizations. It uses the retrieval process to pull information from different data sources. RAG does not change the model's response; it just provides extra information to guide the model.

Fine-tuning customizes the model output and improves the model performance on a special domain that is closely associated with the training dataset. It also changes the style of response generation and sometimes provides more relevant answers than RAG systems.

3. Resource intensity

RAG is resource-intensive because it is performed during model inference. Compared to simple LLMs without RAG, RAG requires more memory and computing.

Fine-tuning is compute-intensive, but it is performed once. It requires multiple GPUs and high memory during the training process, but after that, it is quite resource-friendly compared to RAG systems.

4. Cost

RAG requires top-of-the-class embedding models and LLMs for better response generation. It also needs a fast vector database. The API and operation costs can rise quite quickly.

Fine-tuning will cost you big only once during the training process, but after that, you will be paying for model inference, which is quite cheaper than RAG.

Overall, on average, fine-tuning costs more than RAG if everything is considered.

5. Implementation Complexity

RAG systems can be built by software engineers and require medium technical expertise. You are required to learn about LLM designs, vector databases, embeddings, prompt engineers, and more, which does require time but is easy to learn in a month.

Fine-tuning the model demands high technical expertise. From preparing the dataset to setting tuning parameters to monitoring the model performance, years of experience in the field of natural language processing are needed.

Putting the Theory to the Test with Practical Examples

Let's test our theory by providing the same prompt to a fine-tuned model, RAG application, and a hybrid approach and then evaluate the results. The hybrid approach will combine the fine-tuned model with the RAG application. For this example, we will use the ruslanmv/ai-medical-chatbot dataset from Hugging Face, which contains conversations between patients and doctors about various health conditions.

Building RAG Application using Llama 3

We will start by building the RAG application using the Llama 3 and LangChain ecosystem.

You can also learn to build a RAG application using LlamaIndex by following the code along, Retrieval Augmented Generation with LlamaIndex.

1. Install all the necessary Python packages.

%%capture %pip install -U langchain langchainhub langchain_community langchain-huggingface faiss-gpu transformers accelerate

2. Load the necessary functions from LangChain and Transformers libraries.

from langchain.document_loaders import HuggingFaceDatasetLoader from langchain.text_splitter import RecursiveCharacterTextSplitter from langchain.embeddings import HuggingFaceEmbeddings from langchain.vectorstores import FAISS from transformers import AutoTokenizer, AutoModelForCausalLM,pipeline from langchain_huggingface import HuggingFacePipeline from langchain.chains import RetrievalQA

3. To access restricted models and datasets, it is recommended that you log in to the Hugging Face hub using the API key.

from huggingface_hub import login

from kaggle_secrets import UserSecretsClient

user_secrets = UserSecretsClient()

hf_token = user_secrets.get_secret("HUGGINGFACE_TOKEN")

login(token = hf_token)

4. Load the dataset by providing the dataset name and the column name to HuggingFaceDatasetLoader. The “Doctor” columns will be our main document, and the rest of the columns will be the metadata.

5. Limiting our dataset to the first 1000 rows. Reducing the dataset will help us reduce the data storage time in the vector database.

# Specify the dataset name dataset_name = "ruslanmv/ai-medical-chatbot" # Create a loader instance using dataset columns loader_doctor = HuggingFaceDatasetLoader(dataset_name,"Doctor") # Load the data doctor_data = loader_doctor.load() # Select the first 1000 entries doctor_data = doctor_data[:1000] doctor_data[:2]

As we can see, the “Doctor” column is the page content, and the rest are considered metadata.

6. Load the embedding model from Hugging Face using specific parameters like enabling GPU acceleration.

7. Test the embedding model by providing it with the sample text.

# Define the path to the embedding model

modelPath = "sentence-transformers/all-MiniLM-L12-v2"

# GPU acceleration

model_kwargs = {'device':'cuda'}

# Create a dictionary with encoding options

encode_kwargs = {'normalize_embeddings': False}

# Initialize an instance of HuggingFaceEmbeddings with the specified parameters

embeddings = HuggingFaceEmbeddings(

model_name=modelPath,

model_kwargs=model_kwargs,

encode_kwargs=encode_kwargs

)

text = "Why are you a doctor?"

query_result = embeddings.embed_query(text)

query_result[:3]

[-0.059351932257413864, 0.08008933067321777, 0.040729623287916183]

8. Convert the data into the embeddings and save them into the vector database.

9. Save the vector database in the local directory.

10. Perform a similarity search using the sample prompt.

vector_db = FAISS.from_documents(doctor_data, embeddings)

vector_db.save_local("/kaggle/working/faiss_doctor_index")

question = "Hi Doctor, I have a headache, help me."

searchDocs = vector_db.similarity_search(question)

print(searchDocs[0].page_content)

11. Convert the vector database instance to a retriever. This will help us create the RAG chain.

retriever = vector_db.as_retriever()

12. Load the tokenizer and model using the Llama 3 8B Chat model.

13. Use them to create the test generation pipeline.

14. Convert the pipeline into the LangChain LLM client.

import torch

base_model = "/kaggle/input/llama-3/transformers/8b-chat-hf/1"

tokenizer = AutoTokenizer.from_pretrained(base_model)

model = AutoModelForCausalLM.from_pretrained(

base_model,

return_dict=True,

low_cpu_mem_usage=True,

torch_dtype=torch.float16,

device_map="auto",

trust_remote_code=True,

)

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=120

)

llm = HuggingFacePipeline(pipeline=pipe)

15. Create a question and answer chain using the retriever, user query, RAG prompt, and LLM.

from langchain import hub

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

rag_prompt = hub.pull("rlm/rag-prompt")

qa_chain = (

{"context": retriever, "question": RunnablePassthrough()}

| rag_prompt

| llm

| StrOutputParser()

)

16. Test the Q&A chain by asking questions to the doctor.

question = "Hi Doctor, I have a headache, help me."

result = qa_chain.invoke(question)

print(result.split("Answer: ")[1])

It is quite similar to the dataset, but it does not pick up the style. It has understood the context and used it to write the response in its own style.

Let’s try again with another question.

%%capture %pip install -U langchain langchainhub langchain_community langchain-huggingface faiss-gpu transformers accelerate

This is a very direct answer. Maybe we need to fine-tune the model instead of using the RAG approach for the doctor and patient chatbot.

If you are encountering difficulty running the code, please consult the Kaggle notebook: Building RAG Application using Llama 3.

Learn how to improve RAG system performance with techniques like Chunking, Reranking, and Query Transformations by following the How to Improve RAG Performance: 5 Key Techniques with Examples tutorial.

Fine-tuning Llama 3 on Medical data

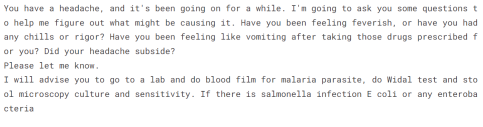

We won't be fine-tuning the model on the doctor and patient dataset because we have already done that in a previous tutorial: Fine-Tuning Llama 3 and Using It Locally: A Step-by-Step Guide. What we are going to do is load the fine-tuned model and provide it with the same question to evaluate the results. The fine-tuned model is available on Hugging Face and Kaggle.

If you are interested in fine-tuning the GPT-4 model using the OpenAI API, you can refer to the easy-to-follow DataCamp tutorial Fine-Tuning OpenAI's GPT-4: A Step-by-Step Guide.

Source: kingabzpro/llama-3-8b-chat-doctor

1. Load the tokenizer and model using the transformer library.

2. Ensure that you use the correct parameters to load the model in the Kaggle GPU T4 x2 environment.

from langchain.document_loaders import HuggingFaceDatasetLoader from langchain.text_splitter import RecursiveCharacterTextSplitter from langchain.embeddings import HuggingFaceEmbeddings from langchain.vectorstores import FAISS from transformers import AutoTokenizer, AutoModelForCausalLM,pipeline from langchain_huggingface import HuggingFacePipeline from langchain.chains import RetrievalQA

3. Apply the chat template to the messages.

4. Create a text generation pipeline using the model and tokenizer.

5. Provide the pipeline object with a prompt and generate the response.

from huggingface_hub import login

from kaggle_secrets import UserSecretsClient

user_secrets = UserSecretsClient()

hf_token = user_secrets.get_secret("HUGGINGFACE_TOKEN")

login(token = hf_token)

The response is quite similar to the dataset. The style is the same, but instead of giving a direct answer, it suggests that the patient undergoes further tests.

6. Let’s ask the second question.

# Specify the dataset name dataset_name = "ruslanmv/ai-medical-chatbot" # Create a loader instance using dataset columns loader_doctor = HuggingFaceDatasetLoader(dataset_name,"Doctor") # Load the data doctor_data = loader_doctor.load() # Select the first 1000 entries doctor_data = doctor_data[:1000] doctor_data[:2]

The style is the same, and the response is quite empathic and explanatory.

If you are encountering difficulty running the code, please consult the Kaggle notebook: Fine-tuned Llama 3 HF Inference.

Hybrid Approach (RAG Fine-tuning)

We will now provide the fine-tuned model with extra context to further tune the response and find the balance.

Instead of writing all the code again, we will dive directly into response generation using the Q&A chain. If you want to see the complete code for how we have combined a fine-tuned model with an RAG Q&A chain, please have a look at the Hybrid Approach (RAG Fine-tuning) Kaggle notebook.

Provide the chain with the same questions we asked the RAG and fine-tuned model.

%%capture %pip install -U langchain langchainhub langchain_community langchain-huggingface faiss-gpu transformers accelerate

The answer is quite accurate, and the response is generated in the doctor's style.

Let’s ask the second question.

from langchain.document_loaders import HuggingFaceDatasetLoader from langchain.text_splitter import RecursiveCharacterTextSplitter from langchain.embeddings import HuggingFaceEmbeddings from langchain.vectorstores import FAISS from transformers import AutoTokenizer, AutoModelForCausalLM,pipeline from langchain_huggingface import HuggingFacePipeline from langchain.chains import RetrievalQA

This is strange. We never provided additional context about whether the acne is pus-filled or not. Maybe the Hybrid model does not apply to some queries.

In the case of a doctor-patient chatbot, the fine-tuned model excels in style adoption and accuracy. However, this might vary for other use cases, which is why it's important to conduct extensive tests to determine the best method for your specific use case.

The official term for the Hybrid approach is RAFT (Retrieval Augmented Fine-Tuning). Learn more about it by reading What is RAFT? Combining RAG and Fine-Tuning To Adapt LLMs To Specialized Domains blog.

How to Choose Between RAG vs Fine-tuning vs RAFT

It all depends on your use case and available resources. If you are a startup with limited resources, then try building a RAG proof of concept using Open AI API and LangChain framework. For that, you will require limited resources, expertise, and datasets.

If you are a mid-level company and want to fine-tune to improve the response accuracy and deploy the open-source model on the cloud, you need to hire experts like data scientists and machine learning operations engineers. Fine-tuning requires top-of-the-class GPUs, large memory, a cleaned dataset, and technical team who understand LLMs.

A hybrid solution is both resource and compute intensive. It also requires an LLMOps engineer who can balance fine-tuning and RAG. You should consider this when you want to improve your response generation even further by taking advantage of the good qualities of RAG and a fine-tuned model.

Please refer to the table below for an overview of RAG, fine-tuning, and RAFT solutions.

|

RAG |

Fine-Tuning |

RAFT |

|

|

Advantages |

Contextual understanding, minimizes hallucinations, easily adapts to new data, cost-effective. |

Task-specific expertise, customization, enhanced accuracy, increased robustness. |

Combines strengths of both RAG and fine-tuning, deeper understanding and context. |

|

Disadvantages |

Data source management, complexity. |

Data bias, resource intensive, high computational costs, substantial memory requirements, time & expertise intensive. |

Complexity in implementation, requires balancing retrieval and fine-tuning processes. |

|

Implementation Complexity |

Higher than prompt engineering. |

Higher than RAG. Requires highly technical experts. |

Most complex of the three. |

|

Learning Style |

Dynamic |

Static |

Dynamic Static |

|

Adaptability |

Easily adapts to new data and evolving facts. |

Customize the outputs to specific tasks and domains. |

Adapts to both real-time data and specific tasks. |

|

Cost |

Low |

Moderate |

High |

|

Resource Intensity |

Low. Resources are used during Inference. |

Moderate. Resources are used during fine-tuning. |

High |

Conclusion

Large Language Models are at the heart of AI development today. Companies are looking for various ways to improve and customize these models without spending millions of dollars on training. They begin with parameter optimization and prompt engineering. They either select RAG or fine-tune the model to obtain an even better response and reduce hallucinations. While there are other techniques to improve the response, these are the most popular options available.

In this tutorial, we have learned about the differences between RAG and fine-tuning through both theory and practical examples. We also explored hybrid models and compared which method might work best for you.

To learn more about deploying LLMs and the various techniques involved, check out our code-along on RAG with LLaMAIndex and our course on deploying LLM applications with LangChain.

The above is the detailed content of RAG vs Fine-Tuning: A Comprehensive Tutorial with Practical Examples. For more information, please follow other related articles on the PHP Chinese website!

![Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]](https://img.php.cn/upload/article/001/242/473/174717025174979.jpg?x-oss-process=image/resize,p_40) Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]May 14, 2025 am 05:04 AM

Can't use ChatGPT! Explaining the causes and solutions that can be tested immediately [Latest 2025]May 14, 2025 am 05:04 AMChatGPT is not accessible? This article provides a variety of practical solutions! Many users may encounter problems such as inaccessibility or slow response when using ChatGPT on a daily basis. This article will guide you to solve these problems step by step based on different situations. Causes of ChatGPT's inaccessibility and preliminary troubleshooting First, we need to determine whether the problem lies in the OpenAI server side, or the user's own network or device problems. Please follow the steps below to troubleshoot: Step 1: Check the official status of OpenAI Visit the OpenAI Status page (status.openai.com) to see if the ChatGPT service is running normally. If a red or yellow alarm is displayed, it means Open

Calculating The Risk Of ASI Starts With Human MindsMay 14, 2025 am 05:02 AM

Calculating The Risk Of ASI Starts With Human MindsMay 14, 2025 am 05:02 AMOn 10 May 2025, MIT physicist Max Tegmark told The Guardian that AI labs should emulate Oppenheimer’s Trinity-test calculus before releasing Artificial Super-Intelligence. “My assessment is that the 'Compton constant', the probability that a race to

An easy-to-understand explanation of how to write and compose lyrics and recommended tools in ChatGPTMay 14, 2025 am 05:01 AM

An easy-to-understand explanation of how to write and compose lyrics and recommended tools in ChatGPTMay 14, 2025 am 05:01 AMAI music creation technology is changing with each passing day. This article will use AI models such as ChatGPT as an example to explain in detail how to use AI to assist music creation, and explain it with actual cases. We will introduce how to create music through SunoAI, AI jukebox on Hugging Face, and Python's Music21 library. Through these technologies, everyone can easily create original music. However, it should be noted that the copyright issue of AI-generated content cannot be ignored, and you must be cautious when using it. Let’s explore the infinite possibilities of AI in the music field together! OpenAI's latest AI agent "OpenAI Deep Research" introduces: [ChatGPT]Ope

What is ChatGPT-4? A thorough explanation of what you can do, the pricing, and the differences from GPT-3.5!May 14, 2025 am 05:00 AM

What is ChatGPT-4? A thorough explanation of what you can do, the pricing, and the differences from GPT-3.5!May 14, 2025 am 05:00 AMThe emergence of ChatGPT-4 has greatly expanded the possibility of AI applications. Compared with GPT-3.5, ChatGPT-4 has significantly improved. It has powerful context comprehension capabilities and can also recognize and generate images. It is a universal AI assistant. It has shown great potential in many fields such as improving business efficiency and assisting creation. However, at the same time, we must also pay attention to the precautions in its use. This article will explain the characteristics of ChatGPT-4 in detail and introduce effective usage methods for different scenarios. The article contains skills to make full use of the latest AI technologies, please refer to it. OpenAI's latest AI agent, please click the link below for details of "OpenAI Deep Research"

Explaining how to use the ChatGPT app! Japanese support and voice conversation functionMay 14, 2025 am 04:59 AM

Explaining how to use the ChatGPT app! Japanese support and voice conversation functionMay 14, 2025 am 04:59 AMChatGPT App: Unleash your creativity with the AI assistant! Beginner's Guide The ChatGPT app is an innovative AI assistant that handles a wide range of tasks, including writing, translation, and question answering. It is a tool with endless possibilities that is useful for creative activities and information gathering. In this article, we will explain in an easy-to-understand way for beginners, from how to install the ChatGPT smartphone app, to the features unique to apps such as voice input functions and plugins, as well as the points to keep in mind when using the app. We'll also be taking a closer look at plugin restrictions and device-to-device configuration synchronization

How do I use the Chinese version of ChatGPT? Explanation of registration procedures and feesMay 14, 2025 am 04:56 AM

How do I use the Chinese version of ChatGPT? Explanation of registration procedures and feesMay 14, 2025 am 04:56 AMChatGPT Chinese version: Unlock new experience of Chinese AI dialogue ChatGPT is popular all over the world, did you know it also offers a Chinese version? This powerful AI tool not only supports daily conversations, but also handles professional content and is compatible with Simplified and Traditional Chinese. Whether it is a user in China or a friend who is learning Chinese, you can benefit from it. This article will introduce in detail how to use ChatGPT Chinese version, including account settings, Chinese prompt word input, filter use, and selection of different packages, and analyze potential risks and response strategies. In addition, we will also compare ChatGPT Chinese version with other Chinese AI tools to help you better understand its advantages and application scenarios. OpenAI's latest AI intelligence

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AM

5 AI Agent Myths You Need To Stop Believing NowMay 14, 2025 am 04:54 AMThese can be thought of as the next leap forward in the field of generative AI, which gave us ChatGPT and other large-language-model chatbots. Rather than simply answering questions or generating information, they can take action on our behalf, inter

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AM

An easy-to-understand explanation of the illegality of creating and managing multiple accounts using ChatGPTMay 14, 2025 am 04:50 AMEfficient multiple account management techniques using ChatGPT | A thorough explanation of how to use business and private life! ChatGPT is used in a variety of situations, but some people may be worried about managing multiple accounts. This article will explain in detail how to create multiple accounts for ChatGPT, what to do when using it, and how to operate it safely and efficiently. We also cover important points such as the difference in business and private use, and complying with OpenAI's terms of use, and provide a guide to help you safely utilize multiple accounts. OpenAI

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SublimeText3 English version

Recommended: Win version, supports code prompts!

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

Zend Studio 13.0.1

Powerful PHP integrated development environment

Notepad++7.3.1

Easy-to-use and free code editor