OpenAI’s o3-mini is a reasoning model with strong problem-solving and code generation capabilities. It handles complex queries and works well with structured data. This article shows how to use o3-mini with CrewAI to build a Retrieval-Augmented Generation (RAG) research assistant agent that retrieves information from multiple PDFs and answers user queries about them. We will use CrewAI’s CrewDoclingSource, SerperDevTool, and OpenAI’s o3-mini to automate parts of a research workflow.

Table of Contents

- Building the RAG Agent with o3-mini and CrewAI

- Prerequisites

- Step 1: Install Required Libraries

- Step 2: Import Necessary Modules

- Step 3: Set API Keys

- Step 4: Load Research Documents

- Step 5: Define the AI Model

- Step 6: Configure Web Search Tool

- Step 7: Define Embedding Model for Document Search

- Step 8: Create the AI Agents

- Step 9: Define the Tasks for the Agents

- Step 10: Assemble the Crew

- Step 11: Run the Research Assistant

- Conclusion

- Frequently Asked Questions

Building the RAG Agent with o3-mini and CrewAI

With the overwhelming amount of research being published, an automated RAG-based assistant can help researchers quickly find relevant insights without manually skimming through hundreds of papers. The agent we are going to build will process PDFs to extract key information and answer queries based on the content of the documents. If the required information isn’t found in the PDFs, it will automatically perform a web search to provide relevant insights. This setup can be extended for more advanced tasks, such as summarizing multiple papers, detecting contradictory findings, or generating structured reports.

In this hands-on guide, we will build a research agent that reads articles on DeepSeek-R1 and o3-mini and answers the questions we ask about these models. We will first go through the prerequisites and set up the environment. We will then import the necessary modules, set the API keys, and load the research documents. Next, we will define the AI model, the web search tool, and the embedding model. Finally, we will create the AI agents, define their tasks, and assemble the crew. Once ready, we’ll run the research assistant agent to find out if o3-mini is better and safer than DeepSeek-R1.

Prerequisites

Before diving into the implementation, let’s briefly go over what we need to get started. Having the right setup ensures a smooth development process and avoids unnecessary interruptions.

So, ensure you have:

- A working Python environment (3.10 or above, which recent CrewAI releases require)

- API keys for OpenAI and Serper (a Google Search API that also exposes a Google Scholar endpoint)

With these in place, we are ready to start building!

Step 1: Install Required Libraries

First, we need to install the necessary libraries. These libraries provide the foundation for the document processing, AI agent orchestration, and web search functionalities.

!pip install crewai
!pip install 'crewai[tools]'
!pip install docling

These libraries play a crucial role in building an efficient AI-powered research assistant.

- CrewAI provides a robust framework for designing and managing AI agents, allowing the definition of specialized roles and enabling efficient research automation. It also facilitates task delegation, ensuring smooth collaboration between AI agents.

- The crewai[tools] extra installs the crewai_tools package, whose ready-made tools let agents interact with APIs, perform web searches, and process data.

- Docling specializes in extracting structured knowledge from research documents, making it ideal for processing PDFs, academic papers, and text-based files. In this project, it is used to extract key findings from arXiv research papers.
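After installation, a quick check can confirm that the environment is ready. The sketch below uses only the standard library; the minimum version of 3.10 and the importable module names (crewai, crewai_tools, docling) are assumptions about current releases, and the helper names are hypothetical.

```python
import sys
from importlib.util import find_spec

# Assumption: recent CrewAI releases need Python 3.10 or newer.
MIN_PYTHON = (3, 10)
# Assumption: these are the importable names of the three installed packages.
REQUIRED_MODULES = ("crewai", "crewai_tools", "docling")


def python_is_supported(version=None, minimum=MIN_PYTHON):
    """Return True when the (major, minor) version meets the minimum."""
    current = tuple(version or sys.version_info[:2])
    return current >= tuple(minimum)


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    print("Python version supported:", python_is_supported())
    print("Missing modules:", missing_modules() or "none")
```

If any module is reported as missing, rerunning the matching pip command from this step is usually enough.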

Step 2: Import Necessary Modules

import os
from crewai import LLM, Agent, Crew, Task
from crewai_tools import SerperDevTool
from crewai.knowledge.source.crew_docling_source import CrewDoclingSource

Here:

- The os module reads and sets environment variables, which is where the API keys are stored.

- LLM powers the AI reasoning and response generation.

- The Agent defines specialized roles to handle tasks efficiently.

- Crew manages multiple agents, ensuring seamless collaboration.

- Task assigns and tracks specific responsibilities.

- SerperDevTool runs Google searches through the Serper API; here it is pointed at the Google Scholar endpoint to retrieve external references.

- CrewDoclingSource integrates research documents, enabling structured knowledge extraction and analysis.

Step 3: Set API Keys

os.environ['OPENAI_API_KEY'] = 'your_openai_api_key'
os.environ['SERPER_API_KEY'] = 'your_serper_api_key'

How to Get API Keys?

- OpenAI API Key: Sign up at OpenAI and get an API key.

- Serper API Key: Register at Serper.dev to obtain an API key.

These API keys allow access to AI models and web search capabilities.
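Hardcoding keys in a notebook is convenient for a demo but easy to leak. As an alternative, here is a minimal sketch that reads both keys from variables already exported in the shell and fails early if one is missing. The helper name load_api_keys is hypothetical.

```python
import os

REQUIRED_KEYS = ("OPENAI_API_KEY", "SERPER_API_KEY")


def load_api_keys(env=None, names=REQUIRED_KEYS):
    """Return {name: value} for each key, or raise if any is unset or empty."""
    env = os.environ if env is None else env
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in names}
```

Calling load_api_keys() once at the top of the script surfaces a missing key before any agent runs.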

Step 4: Load Research Documents

In this step, we will load the research papers from arXiv, enabling our AI model to extract insights from them. The selected papers cover key topics:

- https://arxiv.org/pdf/2501.12948: Explores incentivizing reasoning capabilities in LLMs through reinforcement learning (DeepSeek-R1).

- https://arxiv.org/pdf/2501.18438: Compares the safety of o3-mini and DeepSeek-R1.

- https://arxiv.org/pdf/2401.02954: Discusses scaling open-source language models with a long-term perspective.

content_source = CrewDoclingSource(
    file_paths=[
        "https://arxiv.org/pdf/2501.12948",
        "https://arxiv.org/pdf/2501.18438",
        "https://arxiv.org/pdf/2401.02954",
    ],
)

Step 5: Define the AI Model

Now we will define the AI model.

llm = LLM(model="o3-mini", temperature=0)

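- o3-mini: OpenAI’s reasoning model, which powers the agents.

- temperature=0: Requests the least random sampling, so repeated runs of the same query give more consistent answers; it does not guarantee identical output. Reasoning models such as o3-mini restrict sampling parameters, so this setting may be ignored or rejected depending on the library version.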

Step 6: Configure Web Search Tool

To enhance research capabilities, we integrate a web search tool that retrieves relevant academic papers when the required information is not found in the provided documents.

serper_tool = SerperDevTool(
    search_url="https://google.serper.dev/scholar",
    n_results=2  # Fetch top 2 results
)

- search_url="https://google.serper.dev/scholar"

This points the tool at Serper’s Google Scholar endpoint, so searches return scholarly articles and research papers rather than general web pages.

- n_results=2

This limits the number of search results the tool returns. Here it fetches the top two results from Google Scholar, which keeps the agent’s context short and its responses concise.
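To make the two parameters concrete, the sketch below builds (without sending) the kind of HTTP request such a search amounts to. The X-API-KEY header and the q and num payload fields are assumptions based on Serper’s public API, and build_scholar_request is a hypothetical helper, not part of crewai_tools.

```python
import json
import urllib.request


def build_scholar_request(query, api_key, n_results=2,
                          search_url="https://google.serper.dev/scholar"):
    """Build, but do not send, a POST request for a Serper scholar search."""
    # Assumption: the API takes the query as "q" and the result count as "num".
    payload = json.dumps({"q": query, "num": n_results}).encode("utf-8")
    # Assumption: the key is passed in an X-API-KEY header.
    headers = {"X-API-KEY": api_key, "Content-Type": "application/json"}
    return urllib.request.Request(search_url, data=payload,
                                  headers=headers, method="POST")


request = build_scholar_request("o3-mini safety evaluation", "your_serper_api_key")
print(request.full_url)
print(request.data.decode("utf-8"))
```

In the actual pipeline SerperDevTool handles this call itself; the sketch only shows where search_url and n_results end up.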

Step 7: Define Embedding Model for Document Search

To efficiently retrieve relevant information from documents, we use an embedding model that converts text into numerical representations for similarity-based search.

embedder = {
    "provider": "openai",
    "config": {
        "model": "text-embedding-ada-002",
        "api_key": os.environ['OPENAI_API_KEY']
    }
}

Here the embeddings come from OpenAI’s “text-embedding-ada-002” model, and the API key is read from the environment variables to authenticate requests.

CrewAI supports multiple embedding providers, including OpenAI and Gemini (Google’s AI models), allowing flexibility in choosing the best model based on accuracy, performance, and cost considerations.
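To illustrate what similarity-based search means, here is a minimal sketch that ranks document chunks by cosine similarity to a query. The three-dimensional vectors and chunk names are toy values invented for this example; real embeddings from text-embedding-ada-002 have many more dimensions, and CrewAI performs this lookup internally.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, k=1):
    """Return the ids of the k chunks most similar to the query vector."""
    ranked = sorted(
        chunk_vecs,
        key=lambda chunk_id: cosine_similarity(query_vec, chunk_vecs[chunk_id]),
        reverse=True,
    )
    return ranked[:k]


# Toy vectors standing in for real embeddings (hypothetical values).
chunks = {
    "safety_eval": [0.9, 0.1, 0.0],
    "rl_training": [0.1, 0.9, 0.2],
    "scaling_laws": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]
print(top_k(query, chunks, k=2))
```

The chunk whose vector points in nearly the same direction as the query ranks first, which is the idea behind retrieving the passages most relevant to a question.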

Step 8: Create the AI Agents

Now we will create the two AI agents required for our research task: the Document Search Agent and the Web Search Agent.

The Document Search Agent is responsible for retrieving answers from the provided research papers and documents. It acts as an expert in analyzing technical content and extracting relevant insights. If the required information is not found, it can delegate the query to the Web Search Agent for further exploration. The allow_delegation=True setting enables this delegation process.

The Web Search Agent, on the other hand, is designed to search for missing information online using Google Scholar. It steps in only when the Document Search Agent fails to find an answer in the available documents. Unlike the Document Search Agent, it cannot delegate tasks further (allow_delegation=False). It uses the Serper tool, configured for Google Scholar, to fetch relevant academic papers.
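The two agents described above could be defined roughly as follows. This is a sketch, not the exact code used for the outputs in this article: the goal and backstory strings are our own wording, build_agents is a hypothetical helper, and llm and search_tool are assumed to be the LLM and SerperDevTool objects from Steps 5 and 6.

```python
# Plain dictionaries keep the agent settings easy to read and compare.
DOC_AGENT_SPEC = {
    "role": "Document Searcher",
    "goal": ("Answer the question from the provided documents; "
             "delegate to the Web Searcher if they do not contain the answer."),
    "backstory": "You analyze research papers and extract relevant insights.",
    "allow_delegation": True,   # may hand the query to the other agent
    "verbose": True,
}

WEB_AGENT_SPEC = {
    "role": "Web Searcher",
    "goal": "Find the missing information online using Google Scholar.",
    "backstory": "You step in when the documents do not contain the answer.",
    "allow_delegation": False,  # end of the chain, cannot delegate further
    "verbose": True,
}


def build_agents(llm, search_tool):
    """Create both agents; only the web agent receives the search tool."""
    # Imported here so the specs above can be inspected without crewai installed.
    from crewai import Agent

    doc_agent = Agent(llm=llm, **DOC_AGENT_SPEC)
    search_agent = Agent(llm=llm, tools=[search_tool], **WEB_AGENT_SPEC)
    return doc_agent, search_agent
```

A call such as doc_agent, search_agent = build_agents(llm, serper_tool) then yields the pair used in the following steps.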

Step 9: Define the Tasks for the Agents

Now we will create the two tasks for the agents.

The first task involves answering a given question using available research papers and documents.

Task 1: Extract Information from Documents

The next task comes into play when the document-based search does not yield an answer.

Task 2: Perform Web Search if Needed
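Both tasks can be sketched as follows. The description and expected_output strings are illustrative wording rather than the original prompts, build_tasks is a hypothetical helper, and the {question} placeholder is assumed to be filled from the inputs passed when the crew is run.

```python
DOC_TASK_SPEC = {
    "description": ("Answer the question using only the available documents: "
                    "{question}"),
    "expected_output": ("A concise answer grounded in the documents, or a clear "
                        "statement that they do not contain the answer."),
}

SEARCH_TASK_SPEC = {
    "description": ("If the documents did not answer the question, "
                    "search Google Scholar for: {question}"),
    "expected_output": "A short answer that lists the sources it was drawn from.",
}


def build_tasks(doc_agent, search_agent):
    """Bind each task to the agent responsible for it."""
    # Imported here so the specs above can be inspected without crewai installed.
    from crewai import Task

    doc_task = Task(agent=doc_agent, **DOC_TASK_SPEC)
    search_task = Task(agent=search_agent, **SEARCH_TASK_SPEC)
    return doc_task, search_task
```

Keeping the same {question} placeholder in both descriptions means a single input drives the whole run.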

Step 10: Assemble the Crew

The Crew in CrewAI manages agents to complete tasks efficiently by coordinating the Document Search Agent and Web Search Agent. It first searches within the uploaded documents and delegates to web search if needed.

- knowledge_sources=[content_source] provides relevant documents,

- embedder=embedder enables semantic search, and

- verbose=True logs actions for better tracking, ensuring a smooth workflow.
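Putting the three settings above together, the crew could be assembled as in this sketch. The helper names crew_kwargs and build_crew are hypothetical; the agents, tasks, content_source, and embedder are assumed to be the objects from the earlier steps.

```python
def crew_kwargs(agents, tasks, content_source, embedder):
    """Collect the Crew settings discussed above in one dictionary."""
    return {
        "agents": list(agents),
        "tasks": list(tasks),
        "knowledge_sources": [content_source],  # documents to search
        "embedder": embedder,                   # enables semantic search
        "verbose": True,                        # log each step
    }


def build_crew(agents, tasks, content_source, embedder):
    """Create the Crew that coordinates both agents."""
    # Imported here so crew_kwargs can be checked without crewai installed.
    from crewai import Crew

    return Crew(**crew_kwargs(agents, tasks, content_source, embedder))
```

The order of the tasks matters here: listing the document task first means the document search is attempted before the web search.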

Step 11: Run the Research Assistant

The first query targets the loaded documents, to check whether the Document Search Agent can answer on its own. The question is “O3-MINI vs DEEPSEEK-R1: Which one is safer?”

Example Query 1:
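A run can be started with the crew’s kickoff method, as in the minimal sketch below. The helper name ask is hypothetical, and the "question" input key is an assumption that must match the placeholder used in the task descriptions.

```python
QUESTION = "O3-MINI vs DEEPSEEK-R1: Which one is safer?"


def ask(crew, question):
    """Run the crew once for a single question and return its final output."""
    # Assumption: the task descriptions contain a {question} placeholder.
    return crew.kickoff(inputs={"question": question})
```

With the crew from Step 10, print(ask(crew, QUESTION)) starts the run, and the same helper works for any other question string.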

Response:

Here, we can see that the final answer is generated by the Document Searcher, as it successfully located the required information within the provided documents.

Example Query 2:

Here, the answer to the question “Which one is better, O3 Mini or DeepSeek R1?” is not available in the documents. The system first checks whether the Document Search Agent can find an answer; if not, it delegates the task to the Web Search Agent.

Response:

From the output, we observe that the response was generated by the Web Search Agent, since the Document Search Agent did not find the required information. The response also lists the sources from which the answer was retrieved.
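The routing seen in the two queries can be pictured as plain control flow. The sketch below is a conceptual illustration only: in CrewAI the decision to delegate is made by the language model rather than by an explicit if statement, and the two search functions are toy stand-ins invented for this example.

```python
def answer_with_fallback(question, search_documents, search_web):
    """Try the documents first; fall back to web search if nothing is found."""
    doc_answer = search_documents(question)
    if doc_answer:
        return {"answer": doc_answer, "source": "documents"}
    return {"answer": search_web(question), "source": "web"}


# Toy stand-ins for the two agents (hypothetical behavior).
def fake_doc_search(question):
    return "found in the PDFs" if "safer" in question.lower() else None


def fake_web_search(question):
    return "found on the web"


print(answer_with_fallback("Which one is safer?", fake_doc_search, fake_web_search))
print(answer_with_fallback("Which one is better?", fake_doc_search, fake_web_search))
```

Recording the source alongside the answer mirrors what the crew’s output shows: which agent produced the final response.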

Conclusion

In this project, we successfully built an AI-powered research assistant that efficiently retrieves and analyzes information from research papers and the web. By using CrewAI for agent coordination, Docling for document processing, and Serper for scholarly search, we created a system capable of answering complex queries with structured insights.

The assistant first searches within the documents and delegates to web search only when needed, so answers stay grounded in the supplied papers where possible. This automates much of the retrieval and analysis work in a research workflow. With further customization, this framework can be expanded to support more data sources, advanced reasoning, and improved research workflows.

You can explore amazing projects featuring OpenAI o3-mini in our free course – Getting Started with o3-mini!

Frequently Asked Questions

Q1. What is CrewAI? A. CrewAI is a framework that allows you to create and manage AI agents with specific roles and tasks. It enables collaboration between multiple AI agents to automate complex workflows.

Q2. How does CrewAI manage multiple agents? A. CrewAI uses a structured approach where each agent has a defined role and can delegate tasks if needed. A Crew object orchestrates these agents to complete tasks efficiently.

Q3. What is CrewDoclingSource? A. CrewDoclingSource is a document processing tool in CrewAI that extracts structured knowledge from research papers, PDFs, and text-based documents.

Q4. What is Serper API? A. Serper API is a tool that allows AI applications to perform Google Search queries, including searches on Google Scholar for academic papers.

Q5. Is Serper API free to use? A. Serper API offers both free and paid plans, with limitations on the number of search requests in the free tier.

Q6. What is the difference between Serper API and traditional Google Search? A. Unlike standard Google Search, Serper API provides structured access to search results, allowing AI agents to extract relevant research papers efficiently.

Q7. Can CrewDoclingSource handle multiple file formats? A. Yes, it supports common research document formats, including PDFs and text-based files.

The above is the detailed content of RAG-Based Research Assistant Using o3-mini and CrewAI. For more information, please follow other related articles on the PHP Chinese website!

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AM

AI Game Development Enters Its Agentic Era With Upheaval's Dreamer PortalMay 02, 2025 am 11:17 AMUpheaval Games: Revolutionizing Game Development with AI Agents Upheaval, a game development studio comprised of veterans from industry giants like Blizzard and Obsidian, is poised to revolutionize game creation with its innovative AI-powered platfor

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AM

Uber Wants To Be Your Robotaxi Shop, Will Providers Let Them?May 02, 2025 am 11:16 AMUber's RoboTaxi Strategy: A Ride-Hail Ecosystem for Autonomous Vehicles At the recent Curbivore conference, Uber's Richard Willder unveiled their strategy to become the ride-hail platform for robotaxi providers. Leveraging their dominant position in

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AM

AI Agents Playing Video Games Will Transform Future RobotsMay 02, 2025 am 11:15 AMVideo games are proving to be invaluable testing grounds for cutting-edge AI research, particularly in the development of autonomous agents and real-world robots, even potentially contributing to the quest for Artificial General Intelligence (AGI). A

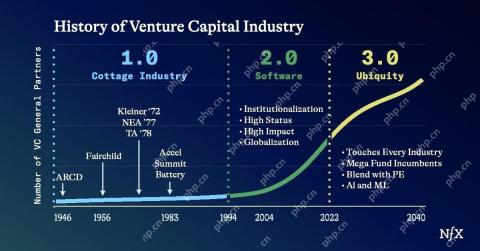

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AM

The Startup Industrial Complex, VC 3.0, And James Currier's ManifestoMay 02, 2025 am 11:14 AMThe impact of the evolving venture capital landscape is evident in the media, financial reports, and everyday conversations. However, the specific consequences for investors, startups, and funds are often overlooked. Venture Capital 3.0: A Paradigm

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AM

Adobe Updates Creative Cloud And Firefly At Adobe MAX London 2025May 02, 2025 am 11:13 AMAdobe MAX London 2025 delivered significant updates to Creative Cloud and Firefly, reflecting a strategic shift towards accessibility and generative AI. This analysis incorporates insights from pre-event briefings with Adobe leadership. (Note: Adob

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AM

Everything Meta Announced At LlamaConMay 02, 2025 am 11:12 AMMeta's LlamaCon announcements showcase a comprehensive AI strategy designed to compete directly with closed AI systems like OpenAI's, while simultaneously creating new revenue streams for its open-source models. This multifaceted approach targets bo

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AM

The Brewing Controversy Over The Proposition That AI Is Nothing More Than Just Normal TechnologyMay 02, 2025 am 11:10 AMThere are serious differences in the field of artificial intelligence on this conclusion. Some insist that it is time to expose the "emperor's new clothes", while others strongly oppose the idea that artificial intelligence is just ordinary technology. Let's discuss it. An analysis of this innovative AI breakthrough is part of my ongoing Forbes column that covers the latest advancements in the field of AI, including identifying and explaining a variety of influential AI complexities (click here to view the link). Artificial intelligence as a common technology First, some basic knowledge is needed to lay the foundation for this important discussion. There is currently a large amount of research dedicated to further developing artificial intelligence. The overall goal is to achieve artificial general intelligence (AGI) and even possible artificial super intelligence (AS)

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AM

Model Citizens, Why AI Value Is The Next Business YardstickMay 02, 2025 am 11:09 AMThe effectiveness of a company's AI model is now a key performance indicator. Since the AI boom, generative AI has been used for everything from composing birthday invitations to writing software code. This has led to a proliferation of language mod

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

WebStorm Mac version

Useful JavaScript development tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.