OpenAI's o1 model, unveiled in September 2024, showcased "advanced reasoning" capabilities through large-scale reinforcement learning. DeepSeek, an AI research lab, has successfully replicated this behavior and openly published their methodology. This article explores the core concepts and underlying mechanisms of this breakthrough.

OpenAI's o1 model changed how Large Language Models (LLMs) are trained by introducing "thinking" tokens. These special tokens act as a scratchpad in which the model works through a problem or user query step by step. A key finding was that performance improves with test-time compute: the more tokens the model generates, the better its responses tend to be. The following graph (from OpenAI's blog) illustrates this:

The left plot shows the established neural scaling laws, where longer training (train-time compute) improves performance. The right plot reveals a novel scaling law: increased token generation during inference (test-time compute) enhances performance.

Thinking Tokens

o1's "thinking" tokens demarcate the model's chain of thought (CoT) reasoning. Their importance is twofold: they clearly delineate the reasoning process for UI development and provide a human-readable record of the model's thought process. While OpenAI kept the training details confidential, DeepSeek's research sheds light on this.

DeepSeek's Research

DeepSeek's January 2025 publication, "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning" [2], showed how o1-style reasoning behavior can be trained. It introduced two models: DeepSeek-R1-Zero (trained solely with reinforcement learning) and DeepSeek-R1 (trained with a mix of supervised fine-tuning (SFT) and RL). R1-Zero matters for two reasons: it generated training data for R1, and it demonstrated emergent reasoning abilities that were not explicitly programmed. In particular, R1-Zero discovered CoT reasoning and test-time compute scaling through RL alone.

DeepSeek-R1-Zero (RL Only)

Reinforcement learning (RL) lets a model learn through trial and error: it receives reward signals that do not need to have an explicit functional relationship to the model's parameters. Three key aspects of R1-Zero's training are highlighted:

- Prompt Template: A simple template uses <think></think> and <answer></answer> tags to structure the model's response:

<code>A conversation between User and Assistant. The user asks a question, and the
Assistant solves it. The assistant first thinks about the reasoning process in
the mind and then provides the user with the answer. The reasoning process and
answer are enclosed within <think></think> and <answer></answer> tags,
respectively, i.e., <think> reasoning process here </think><answer> answer here </answer>. User: {prompt}. Assistant:</code>

The minimal prompting avoids biasing responses and allows for natural evolution during RL.

- Reward Signal: A rule-based system evaluates accuracy and formatting, avoiding potential "reward hacking" issues often associated with neural reward models.

- GRPO (Group Relative Policy Optimization): This RL approach samples a group of responses for each prompt and uses their rewards, relative to the group, to update the model parameters. It incorporates clipping and KL-divergence regularization for stable training. The loss function is given in the DeepSeek-R1 paper [2].
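The reward and GRPO items above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DeepSeek's implementation: the scoring values (1.0 for correct format, 1.0 for a correct answer), the exact-match answer check, and the defaults `eps=0.2` and `beta=0.04` are chosen only for the example, and all function names are hypothetical. The group-normalized advantage, the clipped probability ratio, and the KL penalty follow the general form of the objective described in the paper [2].

```python
import math
import re

# Matches a response of the form <think>...</think><answer>...</answer>.
RESPONSE_RE = re.compile(
    r"^\s*<think>(.*?)</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL
)


def rule_based_reward(response: str, reference: str) -> float:
    """Hypothetical scoring: 1.0 for valid format, plus 1.0 for a matching answer."""
    match = RESPONSE_RE.match(response)
    if match is None:
        return 0.0  # malformed output earns nothing
    answer = match.group(2).strip()
    return 1.0 + (1.0 if answer == reference.strip() else 0.0)


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Normalize each reward by the mean and standard deviation of its group."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    if std == 0.0:
        return [0.0 for _ in rewards]  # identical rewards carry no signal
    return [(r - mean) / std for r in rewards]


def clipped_term(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Clipped surrogate for one response; ratio = new policy prob / old policy prob."""
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)


def kl_estimate(ref_over_policy: float) -> float:
    """Non-negative KL penalty estimate x - log(x) - 1, with x = ref prob / policy prob."""
    return ref_over_policy - math.log(ref_over_policy) - 1.0


def grpo_objective(ratios, ref_ratios, advantages, eps=0.2, beta=0.04):
    """Average over the group of (clipped surrogate - beta * KL penalty)."""
    terms = [
        clipped_term(r, a, eps) - beta * kl_estimate(k)
        for r, k, a in zip(ratios, ref_ratios, advantages)
    ]
    return sum(terms) / len(terms)


# A group of four sampled responses to the prompt "What is 2 + 2?".
responses = [
    "<think>2 + 2 = 4</think><answer>4</answer>",
    "<think>2 + 2 = 5</think><answer>5</answer>",
    "The answer is 4",
    "<think>double 2</think><answer>4</answer>",
]
rewards = [rule_based_reward(r, "4") for r in responses]
advantages = group_relative_advantages(rewards)

# Before any update, the new, old, and reference policies coincide (all ratios are 1).
objective = grpo_objective([1.0] * 4, [1.0] * 4, advantages)
```

Because advantages are computed relative to the group, no separate value model is needed: a response is reinforced only to the extent that it scores better than its siblings for the same prompt.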

R1-Zero Results (Emergent Abilities)

Remarkably, R1-Zero implicitly learned to improve responses through test-time compute and exhibited human-like internal monologues, often including verification steps. An example is provided in the original article.

DeepSeek-R1 (SFT + RL)

DeepSeek-R1 addresses R1-Zero's readability issues through a four-step training process combining SFT and RL:

- SFT with reasoning data: Initial SFT uses thousands of long CoT examples to establish a reasoning framework.

- R1-Zero style RL (+ language consistency reward): RL training similar to R1-Zero, but with an added language consistency reward.

- SFT with mixed data: SFT with both reasoning and non-reasoning data to broaden the model's capabilities.

- RL + RLHF: Final RL training includes reasoning training and RLHF for improved helpfulness and harmlessness.
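To make the language consistency reward from the second step more concrete, here is a small Python sketch. It assumes the reward is the share of target-language words in the chain of thought and that it is simply added to the accuracy reward. The ASCII check is a crude stand-in for real language detection, and both function names are hypothetical.

```python
import re


def language_consistency(cot: str) -> float:
    """Fraction of word tokens that are pure ASCII (a rough proxy for English)."""
    tokens = re.findall(r"\w+", cot)
    if not tokens:
        return 0.0
    target = sum(1 for token in tokens if token.isascii())
    return target / len(tokens)


def combined_reward(accuracy_reward: float, cot: str) -> float:
    """Assumed combination: accuracy reward plus language consistency reward."""
    return accuracy_reward + language_consistency(cot)


# A chain of thought that mixes English with one Chinese word.
mixed_score = language_consistency("First 计算 the sum")
clean_total = combined_reward(1.0, "all english words")
```

A reward of this kind penalizes chains of thought that drift between languages, which is one of the readability problems R1 was designed to address.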

Accessing R1-Zero and R1

DeepSeek made the model weights publicly available, allowing access through various inference providers and local deployments (DeepSeek, Together, Hyperbolic, Ollama, Hugging Face).

Conclusions

o1 introduced test-time compute as a new dimension for LLM improvement. DeepSeek's replication and open publication suggest that reinforcement learning can, on its own, produce reasoning behavior that was never explicitly taught, and so may let models move beyond the limits of their human-written training data. This opens exciting possibilities for future scientific and technological advancements.

[Note: Links to external resources were omitted as they are not relevant to the paraphrased content and could be considered promotional.]

The above is the detailed content of How to Train LLMs to 'Think” (o1 & DeepSeek-R1). For more information, please follow other related articles on the PHP Chinese website!

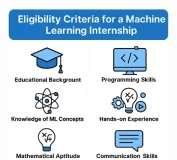

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AM

10 Machine Learning Internships in India (2025)May 12, 2025 am 10:47 AMLand Your Dream Machine Learning Internship in India (2025)! For students and early-career professionals, a machine learning internship is the perfect launchpad for a rewarding career. Indian companies across diverse sectors – from cutting-edge GenA

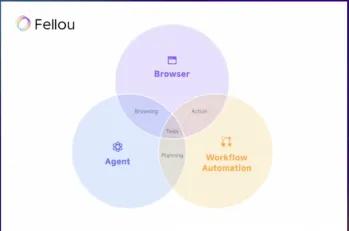

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AM

Try Fellou AI and Say Goodbye to Google and ChatGPTMay 12, 2025 am 10:26 AMThe landscape of online browsing has undergone a significant transformation in the past year. This shift began with enhanced, personalized search results from platforms like Perplexity and Copilot, and accelerated with ChatGPT's integration of web s

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AM

Personal Hacking Will Be A Pretty Fierce BearMay 11, 2025 am 11:09 AMCyberattacks are evolving. Gone are the days of generic phishing emails. The future of cybercrime is hyper-personalized, leveraging readily available online data and AI to craft highly targeted attacks. Imagine a scammer who knows your job, your f

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AM

Pope Leo XIV Reveals How AI Influenced His Name ChoiceMay 11, 2025 am 11:07 AMIn his inaugural address to the College of Cardinals, Chicago-born Robert Francis Prevost, the newly elected Pope Leo XIV, discussed the influence of his namesake, Pope Leo XIII, whose papacy (1878-1903) coincided with the dawn of the automobile and

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AM

FastAPI-MCP Tutorial for Beginners and Experts - Analytics VidhyaMay 11, 2025 am 10:56 AMThis tutorial demonstrates how to integrate your Large Language Model (LLM) with external tools using the Model Context Protocol (MCP) and FastAPI. We'll build a simple web application using FastAPI and convert it into an MCP server, enabling your L

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AM

Dia-1.6B TTS : Best Text-to-Dialogue Generation Model - Analytics VidhyaMay 11, 2025 am 10:27 AMExplore Dia-1.6B: A groundbreaking text-to-speech model developed by two undergraduates with zero funding! This 1.6 billion parameter model generates remarkably realistic speech, including nonverbal cues like laughter and sneezes. This article guide

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AM

3 Ways AI Can Make Mentorship More Meaningful Than EverMay 10, 2025 am 11:17 AMI wholeheartedly agree. My success is inextricably linked to the guidance of my mentors. Their insights, particularly regarding business management, formed the bedrock of my beliefs and practices. This experience underscores my commitment to mentor

AI Unearths New Potential In The Mining IndustryMay 10, 2025 am 11:16 AM

AI Unearths New Potential In The Mining IndustryMay 10, 2025 am 11:16 AMAI Enhanced Mining Equipment The mining operation environment is harsh and dangerous. Artificial intelligence systems help improve overall efficiency and security by removing humans from the most dangerous environments and enhancing human capabilities. Artificial intelligence is increasingly used to power autonomous trucks, drills and loaders used in mining operations. These AI-powered vehicles can operate accurately in hazardous environments, thereby increasing safety and productivity. Some companies have developed autonomous mining vehicles for large-scale mining operations. Equipment operating in challenging environments requires ongoing maintenance. However, maintenance can keep critical devices offline and consume resources. More precise maintenance means increased uptime for expensive and necessary equipment and significant cost savings. AI-driven

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Dreamweaver Mac version

Visual web development tools

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

SublimeText3 English version

Recommended: Win version, supports code prompts!

WebStorm Mac version

Useful JavaScript development tools