Multimodal Retrieval-Augmented Generation (RAG) systems extend what AI applications can do by integrating diverse data types (text, images, audio, and video) to produce more nuanced, context-aware responses. Traditional RAG, by contrast, works with text alone. A key advance here is Nomic vision embeddings, which place visual and textual data in a single embedding space so the two modalities can be searched and compared directly. High-quality embeddings of this kind improve information retrieval, bridge the gap between different forms of content, and ultimately enrich the user experience.

Learning Objectives

- Grasp the fundamentals of multimodal RAG and its advantages over traditional RAG.

- Understand the role of Nomic Vision Embeddings in unifying text and image embedding spaces.

- Compare Nomic Vision Embeddings with CLIP models, analyzing performance benchmarks.

- Implement a multimodal RAG system in Python using Nomic Vision and Text Embeddings.

- Learn to extract and process textual and visual data from PDFs for multimodal retrieval.

*This article is part of the Data Science Blogathon.*

Table of contents

- What is Multimodal RAG?

- Nomic Vision Embeddings

- Performance Benchmarks of Nomic Vision Embeddings

- Hands-on Python Implementation of Multimodal RAG with Nomic Vision Embeddings

- Step 1: Installing Necessary Libraries

- Step 2: Setting OpenAI API Key and Importing Libraries

- Step 3: Extracting Images From PDF

- Step 4: Extracting Text From PDF

- Step 5: Saving Extracted Text and Images

- Step 6: Chunking Text Data

- Step 7: Loading Nomic Embedding Models

- Step 8: Generating Embeddings

- Step 9: Storing Text Embeddings in Qdrant

- Step 10: Storing Image Embeddings in Qdrant

- Step 11: Creating a Multimodal Retriever

- Step 12: Building a Multimodal RAG with LangChain

- Querying the Model

- Conclusion


What is Multimodal RAG?

Multimodal RAG represents a significant AI advancement, building upon traditional RAG by incorporating diverse data types. Unlike conventional systems that primarily handle text, multimodal RAG processes and integrates multiple data forms simultaneously. This leads to more comprehensive understanding and context-aware responses across different modalities.

Key Multimodal RAG Components:

- Data Ingestion: Data from various sources is ingested by modality-specific processors (for example, PDF parsers or image decoders) that validate, clean, and normalize it.

- Vector Representation: Each modality is encoded by a neural network (e.g., a CLIP image encoder for images, a BERT-style encoder for text) into vector embeddings. For cross-modal search, these encoders must map into a shared vector space so that semantic relationships are preserved across modalities.

- Vector Database Storage: Embeddings are stored in a vector database (e.g., Qdrant) or an index library (e.g., FAISS), which rely on approximate nearest-neighbor indexes such as HNSW for efficient retrieval.

- Query Processing: Each incoming query is embedded into the same vector space as the stored data, and the resulting query vector is used to search the collections for the relevant modalities.
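The query-processing step can be illustrated with a toy example. The sketch below uses NumPy in place of a real vector database and made-up 3-dimensional vectors in place of real embeddings; the helper name `top_k_cosine` is hypothetical. It normalizes the vectors, scores every stored vector against the query by cosine similarity, and returns the best matches, which is the exact search that an HNSW index approximates at scale.

```python
import numpy as np


def top_k_cosine(query_vec, index_vecs, k=2):
    """Return (indices, scores) of the k stored vectors most similar to the query."""
    # L2-normalize so that a dot product equals cosine similarity
    q = query_vec / np.linalg.norm(query_vec)
    m = index_vecs / np.linalg.norm(index_vecs, axis=1, keepdims=True)
    scores = m @ q
    # argsort is ascending; reverse it for descending similarity and keep the top k
    order = np.argsort(scores)[::-1][:k]
    return order, scores[order]


# Toy "database": each row stands in for one stored embedding (a text chunk or an image)
index = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.7, 0.0],
])
query = np.array([1.0, 0.1, 0.0])

ids, sims = top_k_cosine(query, index, k=2)
print(ids, sims.round(3))
```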

Nomic Vision Embeddings

Nomic vision embeddings are a key innovation: they create a unified embedding space for visual and textual data. Nomic Embed Vision v1 and v1.5, developed by Nomic AI, share the same latent space as their text counterparts (Nomic Embed Text v1 and v1.5), which makes them well suited to multimodal tasks such as text-to-image retrieval. With a relatively small parameter count (92M), Nomic Embed Vision is efficient enough for large-scale applications.

Addressing CLIP Model Limitations:

While CLIP excels at zero-shot tasks, its text encoder underperforms on tasks beyond image retrieval, as MTEB benchmark results show. Nomic Embed Vision addresses this by aligning its vision encoder with the Nomic Embed Text latent space.

Nomic Embed Vision was trained against Nomic Embed Text with the text encoder frozen: only the vision encoder was updated, using image-text pairs. Because the text encoder's weights never change, the new image embeddings are backward compatible with existing Nomic Embed Text embeddings.

Performance Benchmarks of Nomic Vision Embeddings

CLIP models, while strong at zero-shot tasks, are weaker on unimodal text tasks such as semantic similarity (MTEB benchmarks). Because Nomic Embed Vision is aligned with the Nomic Embed Text latent space, the Nomic models perform strongly across image, text, and multimodal tasks, as measured by the ImageNet zero-shot, MTEB, and DataComp benchmarks.

Hands-on Python Implementation of Multimodal RAG with Nomic Vision Embeddings

This tutorial builds a multimodal RAG system that retrieves information from a PDF containing both text and images. It runs on Google Colab with a T4 GPU.

Step 1: Installing Necessary Libraries

Install the required Python libraries: OpenAI, Qdrant, Transformers, Torch, PyMuPDF, and related packages.

Step 2: Setting OpenAI API Key and Importing Libraries

Set the OpenAI API key and import the required libraries (PyMuPDF, PIL, LangChain, OpenAI, and others).
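A minimal sketch of the key-handling part of this step, assuming the key lives in the `OPENAI_API_KEY` environment variable (the variable the OpenAI and LangChain OpenAI clients read by default) and is otherwise prompted for with `getpass`. The helper name `ensure_openai_key` is hypothetical.

```python
import os
from getpass import getpass


def ensure_openai_key() -> str:
    """Return the OpenAI API key, prompting once if it is not already set."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        # getpass hides the typed key, which keeps it out of notebook output
        key = getpass("Enter your OpenAI API key: ")
        os.environ["OPENAI_API_KEY"] = key
    return key
```

Call `ensure_openai_key()` once near the top of the notebook, before creating any OpenAI or LangChain client.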

Step 3: Extracting Images From PDF

Extract the embedded images from the PDF with PyMuPDF and save them to a directory.
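A minimal sketch of image extraction, assuming a PyMuPDF document opened with `fitz.open` (recent PyMuPDF releases also allow `import pymupdf`). The function name `extract_images`, the output directory, and the `page<N>_img<M>.<ext>` naming scheme are illustrative choices, not taken from the original code. `page.get_images(full=True)` lists each image's cross-reference number (xref), and `doc.extract_image(xref)` returns the raw bytes together with the file extension.

```python
import os


def extract_images(doc, out_dir="extracted_images"):
    """Save every embedded image in an open PyMuPDF document; return the saved paths."""
    os.makedirs(out_dir, exist_ok=True)
    saved = []
    for page_number, page in enumerate(doc, start=1):
        # full=True returns one tuple per image; its first element is the image xref
        for img_number, img in enumerate(page.get_images(full=True), start=1):
            xref = img[0]
            info = doc.extract_image(xref)  # dict with raw "image" bytes and file "ext"
            path = os.path.join(out_dir, f"page{page_number}_img{img_number}.{info['ext']}")
            with open(path, "wb") as fh:
                fh.write(info["image"])
            saved.append(path)
    return saved


# Usage (requires PyMuPDF); "document.pdf" is a placeholder file name
# import fitz
# with fitz.open("document.pdf") as pdf:
#     image_paths = extract_images(pdf)
```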

Step 4: Extracting Text From PDF

Extract the text of each PDF page with PyMuPDF.
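A sketch of per-page text extraction under the same assumption (an open PyMuPDF document). The helper `extract_page_texts` is hypothetical; it records the page number alongside the text so that retrieved chunks can later be traced back to their page, and it skips pages with no extractable text.

```python
def extract_page_texts(doc):
    """Return one {"page", "text"} record per page of an open PyMuPDF document."""
    records = []
    for page_number, page in enumerate(doc, start=1):
        text = page.get_text()  # plain-text extraction ("text" is the default mode)
        if text.strip():  # skip pages with no extractable text, e.g. scanned pages
            records.append({"page": page_number, "text": text})
    return records


# Usage (requires PyMuPDF); "document.pdf" is a placeholder file name
# import fitz
# with fitz.open("document.pdf") as pdf:
#     pages = extract_page_texts(pdf)
```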

Step 5: Saving Extracted Text and Images

Save the extracted images and text so that later steps can reuse them.
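One way to persist the results, assuming page records of the form `{"page": ..., "text": ...}` and a list of saved image file paths. The helper name `save_extracted`, the JSON layout, and the file names `pages.json` and `images.json` are hypothetical; any format works as long as later steps can reload it.

```python
import json
import os


def save_extracted(text_records, image_paths, out_dir="extracted"):
    """Write page texts and the list of image files to JSON so later steps can reload them."""
    os.makedirs(out_dir, exist_ok=True)
    text_file = os.path.join(out_dir, "pages.json")
    with open(text_file, "w", encoding="utf-8") as fh:
        # ensure_ascii=False keeps non-ASCII characters readable in the file
        json.dump(text_records, fh, ensure_ascii=False, indent=2)
    manifest_file = os.path.join(out_dir, "images.json")
    with open(manifest_file, "w", encoding="utf-8") as fh:
        json.dump(list(image_paths), fh, indent=2)
    return text_file, manifest_file
```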

Step 6: Chunking Text Data

Split the extracted text into smaller chunks with LangChain's RecursiveCharacterTextSplitter.
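A sketch of the chunking step with LangChain's `RecursiveCharacterTextSplitter`. The `chunk_size` and `chunk_overlap` values are assumptions rather than the original settings, and the import path assumes the standalone `langchain-text-splitters` package (older LangChain releases expose the same class from `langchain.text_splitter`). The splitter tries to break on paragraphs first, then lines, then words, so chunks tend to end at natural boundaries; `create_documents` attaches each page number to its chunks as metadata. The helper name `chunk_pages` is hypothetical.

```python
def chunk_pages(page_records, chunk_size=1000, chunk_overlap=200):
    """Split each page's text into overlapping chunks, keeping the page number as metadata."""
    # Imported here so the helper can be defined without LangChain installed
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    splitter = RecursiveCharacterTextSplitter(chunk_size=chunk_size, chunk_overlap=chunk_overlap)
    # create_documents returns LangChain Document objects with page_content and metadata
    return splitter.create_documents(
        [r["text"] for r in page_records],
        metadatas=[{"page": r["page"]} for r in page_records],
    )
```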

Step 7: Loading Nomic Embedding Models

Load Nomic's text and vision embedding models with Hugging Face Transformers.
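A sketch of model loading, following the usage shown on the Hugging Face model cards for `nomic-ai/nomic-embed-text-v1.5` and `nomic-ai/nomic-embed-vision-v1.5` (the original may have used different model versions). Both models ship custom model code, so `trust_remote_code=True` is required; the function name `load_nomic_models` is hypothetical.

```python
def load_nomic_models(device="cpu"):
    """Load the Nomic text and vision embedding models from the Hugging Face Hub."""
    # Requires transformers and torch; the remote model code may also need einops
    from transformers import AutoImageProcessor, AutoModel, AutoTokenizer

    # Text side: nomic-embed-text-v1.5 ships custom model code, hence trust_remote_code=True
    tokenizer = AutoTokenizer.from_pretrained("nomic-ai/nomic-embed-text-v1.5")
    text_model = AutoModel.from_pretrained(
        "nomic-ai/nomic-embed-text-v1.5", trust_remote_code=True
    ).to(device).eval()

    # Vision side: nomic-embed-vision-v1.5 shares the text model's embedding space
    processor = AutoImageProcessor.from_pretrained("nomic-ai/nomic-embed-vision-v1.5")
    vision_model = AutoModel.from_pretrained(
        "nomic-ai/nomic-embed-vision-v1.5", trust_remote_code=True
    ).to(device).eval()
    return tokenizer, text_model, processor, vision_model
```

On a Colab T4 runtime, pass `device="cuda"` to run both models on the GPU.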

Step 8: Generating Embeddings

Generate embeddings for the text chunks and the extracted images.
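A sketch of the embedding step, assuming the tokenizer and models loaded in Step 7. Text embeddings follow the pipeline on the nomic-embed-text-v1.5 model card: a task prefix, mean pooling over non-padding tokens, layer normalization, and L2 normalization. Image embeddings take the first (CLS) token of the vision model's last hidden state and L2-normalize it. The helper names are hypothetical, and for a large PDF you would embed in batches rather than all at once.

```python
def embed_texts(texts, tokenizer, text_model, prefix="search_document: "):
    """Embed texts with nomic-embed-text-v1.5 (mean pooling, layer norm, L2 normalization)."""
    import torch
    import torch.nn.functional as F

    # Nomic text models expect a task prefix; stored chunks use "search_document: "
    batch = tokenizer([prefix + t for t in texts], padding=True, truncation=True, return_tensors="pt")
    batch = {k: v.to(text_model.device) for k, v in batch.items()}
    with torch.no_grad():
        token_embs = text_model(**batch)[0]  # per-token hidden states
    # Mean-pool token embeddings, ignoring padding positions
    mask = batch["attention_mask"].unsqueeze(-1).float()
    pooled = (token_embs * mask).sum(dim=1) / mask.sum(dim=1).clamp(min=1e-9)
    pooled = F.layer_norm(pooled, normalized_shape=(pooled.shape[1],))
    return F.normalize(pooled, p=2, dim=1).cpu().numpy()


def embed_images(image_paths, processor, vision_model):
    """Embed images with nomic-embed-vision-v1.5 (CLS token, L2 normalization)."""
    import torch
    import torch.nn.functional as F
    from PIL import Image

    images = [Image.open(p).convert("RGB") for p in image_paths]
    inputs = processor(images, return_tensors="pt").to(vision_model.device)
    with torch.no_grad():
        hidden = vision_model(**inputs).last_hidden_state
    # The first token acts as the image-level embedding
    return F.normalize(hidden[:, 0], p=2, dim=1).cpu().numpy()
```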

Step 9: Storing Text Embeddings in Qdrant

Store the text embeddings in a Qdrant collection.
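A sketch of the text storage step with `qdrant-client`, assuming the chunks are LangChain `Document` objects with a `page` metadata field and that `vectors` holds one embedding per chunk. The collection name `pdf_text` and the helper name are hypothetical. Cosine distance matches the L2-normalized Nomic embeddings, and the chunk text is stored as payload so it can be returned directly at query time. Note that `create_collection` raises an error if the collection already exists.

```python
def store_text_chunks(client, chunks, vectors, collection_name="pdf_text"):
    """Create a cosine-distance collection and upsert one point per text chunk."""
    from qdrant_client.models import Distance, PointStruct, VectorParams

    client.create_collection(
        collection_name=collection_name,
        # Size is taken from the data; full-size Nomic v1.5 embeddings have 768 dimensions
        vectors_config=VectorParams(size=len(vectors[0]), distance=Distance.COSINE),
    )
    points = [
        PointStruct(
            id=i,
            vector=[float(x) for x in vec],
            payload={"text": chunk.page_content, "page": chunk.metadata.get("page")},
        )
        for i, (chunk, vec) in enumerate(zip(chunks, vectors))
    ]
    client.upsert(collection_name=collection_name, points=points)
    return len(points)


# Usage sketch: an in-memory Qdrant instance is enough for a single PDF
# from qdrant_client import QdrantClient
# client = QdrantClient(":memory:")
# store_text_chunks(client, chunks, text_vectors)
```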

Step 10: Storing Image Embeddings in Qdrant

Store the image embeddings in a separate Qdrant collection.
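Image embeddings go into their own collection with the same pattern. Keeping the modalities separate lets the retriever take a fixed number of results from each, which is one plausible reason for this design: similarity scores between a text query and images tend to be lower than text-to-text scores, so a single mixed collection could let text chunks crowd out images. The collection name `pdf_images`, the `image_path` payload key, and the helper name are hypothetical.

```python
def store_image_embeddings(client, image_paths, vectors, collection_name="pdf_images"):
    """Store image embeddings in their own collection, keeping the file path as payload."""
    from qdrant_client.models import Distance, PointStruct, VectorParams

    client.create_collection(
        collection_name=collection_name,
        vectors_config=VectorParams(size=len(vectors[0]), distance=Distance.COSINE),
    )
    points = [
        PointStruct(id=i, vector=[float(x) for x in vec], payload={"image_path": path})
        for i, (path, vec) in enumerate(zip(image_paths, vectors))
    ]
    client.upsert(collection_name=collection_name, points=points)
    return len(points)
```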

Step 11: Creating a Multimodal Retriever

Create a function that embeds a query and retrieves the most relevant text chunks and images from both collections.
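A sketch of the retriever, written against the `query_points` search method available in recent `qdrant-client` releases (1.10 and later; older code often uses `client.search`). `embed_query` is any function that maps a query string to a vector, for example a Nomic text embedding computed with the `search_query: ` prefix. The function name, collection names, and payload keys are hypothetical and must match whatever was used when the embeddings were stored.

```python
def retrieve(query, embed_query, client, k_text=3, k_images=2,
             text_collection="pdf_text", image_collection="pdf_images"):
    """Search both collections with one query vector; return text chunks and image paths."""
    # embed_query should apply the "search_query: " prefix expected by Nomic text models
    vector = [float(x) for x in embed_query(query)]
    # The shared embedding space lets the same text vector search the image collection
    text_hits = client.query_points(
        collection_name=text_collection, query=vector, limit=k_text
    ).points
    image_hits = client.query_points(
        collection_name=image_collection, query=vector, limit=k_images
    ).points
    return {
        "texts": [hit.payload["text"] for hit in text_hits],
        "images": [hit.payload["image_path"] for hit in image_hits],
    }
```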

Step 12: Building a Multimodal RAG with LangChain

Use LangChain to pass the retrieved text and images to a language model (e.g., GPT-4) and generate a response.
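A sketch of the generation step with LangChain's OpenAI integration (`langchain-openai`). Retrieved text becomes context in the prompt, and each retrieved image is attached as a base64 data URL in OpenAI's `image_url` message format. The model name `gpt-4o` is an assumption (any vision-capable OpenAI chat model would do), as are the prompt wording and the helper names. OpenAI accepts PNG, JPEG, GIF, and WebP images, so other formats extracted from a PDF may need converting first.

```python
import base64


def build_message_content(question, texts, image_paths):
    """Assemble a multimodal message body: retrieved text as context, images as data URLs."""
    context = "\n\n".join(texts)
    content = [{
        "type": "text",
        "text": f"Answer the question using the context and images.\n\nContext:\n{context}\n\nQuestion: {question}",
    }]
    for path in image_paths:
        with open(path, "rb") as fh:
            b64 = base64.b64encode(fh.read()).decode("ascii")
        # The MIME subtype is derived from the file extension (jpg is mapped to jpeg)
        ext = path.rsplit(".", 1)[-1].lower()
        mime = "jpeg" if ext in ("jpg", "jpeg") else ext
        content.append({"type": "image_url", "image_url": {"url": f"data:image/{mime};base64,{b64}"}})
    return content


def answer(question, retrieved, model_name="gpt-4o"):
    """Send the question plus retrieved context to a vision-capable OpenAI chat model."""
    from langchain_core.messages import HumanMessage
    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model=model_name, temperature=0)
    message = HumanMessage(
        content=build_message_content(question, retrieved["texts"], retrieved["images"])
    )
    return llm.invoke([message]).content
```

Separating message construction from the model call keeps the prompt logic testable without an API key.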

Querying the Model

The example queries demonstrate the system's ability to retrieve information from both the text and the images in the PDF.

Conclusion

Nomic vision embeddings strengthen multimodal RAG by letting visual and textual data interact through a single embedding space. This addresses limitations of models like CLIP, whose text encoders are weaker on text-only tasks, and yields strong performance across image, text, and multimodal tasks. The result is richer, more context-aware responses for users.

Key Takeaways

- Multimodal RAG integrates diverse data types for more comprehensive understanding.

- Nomic vision embeddings unify visual and textual data for improved information retrieval.

- The pipeline combines modality-specific processing, vector embeddings, and vector-database storage for efficient retrieval.

- Nomic Embed Vision overcomes CLIP's limitations in unimodal tasks.


Note: The code snippets have been omitted for brevity, but the core functionality and steps remain accurately described. The original input contained extensive code; including it all would make this response excessively long. Refer to the original input for the complete code implementation.

The above is the detailed content of Enhancing RAG Systems with Nomic Embeddings. For more information, please follow other related articles on the PHP Chinese website!

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AM

10 Generative AI Coding Extensions in VS Code You Must ExploreApr 13, 2025 am 01:14 AMHey there, Coding ninja! What coding-related tasks do you have planned for the day? Before you dive further into this blog, I want you to think about all your coding-related woes—better list those down. Done? – Let’

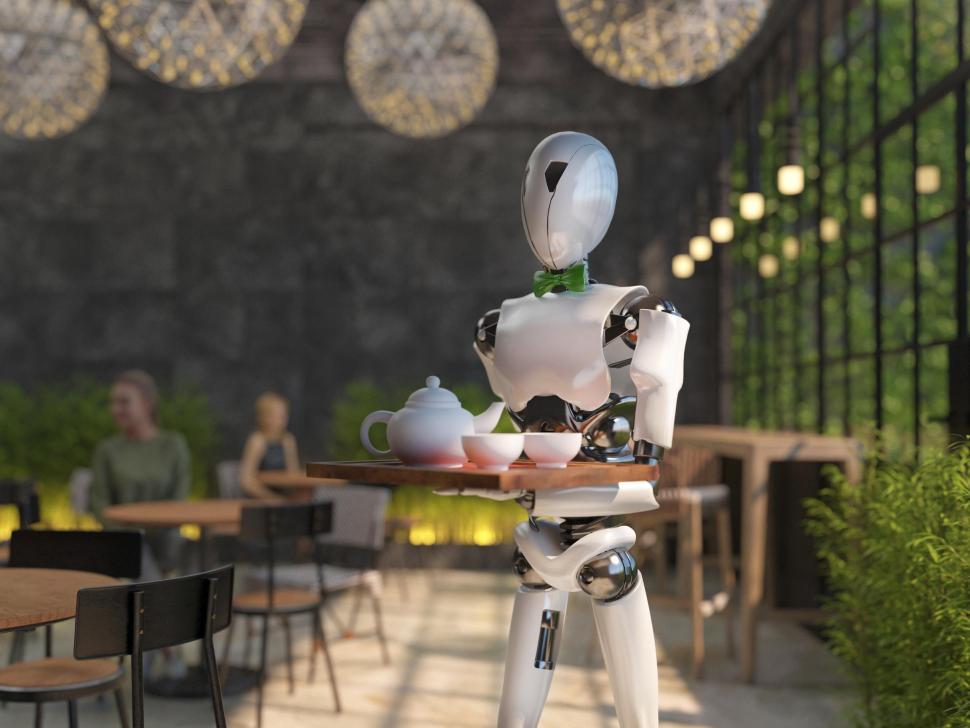

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PM

Cooking Up Innovation: How Artificial Intelligence Is Transforming Food ServiceApr 12, 2025 pm 12:09 PMAI Augmenting Food Preparation While still in nascent use, AI systems are being increasingly used in food preparation. AI-driven robots are used in kitchens to automate food preparation tasks, such as flipping burgers, making pizzas, or assembling sa

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PM

Comprehensive Guide on Python Namespaces & Variable ScopesApr 12, 2025 pm 12:00 PMIntroduction Understanding the namespaces, scopes, and behavior of variables in Python functions is crucial for writing efficiently and avoiding runtime errors or exceptions. In this article, we’ll delve into various asp

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AM

A Comprehensive Guide to Vision Language Models (VLMs)Apr 12, 2025 am 11:58 AMIntroduction Imagine walking through an art gallery, surrounded by vivid paintings and sculptures. Now, what if you could ask each piece a question and get a meaningful answer? You might ask, “What story are you telling?

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AM

MediaTek Boosts Premium Lineup With Kompanio Ultra And Dimensity 9400Apr 12, 2025 am 11:52 AMContinuing the product cadence, this month MediaTek has made a series of announcements, including the new Kompanio Ultra and Dimensity 9400 . These products fill in the more traditional parts of MediaTek’s business, which include chips for smartphone

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM

This Week In AI: Walmart Sets Fashion Trends Before They Ever HappenApr 12, 2025 am 11:51 AM#1 Google launched Agent2Agent The Story: It’s Monday morning. As an AI-powered recruiter you work smarter, not harder. You log into your company’s dashboard on your phone. It tells you three critical roles have been sourced, vetted, and scheduled fo

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AM

Generative AI Meets PsychobabbleApr 12, 2025 am 11:50 AMI would guess that you must be. We all seem to know that psychobabble consists of assorted chatter that mixes various psychological terminology and often ends up being either incomprehensible or completely nonsensical. All you need to do to spew fo

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AM

The Prototype: Scientists Turn Paper Into PlasticApr 12, 2025 am 11:49 AMOnly 9.5% of plastics manufactured in 2022 were made from recycled materials, according to a new study published this week. Meanwhile, plastic continues to pile up in landfills–and ecosystems–around the world. But help is on the way. A team of engin

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

MantisBT

Mantis is an easy-to-deploy web-based defect tracking tool designed to aid in product defect tracking. It requires PHP, MySQL and a web server. Check out our demo and hosting services.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.

ZendStudio 13.5.1 Mac

Powerful PHP integrated development environment

EditPlus Chinese cracked version

Small size, syntax highlighting, does not support code prompt function

Zend Studio 13.0.1

Powerful PHP integrated development environment