The basic principle of Large Language Models (LLMs) is very simple: predict the next word (or token) in a sequence based on statistical patterns in the training data. This seemingly simple capability turns out to be remarkably powerful, enabling tasks such as text summarization, idea generation, brainstorming, code generation, information processing, and content creation. That said, LLMs have no memory, nor do they actually “understand” anything; they only perform their basic function: predicting the next word.

The process of next-word prediction is probabilistic: the LLM selects each word from a probability distribution. In doing so, LLMs often generate false, fabricated, or inconsistent content as they try to produce coherent responses and fill gaps with plausible-looking but incorrect information. This phenomenon is called hallucination, a well-known and inevitable feature of LLMs that makes it necessary to validate and corroborate their outputs.
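
To make the probabilistic selection concrete, here is a minimal sketch of sampling a next token from a softmax distribution. The vocabulary and the scores (logits) are made-up illustrative values, not taken from any real model, and the helper names are hypothetical.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical candidates for "the number of vowels is ..." with made-up scores.
vocab = ["5", "6", "4"]
logits = [2.0, 1.5, 0.1]

probs = softmax(logits)
rng = random.Random(0)  # seeded only to make the run repeatable
samples = [sample_next_token(vocab, logits, rng=rng) for _ in range(1000)]
```

Because the draw is random, a less likely (and possibly wrong) token is still selected some of the time, which is one way plausible-looking errors can enter a response.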

Retrieval-augmented generation (RAG) methods, which let an LLM work with external knowledge sources, reduce hallucinations to some extent but cannot eliminate them completely. Although advanced RAG systems can provide in-text citations and URLs, verifying these references can be tedious and time-consuming. We therefore need an objective criterion for assessing the reliability or trustworthiness of an LLM’s response, whether it is generated from the model’s own knowledge or from an external knowledge base (RAG).

In this article, we will discuss how an LLM’s output can be assessed by a trustworthy language model, which assigns a trustworthiness score to that output. We will first see how to use a trustworthy language model to score an LLM’s answer and explain the score. Subsequently, we will develop an example RAG with LlamaParse and LlamaIndex and assess its answers for trustworthiness.

The entire code of this article is available in the Jupyter notebook on GitHub.

Assigning a Trustworthiness Score to an LLM’s Answer

To demonstrate how we can assign a trustworthiness score to an LLM’s response, I will use Cleanlab’s Trustworthy Language Model (TLM). Such TLMs combine uncertainty quantification and consistency analysis to compute trustworthiness scores and explanations for LLM responses.
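
The idea behind consistency analysis can be illustrated with a toy sketch: ask the same question several times and measure how often the answers agree with the one being scored. This is only an illustration of the general idea, not Cleanlab's actual algorithm; the function names and the resampled answers are hypothetical.

```python
def normalize(answer: str) -> str:
    """Crude normalization so trivially different strings compare equal."""
    return answer.strip().lower()


def consistency_score(primary: str, samples: list) -> float:
    """Fraction of resampled answers that agree with the primary answer."""
    if not samples:
        raise ValueError("at least one sampled answer is required")
    target = normalize(primary)
    agreeing = sum(1 for s in samples if normalize(s) == target)
    return agreeing / len(samples)


# Hypothetical answers obtained by asking the same question five times.
sampled_answers = ["5", "6", "5", "5", "6"]

score_for_5 = consistency_score("5", sampled_answers)
score_for_6 = consistency_score("6", sampled_answers)
```

An answer the model reproduces on most resamples receives a higher score than one it contradicts often, which is the intuition behind flagging inconsistent responses as less trustworthy.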

Cleanlab offers free trial APIs which can be obtained by creating an account at their website. We first need to install Cleanlab’s Python client:

pip install --upgrade cleanlab-studio

Cleanlab supports several proprietary models such as ‘gpt-4o’, ‘gpt-4o-mini’, ‘o1-preview’, ‘claude-3-sonnet’, ‘claude-3.5-sonnet’, ‘claude-3.5-sonnet-v2’ and others. Here is how TLM assigns a trustworthiness score to gpt-4o’s answer. The trustworthiness score ranges from 0 to 1, where higher values indicate greater trustworthiness.

from cleanlab_studio import Studio

studio = Studio("<cleanlab_api_key>")  # Get your API key from above
tlm = studio.TLM(options={"log": ["explanation"], "model": "gpt-4o"})  # GPT, Claude, etc.

# Set the prompt
out = tlm.prompt("How many vowels are there in the word 'Abracadabra'.?")

# The TLM response contains the actual output ('response'), the trustworthiness score, and the explanation
print(f"Model's response = {out['response']}")
print(f"Trustworthiness score = {out['trustworthiness_score']}")
print(f"Explanation = {out['log']['explanation']}")

The above code tested the response of gpt-4o for the question “How many vowels are there in the word ‘Abracadabra’.?”. The TLM’s output contains the model’s answer (response), trustworthiness score, and explanation. Here is the output of this code.

Model's response = The word "Abracadabra" contains 6 vowels. The vowels are: A, a, a, a, a, and a.
Trustworthiness score = 0.6842228802750124
Explanation = This response is untrustworthy due to a lack of consistency in possible responses from the model. Here's one inconsistent alternate response that the model considered (which may not be accurate either): 5.

As this shows, even a state-of-the-art language model can hallucinate on such a simple task and produce the wrong answer. Here are the response and trustworthiness score for the same question from claude-3.5-sonnet-v2.

Model's response = Let me count the vowels in 'Abracadabra': A-b-r-a-c-a-d-a-b-r-a The vowels are: A, a, a, a, a There are 5 vowels in the word 'Abracadabra'.
Trustworthiness score = 0.9378276048845285
Explanation = Did not find a reason to doubt trustworthiness.

claude-3.5-sonnet-v2 produces the correct output. Let’s compare the two models’ responses to another question.

from cleanlab_studio import Studio
from IPython.display import display, Markdown

# Initialize Cleanlab Studio with your API key
studio = Studio("<cleanlab_api_key>")  # Replace with your actual API key

# List of models to evaluate
models = ["gpt-4o", "claude-3.5-sonnet-v2"]

# Define the prompt
prompt_text = "Which one of 9.11 and 9.9 is bigger?"

# Loop through each model and evaluate
for model in models:
    tlm = studio.TLM(options={"log": ["explanation"], "model": model})
    out = tlm.prompt(prompt_text)

    # Render the result for this model as Markdown in the notebook
    md_content = f"""
## Model: {model}

**Response:** {out['response']}

**Trustworthiness Score:** {out['trustworthiness_score']}

**Explanation:** {out['log']['explanation']}

---
"""
    display(Markdown(md_content))

Here is the response of the two models:

We can also generate a trustworthiness score for open-source LLMs. Let’s check the recent, much-hyped open-source LLM DeepSeek-R1. I will use DeepSeek-R1-Distill-Llama-70B, which is based on Meta’s Llama-3.3-70B-Instruct model and distilled from DeepSeek’s larger 671-billion-parameter Mixture of Experts (MoE) model. Knowledge distillation is a machine learning technique that transfers what a large pre-trained “teacher” model has learned to a smaller “student” model.

import os

import streamlit as st
from cleanlab_studio import Studio
from IPython.display import display, Markdown
from langchain_groq.chat_models import ChatGroq

os.environ["GROQ_API_KEY"] = st.secrets["GROQ_API_KEY"]

# Initialize the DeepSeek-R1 distilled Llama model served by Groq
model = "deepseek-r1-distill-llama-70b"
groq_llm = ChatGroq(model=model, temperature=0.5)

prompt = "Which one of 9.11 and 9.9 is bigger?"

# Get the response from the model
response = groq_llm.invoke(prompt)

# Initialize Cleanlab Studio
studio = Studio("<cleanlab_api_key>")  # Replace with your actual API key
cleanlab_tlm = studio.TLM(options={"log": ["explanation"]})  # for explanations

# Get the output containing the trustworthiness score and explanation
output = cleanlab_tlm.get_trustworthiness_score(prompt, response=response.content.strip())

md_content = f"""
## Model: {model}

**Response:** {response.content.strip()}

**Trustworthiness Score:** {output['trustworthiness_score']}

**Explanation:** {output['log']['explanation']}

---
"""
display(Markdown(md_content))

Here is the output of the deepseek-r1-distill-llama-70b model.

Developing a Trustworthy RAG

We will now develop a RAG to demonstrate how we can measure the trustworthiness of an LLM response in a RAG setting. This RAG will be built by scraping data from given links, parsing it into markdown format, and creating a vector store.

The following libraries need to be installed for the next code.

pip install llama-parse llama-index-core llama-index-embeddings-huggingface llama-index-llms-cleanlab requests beautifulsoup4 pdfkit nest-asyncio

To render HTML into PDF format, we also need to install the wkhtmltopdf command-line tool from its website.

The following libraries will be imported:

import os
from typing import ClassVar, Dict, List

import nest_asyncio
import pdfkit
import requests
from bs4 import BeautifulSoup
from llama_index.core import Settings, SimpleDirectoryReader, VectorStoreIndex
from llama_index.core.instrumentation import get_dispatcher
from llama_index.core.instrumentation.event_handlers import BaseEventHandler
from llama_index.core.instrumentation.events import BaseEvent
from llama_index.core.instrumentation.events.llm import LLMCompletionEndEvent
from llama_index.embeddings.huggingface import HuggingFaceEmbedding
from llama_index.llms.cleanlab import CleanlabTLM
from llama_index.readers.docling import DoclingReader
from llama_parse import LlamaParse

The next steps involve scraping data from given URLs using Python’s BeautifulSoup library, saving the scraped data to PDF file(s) using pdfkit, and parsing the PDF(s) into markdown using LlamaParse, a genAI-native document parsing platform built with LLMs and for LLM use cases.

We will first configure the LLM to be used by CleanlabTLM and the embedding model (Huggingface embedding model BAAI/bge-small-en-v1.5) that will be used to compute the embeddings of the scraped data to create the vector store.
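
The configuration described above might look like the following minimal sketch. It relies on the `CleanlabTLM` and `HuggingFaceEmbedding` integrations installed earlier; the `max_tokens` value and the `configure_settings` helper name are illustrative assumptions rather than the article's exact code.

```python
# Options forwarded to Cleanlab's TLM. "max_tokens" is an assumed, illustrative value.
TLM_OPTIONS = {"model": "gpt-4o", "max_tokens": 512, "log": ["explanation"]}

# Embedding model named in the text above.
EMBED_MODEL_NAME = "BAAI/bge-small-en-v1.5"


def configure_settings(cleanlab_api_key: str) -> None:
    """Register the TLM-wrapped LLM and the embedding model as LlamaIndex defaults."""
    # Imported here so the constants above can be inspected without the packages installed.
    from llama_index.core import Settings
    from llama_index.embeddings.huggingface import HuggingFaceEmbedding
    from llama_index.llms.cleanlab import CleanlabTLM

    Settings.llm = CleanlabTLM(api_key=cleanlab_api_key, options=TLM_OPTIONS)
    Settings.embed_model = HuggingFaceEmbedding(model_name=EMBED_MODEL_NAME)
```

Calling `configure_settings("<cleanlab_api_key>")` once, before building the index, should make later LlamaIndex components pick up both models from `Settings`.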

We will now define a custom event handler, GetTrustworthinessScore, that is derived from a base event handler class. This handler gets triggered by the end of an LLM completion and extracts a trustworthiness score from the response metadata. A helper function, display_response, displays the LLM’s response along with its trustworthiness score.
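
A handler along these lines could be sketched as follows. It assumes that the TLM integration exposes the score under the `trustworthiness_score` key of the completion's `additional_kwargs`; the helper names `extract_trust_score`, `format_response`, and `register_trust_handler` are hypothetical, and `display_response` here takes the handler as an explicit argument.

```python
from typing import Any, Dict, Optional


def extract_trust_score(additional_kwargs: Dict[str, Any]) -> Optional[float]:
    """Read the score from response metadata; None if it is absent."""
    score = additional_kwargs.get("trustworthiness_score")
    return None if score is None else float(score)


def format_response(text: str, score: Optional[float]) -> str:
    """Build the text shown to the user: the answer plus its score."""
    shown = "n/a" if score is None else f"{score:.2f}"
    return f"Response: {text}\nTrustworthiness score: {shown}"


def register_trust_handler():
    """Create the event handler and attach it to LlamaIndex's root dispatcher."""
    # Imported lazily so the pure helpers above work without llama-index installed.
    from llama_index.core.instrumentation import get_dispatcher
    from llama_index.core.instrumentation.event_handlers import BaseEventHandler
    from llama_index.core.instrumentation.events import BaseEvent
    from llama_index.core.instrumentation.events.llm import LLMCompletionEndEvent

    class GetTrustworthinessScore(BaseEventHandler):
        trustworthiness_score: Optional[float] = None

        @classmethod
        def class_name(cls) -> str:
            return "GetTrustworthinessScore"

        def handle(self, event: BaseEvent, **kwargs: Any) -> None:
            # Triggered for every event; only completion-end events carry the score.
            if isinstance(event, LLMCompletionEndEvent):
                self.trustworthiness_score = extract_trust_score(
                    event.response.additional_kwargs
                )

    handler = GetTrustworthinessScore()
    get_dispatcher().add_event_handler(handler)
    return handler


def display_response(response, handler) -> None:
    """Print a query-engine response together with the latest captured score."""
    print(format_response(response.response, handler.trustworthiness_score))
```

The handler keeps only the most recent score, so it should be read right after each query.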

We will now generate PDFs by scraping data from given URLs. For demonstration, we will scrape data only from this Wikipedia article about large language models (Creative Commons Attribution-ShareAlike 4.0 License).

Note: Readers are advised to always double-check the status of the content/data they are about to scrape and ensure they are allowed to do so.

The following piece of code scrapes data from the given URLs by making an HTTP request and using the BeautifulSoup Python library to parse the HTML content. The HTML content is cleaned by converting protocol-relative URLs to absolute ones. Subsequently, the scraped content is converted into PDF file(s) using pdfkit.
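
A minimal sketch of these steps is shown below. The function names, the 30-second timeout, and the choice to rewrite every `src` and `href` attribute are assumptions made for illustration; `pdfkit` additionally requires the wkhtmltopdf binary to be installed.

```python
from pathlib import Path


def absolutize(url: str, scheme: str = "https") -> str:
    """Turn a protocol-relative URL ('//host/path') into an absolute one."""
    return f"{scheme}:{url}" if url.startswith("//") else url


def scrape_to_pdf(url: str, output_path: str) -> str:
    """Fetch a page, fix protocol-relative links, and save it as a PDF."""
    # Third-party imports are local so absolutize() can be used on its own.
    import pdfkit
    import requests
    from bs4 import BeautifulSoup

    response = requests.get(url, timeout=30)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, "html.parser")

    # Rewrite '//...' links so that wkhtmltopdf can resolve images and styles.
    for tag in soup.find_all(True):
        for attr in ("src", "href"):
            value = tag.get(attr)
            if isinstance(value, str):
                tag[attr] = absolutize(value)

    Path(output_path).parent.mkdir(parents=True, exist_ok=True)
    pdfkit.from_string(str(soup), output_path)
    return output_path
```

A call such as `scrape_to_pdf(page_url, "pdfs/llm.pdf")` would write one PDF per page; both the URL variable and the output path in this call are placeholders.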

After generating PDF(s) from the scraped data, we parse these PDFs using LlamaParse. We set the parsing instructions to extract the content in markdown format and parse the document(s) page-wise along with the document name and page number. These extracted entities (pages) are referred to as nodes. The parser iterates over the extracted nodes and updates each node’s metadata by appending a citation header which facilitates later referencing.
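
The parsing step can be sketched roughly as follows. This is an approximation under stated assumptions: it assumes `LlamaParse.load_data` returns one document per page, it omits custom parsing instructions (the parameter for these has changed across LlamaParse versions), and the header format and metadata keys are hypothetical.

```python
import os


def citation_header(doc_name: str, page_number: int) -> str:
    """Header prepended to each page so answers can cite their source."""
    return f"[SOURCE: {doc_name}, page {page_number}]\n\n"


def parse_pdf_to_nodes(pdf_path: str, llama_cloud_api_key: str) -> list:
    """Parse a PDF into markdown pages and tag each page with a citation header."""
    # Imported lazily so citation_header() works without the packages installed.
    from llama_index.core import Document
    from llama_parse import LlamaParse

    parser = LlamaParse(api_key=llama_cloud_api_key, result_type="markdown")
    pages = parser.load_data(pdf_path)  # assumed: one document per page

    doc_name = os.path.basename(pdf_path)
    nodes = []
    for page_number, page in enumerate(pages, start=1):
        nodes.append(
            Document(
                text=citation_header(doc_name, page_number) + page.text,
                metadata={"document_name": doc_name, "page_number": page_number},
            )
        )
    return nodes
```

In a Jupyter notebook, calling `nest_asyncio.apply()` before parsing is commonly needed because LlamaParse runs asynchronous code inside the notebook's existing event loop.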

We now create a vector store and a query engine. We define a custom prompt template to guide the LLM’s behavior in answering the questions. Finally, we create a query engine with the created index to answer queries. For each query, we retrieve the top 3 nodes from the vector store based on their semantic similarity with the query. The LLM uses these retrieved nodes to generate the final answer.
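
A sketch of this step is given below. The wording of the prompt template and the `build_query_engine` helper are assumptions for illustration; `{context_str}` and `{query_str}` are the placeholders LlamaIndex fills in for question-answering prompts.

```python
# Illustrative template text; the article's exact wording is not reproduced here.
QA_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Answer the query using only the context above, and cite the source header "
    "of each passage you rely on. If the context is insufficient, say so.\n"
    "Query: {query_str}\n"
    "Answer: "
)


def build_query_engine(documents, top_k: int = 3):
    """Index the documents and return a query engine that retrieves top_k nodes."""
    # Imported lazily so the template can be inspected without llama-index installed.
    from llama_index.core import PromptTemplate, VectorStoreIndex

    index = VectorStoreIndex.from_documents(documents)
    return index.as_query_engine(
        similarity_top_k=top_k,
        text_qa_template=PromptTemplate(QA_TEMPLATE),
    )


# Quick check of how the template is filled, using placeholder strings.
filled = QA_TEMPLATE.format(context_str="<retrieved nodes>", query_str="<question>")
```

The embedding model and LLM are taken from LlamaIndex's global `Settings`, so they need to be configured before the index is built.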

Now let’s test the RAG for some queries and their corresponding trustworthiness scores.
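
Running a query and reading back its score can be sketched as below. The `ask` helper is hypothetical; it only assumes an engine with a `query` method and a handler object exposing a `trustworthiness_score` attribute, as described for GetTrustworthinessScore earlier. The dry run uses stand-in objects so that no API call is made, and its values are placeholders rather than real model output.

```python
from types import SimpleNamespace


def ask(query_engine, handler, question: str) -> dict:
    """Run one query and pair the answer with the score captured by the handler."""
    response = query_engine.query(question)
    return {
        "question": question,
        "answer": str(response),
        "trustworthiness_score": handler.trustworthiness_score,
    }


# Dry run with stand-ins for the real query engine and event handler.
stub_engine = SimpleNamespace(query=lambda q: "stub answer")
stub_handler = SimpleNamespace(trustworthiness_score=0.5)
result = ask(stub_engine, stub_handler, "stub question")
```

With the real query engine and handler in place of the stand-ins, each returned dictionary holds the RAG's answer and the trustworthiness score assigned to it.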

Assigning a trustworthiness score to an LLM’s response, whether generated through direct inference or RAG, helps to gauge the reliability of the AI’s output and to prioritize human verification where needed. This is particularly important in critical domains where a wrong or unreliable response could have severe consequences.
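
One simple way to prioritize human verification is to flag responses whose score falls below a cutoff. The sketch below uses the two scores reported earlier in this article; the 0.8 threshold and the function name are arbitrary assumptions that would need tuning for a real application.

```python
def needs_human_review(score: float, threshold: float = 0.8) -> bool:
    """Flag a response for manual checking when its score is below the threshold."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("trustworthiness scores are expected to lie in [0, 1]")
    return score < threshold


# Scores from the 'Abracadabra' example above.
scores = {
    "gpt-4o": 0.6842228802750124,
    "claude-3.5-sonnet-v2": 0.9378276048845285,
}

flagged = [name for name, score in scores.items() if needs_human_review(score)]
```

With this cutoff, only the gpt-4o answer (the one that miscounted the vowels) would be sent for review.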

That’s all folks! If you like the article, please follow me on Medium and LinkedIn.

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

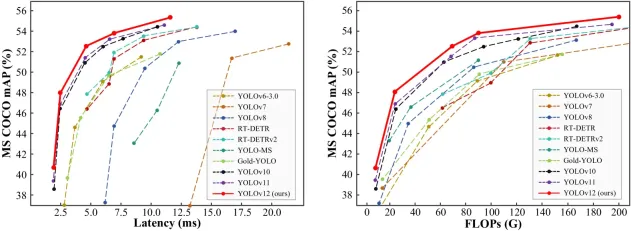

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Is ChatGPT 4 O available?Mar 28, 2025 pm 05:29 PM

Is ChatGPT 4 O available?Mar 28, 2025 pm 05:29 PMChatGPT 4 is currently available and widely used, demonstrating significant improvements in understanding context and generating coherent responses compared to its predecessors like ChatGPT 3.5. Future developments may include more personalized interactions and real-time data processing capabilities, further enhancing its potential for various applications.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

WebStorm Mac version

Useful JavaScript development tools

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Atom editor mac version download

The most popular open source editor