NeurIPS 2024 Spotlight: Optimizing Language Model Pretraining with Selective Language Modeling (SLM)

I recently presented a fascinating paper from NeurIPS 2024, "Not All Tokens Are What You Need for Pretraining," at a local reading group. The paper tackles a simple yet consequential question: does every token need to be trained with next-token prediction during language model pretraining?

The standard approach trains on massive web-scraped datasets and applies the causal language modeling (CLM) loss uniformly to every token. This paper challenges that assumption, arguing that some tokens hinder rather than help learning. The authors show that concentrating training on "useful" tokens significantly improves data efficiency and downstream task performance. This post summarizes their core ideas and key experimental findings.

The Problem: Noise and Inefficient Learning

Large web corpora inevitably contain noise. Document-level filtering helps, but noise often persists inside individual documents that pass the filter. Training on these noisy tokens wastes compute and can mislead the model.

The authors analyzed token-level learning dynamics by tracking each token's cross-entropy loss across training checkpoints and grouping tokens by their loss trajectory:

- L→L (Low to Low): Learned quickly; they provide little further benefit.

- H→L (High to Low): Initially difficult but eventually learned; these represent valuable learning opportunities.

- H→H (High to High): Consistently difficult, often due to inherent unpredictability (aleatoric uncertainty).

- L→H (Low to High): Initially learned, but their loss later rises, possibly due to context shifts or noise.

Their analysis reveals that only a minority of tokens, chiefly the H→L group, provide meaningful learning signals.
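To make the four categories concrete, here is a minimal Python sketch that labels each token by comparing its loss at an early and a late checkpoint against a single threshold. The two-checkpoint comparison, the threshold value (`LOSS_THRESHOLD = 1.0`), and all function names are hypothetical simplifications for illustration, not the authors' exact procedure.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical cutoff separating "low" from "high" loss; the paper's
# actual criterion may differ.
LOSS_THRESHOLD = 1.0

CATEGORIES = ["L→L", "H→L", "H→H", "L→H"]


def categorize_token(early_loss: float, late_loss: float,
                     threshold: float = LOSS_THRESHOLD) -> str:
    """Label one token's loss trajectory between two checkpoints."""
    start = "H" if early_loss > threshold else "L"
    end = "H" if late_loss > threshold else "L"
    return f"{start}→{end}"


def categorize_all(early: List[float], late: List[float],
                   threshold: float = LOSS_THRESHOLD) -> List[str]:
    """Categorize every token given its loss at an early and a late checkpoint."""
    if len(early) != len(late):
        raise ValueError("early and late loss lists must have the same length")
    return [categorize_token(e, l, threshold) for e, l in zip(early, late)]


def category_fractions(labels: List[str]) -> Dict[str, float]:
    """Fraction of tokens that fall into each of the four categories."""
    total = len(labels)
    if total == 0:
        return {c: 0.0 for c in CATEGORIES}
    counts = Counter(labels)
    return {c: counts[c] / total for c in CATEGORIES}


if __name__ == "__main__":
    early = [0.2, 3.1, 2.8, 0.4]  # toy per-token losses at an early checkpoint
    late = [0.1, 0.5, 3.0, 2.2]   # toy per-token losses at a late checkpoint
    labels = categorize_all(early, late)
    print(labels)  # ['L→L', 'H→L', 'H→H', 'L→H']
    print(category_fractions(labels))
```

On real data, the H→L fraction is the one the paper's argument hinges on: those are the tokens whose loss actually drops with training.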

The Solution: Selective Language Modeling (SLM)

The proposed solution, Selective Language Modeling (SLM), takes a more targeted approach in three steps:

- Reference Model (RM) Training: A pre-trained base model is fine-tuned on a high-quality subset of the data to create a reference model (RM). The RM acts as a benchmark for token "usefulness."

- Excess Loss Calculation: For each token in the large corpus, the excess loss is computed as the current training model's loss minus the RM's loss. A high excess loss means the training model still lags well behind the RM on that token, so the token offers greater potential for improvement.

- Selective Backpropagation: The forward pass runs on all tokens, but the loss, and therefore the gradient, is computed only for the top k% of tokens with the highest excess loss. Because the training model's losses change as it learns, the selection shifts dynamically toward the most valuable tokens.
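The excess-loss selection step can be sketched in plain Python, assuming the per-token cross-entropy losses from the training model and the RM are already available as lists. The function names (`excess_loss`, `select_top_k`, `slm_loss`), the `k_ratio` parameter, and the rounding rule for the number of kept tokens are hypothetical choices for this illustration, not the authors' implementation.

```python
from typing import List


def excess_loss(train_losses: List[float], ref_losses: List[float]) -> List[float]:
    """Per-token excess loss: training-model loss minus reference-model loss."""
    if len(train_losses) != len(ref_losses):
        raise ValueError("loss lists must have the same length")
    return [lt - lr for lt, lr in zip(train_losses, ref_losses)]


def select_top_k(scores: List[float], k_ratio: float) -> List[bool]:
    """Boolean mask keeping the top k_ratio fraction of tokens by score.

    Assumption: the kept count is rounded to the nearest integer, minimum one.
    """
    if not 0.0 < k_ratio <= 1.0:
        raise ValueError("k_ratio must be in (0, 1]")
    if not scores:
        return []
    n_keep = max(1, round(len(scores) * k_ratio))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep = set(ranked[:n_keep])
    return [i in keep for i in range(len(scores))]


def slm_loss(train_losses: List[float], ref_losses: List[float],
             k_ratio: float) -> float:
    """Mean training loss over the selected tokens only.

    Unselected tokens are excluded from the average, so in an autograd
    framework they would contribute no gradient.
    """
    mask = select_top_k(excess_loss(train_losses, ref_losses), k_ratio)
    selected = [loss for loss, keep in zip(train_losses, mask) if keep]
    if not selected:
        raise ValueError("no tokens to train on")
    return sum(selected) / len(selected)


if __name__ == "__main__":
    train = [2.0, 0.5, 3.0, 1.0]  # toy per-token losses from the training model
    ref = [1.0, 0.4, 3.2, 0.2]    # toy per-token losses from the RM
    print(select_top_k(excess_loss(train, ref), 0.5))  # [True, False, False, True]
    print(slm_loss(train, ref, 0.5))                   # 1.5
```

In a real PyTorch training loop, one would typically get per-token losses from `torch.nn.functional.cross_entropy(..., reduction="none")`, compute the RM's losses under `torch.no_grad()`, multiply the training losses by the selection mask, and divide by the number of selected tokens before calling `.backward()`. The forward pass still covers every token, since the excess loss is needed to decide what to keep.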

Experimental Results: Significant Gains

SLM demonstrates significant advantages across various experiments:

- Math Domain: With continual pretraining on OpenWebMath, SLM achieved up to 10% gains on GSM8K and MATH over standard CLM and reached baseline performance 5-10 times faster. A 7B model matched a state-of-the-art model while using only 3% of that model's pretraining tokens. After fine-tuning, the 1B model exceeded 40% accuracy on MATH.

- General Domain: Even starting from a strong pre-trained base model, SLM yielded an average improvement of approximately 5.8% across 15 benchmarks, particularly in challenging domains like code and math.

- Self-Referencing: Even an RM trained briefly on the raw pretraining corpus itself provided a 2-3% accuracy boost while using 30-40% fewer tokens.

Conclusion and Future Work

This paper offers valuable insight into token-level learning dynamics and introduces SLM, an effective technique for making language model pretraining more data-efficient. Future research directions include scaling SLM to larger models, using reference models accessed through APIs, integrating reinforcement learning, combining multiple reference models, and aligning SLM with safety and truthfulness goals. Overall, the work is a significant step toward more efficient and effective language model training.


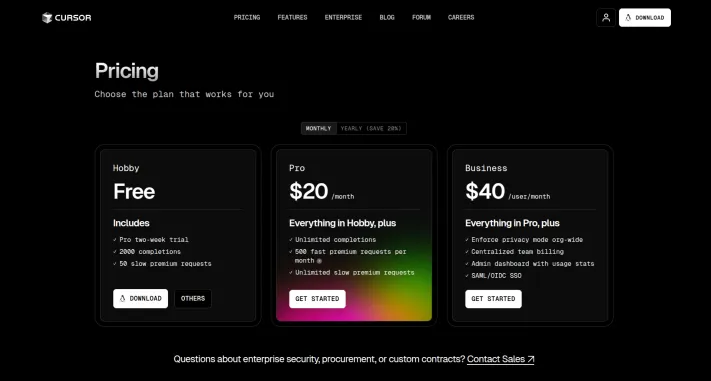

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PM

I Tried Vibe Coding with Cursor AI and It's Amazing!Mar 20, 2025 pm 03:34 PMVibe coding is reshaping the world of software development by letting us create applications using natural language instead of endless lines of code. Inspired by visionaries like Andrej Karpathy, this innovative approach lets dev

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PM

How to Use DALL-E 3: Tips, Examples, and FeaturesMar 09, 2025 pm 01:00 PMDALL-E 3: A Generative AI Image Creation Tool Generative AI is revolutionizing content creation, and DALL-E 3, OpenAI's latest image generation model, is at the forefront. Released in October 2023, it builds upon its predecessors, DALL-E and DALL-E 2

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AM

Top 5 GenAI Launches of February 2025: GPT-4.5, Grok-3 & More!Mar 22, 2025 am 10:58 AMFebruary 2025 has been yet another game-changing month for generative AI, bringing us some of the most anticipated model upgrades and groundbreaking new features. From xAI’s Grok 3 and Anthropic’s Claude 3.7 Sonnet, to OpenAI’s G

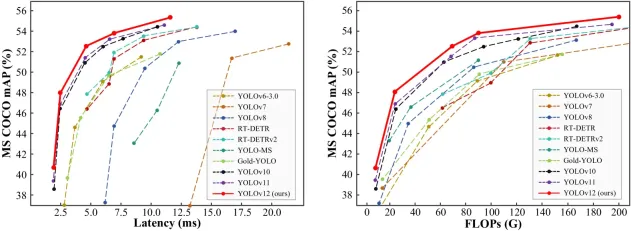

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AM

How to Use YOLO v12 for Object Detection?Mar 22, 2025 am 11:07 AMYOLO (You Only Look Once) has been a leading real-time object detection framework, with each iteration improving upon the previous versions. The latest version YOLO v12 introduces advancements that significantly enhance accuracy

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AM

Elon Musk & Sam Altman Clash over $500 Billion Stargate ProjectMar 08, 2025 am 11:15 AMThe $500 billion Stargate AI project, backed by tech giants like OpenAI, SoftBank, Oracle, and Nvidia, and supported by the U.S. government, aims to solidify American AI leadership. This ambitious undertaking promises a future shaped by AI advanceme

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PM

Sora vs Veo 2: Which One Creates More Realistic Videos?Mar 10, 2025 pm 12:22 PMGoogle's Veo 2 and OpenAI's Sora: Which AI video generator reigns supreme? Both platforms generate impressive AI videos, but their strengths lie in different areas. This comparison, using various prompts, reveals which tool best suits your needs. T

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PM

Google's GenCast: Weather Forecasting With GenCast Mini DemoMar 16, 2025 pm 01:46 PMGoogle DeepMind's GenCast: A Revolutionary AI for Weather Forecasting Weather forecasting has undergone a dramatic transformation, moving from rudimentary observations to sophisticated AI-powered predictions. Google DeepMind's GenCast, a groundbreak

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PM

Which AI is better than ChatGPT?Mar 18, 2025 pm 06:05 PMThe article discusses AI models surpassing ChatGPT, like LaMDA, LLaMA, and Grok, highlighting their advantages in accuracy, understanding, and industry impact.(159 characters)

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

PhpStorm Mac version

The latest (2018.2.1) professional PHP integrated development tool

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

Safe Exam Browser

Safe Exam Browser is a secure browser environment for taking online exams securely. This software turns any computer into a secure workstation. It controls access to any utility and prevents students from using unauthorized resources.

MinGW - Minimalist GNU for Windows

This project is in the process of being migrated to osdn.net/projects/mingw, you can continue to follow us there. MinGW: A native Windows port of the GNU Compiler Collection (GCC), freely distributable import libraries and header files for building native Windows applications; includes extensions to the MSVC runtime to support C99 functionality. All MinGW software can run on 64-bit Windows platforms.