You are more than just a data point. The exit mechanism will help you regain your privacy.

The latest wave of artificial intelligence development forces many of us to rethink key aspects of life. For example, digital artists now need to focus on protecting their work from image-generating websites, while teachers need to deal with situations where some students may outsource essay writing to ChatGPT.

But the emergence of artificial intelligence also presents important privacy risks that everyone should know – even if you are not going to figure out what this technology thinks you look like as a mermaid.

Laboring transparency

The Brookings Institution (a nonprofit agency based in Washington, D.C., conducts research to solve a wide range of national and global issues) said Jessica Brandt, policy director for AI and Emerging Technology Initiatives: “We often have little understanding of who is using our personal information, how it is used and for what purpose.”

Broadly speaking, machine learning—the process in which artificial intelligence systems become more accurate— requires a lot of data. The more data the system has, the higher its accuracy. Generative artificial intelligence platforms like ChatGPT and Google's Bard, as well as image generator Dall-E, obtain part of the training data through a technology called crawling: They scan the internet to collect useful public information.

However, sometimes due to human error or negligence, private data that should not have been disclosed, such as sensitive company files, images or even login lists, may enter the accessible part of the internet, which anyone can find with the help of Google search operators they. Once this information is crawled and added to the training dataset of AI, few people are able to delete it.

"People should be able to share freely," said Ivana Bartoletti, global chief privacy officer at Indian technology company Wipro and researcher in accessing cybersecurity and privacy enforcement at Virginia Tech's Pampurin School of Business. Photos, without worrying about how it will eventually be used to train generative AI tools, or worse – their images may be used to make deep fakes. “Crawling personal data on the internet destroys people’s abilities to it Data control. ”

Data crawling is only one of the potential sources of problems in artificial intelligence system training data. Another source is the secondary use of personal data, said Katharina Koerner, a senior privacy engineering researcher at the International Association of Privacy Professionals. This happens when you voluntarily give up some of the information for a specific purpose, but it ends up for other purposes that you do not agree to. Businesses have been accumulating customer information for years, including email addresses, delivery details, and the type of product they like, but in the past, they have not been able to do much with that data. Today, sophisticated algorithms and artificial intelligence platforms provide an easy way to process this information so that they can learn more about people’s behavior patterns. This can benefit you by providing you with only advertisements and information that you may really care about, but it may also limit the supply of products and increase prices based on your postal code. Given that some companies already have a lot of data provided by their customers, it is very tempting for businesses to do so, Korner said.

She explained: "AI makes it easy to extract valuable patterns from available data that can support future decision-making, so it's very easy for businesses to get personal data when data is not collected for this purpose. for machine learning. ”

It doesn't help for developers to selectively delete your personal information from large training datasets. Of course, it can be easy to delete specific information (such as your date of birth or social security number (do not provide personal details to a generic AI platform). But for example, implementing a complete deletion request that complies with the European General Data Protection Regulation is another matter and perhaps the most complex challenge to be addressed, Bartoletti said.

[Related: How to stop school equipment from sharing data from your family]

Selective content deletion is difficult even in traditional IT systems due to its complex microservice structure (each part works as a separate unit). But Korner said that in the context of artificial intelligence, this is even more difficult and even impossible at the moment.

That's because it's not just a matter of clicking on "ctrl F" and deleting all data with someone's name - deleting one's data requires expensive programs that retrain the entire model from scratch, she explained.

The exit mechanism will become increasingly difficult

A well-nutritious AI system can provide incredible amounts of analysis, including pattern recognition that helps users understand people's behavior. But it’s not just because of the advantages of technology – it’s because people tend to act in predictable ways. This particular aspect of human nature allows AI systems to work properly without having to know a lot of specific information about you. Because when it's enough to know someone like you, what's the point of knowing you?

Brenda Leong, a partner at BNH.AI, a law firm focusing on artificial intelligence audits and risks in Washington, D.C., said: "We have arrived at the fewest pieces of information that only require — just three to go. Five pieces of data about a person, which is easy to obtain—they will be immediately absorbed into the prediction system. “In short: it is becoming increasingly difficult or even impossible to stay away from this system nowadays.

This leaves us with little freedom because even those who have been working to protect their privacy for years will make decisions and recommendations for them. This may make them feel that all their efforts have been in vain.

Liang continued: "Even if this is a beneficial way for me, like giving me a loan that matches my income level, or an opportunity that I am really interested in, it's also in my inability to control in any way What did it for me under the circumstances."

Using big data to classify the entire population without any nuances—for outliers and exceptions—we all know that life is full of these. The devil is in the details, but also applying generalized conclusions to special circumstances, things can get really bad.

Weaponization of data

Another key challenge is how to instill fairness in algorithmic decision-making—especially when conclusions of AI models may be based on wrong, outdated or incomplete data. It is well known that artificial intelligence systems may perpetuate the biases of their human creators, sometimes with terrible consequences for the entire community.

As more companies rely on algorithms to help them fill positions or determine driver risk profiles, our data is more likely to be used against our own interests. You may one day be harmed by automated decisions, suggestions or predictions made by these systems with little to no recourse available.

[Related: Autonomous Weapons May Make Serious Mistakes in War]

This is also a problem when these predictions or labels become facts in the eyes of artificial intelligence algorithms that cannot distinguish between true and false. For modern artificial intelligence, everything is data, whether it is personal data, public data, factual data or completely fictitious data.

More integration means less security

Just as your internet presence is as powerful as your weakest password, the integration of large AI tools with other platforms also provides attackers with more prying points to try when trying to access private data. Don't be surprised if you find some of them don't meet the standards in terms of safety.

This does not even take into account all the companies and government agencies that collect your data without your knowledge. Think about surveillance cameras near your home, tracking your facial recognition software around concert venues, kids wearing GoPros running around your local park, and even people trying to get popular on TikTok.

The more people and platforms are processing your data, the greater the chance of errors. A larger space for error means that your information is more likely to be leaked to the internet, where it is easily crawled into the training dataset of the AI model. As mentioned above, this is very difficult to undo.

What can you do

The bad news is that there is nothing you can do about it at the moment - neither can you solve the potential security threats derived from the AI training dataset that contains your information, nor can you solve the prediction system that may prevent you from getting your ideal job. At present, our best approach is to require supervision.

The EU has passed the first draft of the Artificial Intelligence Act, which will regulate how companies and governments use the technology based on acceptable levels of risk. Meanwhile, U.S. President Joe Biden has funded the development of ethical and fair AI technologies through executive orders, but Congress has not passed any laws to protect the privacy of U.S. citizens in terms of AI platforms. The Senate has been holding hearings to learn about the technology, but it is not close to creating a federal bill.

In the process of government work, you can and should advocate privacy regulations including artificial intelligence platforms and protect users from misprocessing of their data. Have meaningful conversations with those around you about the development of AI, make sure you understand your representative’s position on federal privacy regulations and vote for those who care most about your interests.

Read more PopSci stories.

The above is the detailed content of The Opt Out: 4 privacy concerns in the age of AI. For more information, please follow other related articles on the PHP Chinese website!

Clipchamp Video Loss on Windows? 2 Ways to Recover Files!May 09, 2025 pm 08:12 PM

Clipchamp Video Loss on Windows? 2 Ways to Recover Files!May 09, 2025 pm 08:12 PMRecover Lost Clipchamp Videos: A Step-by-Step Guide Losing a video you've edited in Clipchamp can be frustrating. This guide provides effective methods to recover your lost Clipchamp video files. Finding Your Clipchamp Videos Before attempting recov

7 Useful Fixes for Action Center Keeps Popping upMay 09, 2025 pm 08:07 PM

7 Useful Fixes for Action Center Keeps Popping upMay 09, 2025 pm 08:07 PMAction Center allows you to access quick settings and notifications. However, some users say that they encounter the “Action Center keeps popping up” issue on Windows 11/10. If you are one of them, refer to this post from MiniTool to get solutions.Qu

Instant Ways to Restore Missing Google Chrome Icon on WindowsMay 09, 2025 pm 08:06 PM

Instant Ways to Restore Missing Google Chrome Icon on WindowsMay 09, 2025 pm 08:06 PMTroubleshoot Missing Google Chrome Icon on Windows Can't find your Google Chrome icon on Windows? This guide offers several solutions to restore it. Why is my Chrome icon missing? Several factors can cause the Chrome icon to vanish from your desktop:

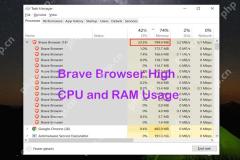

Brave Browser High CPU and RAM Usage: Best 5 Tips to ReduceMay 09, 2025 pm 08:05 PM

Brave Browser High CPU and RAM Usage: Best 5 Tips to ReduceMay 09, 2025 pm 08:05 PMBrave browser CPU and memory usage too high? Under Windows 10/11 system, Brave browser's high CPU and memory usage problems have troubled many users. This tutorial will provide a variety of solutions to help you easily resolve this issue. Quick navigation: Brave browser high CPU and memory footprint Solution 1: Clear cookies and cache data Solution 2: Disable hardware acceleration Solution 3: Close the tab and update the Brave browser Solution 4: Disable the plugin Solution 5: Create a new user profile Optional: Run MiniTool System Booster System Optimization Tool Summarize Brave browser high CP

Targeted Fixes for Xbox Error 0x87e0000f When Installing GamesMay 09, 2025 pm 08:04 PM

Targeted Fixes for Xbox Error 0x87e0000f When Installing GamesMay 09, 2025 pm 08:04 PMTroubleshooting Xbox Error Code 0x87e0000f: A Comprehensive Guide Encountering the Xbox error code 0x87e0000f while downloading games from Xbox Game Pass can be frustrating. This guide provides several solutions to help you resolve this issue and get

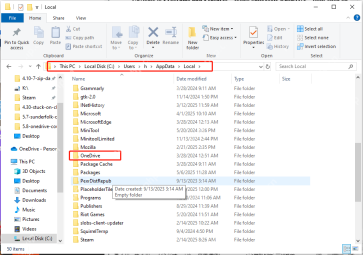

OneDrive Couldn't Start Files on Demand?Top 4 MethodsMay 09, 2025 pm 08:02 PM

OneDrive Couldn't Start Files on Demand?Top 4 MethodsMay 09, 2025 pm 08:02 PMOneDrive Files On-Demand troubleshooting: resolving the "OneDrive couldn't start Files On-Demand" error. This MiniTool guide provides solutions for the persistent "Microsoft OneDrive Couldn’t start files on Demand" error (codes 0x

How to fix 'Microsoft Store is blocked' error in Windows?May 09, 2025 pm 06:00 PM

How to fix 'Microsoft Store is blocked' error in Windows?May 09, 2025 pm 06:00 PMMicrosoft Store is blocked error occurs when Windows prevents access to the Microsoft Store app, displaying the message Microsoft Store is blocked. Check with y

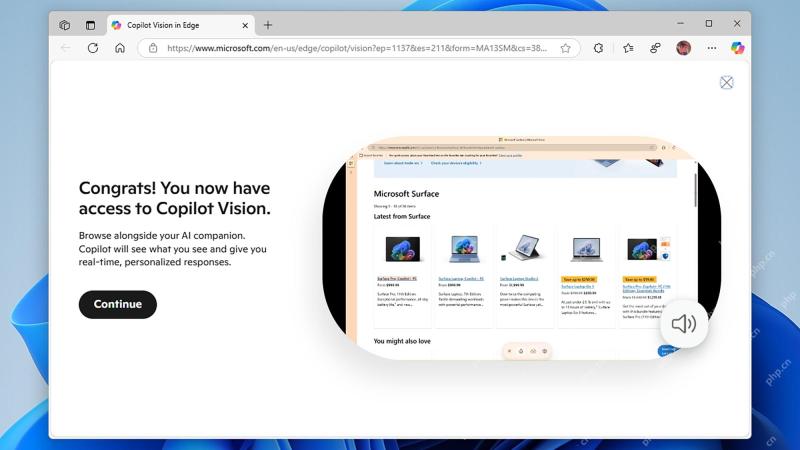

How to use Copilot Vision for free in Microsoft EdgeMay 09, 2025 am 10:32 AM

How to use Copilot Vision for free in Microsoft EdgeMay 09, 2025 am 10:32 AMStaying current with all the new AI tools is a challenge. Many might even overlook readily available AI features. For instance, Copilot Vision is now free for all Microsoft Edge users – a fact easily missed if you don't regularly use Edge or haven't

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Atom editor mac version download

The most popular open source editor

SecLists

SecLists is the ultimate security tester's companion. It is a collection of various types of lists that are frequently used during security assessments, all in one place. SecLists helps make security testing more efficient and productive by conveniently providing all the lists a security tester might need. List types include usernames, passwords, URLs, fuzzing payloads, sensitive data patterns, web shells, and more. The tester can simply pull this repository onto a new test machine and he will have access to every type of list he needs.

SublimeText3 Mac version

God-level code editing software (SublimeText3)

SublimeText3 Chinese version

Chinese version, very easy to use

DVWA

Damn Vulnerable Web App (DVWA) is a PHP/MySQL web application that is very vulnerable. Its main goals are to be an aid for security professionals to test their skills and tools in a legal environment, to help web developers better understand the process of securing web applications, and to help teachers/students teach/learn in a classroom environment Web application security. The goal of DVWA is to practice some of the most common web vulnerabilities through a simple and straightforward interface, with varying degrees of difficulty. Please note that this software