Core points

- Node.js has become a popular server-side runtime environment for high-traffic websites thanks to its event-driven architecture and non-blocking I/O API, which allow requests to be processed asynchronously.

- Scalability is a key reason why large companies adopt Node.js. Although each process runs in a single thread by default and has a memory limit, an application can be scaled with the cluster module, which splits a single process into multiple processes, called worker processes.

- The cluster module works by executing the same Node.js process multiple times. It lets you identify the main process and create worker processes that share server handles and communicate with the parent Node process.

- Node.js applications can be parallelized with the cluster module so that multiple processes run simultaneously. This makes more efficient use of the system, improves application performance, and enhances reliability and uptime.

- Although the cluster module is mainly recommended for web servers, it can also be used for other applications, provided you carefully consider how tasks are allocated between worker processes and how the worker processes and the main process communicate.

Node.js is becoming more and more popular as a server-side runtime environment, especially for high-traffic websites, and usage statistics support this. Furthermore, the availability of numerous frameworks makes it a good environment for rapid prototyping. Node.js has an event-driven architecture that uses a non-blocking I/O API to process requests asynchronously.

An important and often overlooked feature of Node.js is its scalability. In fact, this is the main reason why some large companies have integrated Node.js into their platforms (such as Microsoft, Yahoo, Uber, and Walmart) or even migrated their server-side operations completely to Node.js (such as PayPal, eBay, and Groupon).

Each Node.js process runs in a single thread and, by default, has a memory limit of 512MB on 32-bit systems and 1GB on 64-bit systems. Although the limit can be raised to about 1GB on 32-bit systems and about 1.7GB on 64-bit systems, memory and processing power can still become bottlenecks.

The elegant solution Node.js provides for scaling an application is to split a single process into multiple processes, or worker processes in Node.js terminology. This is achieved through the cluster module, which lets you create child processes (worker processes) that share all server ports with the main Node process (the main process). In this article, you will learn how to create a Node.js cluster to speed up your application.

A cluster is a pool of similar worker processes running under a parent Node process. Worker processes are spawned using the fork() method of the child_process module. This means that worker processes can share server handles and use IPC (inter-process communication) to communicate with the parent Node process.

The main process is responsible for starting and controlling the worker processes. You can create as many worker processes as you like in the main process.
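
The memory limit mentioned above varies with the Node.js version and platform, so it can be worth checking what a given process actually gets. The following is a minimal sketch using the built-in v8 module; the helper name heapLimitMB is made up for illustration and is not part of Node.js.

```javascript
// Report the V8 heap size limit of the current process, in megabytes.
// heapLimitMB is a hypothetical helper name, not part of Node.js.
var v8 = require('v8');

function heapLimitMB() {
  // v8.getHeapStatistics() reports heap_size_limit in bytes.
  return Math.round(v8.getHeapStatistics().heap_size_limit / (1024 * 1024));
}

console.log('Heap limit for this process: ' + heapLimitMB() + ' MB');
```

Starting the script with the V8 flag --max-old-space-size (a value in megabytes, for example node --max-old-space-size=2048 script.js) should report a correspondingly larger limit.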
By default, incoming connections are distributed among the worker processes in a round-robin fashion (except on Windows). There is another way to distribute incoming connections, which I won't discuss here: handing the assignment over to the operating system (the default on Windows). The Node.js documentation recommends using the default round-robin style as the scheduling policy.

Although using the cluster module sounds complicated in theory, it is very simple to implement. To start using it, you have to include it in your Node.js application with require('cluster').

The cluster module executes the same Node.js process multiple times. So the first thing you need to do is determine which part of the code is for the main process and which part is for the worker processes. The cluster module lets you identify the main process by checking the cluster.isMaster property.

The main process is the process you start, which in turn initializes the worker processes. To start a worker process from the main process, use the cluster.fork() method. It returns a worker object that contains some methods and properties of the forked worker. We will see some examples in the next section.

The cluster module emits several events. Two common ones, related to the moments a worker process starts and terminates, are the online and exit events. The online event is emitted when a worker process has been forked and sends the online message. The exit event is emitted when a worker process dies. Later, we will see how to use these two events to control the life cycle of the worker processes.

Now, let's put together everything we've seen so far and show a complete runnable example.

Examples

This section contains two examples. The first is a simple application that shows how the cluster module is used in a Node.js application. The second is an Express server that takes advantage of the cluster module, and is part of the production code I usually use in large-scale projects.
Both examples are available for download from GitHub.

In the first example, we set up a simple server that responds to all incoming requests with a message containing the process ID of the worker process that handled the request. The main process forks four worker processes. In each of them, we start listening on port 8000 for incoming requests. The code that implements this is listed in the section on how to use the cluster module in a Node.js application.

You can test the server by starting it (run the command node simple.js) and accessing the URL https://www.php.cn/link/7d2d180c45c41870f36e747816456190.

Express is one of the most popular web application frameworks for Node.js (if not the most popular). We have covered it several times on this website. If you are interested in learning more, I recommend the articles "Creating a RESTful API with Express 4" and "Building a Node.js-driven chat room web application: Express and Azure".

The second example shows how to develop a highly scalable Express server. It also demonstrates how to migrate a single-process server to take advantage of the cluster module with only a few lines of code.

The first addition in this example is using the Node.js os module to get the number of CPU cores. The os module contains a cpus() function, which returns an array of CPU cores. With this approach, we determine the number of worker processes to fork dynamically, based on the server specifications, to make the most of the available resources.

The second and more important addition is handling the death of a worker process. When a worker process dies, the cluster module emits an exit event. It can be handled by listening for the event and executing a callback function when it is emitted, with a statement like cluster.on('exit', callback);. In the callback, we fork a new worker process to maintain the intended number of worker processes. This allows us to keep the application running even if there are some unhandled exceptions.
In this example, I also set up a listener for the online event, which is emitted whenever a worker process has been forked and is ready to receive incoming requests. This can be used for logging or other operations.

There are several tools for benchmarking APIs, but here I use the Apache Benchmark tool to analyze how using the cluster module affects the performance of an application. To set up the test, I developed an Express server that has one route and one callback function for that route. In the callback, a dummy operation is performed and a short message is returned. There are two versions of the server: one with no worker processes, in which everything happens in the main process, and the other with 8 worker processes (because my machine has 8 cores). The table in the performance comparison section shows how incorporating the cluster module increases the number of requests processed per second.

Advanced operations

While using the cluster module is relatively simple, there are other operations you can perform with worker processes. For example, you can use the cluster module to achieve (almost!) zero downtime for your application. We'll see how to do some of these operations in a while.

Sometimes, you may need to send a message from the main process to a worker process to assign a task or perform other operations. In return, the worker process may need to notify the main process that the task has been completed. To listen for messages, set up a listener for the message event in both the main process and the worker processes. In the main process, the listener is registered on the worker object, which is the reference returned by the fork() method. In a worker process, it is registered on the process object. The message can be a string or a JSON object.

To send a message to a specific worker process, call the send() method on its worker object. Similarly, a worker process sends a message to the main process by calling process.send(). In Node.js, messages are generic and have no specific type.
Therefore, it is best to send a message as a JSON object that contains some information about the message type, the sender, and the content itself.

One thing to note here is that message event callbacks are handled asynchronously. There is no defined order of execution. You can find a complete example of communication between the main process and the worker processes on GitHub.

One important result that can be achieved using worker processes is an (almost) zero-downtime server. In the main process, you can terminate and restart the worker processes one at a time after making changes to the application. This allows you to keep the old version running while the new version loads.

To be able to restart your application while it is running, you need to keep two things in mind. First, the main process runs the whole time, and only the worker processes are terminated and restarted. Therefore, it is important to keep the main process short and in charge only of managing worker processes. Second, you need to notify the main process somehow that the worker processes need to be restarted. There are several ways to do this, including user input or watching files for changes. The latter is more efficient, but you need to identify the files to watch in the main process.

The way I recommend restarting worker processes is to first try to shut them down safely, and then force-kill them if they do not terminate safely. You can do the former by sending a shutdown message to the worker process and starting the safe shutdown in the worker's message event handler.

To do this for all worker processes, you can use the workers property of the cluster module, which holds a reference to all running worker processes. We can also wrap all the tasks in a function in the main process that can be called whenever we want to restart all worker processes.

We can get the IDs of all running worker processes from the workers object in the cluster module.
This object holds a reference to all running worker processes and is updated dynamically when worker processes are terminated and restarted. First, we store the IDs of all running worker processes in the workerIds array. This way, we avoid restarting newly forked worker processes. We then request a safe shutdown from each worker process. If a worker process is still running after 5 seconds and still exists in the workers object, we call the kill function on it to force it to shut down. You can find a practical example on GitHub.

Conclusion

Node.js applications can be parallelized using the cluster module to make more efficient use of the system. Multiple processes can be run simultaneously with a few lines of code, which makes migration relatively easy because Node.js handles the difficult parts.

As I demonstrated in the performance comparison, it is possible to achieve a significant improvement in application performance by using system resources more efficiently. In addition to performance, you can improve application reliability and uptime by restarting worker processes while the application is running.

That said, you need to be careful when considering the cluster module for your application. The main recommended use of the cluster module is for web servers. In other cases, you need to take a closer look at how to distribute tasks between worker processes and how to communicate progress effectively between the worker processes and the main process. Even for web servers, make sure that a single Node.js process really is a bottleneck (memory or CPU) before making any changes to your application, as your changes may introduce bugs.

Last but not least, the Node.js website provides good documentation for the cluster module. So be sure to check it out!

FAQs about Node.js clusters

The main advantage of using a Node.js cluster is improved application performance. Node.js runs on a single thread, which means it can only use one CPU core at a time.
However, modern servers usually have multiple cores. By using a Node.js cluster, you can create a main process that forks multiple worker processes, each of which can run on a different CPU core. This allows your application to handle more requests at the same time, which can significantly improve its speed and performance.

A Node.js cluster works by creating a main process that forks multiple worker processes. The main process listens for incoming connections and distributes them to the worker processes in a round-robin fashion. Each worker process can run on a separate CPU core and handles requests independently. This allows your application to take advantage of all available CPU cores and handle more requests at the same time.

Creating a Node.js cluster involves using the "cluster" module provided by Node.js. First, you need to import the "cluster" and "os" modules. You can then use the "cluster.fork()" method to create worker processes. "os.cpus().length" gives you the number of available CPU cores, which you can use to decide how many worker processes to create.

You can handle worker process crashes in a Node.js cluster by listening for the "exit" event in the main process. When a worker process crashes, an "exit" event is emitted in the main process. You can then use the "cluster.fork()" method to create a new worker process to replace the crashed one.

Yes, you can use a Node.js cluster with Express.js. In fact, clustering can significantly improve the performance of Express.js applications. You just need to put the Express.js application code in the worker process branch of the cluster script.

While a Node.js cluster can significantly improve application performance, it also has some limitations. For example, worker processes do not share state or memory. This means you cannot store session data in memory, because it would not be accessible from all worker processes.
Instead, you need to use shared session storage, such as a database or a Redis server.

By default, the main process in a Node.js cluster distributes incoming connections to the worker processes in a round-robin fashion. This provides a basic form of load balancing. However, if you need more advanced load balancing, you may need to use a reverse proxy server, such as Nginx.

Yes, you can use a Node.js cluster in a production environment. In fact, it is highly recommended to do so in order to make the most of the server's CPU cores and improve the performance of your application.

Debugging a Node.js cluster can be a bit tricky because multiple worker processes run at the same time. However, you can attach a debugger to each process by using the "inspect" flag with a unique port for each worker process.

Yes, you can use a Node.js cluster with other Node.js modules. However, keep in mind that worker processes do not share state or memory. This means that if a module depends on shared state, it may not work properly in a cluster environment.

Node.js cluster module: what it does and how it works

```javascript
// Load the cluster module.
var cluster = require('cluster');

if (cluster.isMaster) {
  // Main process: start a worker process.
  cluster.fork();
}
```

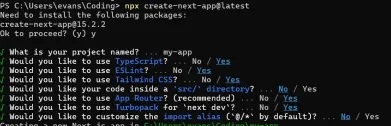
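
To make the round-robin scheduling described earlier more concrete, here is a small simulation of how connections would be handed out to worker processes in turn. It is only an illustration: the function roundRobinAssign and its arguments are invented for this sketch and do not reflect how the cluster module is implemented internally. The one real API shown is cluster.schedulingPolicy, which, according to the Node.js documentation, has to be set in the main process before the first fork() call.

```javascript
var cluster = require('cluster');

// Real API: choose the scheduling policy before forking any workers.
// cluster.SCHED_RR selects round-robin; cluster.SCHED_NONE leaves it to the OS.
if (cluster.isMaster) {
  cluster.schedulingPolicy = cluster.SCHED_RR;
}

// Hypothetical helper: simulate round-robin assignment of connections.
// Returns an array where entry i is the index of the worker that gets connection i.
function roundRobinAssign(connectionCount, workerCount) {
  var assignments = [];
  for (var i = 0; i < connectionCount; i++) {
    assignments.push(i % workerCount);
  }
  return assignments;
}

console.log(roundRobinAssign(6, 4)); // [ 0, 1, 2, 3, 0, 1 ]
```

With four workers, the fifth connection wraps around to the first worker again, which is the basic load-balancing behavior the article relies on.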

How to use cluster module in Node.js application

```javascript
var cluster = require('cluster');
var http = require('http');
var numCPUs = 4;

if (cluster.isMaster) {
  // Main process: fork the worker processes.
  for (var i = 0; i < numCPUs; i++) {
    cluster.fork();
  }
} else {
  // Worker process: every worker listens on the shared port 8000.
  http.createServer(function(req, res) {
    res.writeHead(200);
    res.end('process ' + process.pid + ' says hello!');
  }).listen(8000);
}
```

How to develop a highly scalable Express server

```javascript
var cluster = require('cluster');

if (cluster.isMaster) {
  // Fork one worker process per CPU core.
  var numWorkers = require('os').cpus().length;

  console.log('Master cluster setting up ' + numWorkers + ' workers...');

  for (var i = 0; i < numWorkers; i++) {
    cluster.fork();
  }

  cluster.on('online', function(worker) {
    console.log('Worker ' + worker.process.pid + ' is online');
  });

  // Replace any worker process that dies.
  cluster.on('exit', function(worker, code, signal) {
    console.log('Worker ' + worker.process.pid + ' died with code: ' + code + ', and signal: ' + signal);
    console.log('Starting a new worker');
    cluster.fork();
  });
} else {
  var app = require('express')();

  // res.send() already ends the response, so no extra end() call is needed.
  app.all('/*', function(req, res) {
    res.send('process ' + process.pid + ' says hello!');
  });

  var server = app.listen(8000, function() {
    console.log('Process ' + process.pid + ' is listening to all incoming requests');
  });
}
```

Performance comparison

| Concurrent connections | 1 | 2 | 4 | 8 | 16 |
|---|---|---|---|---|---|
| Single process (requests per second) | 654 | 711 | 783 | 776 | 754 |
| 8 worker processes (requests per second) | 594 | 1198 | 2110 | 3010 | 3024 |

Communication between the main process and the worker process
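
Since messages carry no type of their own, one way to follow the advice of wrapping them in a JSON object is a small envelope with type, from, and data fields. The sketch below is an illustration under stated assumptions: makeMessage, handleMessage, and the message types 'task' and 'done' are invented names, while worker.send() and process.send() are the real calls that would carry such objects between the main process and a worker process.

```javascript
// Hypothetical helper: build a message envelope with a type, a sender and a payload.
function makeMessage(type, from, data) {
  return { type: type, from: from, data: data };
}

// Hypothetical dispatcher: choose a reaction based on the message type.
// It returns a short description so the behavior is easy to inspect.
function handleMessage(message) {
  switch (message.type) {
    case 'task':
      return 'worker received task from ' + message.from;
    case 'done':
      return 'main process notified by ' + message.from;
    default:
      return 'unknown message type: ' + message.type;
  }
}

var task = makeMessage('task', 'master', { job: 'resize-image' });
console.log(handleMessage(task)); // worker received task from master

// In a real cluster the envelope would travel over IPC, for example:
//   worker.send(task);                                  // main process -> worker
//   process.send(makeMessage('done', process.pid, {})); // worker -> main process
// and handleMessage would be called from the 'message' event listeners.
```

Keeping the type in a dedicated field means a single message listener can route several kinds of messages without guessing from their content.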

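The listeners for the message event are registered on the worker object in the main process and on the process object in a worker process:

```javascript
// In the main process: listen for messages from a worker process.
// worker is the object returned by cluster.fork().
worker.on('message', function(message) {
  console.log(message);
});

// In a worker process: listen for messages from the main process.
process.on('message', function(message) {
  console.log(message);
});
```

Zero downtime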
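
The restart procedure described in the article (record the IDs of the current worker processes, ask each one to shut down, and force-kill any that are still around after a timeout) could be sketched roughly as follows. This is not the author's original code, which is only referenced on GitHub. The function name restartWorkers, the { type: 'shutdown', from: 'master' } message shape, and passing the cluster object in as a parameter (so the logic can be tried with a stand-in object) are all assumptions made for this illustration.

```javascript
// Hypothetical sketch: ask every currently running worker to shut down,
// then force-kill any that are still registered after timeoutMs milliseconds.
function restartWorkers(clusterModule, timeoutMs) {
  // Snapshot the IDs first so that workers forked later are not restarted again.
  var workerIds = Object.keys(clusterModule.workers);

  workerIds.forEach(function(id) {
    var worker = clusterModule.workers[id];

    // Ask for a safe shutdown (assumed message shape).
    worker.send({ type: 'shutdown', from: 'master' });

    // Fall back to a forced kill if the worker is still in the workers object.
    var timer = setTimeout(function() {
      if (clusterModule.workers[id]) {
        clusterModule.workers[id].kill('SIGKILL');
      }
    }, timeoutMs);

    // The fallback is not needed once the worker has exited.
    worker.on('exit', function() {
      clearTimeout(timer);
    });
  });

  return workerIds;
}

// In a worker process, the matching handler might look like this
// (assuming `server` is the HTTP server created in that worker):
//   process.on('message', function(message) {
//     if (message.type === 'shutdown') {
//       server.close(function() { process.exit(0); });
//     }
//   });
```

In the main process this would be called as restartWorkers(cluster, 5000) to match the 5-second timeout the article mentions. Replacement workers are expected to come from an exit listener that forks a new worker, as in the Express example.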

What are the main advantages of using Node.js clusters?

How does Node.js cluster work?

How to create a Node.js cluster?

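
As a rough sketch of the steps in the FAQ answer (load the cluster and os modules, then fork one worker process per CPU core), the following may help. The function name forkWorkers is invented here, and the cluster object is passed in as a parameter purely so the loop can be tried without spawning real processes. cluster.isMaster is used to match the rest of the article, although newer Node.js releases also offer cluster.isPrimary.

```javascript
var os = require('os');

// Hypothetical helper: fork `count` workers on the given cluster object
// and return how many were requested.
function forkWorkers(clusterModule, count) {
  for (var i = 0; i < count; i++) {
    clusterModule.fork();
  }
  return count;
}

// One worker process per CPU core, as the FAQ answer suggests.
var numWorkers = os.cpus().length;
console.log('This machine would get ' + numWorkers + ' workers');

// Typical use in a real script (commented out so nothing is forked here):
//   var cluster = require('cluster');
//   if (cluster.isMaster) {
//     forkWorkers(cluster, numWorkers);
//   } else {
//     // worker code, for example an HTTP or Express server
//   }
```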

How to deal with worker process crashes in Node.js cluster?

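
A sketch of the crash handling described in the FAQ answer: listen for the exit event in the main process and fork a replacement. The helper name respawnOnExit is made up, and the cluster object is passed in as a parameter only so that the handler can be exercised with a stand-in event emitter instead of real processes.

```javascript
// Hypothetical helper: fork a replacement whenever a worker process exits.
// clusterModule is expected to behave like require('cluster') in the main process.
function respawnOnExit(clusterModule) {
  clusterModule.on('exit', function(worker, code, signal) {
    console.log('Worker ' + worker.id + ' died (code: ' + code +
      ', signal: ' + signal + '), starting a new one');
    clusterModule.fork();
  });
}

// Typical use in the main process (commented out so nothing is forked here):
//   var cluster = require('cluster');
//   if (cluster.isMaster) {
//     respawnOnExit(cluster);
//     cluster.fork();
//   }
```

Because the listener forks on every exit, the number of worker processes stays constant even when individual workers crash.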

Can I use Node.js cluster with Express.js?

What are the limitations of Node.js clusters?

How to load balance requests in Node.js cluster?

Can I use Node.js cluster in production?

How to debug Node.js cluster?

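
One possible way to give each worker process its own inspector port, as the FAQ answer suggests, is to adjust execArgv through cluster.setupMaster() before each fork. Treat this as a sketch: the helper name inspectArgsFor and the base port 9230 are arbitrary choices for illustration (9229 is the default inspector port, which the main process may already be using).

```javascript
// Hypothetical helper: build the execArgv for the worker with the given index,
// so that worker 0 listens on basePort, worker 1 on basePort + 1, and so on.
function inspectArgsFor(index, basePort) {
  return ['--inspect=' + (basePort + index)];
}

console.log(inspectArgsFor(0, 9230)); // [ '--inspect=9230' ]
console.log(inspectArgsFor(2, 9230)); // [ '--inspect=9232' ]

// Typical use in the main process (commented out so nothing is forked here):
//   var cluster = require('cluster');
//   for (var i = 0; i < 2; i++) {
//     cluster.setupMaster({ execArgv: inspectArgsFor(i, 9230) });
//     cluster.fork();
//   }
```

Settings passed to setupMaster() only affect later fork() calls, which is why the sketch updates them inside the loop.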

Can I use Node.js cluster with other Node.js modules?

The above is the detailed content of How to Create a Node.js Cluster for Speeding Up Your Apps. For more information, please follow other related articles on the PHP Chinese website!
