Web scraping is a practical way to gather data from websites. With Puppeteer, Google’s browser automation library for Node.js, you can automate navigating pages, clicking buttons, and extracting information, all while mimicking human browsing behavior. This guide walks you through the essentials of web scraping with Puppeteer in a simple, clear, and actionable way.

What is Puppeteer?

Puppeteer is a Node.js library that lets you control a headless version of Google Chrome (or Chromium). A headless browser runs without a graphical user interface (GUI), making it faster and perfect for automation tasks like scraping. However, Puppeteer can also run in full browser mode if you need to see what’s happening visually.

Why Choose Puppeteer for Web Scraping?

Flexibility: Puppeteer handles dynamic websites and single-page applications (SPAs) with ease.

JavaScript Support: It executes JavaScript on pages, which is essential for scraping modern web apps.

Automation Power: You can perform tasks like filling out forms, clicking buttons, and even taking screenshots.

Using Proxies with Puppeteer

When scraping websites, proxies are essential for avoiding IP bans and accessing geo-restricted content. Proxies act as intermediaries between your scraper and the target website, masking your real IP address. For Puppeteer, you can easily integrate proxies by passing them as launch arguments:

javascript

const browser = await puppeteer.launch({
  args: ['--proxy-server=your-proxy-server:port']
});

Proxies are particularly useful for scaling your scraping efforts. Rotating proxies ensure each request comes from a different IP, reducing the chances of detection. Residential proxies, known for their authenticity, are excellent for bypassing bot defenses, while data center proxies are faster and more affordable. Choose the type that aligns with your scraping needs, and always test performance to ensure reliability.
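One way to picture rotation is a simple round-robin over a pool of proxy addresses, launching a fresh browser for each one. The sketch below is a minimal illustration, not a production rotator: `createProxyRotator` and `launchWithProxy` are hypothetical helper names, the proxy addresses are placeholders, and the `puppeteer` object is passed in as an argument so the helper assumes nothing about how you import it.

```javascript
// Hypothetical helper: cycles through a list of proxy addresses in order.
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error('At least one proxy address is required');
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    index = (index + 1) % proxies.length; // wrap around at the end of the list
    return proxy;
  };
}

// Hypothetical helper: launches a browser that routes traffic through `proxy`.
// `puppeteer` is whatever require('puppeteer') returned in your script.
async function launchWithProxy(puppeteer, proxy) {
  return puppeteer.launch({ args: [`--proxy-server=${proxy}`] });
}

// Placeholder addresses; replace them with proxies you are allowed to use.
const nextProxy = createProxyRotator([
  'proxy-a.example:8000',
  'proxy-b.example:8000',
]);
```

Inside an async function, `const browser = await launchWithProxy(puppeteer, nextProxy());` would start a browser on the next proxy in the pool. Because `--proxy-server` is a browser launch argument, this pattern changes the IP per browser instance rather than per individual request.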

Setting Up Puppeteer

Before you start scraping, you’ll need to set up Puppeteer. Let’s dive into the step-by-step process:

Step 1: Install Node.js and Puppeteer

Install Node.js: Download and install Node.js from the official website.

Set Up Puppeteer: Open your terminal and run the following command:

bash

npm install puppeteer

This installs Puppeteer and downloads a compatible browser build for it to control (Chrome for Testing in recent Puppeteer versions, Chromium in older ones).

Step 2: Write Your First Puppeteer Script

Create a new JavaScript file, scraper.js. This will house your scraping logic. Let’s write a simple script to open a webpage and extract its title:

javascript

const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  // Navigate to a website
  await page.goto('https://example.com');

  // Extract the title
  const title = await page.title();
  console.log(`Page title: ${title}`);

  await browser.close();
})();

Run the script using:

bash

node scraper.js

You’ve just written your first Puppeteer scraper!
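If `page.goto` throws in the script above (for example on a network error), execution never reaches `browser.close()`, which can leave a browser process running. A common remedy is a `try`/`finally` wrapper. The sketch below assumes two hypothetical helpers, `withPage` and `getTitle`; the `puppeteer` object is passed in as an argument rather than imported, so the block makes no assumption about your setup.

```javascript
// Hypothetical helper: opens a browser and a page, runs `task(page)`,
// and always closes the browser, even if the task throws.
async function withPage(puppeteer, task) {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    return await task(page);
  } finally {
    await browser.close();
  }
}

// Example task: navigate to `url` and return the page title.
async function getTitle(page, url) {
  await page.goto(url);
  return page.title();
}
```

With Puppeteer installed, `withPage(require('puppeteer'), page => getTitle(page, 'https://example.com')).then(console.log)` would print the title and close the browser whether or not the navigation succeeds.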

Core Puppeteer Features for Scraping

Now that you’ve got the basics down, let’s explore some key Puppeteer features you’ll use for scraping.

Navigating to Pages

The page.goto(url) method lets you open any URL. Add options like timeout settings if needed:

javascript

await page.goto('https://example.com', { timeout: 60000 });

Selecting Elements

Use CSS selectors to pinpoint elements on a page. Puppeteer offers methods like:

page.$(selector) for the first match

page.$$(selector) for all matches

Example:

javascript

// page.$ resolves to null when nothing matches the selector
const element = await page.$('h1');
const text = await page.evaluate(el => el.textContent, element);
console.log(`Heading: ${text}`);

Interacting with Elements

Simulate user interactions, such as clicks and typing:

javascript

await page.click('#submit-button');
await page.type('#search-box', 'Puppeteer scraping');

Waiting for Elements

Web pages load at different speeds. Puppeteer allows you to wait for elements before proceeding:

javascript

await page.waitForSelector('#dynamic-content');

Taking Screenshots

Visual debugging or saving data as images is easy:

javascript

await page.screenshot({ path: 'screenshot.png', fullPage: true });

Handling Dynamic Content

Many websites today use JavaScript to load content dynamically. Puppeteer shines here because it executes JavaScript, allowing you to scrape content that might not be visible in the page source.

Example: Extracting Dynamic Data

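javascript

// The older '.storylink' class no longer appears in Hacker News markup.
// '.titleline > a' is the selector assumed here; confirm it in DevTools first.
await page.goto('https://news.ycombinator.com');
await page.waitForSelector('.titleline > a');
const headlines = await page.$$eval('.titleline > a', links => links.map(link => link.textContent));
console.log('Headlines:', headlines);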
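The same `$$eval` pattern can return structured records rather than plain strings. The sketch below assumes a hypothetical helper named `extractLinks` and a caller-supplied selector that matches anchor elements. The mapping callback runs inside the page, so it can only return values that serialize back to Node.js.

```javascript
// Hypothetical helper: collects the text and URL of every link matching `selector`.
// The mapping callback executes in the browser context, not in Node.js.
async function extractLinks(page, selector) {
  await page.waitForSelector(selector);
  return page.$$eval(selector, (links) =>
    links.map((link) => ({
      title: (link.textContent || '').trim(),
      url: link.href,
    }))
  );
}
```

Inside an async function with an open `page`, `const stories = await extractLinks(page, 'a');` would yield an array of `{ title, url }` objects ready to be saved.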

Dealing with CAPTCHA and Bot Detection

Some websites have measures in place to block bots. Puppeteer can help bypass simple checks:

Use Stealth Mode: Install the puppeteer-extra plugin:

bash

npm install puppeteer-extra puppeteer-extra-plugin-stealth

Add it to your script:

javascript

const puppeteer = require('puppeteer-extra');
const StealthPlugin = require('puppeteer-extra-plugin-stealth');

puppeteer.use(StealthPlugin());

Mimic Human Behavior: Randomize actions like mouse movements and typing speeds to appear more human.

Rotate User Agents: Change your browser’s user agent with each request:

javascript

await page.setUserAgent('Mozilla/5.0 (Windows NT 10.0; Win64; x64)');
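The tips on randomized typing and user-agent rotation can be sketched with a few small helpers. This is illustrative only: `randomBetween`, `pickUserAgent`, `applyRandomUserAgent`, and `typeLikeHuman` are hypothetical names, and the user-agent strings are shortened placeholders. The sketch relies on the `delay` option of `page.type`, which waits that many milliseconds between key presses.

```javascript
// Hypothetical helper: random integer between min and max, inclusive.
function randomBetween(min, max) {
  return Math.floor(Math.random() * (max - min + 1)) + min;
}

// Placeholder user-agent strings; substitute complete, current ones.
const USER_AGENTS = [
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)',
];

// Hypothetical helper: picks a user agent at random from the pool.
function pickUserAgent(pool = USER_AGENTS) {
  return pool[randomBetween(0, pool.length - 1)];
}

// Hypothetical helper: call before navigating so the request carries the new value.
async function applyRandomUserAgent(page) {
  await page.setUserAgent(pickUserAgent());
}

// Hypothetical helper: types with one randomly chosen delay (50-150 ms)
// between key presses instead of entering the text instantly.
async function typeLikeHuman(page, selector, text) {
  await page.type(selector, text, { delay: randomBetween(50, 150) });
}
```

A typical sequence would call `applyRandomUserAgent(page)` before `page.goto(...)` and `typeLikeHuman(page, '#search-box', 'Puppeteer scraping')` afterwards. None of this guarantees a site will treat the traffic as human.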

Saving Scraped Data

After extracting data, you’ll likely want to save it. Here are some common formats:

JSON:

javascript

const fs = require('fs');

const data = { name: 'Puppeteer', type: 'library' };
fs.writeFileSync('data.json', JSON.stringify(data, null, 2));

CSV: Use a library like csv-writer:

bash

npm install csv-writer

javascript

const createCsvWriter = require('csv-writer').createObjectCsvWriter;

const csvWriter = createCsvWriter({
  path: 'data.csv',
  header: [
    { id: 'name', title: 'Name' },
    { id: 'type', title: 'Type' }
  ]
});

const records = [{ name: 'Puppeteer', type: 'library' }];

csvWriter.writeRecords(records).then(() => console.log('CSV file written.'));

Ethical Web Scraping Practices

Before you scrape a website, keep these ethical guidelines in mind:

Check the Terms of Service: Always ensure the website allows scraping.

Respect Rate Limits: Avoid sending too many requests in a short time. Space out requests with a setTimeout-based pause. Older tutorials use page.waitForTimeout(), which has been removed from recent Puppeteer versions.

javascript

await new Promise(resolve => setTimeout(resolve, 2000)); // Waits for 2 seconds

Avoid Sensitive Data: Never scrape personal or private information.
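To show how spacing out requests looks in a complete loop, here is a minimal sketch. `sleep` and `scrapePolitely` are hypothetical helper names, and the 2000 ms default is only an example value; choose a delay that suits the site you are visiting.

```javascript
// Hypothetical helper: resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: visits URLs one at a time, pausing between requests.
async function scrapePolitely(page, urls, delayMs = 2000) {
  const results = [];
  for (const [i, url] of urls.entries()) {
    if (i > 0) await sleep(delayMs); // no pause needed before the first request
    await page.goto(url);
    results.push({ url, title: await page.title() });
  }
  return results;
}
```

Visiting pages sequentially on a single `page` keeps the load on the target site predictable; running many pages in parallel would defeat the purpose of the delay.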

Troubleshooting Common Issues

Page Doesn’t Load Properly: Try adding a longer timeout or enabling full browser mode:

javascript

const browser = await puppeteer.launch({ headless: false });

Selectors Don’t Work: Inspect the website with browser developer tools (Ctrl+Shift+C) to confirm the selectors.

Blocked by CAPTCHA: Use the stealth plugin and mimic human behavior.
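For the first issue, a longer timeout can be combined with a retry. The sketch below assumes a hypothetical helper named `gotoWithRetry`; the retry count and starting timeout are example values. It uses the `timeout` and `waitUntil` options of `page.goto`.

```javascript
// Hypothetical helper: retries navigation, doubling the timeout after each failure.
async function gotoWithRetry(page, url, { retries = 3, timeout = 30000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= retries; attempt++) {
    try {
      // 'networkidle2' waits until the network is mostly idle,
      // so late-loading content is more likely to be present.
      return await page.goto(url, { timeout, waitUntil: 'networkidle2' });
    } catch (error) {
      lastError = error;
      timeout *= 2; // give slow pages more time on the next attempt
    }
  }
  throw lastError; // every attempt failed
}
```

Inside an async function, `await gotoWithRetry(page, 'https://example.com');` would try up to three times with 30, 60, and 120 second timeouts before giving up.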

Frequently Asked Questions (FAQs)

- Is Puppeteer Free? Yes, Puppeteer is open-source and free to use.

- Can Puppeteer Scrape JavaScript-Heavy Websites? Absolutely! Puppeteer executes JavaScript, making it perfect for scraping dynamic sites.

- Is Web Scraping Legal? It depends. Always check the website’s terms of service before scraping.

- Can Puppeteer Bypass CAPTCHA? Not by itself. The stealth plugin can reduce how often simple bot checks are triggered, but solving actual CAPTCHA challenges generally requires third-party tools.

The above is the detailed content of How to Web Scrape with Puppeteer: A Beginner-Friendly Guide. For more information, please follow other related articles on the PHP Chinese website!

Replace String Characters in JavaScriptMar 11, 2025 am 12:07 AM

Replace String Characters in JavaScriptMar 11, 2025 am 12:07 AMDetailed explanation of JavaScript string replacement method and FAQ This article will explore two ways to replace string characters in JavaScript: internal JavaScript code and internal HTML for web pages. Replace string inside JavaScript code The most direct way is to use the replace() method: str = str.replace("find","replace"); This method replaces only the first match. To replace all matches, use a regular expression and add the global flag g: str = str.replace(/fi

8 Stunning jQuery Page Layout PluginsMar 06, 2025 am 12:48 AM

8 Stunning jQuery Page Layout PluginsMar 06, 2025 am 12:48 AMLeverage jQuery for Effortless Web Page Layouts: 8 Essential Plugins jQuery simplifies web page layout significantly. This article highlights eight powerful jQuery plugins that streamline the process, particularly useful for manual website creation

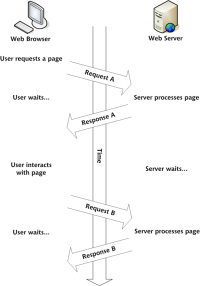

Build Your Own AJAX Web ApplicationsMar 09, 2025 am 12:11 AM

Build Your Own AJAX Web ApplicationsMar 09, 2025 am 12:11 AMSo here you are, ready to learn all about this thing called AJAX. But, what exactly is it? The term AJAX refers to a loose grouping of technologies that are used to create dynamic, interactive web content. The term AJAX, originally coined by Jesse J

10 Mobile Cheat Sheets for Mobile DevelopmentMar 05, 2025 am 12:43 AM

10 Mobile Cheat Sheets for Mobile DevelopmentMar 05, 2025 am 12:43 AMThis post compiles helpful cheat sheets, reference guides, quick recipes, and code snippets for Android, Blackberry, and iPhone app development. No developer should be without them! Touch Gesture Reference Guide (PDF) A valuable resource for desig

Improve Your jQuery Knowledge with the Source ViewerMar 05, 2025 am 12:54 AM

Improve Your jQuery Knowledge with the Source ViewerMar 05, 2025 am 12:54 AMjQuery is a great JavaScript framework. However, as with any library, sometimes it’s necessary to get under the hood to discover what’s going on. Perhaps it’s because you’re tracing a bug or are just curious about how jQuery achieves a particular UI

10 jQuery Fun and Games PluginsMar 08, 2025 am 12:42 AM

10 jQuery Fun and Games PluginsMar 08, 2025 am 12:42 AM10 fun jQuery game plugins to make your website more attractive and enhance user stickiness! While Flash is still the best software for developing casual web games, jQuery can also create surprising effects, and while not comparable to pure action Flash games, in some cases you can also have unexpected fun in your browser. jQuery tic toe game The "Hello world" of game programming now has a jQuery version. Source code jQuery Crazy Word Composition Game This is a fill-in-the-blank game, and it can produce some weird results due to not knowing the context of the word. Source code jQuery mine sweeping game

How do I create and publish my own JavaScript libraries?Mar 18, 2025 pm 03:12 PM

How do I create and publish my own JavaScript libraries?Mar 18, 2025 pm 03:12 PMArticle discusses creating, publishing, and maintaining JavaScript libraries, focusing on planning, development, testing, documentation, and promotion strategies.

jQuery Parallax Tutorial - Animated Header BackgroundMar 08, 2025 am 12:39 AM

jQuery Parallax Tutorial - Animated Header BackgroundMar 08, 2025 am 12:39 AMThis tutorial demonstrates how to create a captivating parallax background effect using jQuery. We'll build a header banner with layered images that create a stunning visual depth. The updated plugin works with jQuery 1.6.4 and later. Download the

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Zend Studio 13.0.1

Powerful PHP integrated development environment

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),

SAP NetWeaver Server Adapter for Eclipse

Integrate Eclipse with SAP NetWeaver application server.