How to Scrape Dynamic Content Generated by JavaScript in Python

Scraping dynamic content from web pages can pose challenges when using static methods like urllib2.urlopen(request) in Python. Such content is often generated and executed by JavaScript embedded within the page.

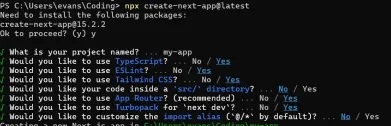

One approach to tackle this issue is to leverage the Selenium framework with Phantom JS as a web driver. Ensure that Phantom JS is installed, and its binary is available in the current path.

Here's an example to illustrate:

import requests from bs4 import BeautifulSoup response = requests.get(my_url) soup = BeautifulSoup(response.text) soup.find(id="intro-text") # Result: <p></p>

This code will retrieve the page without JavaScript support. To scrape with JS support, use Selenium:

from selenium import webdriver driver = webdriver.PhantomJS() driver.get(my_url) p_element = driver.find_element_by_id(id_='intro-text') print(p_element.text) # Result: 'Yay! Supports javascript'

Alternatively, you can utilize Python libraries specifically designed for scraping JavaScript-driven websites, such as dryscrape:

import dryscrape from bs4 import BeautifulSoup session = dryscrape.Session() session.visit(my_url) response = session.body() soup = BeautifulSoup(response) soup.find(id="intro-text") # Result: <p></p>

The above is the detailed content of How to Scrape Dynamic JavaScript-Rendered Content in Python?. For more information, please follow other related articles on the PHP Chinese website!

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AM

Building a Multi-Tenant SaaS Application with Next.js (Backend Integration)Apr 11, 2025 am 08:23 AMI built a functional multi-tenant SaaS application (an EdTech app) with your everyday tech tool and you can do the same. First, what’s a multi-tenant SaaS application? Multi-tenant SaaS applications let you serve multiple customers from a sing

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AM

How to Build a Multi-Tenant SaaS Application with Next.js (Frontend Integration)Apr 11, 2025 am 08:22 AMThis article demonstrates frontend integration with a backend secured by Permit, building a functional EdTech SaaS application using Next.js. The frontend fetches user permissions to control UI visibility and ensures API requests adhere to role-base

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AM

JavaScript: Exploring the Versatility of a Web LanguageApr 11, 2025 am 12:01 AMJavaScript is the core language of modern web development and is widely used for its diversity and flexibility. 1) Front-end development: build dynamic web pages and single-page applications through DOM operations and modern frameworks (such as React, Vue.js, Angular). 2) Server-side development: Node.js uses a non-blocking I/O model to handle high concurrency and real-time applications. 3) Mobile and desktop application development: cross-platform development is realized through ReactNative and Electron to improve development efficiency.

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AM

The Evolution of JavaScript: Current Trends and Future ProspectsApr 10, 2025 am 09:33 AMThe latest trends in JavaScript include the rise of TypeScript, the popularity of modern frameworks and libraries, and the application of WebAssembly. Future prospects cover more powerful type systems, the development of server-side JavaScript, the expansion of artificial intelligence and machine learning, and the potential of IoT and edge computing.

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AM

Demystifying JavaScript: What It Does and Why It MattersApr 09, 2025 am 12:07 AMJavaScript is the cornerstone of modern web development, and its main functions include event-driven programming, dynamic content generation and asynchronous programming. 1) Event-driven programming allows web pages to change dynamically according to user operations. 2) Dynamic content generation allows page content to be adjusted according to conditions. 3) Asynchronous programming ensures that the user interface is not blocked. JavaScript is widely used in web interaction, single-page application and server-side development, greatly improving the flexibility of user experience and cross-platform development.

Is Python or JavaScript better?Apr 06, 2025 am 12:14 AM

Is Python or JavaScript better?Apr 06, 2025 am 12:14 AMPython is more suitable for data science and machine learning, while JavaScript is more suitable for front-end and full-stack development. 1. Python is known for its concise syntax and rich library ecosystem, and is suitable for data analysis and web development. 2. JavaScript is the core of front-end development. Node.js supports server-side programming and is suitable for full-stack development.

How do I install JavaScript?Apr 05, 2025 am 12:16 AM

How do I install JavaScript?Apr 05, 2025 am 12:16 AMJavaScript does not require installation because it is already built into modern browsers. You just need a text editor and a browser to get started. 1) In the browser environment, run it by embedding the HTML file through tags. 2) In the Node.js environment, after downloading and installing Node.js, run the JavaScript file through the command line.

How to send notifications before a task starts in Quartz?Apr 04, 2025 pm 09:24 PM

How to send notifications before a task starts in Quartz?Apr 04, 2025 pm 09:24 PMHow to send task notifications in Quartz In advance When using the Quartz timer to schedule a task, the execution time of the task is set by the cron expression. Now...

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

SublimeText3 Linux new version

SublimeText3 Linux latest version

Zend Studio 13.0.1

Powerful PHP integrated development environment

SublimeText3 Chinese version

Chinese version, very easy to use

VSCode Windows 64-bit Download

A free and powerful IDE editor launched by Microsoft

mPDF

mPDF is a PHP library that can generate PDF files from UTF-8 encoded HTML. The original author, Ian Back, wrote mPDF to output PDF files "on the fly" from his website and handle different languages. It is slower than original scripts like HTML2FPDF and produces larger files when using Unicode fonts, but supports CSS styles etc. and has a lot of enhancements. Supports almost all languages, including RTL (Arabic and Hebrew) and CJK (Chinese, Japanese and Korean). Supports nested block-level elements (such as P, DIV),